Introducing Astro, the Fully Managed Data Orchestration Platform, Powered by Airflow

We are thrilled to announce the release of Astro, a first-of-its-kind, fully managed data orchestration platform powered by Apache Airflow® and OpenLineage, the de facto standards for workflow automation and metadata exchange. Created by the experts behind the Airflow and OpenLineage projects, Astro allows organizations to build data pipelines easily and quickly, run them reliably and efficiently, and observe them with clarity and context. It integrates data lineage to provide complete visibility into pipelines as they are run across projects, regions, and clouds.

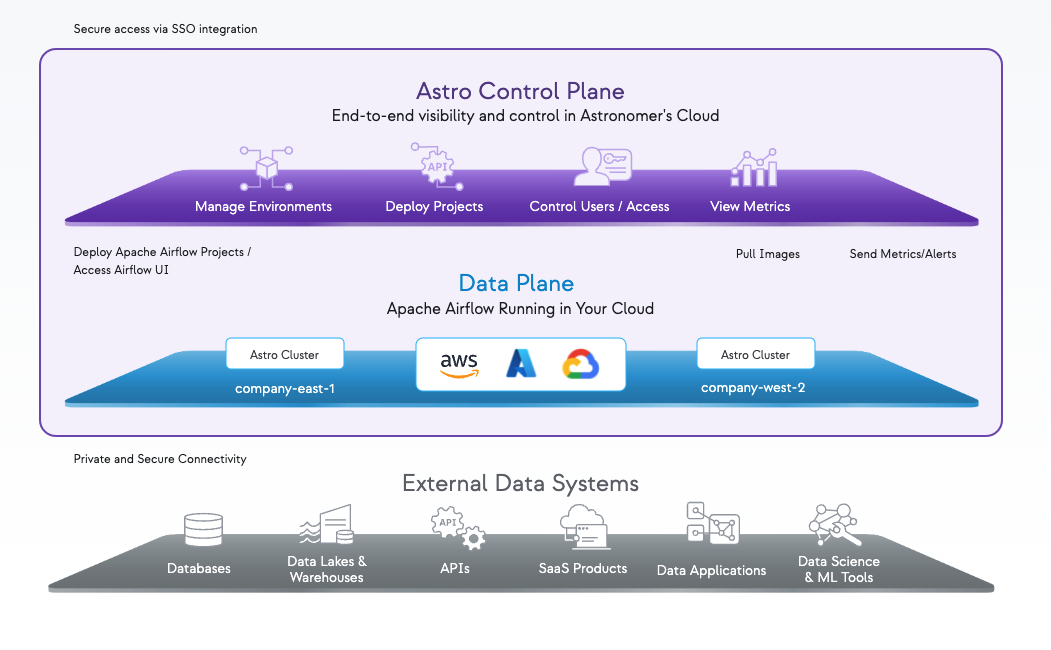

Astro is available on Amazon Web Services and Google Cloud, with Microsoft Azure support coming this summer.

Astronomer and Airflow

In many organizations, Airflow already plays a prominent role in the data ecosystem. Highly successful open source projects like Spark and Airflow tend to be brought into an organization to solve a specific problem for a single team, and then go on to gain broader adoption, often ending up supporting critical day-to-day operations. It’s then that management realizes that many business functions are dependent on the project (in the case of Airflow, on the data pipelines created and managed by it) and starts asking questions about reliability and maintenance costs.

At Astronomer, we’ve worked with thousands of Airflow environments around the world, supporting companies ranging from startups to Fortune 100 businesses, and we’ve developed a deep understanding of their pain points and data orchestration needs. As the commercial developers behind Apache Airflow®, we’ve learned that Airflow is immensely powerful, but also that it’s only part of the solution for data pipeline management and orchestration.

That’s why we built Astro.

Complete data orchestration

Before Astro, teams using Airflow to manage their data ecosystems had two choices: they could build a bespoke framework and manage it themselves, or they could use a managed infrastructure service from one of the public cloud providers. But both of these approaches lack key elements that Astro provides — elements crucial to ensuring that business-critical pipelines are reliable, secure, and cost-effective.

Astro is a game changer because it brings together several key elements:

- Astro Runtime, Astronomer’s cloud-optimized distribution of Apache Airflow®, which provides the foundation for an entirely trustworthy, efficient, reproducible Airflow experience, from development through production. It takes the guesswork out of running and troubleshooting Airflow.

- Integrated data lineage and observability, which allows teams to extract, aggregate, and analyze the metadata that pipelines emit every time they run. This feature lets teams observe the health and performance of mission-critical data pipelines and the data that flows through them, and quickly pinpoint issues when they arise.

- The Astro CLI, which offers a controlled and reproducible foundation for Airflow development, with a secure path to production, saving time and resources previously spent troubleshooting and course correcting in production. The Astro CLI installs an instance of Astro Runtime on local systems so teams can develop and test locally before deploying through an integrated CI/CD flow.

- A fully managed platform that reduces reliance on DevOps teams and offers in-place upgrades and day-one access to new features.

Astro’s architecture

Astro’s architecture consists of a control plane and a single-tenant data plane. The control plane runs in Astronomer’s cloud and provides a central place for customers to observe and administer their Airflow deployments. The data plane lives in your cloud — AWS, Azure, and/or GCP — and is where you keep your Airflow environments and the data that flows through them.

A single point of control

Astro’s control plane is a single pane of glass you can use to observe, manage, and maximize your use of Airflow — while keeping your cloud spend under control. It gives you granular insight into your pipelines and the cloud resources your Airflow deployments are actually consuming. It also makes it easy for you to scale out and diversify your Airflow deployments, enabling you to spin up new environments as you need them — for example, to expand the capacity of an existing deployment or to create separate deployments to isolate and better support different kinds of internal teams.

Astro makes it simple to tune your Airflow deployments, too: in place of Apache Airflow®’s dozens of configurations, Astro asks you to define the CPU and memory capacity for your workers. That’s it. Astro’s built-in auto-scaling does the rest.

You can deploy and manage your Astro clusters across cloud availability zones and cloud regions; you can even manage separate Astro deployments across multiple cloud providers.

Airflow, engineered for the cloud

Astro’s data plane lives in your single-tenant cloud. It hosts the Astro clusters that power your Airflow deployments. This lets you keep your Airflow DAGs, tasks, and data running in your cloud. You own and control your code; we just manage your Astro environment for you.

Astro Runtime — Astronomer’s hardened, cloud-native distribution of Apache Airflow® — gives you a scalable, sustainable way to build, deploy, and maintain customized Airflow environments you can use to support decentralized data architectures or team-based deployments. With Astro Runtime as a common substrate on which you add your DAGs, tasks, and dependencies, you can be confident that your data pipelines will perform reliably each time they run — whether that’s on your local laptop (with our Astro CLI), or in Astro deployed in AWS, Microsoft Azure, or Google Cloud.

Astro Runtime gives you day-one access to the latest Airflow features. With in-place upgrades that take minutes instead of months, you can stay current instead of waiting in the DevOps backlog. Over 60% of Astro customers are already running Airflow 2.3 with dynamic task mapping, just five weeks after launch.

Unparalleled observability

The combination of the Astro control plane and data plane with Astro Runtime gives you unparalleled observability into your data pipelines. Key to this is Astro’s use of OpenLineage, an open standard for tracking data lineage across systems. Each time your DAGs and tasks run, they emit detailed lineage metadata. Your Astro data plane collects this metadata and sends it to the Astro control plane, where it’s made available for analysis. You can use the lineage metadata Astro extracts to observe the health and performance of your data pipelines, troubleshoot and resolve data outages, analyze the impact of upstream or downstream changes to your data pipelines, and monitor the quality of your data.
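To make the idea of lineage metadata more concrete, here is a minimal sketch in plain Python of what an OpenLineage-style run event can look like, and how a set of such events might be used for downstream impact analysis. It is an illustration under stated assumptions, not Astro's implementation: the helper functions `make_run_event` and `downstream_datasets`, the namespace, the job and dataset names, and the producer URL are all hypothetical, and the event includes only a subset of the fields the OpenLineage specification defines.

```python
import json
import uuid
from datetime import datetime, timezone


def make_run_event(event_type, job_name, inputs, outputs,
                   namespace="example_namespace", run_id=None):
    """Build a dict shaped like an OpenLineage run event (subset of fields)."""
    return {
        "eventType": event_type,  # e.g. "START", "COMPLETE", "FAIL"
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": run_id or str(uuid.uuid4())},
        "job": {"namespace": namespace, "name": job_name},
        "inputs": [{"namespace": namespace, "name": n} for n in inputs],
        "outputs": [{"namespace": namespace, "name": n} for n in outputs],
        # Placeholder value; a real producer identifies the emitting integration.
        "producer": "https://example.com/hypothetical-producer",
    }


def downstream_datasets(events, dataset):
    """Return every dataset reachable downstream of `dataset`."""
    # Each job run contributes input -> output edges to the lineage graph.
    edges = {}
    for ev in events:
        for src in ev["inputs"]:
            for dst in ev["outputs"]:
                edges.setdefault(src["name"], set()).add(dst["name"])
    # Depth-first walk over the edges, starting from the given dataset.
    seen, stack = set(), [dataset]
    while stack:
        for nxt in edges.get(stack.pop(), ()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


# Two hypothetical task runs: one loads raw data, one builds a report from it.
events = [
    make_run_event("COMPLETE", "load_orders",
                   ["raw.orders"], ["staging.orders"]),
    make_run_event("COMPLETE", "build_report",
                   ["staging.orders"], ["reports.daily_revenue"]),
]

print(json.dumps(events[0], indent=2))
print(sorted(downstream_datasets(events, "raw.orders")))
```

In this toy example, a change to `raw.orders` would affect both `staging.orders` and `reports.daily_revenue`, which is the kind of upstream and downstream question that lineage metadata is meant to answer.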

See it in action

Astro saves businesses time, money, and resources by bringing order and observability to distributed data ecosystems. It allows data teams to focus their time and energy on building pipelines that move the business, not managing Airflow. And we can have you up and running in under an hour.

Interested in learning more? Sign up for a demo today.