Apache Airflow® at Astronomer—Taking Data Orchestration to the Next Level

Astronomer is the Airflow company. We offer the easiest and fastest way to build, run, and observe data pipelines-as-code with Apache Airflow®. We’ve delivered successful Airflow deployments to hundreds of customers including Herman Miller, Rappi, and Société Générale. More than that, we are active members of the Apache Airflow® project and a driving force behind it, collaborating with the open-source community to advance the framework and ensure it thrives.

What is Apache Airflow®?

Apache Airflow® is the world’s most popular data orchestration platform, a framework for programmatically authoring, scheduling, and monitoring data pipelines. It was created as an open-source project at Airbnb and later brought into the Incubator Program of the Apache Software Foundation, which named it a Top-Level Apache Project in 2019. Since then, it has evolved into an advanced data orchestration tool used by organizations spanning many industries, including software, healthcare, retail, banking, and fintech.

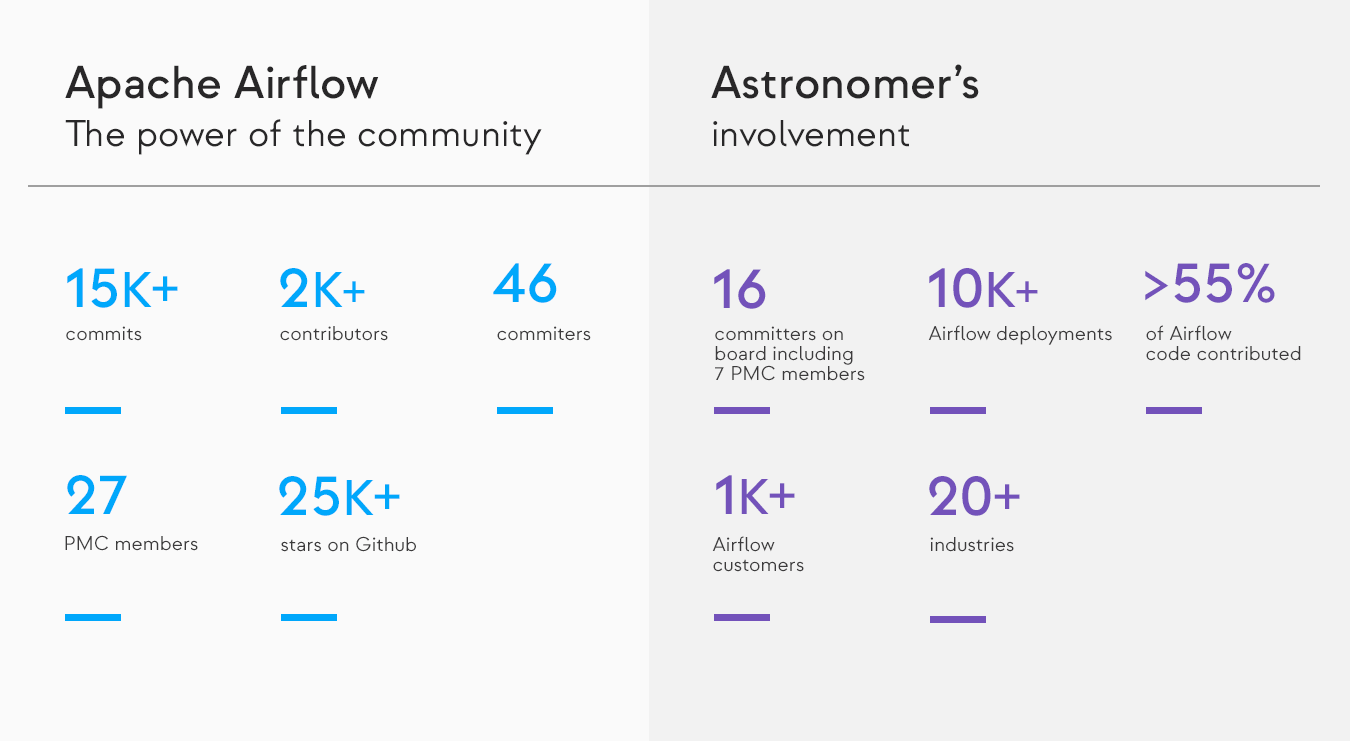

Today, with a thriving community of more than 2k contributors, 25k+ stars on GitHub, and thousands of users (including organizations ranging from early-stage startups to Fortune 500s and tech giants), Airflow is widely recognized as the industry’s leading data orchestration solution.

Used by thousands of data engineers and chosen as the unbiased data control plane by companies such as Apple, Adobe, Dropbox, PayPal, Tesla, and Electronic Arts, Airflow successfully connects business with data processing.

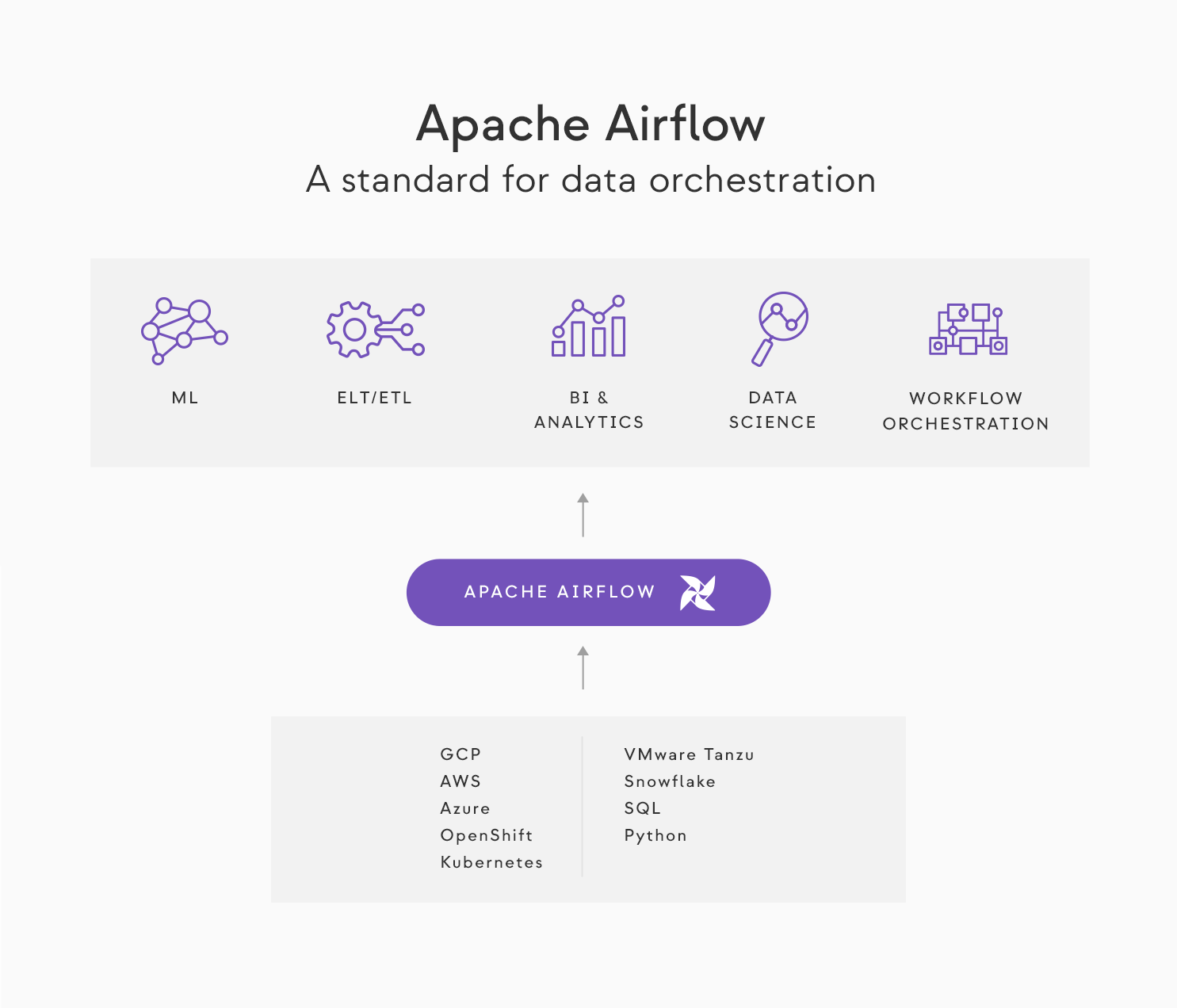

Why is Apache Airflow® the Standard for Data Orchestration?

Data orchestration is a relatively new concept. As organizations began handling larger volumes of data, data engineering emerged as a profession for managing complex, business-critical data flows, and data orchestration emerged as the way to do it.

Today, data orchestration is an automated process that brings together data from multiple storage locations and lets you author, schedule, and monitor data pipelines programmatically. Data orchestration platforms let you control data, monitor systems, and draw valuable business insights.

Apache Airflow® has been widely embraced as the orchestration platform of choice, with 12 million downloads per month. To learn more about effectively leveraging Apache Airflow®, check out these 10 best practices for modern data orchestration.

One factor behind Airflow’s success is the fact that it’s built on the popular programming language Python. The rich PyPI ecosystem and metaprogramming capabilities of Python make Airflow highly flexible, enabling organizations to tailor it to their real-world needs.

But it’s Airflow’s massively engaged community, more than anything else, that accounts for its success. Talented PMC members, committers, and contributors continually release new features based on user feedback, drive both code and no-code engagement through initiatives like the Airflow Summit, and ensure that Airflow keeps up with the latest data orchestration trends.

Airflow’s unique features and community have empowered data professionals everywhere to create and run complex pipelines with a minimum of effort, and have made it a leader in data orchestration.

Apache Airflow® in Business

Apache Airflow® is an ideal tool for a wide range of use cases, including ELT/ETL, BI and analytics, machine learning, and data science.

Here are some of the ways Airflow helps Astronomer clients make better business decisions by allowing them to make sense of data:

- Fintech—Airflow helps drive investment insights by synthesizing many data sources, making it easier to run successful analyses.

- Retail—Airflow enables a data engineering team to build a data platform for analyzing demand for a specific product or service in a given place and time frame, which makes it well suited to dynamic supply chain and dynamic pricing strategies.

- Social media—Airflow is used in training machine learning algorithms to offer "recommended" content to consumers and increase engagement.

- Banking—Airflow helps modernize a data stack without needing a cloud migration, which is a significant benefit given that many banks are still required to keep their data on-premises due to strict regulations.

- E-commerce—Airflow is used to consolidate marketing and sales data from several places in order to make better business decisions.

- Software/data—Airflow improves the data scheduling journey, allowing data engineering teams to iterate quickly and keep up with business requests.

- Entertainment—Airflow is used for gaming analytics and reports, and to create a unified platform that allows a corporation to make sense of data in AI projects.

Astronomer—The Driving Force Behind Apache Airflow®

With its rapidly growing number of downloads and contributors, Airflow is at the center of the data management conversation—a conversation Astronomer is helping to drive.

Our commitment is evident in our people. We've got a robust Airflow Engineering team and sixteen active committers on board, including seven PMC members: Ash Berlin-Taylor, Kaxil Naik, Daniel Imberman, Jed Cunningham, Bolke de Bruin, Sumit Maheshwari, and Ephraim Anierobi.

A significant portion of the work all these people do revolves around Airflow releases—creating new features, testing, troubleshooting, and ensuring that the project continues to improve and grow. Their hard work, combined with that of the community at large, allowed us to deliver Airflow 2.0 to the community in late 2020.

Airflow 2+

One of the main goals of Airflow 2.0 was to address a scheduler reliability issue, which was becoming increasingly important as the project was growing in popularity and expanding beyond the use cases of its Airbnb origins back in 2014.

The HA Scheduler addressed three main areas of concern: high availability, scalability, and performance.

High availability—Airflow 2.0 came with the ability to run multiple schedulers concurrently in an active/active model. If one scheduler fails, the others are already running and take over its work, so there is no downtime and no recovery time.

Scalability—Airflow 2.0 Scheduler’s support of an active/active model was also the foundation for horizontal scalability since the number of schedulers can be increased beyond two, to whatever is appropriate for the load.

Performance—The Airflow 2.0 Scheduler expanded the use of DAG Serialization by using the serialized DAGs from the database for task scheduling and invocation. This reduced the time needed to repetitively parse the DAG files for scheduling. Airflow 2.0 also incorporated fast-follow implementation, as well as optimizations in the task startup process and in the scheduler loop, which reduced task latency.
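
The active/active model described above can be pictured with a small simulation. This is only a sketch under stated assumptions, not Airflow's scheduler code: several scheduler threads pull from one shared pool of pending tasks, and a lock stands in for the database-level locking that lets each scheduler claim work without colliding with the others. The names `TaskStore`, `claim_batch`, `run_scheduler`, and `simulate` are hypothetical.

```python
import threading


class TaskStore:
    """Stands in for the metadata database shared by all schedulers."""

    def __init__(self, task_ids):
        self._pending = list(task_ids)
        self._lock = threading.Lock()  # assumption: stand-in for database locking

    def claim_batch(self, size):
        """Atomically hand out up to `size` pending tasks to one caller."""
        with self._lock:
            batch = self._pending[:size]
            self._pending = self._pending[size:]
            return batch


def run_scheduler(name, store, claimed, batch_size=3):
    """Hypothetical scheduler loop: claim work until nothing is pending."""
    while True:
        batch = store.claim_batch(batch_size)
        if not batch:
            return
        claimed[name].extend(batch)


def simulate(num_schedulers, num_tasks):
    """Run several schedulers against one store and report who claimed what."""
    store = TaskStore(f"task_{i}" for i in range(num_tasks))
    # Each scheduler writes only to its own list, so no extra locking is needed here.
    claimed = {f"scheduler_{n}": [] for n in range(num_schedulers)}
    threads = [
        threading.Thread(target=run_scheduler, args=(name, store, claimed))
        for name in claimed
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return claimed


claimed = simulate(num_schedulers=3, num_tasks=20)
all_claimed = [task for tasks in claimed.values() for task in tasks]
```

However the work ends up divided between the schedulers, every task is claimed exactly once. Adding a scheduler only adds another consumer of the same store, which is the intuition behind scaling horizontally.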

Along with the new scheduler, we implemented many other features making it easier for people to adopt Airflow:

Independent providers—Providers have historically been bundled into the core Airflow distribution and versioned alongside every Apache Airflow® release. Since Airflow 2.0, they have been split into their own airflow/providers directory so that they can be released and versioned independently of the core Airflow distribution. This gives users more freedom to adjust their Airflow deployments to their specific needs.

Full REST API—The original experimental Airflow API was narrow in scope and lacked critical elements of functionality, including a robust authorization and permissions framework. Airflow 2.0 introduced a new, comprehensive REST API that is well documented and allows engineers to fully interact with Airflow without the CLI or user interface—meaning they can now create applications on top of Airflow.
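
As a rough illustration of building on the REST API, the sketch below prepares a request that triggers a DAG run using only the Python standard library. It assumes a deployment reachable at `http://localhost:8080`, basic authentication enabled for the API, placeholder credentials, and a hypothetical DAG named `example_dag`; `build_trigger_request` is an illustrative helper, not part of Airflow. The request is built but not sent.

```python
import base64
import json
import urllib.request

BASE_URL = "http://localhost:8080/api/v1"  # assumption: local Airflow 2 deployment


def build_trigger_request(dag_id, conf, username, password):
    """Build (but do not send) a request that asks Airflow to start a DAG run."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"{BASE_URL}/dags/{dag_id}/dagRuns",
        data=json.dumps({"conf": conf}).encode(),
        headers={
            "Authorization": f"Basic {token}",  # assumption: basic auth is enabled
            "Content-Type": "application/json",
        },
        method="POST",
    )


# "example_dag" and the admin/admin credentials are placeholders.
request = build_trigger_request("example_dag", {"source": "api"}, "admin", "admin")

# To send it against a running deployment:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

An application could wrap calls like this to start pipelines in response to its own events, without going through the CLI or the user interface.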

TaskFlow API—Prior to Airflow 2.0, Airflow didn’t have a precise way to declare messages passed between tasks in a DAG. The TaskFlow API is a functional API that explicitly declares message passing while implicitly declaring task dependencies. In other words, it makes the DAGs cleaner and easier to understand.
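
To make "explicit message passing, implicit dependencies" concrete, here is a toy model in plain Python. It is not Airflow's implementation or its API: the `task` decorator, the `Output` handle, and the `DEPENDENCIES` registry are all hypothetical. The point is only the mechanism, in which calling a decorated function returns a handle, and passing that handle to another task both delivers the value and records the dependency.

```python
import functools

DEPENDENCIES = []  # (upstream_name, downstream_name) pairs, recorded implicitly


class Output:
    """Handle for a task's return value, tagged with the task that produced it."""

    def __init__(self, task_name, value):
        self.task_name = task_name
        self.value = value


def task(fn):
    """Toy decorator: unwraps Output arguments and records who fed whom."""

    @functools.wraps(fn)
    def wrapper(*args):
        values = []
        for arg in args:
            if isinstance(arg, Output):
                DEPENDENCIES.append((arg.task_name, fn.__name__))
                values.append(arg.value)
            else:
                values.append(arg)
        return Output(fn.__name__, fn(*values))

    return wrapper


@task
def extract():
    return [1, 2, 3]


@task
def transform(numbers):
    return sum(numbers)


@task
def load(total):
    return f"loaded total={total}"


# Passing results as arguments is the only wiring the author writes.
result = load(transform(extract()))
```

Unlike this toy, which runs each function immediately, Airflow uses such calls to build a graph that the scheduler executes later. The authoring experience is similar, though: the data flow is written once and the ordering follows from it.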

While the majority of the changes in Airflow 2.0 were aimed at professionals who manage Apache Airflow® deployments, there was another category of Airflow users whose demands needed to be addressed as well: data engineers writing DAGs. This is why, in subsequent releases of Airflow 2, we’ve focused on the DAG authoring experience. In Airflow 2.2 we introduced new, flexible scheduling options with Custom Timetables, as well as Deferrable Operators that allow for more efficient work on data coming from external systems.

As we continue our work on future Airflow releases, we are keeping data engineering needs front of mind, and continuing to optimize the process of running and writing DAGs. For example, in the upcoming release of Airflow 2.3, we are delivering long-awaited Dynamic Task Templates. The feature will include, among other things, the core mapping capability as well as the ability to iterate over a supplied list and execute tasks or task groups for each element in the list. Stay tuned!
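
Iterating over a supplied list and running a task for each element can be sketched in plain Python. This is a conceptual illustration only and does not show the Airflow 2.3 interface, which this article does not describe; `map_over`, `MappedInstance`, and `process_file` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class MappedInstance:
    """One task instance created for one element of the supplied list."""

    index: int
    argument: str
    result: str


def map_over(task_fn, supplied_list):
    """Create and run one instance of `task_fn` per element of the list."""
    return [
        MappedInstance(index=i, argument=item, result=task_fn(item))
        for i, item in enumerate(supplied_list)
    ]


def process_file(path):
    """Hypothetical task body."""
    return f"processed {path}"


# The list could come from an upstream task, so its length need not be
# known when the pipeline is written.
files = ["a.csv", "b.csv", "c.csv"]
instances = map_over(process_file, files)
```

The number of instances follows the data rather than the pipeline definition, so a run with three files produces three instances and a run with three hundred produces three hundred.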

The Future of Airflow

With every new release, Astronomer is unlocking more of Airflow’s potential and moving closer to the goal of democratizing the use of Airflow, so that all members of the data team can work with or otherwise benefit from it. We’ve built Astro—the essential data orchestration platform. With the strength and vibrancy of Airflow at its core, Astro offers a scalable, adaptable, and efficient blueprint for successful data orchestration.

To learn more about our Airflow involvement and Astro, schedule a call with one of our experts today.