Astro CLI: The Easiest Way to Install Apache Airflow®

There is a free, easy way to install Apache Airflow® and have a data pipeline running in under five minutes.

That may be surprising to hear, at least to someone experienced with the challenges of installing Airflow on Docker or Kubernetes. After all, getting started with any data orchestration tool, and then scaling it to a production-grade environment, has been notoriously difficult in the past.

But here at Astronomer, we’ve spent the last few years investing in a free, open source command line interface (CLI) for data orchestration that makes testing pipelines possible in less than five minutes: the Astro CLI. Our mission has been to make data orchestration easy and accessible, for customers and community users alike.

Specifically, we’ve focused on addressing questions that have come up over and over in our years of working with data practitioners — questions like:

- What is the easiest and fastest way for me to test Airflow DAGs and tasks locally?

- How should I promote code from my local environment to the cloud?

- What’s the best way for me to collaborate with my co-workers on shared data pipelines and environments?

- How can I automate all of this?

Today, we’re thrilled to give you an update on what the Astro CLI currently offers the open source community, and on the problems we’re dedicated to solving in the long term.

Are you a hands-on learner? Try the Astro CLI now or contribute on GitHub.

The Baseline Benefits

We created the Astro CLI during the early days of the company with a simple objective: Enable users to run Apache Airflow® locally and push code to their Airflow environments running on Astronomer. Prior to the Astro CLI, we had learned from our customers that there was no reasonable way to run Airflow locally. The options were Breeze, which is so cumbersome that it requires a 45-minute explainer video, or the airflow standalone command, which is impossible not to outgrow once you’re running more than a single DAG. There just wasn’t another way.

So we set out to solve that problem. Initially, the Astro CLI was really just a wrapper around Docker Compose that supported two generic commands:

- astro dev start, which creates Docker containers for all the Airflow components required to run a DAG: the scheduler, the webserver, and a simple Postgres metadata database.

- astro deploy, which bundles your DAG files and packages into a Docker image and pushes it to Astronomer.

As simple as this sounds, the Astro CLI gave rise to a satisfying aha moment for developers, who no longer had to wrestle with things like Docker Compose files or entry points to get started.

Run astro dev start to run Airflow on localhost:8080

In the past two years, the Apache Airflow® open source project has published an official Docker image, which has become the primary way to run Airflow locally. But it’s still harder than it needs to be, and certainly harder than what you can do with the Astro CLI.

While we initially built the Astro CLI for our customers, the baseline benefits that the Astro CLI brings to local development are now just as powerful for the open source community.

Today, the Astro CLI still gets you a few basic things out of the box:

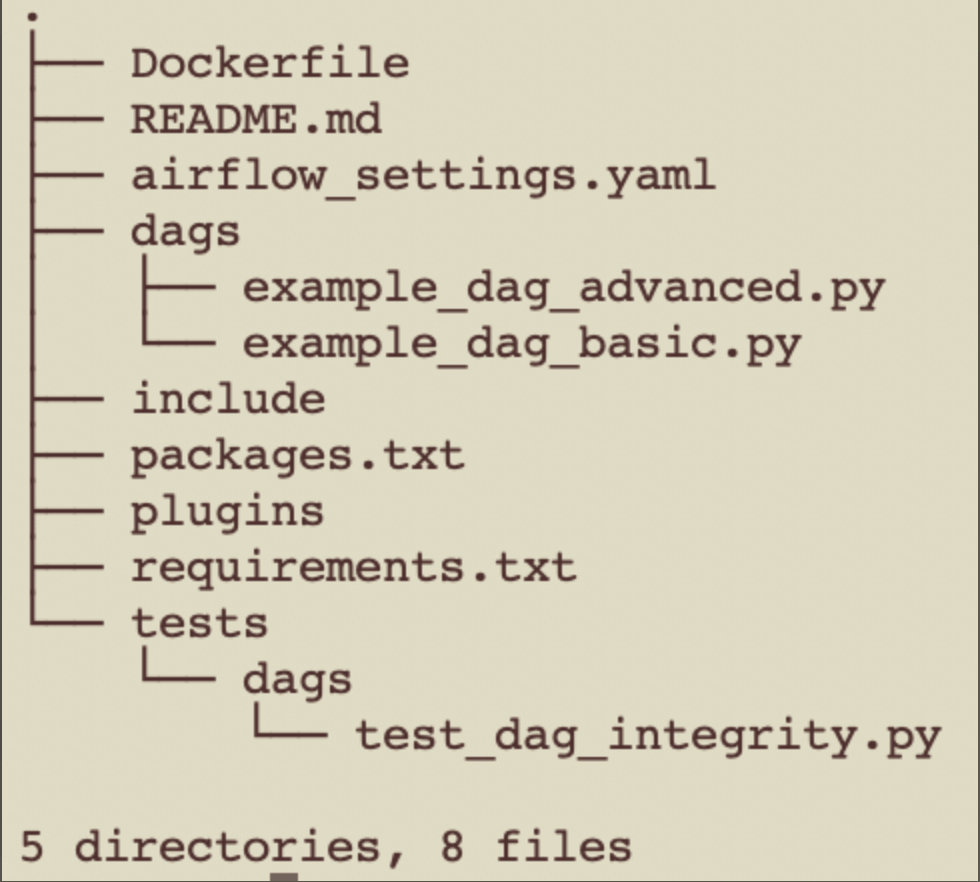

- A directory with all the files you need to run Airflow. We call it an “Astro project.” It includes dedicated folders for your DAGs, packages, plugins, and more.

- A local Airflow environment that takes one minute to start. Instead of worrying about defining and provisioning every Airflow component manually, you can install Docker and run a single command to have everything you need running on your laptop.

- An Astro Runtime image that includes the latest version of Apache Airflow® and a growing set of differentiating features built and maintained by our team. While these differentiating features are part of Astro’s commercial offering, we’re committed to making them open to use by anyone for local development.

An Astro project with all the files you need to run Airflow.

Thus far, these features have served us well — they’ve enabled local development for both our customers and community members, and saved a lot of people a lot of time.

We’ve always known, however, that as our product evolved and the data ecosystem expanded, we would need to elevate the functionality that the Astro CLI offers.

A Refined Mission

In the wake of the recent launch of Astro, our flagship cloud service and orchestration platform, and as part of our commitment to being a cloud-first company, we’ve increasingly embraced the ambitious mission of making data orchestration easy and accessible, both locally and on the cloud.

Let’s break that refined mission down into a few sub-categories:

- Make it easy to write data pipelines.

- Make it easy to test data pipelines.

- Make it easy to promote changes to your code.

- Make it easy to manage environments on the cloud.

… all while creating a world-class user experience in the command line.

For now, let’s look at how the Astro CLI makes it easier for users to test DAGs. (We have a lot more to say about writing data pipelines and how the CLI — along with the recently introduced Astro SDK — makes that easier, which we’ll get to in future posts.)

Test DAGs

A lot of the progress we’ve made in 2022 is about making it easier to test your code. As much as we’d like to say that Airflow is “just Python,” you can’t copy-paste a DAG into your IDE and expect VS Code to recognize that, for example, duplicate DAG IDs will result in an import error in the Airflow UI. DAG code relies on many Airflow-specific abstractions, and testing them properly requires additional logic.
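Duplicate DAG IDs are a good example of a rule that a generic Python linter won't flag. Purely as an illustration (this is not how Airflow or the Astro CLI performs the check), the stdlib-only sketch below statically scans DAG source code for literal dag_id values and reports any ID defined in more than one file. The function names are hypothetical, and the scan ignores the @dag decorator and dynamically generated IDs.

```python
import ast
from collections import defaultdict


def find_dag_ids(source: str) -> list[str]:
    """Return literal dag_id values passed to DAG(...) calls in one file.

    Hypothetical static check: it only sees string literals passed
    positionally, DAG("my_dag"), or by keyword, DAG(dag_id="my_dag").
    The source is parsed, never executed.
    """
    ids = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        # Match both DAG(...) and airflow.DAG(...)
        name = func.id if isinstance(func, ast.Name) else getattr(func, "attr", None)
        if name != "DAG":
            continue
        first = node.args[0] if node.args else None
        if isinstance(first, ast.Constant) and isinstance(first.value, str):
            ids.append(first.value)
        for kw in node.keywords:
            if kw.arg == "dag_id" and isinstance(kw.value, ast.Constant) and isinstance(kw.value.value, str):
                ids.append(kw.value.value)
    return ids


def find_duplicate_dag_ids(files: dict[str, str]) -> dict[str, list[str]]:
    """Map each dag_id defined more than once to the files that define it."""
    seen = defaultdict(list)
    for path, source in files.items():
        for dag_id in find_dag_ids(source):
            seen[dag_id].append(path)
    return {dag_id: paths for dag_id, paths in seen.items() if len(paths) > 1}


if __name__ == "__main__":
    example = {
        "dags/a.py": 'with DAG("etl") as dag:\n    pass\n',
        "dags/b.py": 'dag = DAG(dag_id="etl")\n',
    }
    print(find_duplicate_dag_ids(example))  # {'etl': ['dags/a.py', 'dags/b.py']}
```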

So, how are we solving that problem?

To start with, the Astro CLI has an astro dev parse command that checks for basic syntax and import errors in two to three seconds, without actually requiring that all Airflow components be running. In short, this command:

- Tells you if your DAGs cannot be parsed by the Airflow scheduler.

- Saves you time that you would otherwise need to spend pushing your changes, checking the Airflow UI, and viewing logs.

- Is the fastest way to check your DAG code in real time as you develop.

For Astro users, this command can be enforced as part of the process of pushing code to Astro.

Run astro dev parse to test for basic syntax and import errors locally.
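astro dev parse checks for both syntax and import errors. The stdlib-only sketch below covers only the first half of that: it compiles, without executing, every .py file under a DAGs folder and collects syntax errors. The function name is hypothetical, and this is not how the Astro CLI implements the command.

```python
from pathlib import Path


def find_syntax_errors(dags_dir: str) -> dict[str, str]:
    """Compile (but do not run) every .py file under dags_dir.

    Returns a mapping of file path -> error message for files that fail
    to compile. Import errors are NOT detected, because nothing is executed.
    """
    errors = {}
    for path in sorted(Path(dags_dir).rglob("*.py")):
        source = path.read_text(encoding="utf-8")
        try:
            compile(source, str(path), "exec")
        except SyntaxError as exc:
            errors[str(path)] = f"line {exc.lineno}: {exc.msg}"
    return errors
```

Because nothing is imported, a DAG that references a missing package still passes this check; that is the kind of error astro dev parse also reports.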

For more advanced users, the Astro CLI also supports a native way to bake in unit tests written with the pytest framework, with the astro dev pytest command. This command:

- Is enabled by a dedicated ./tests directory that’s available in all Astro projects.

- Includes two example pytests for users getting started.

- Runs all pytests by default every time you start your Airflow environment with the --pytest flag.

- Gives you complete control and security over your own testing framework.

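```python
import logging
import os
from contextlib import contextmanager

from airflow.models import DagBag


@contextmanager
def suppress_logging(namespace):
    """Temporarily disable a logger (referenced below, defined here so the example runs)."""
    logger = logging.getLogger(namespace)
    old_value = logger.disabled
    logger.disabled = True
    try:
        yield
    finally:
        logger.disabled = old_value


def get_import_errors():
    """
    Generate a list of (path, error) tuples for import errors in the DagBag
    """
    with suppress_logging("airflow"):
        dag_bag = DagBag(include_examples=False)

        def strip_path_prefix(path):
            # Report paths relative to AIRFLOW_HOME for readable test IDs
            return os.path.relpath(path, os.environ.get("AIRFLOW_HOME"))

        # Prepend (None, None) so at least one test case is always generated
        return [(None, None)] + [
            (strip_path_prefix(k), v.strip()) for k, v in dag_bag.import_errors.items()
        ]
```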

An example pytest that checks for import errors.
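The (None, None) entry prepended in get_import_errors ensures pytest always generates at least one test case, even when the DagBag reports no import errors. The stdlib-only sketch below reproduces that pattern with a plain dictionary standing in for DagBag.import_errors, so it runs without Airflow. build_cases and check_case are hypothetical names, not part of the Astro project template.

```python
def build_cases(import_errors: dict[str, str]) -> list[tuple]:
    """Turn {file_path: error_text} into (path, message) test cases.

    The leading (None, None) placeholder guarantees at least one case, so a
    parametrized test still runs (as a no-op) when there are no import errors.
    """
    return [(None, None)] + [
        (path, message.strip()) for path, message in import_errors.items()
    ]


def check_case(rel_path, message) -> None:
    """Pass for the placeholder case; fail for any real import error."""
    if rel_path and message:
        raise AssertionError(f"{rel_path} failed to import with message:\n{message}")


# With pytest installed, the cases could drive a parametrized test, e.g.:
#
#   @pytest.mark.parametrize("rel_path,message", build_cases(errors))
#   def test_file_imports(rel_path, message):
#       check_case(rel_path, message)

if __name__ == "__main__":
    # A plain dict stands in for DagBag.import_errors (hypothetical data).
    cases = build_cases({"dags/broken.py": "ModuleNotFoundError: No module named 'foo'\n"})
    for rel_path, message in cases:
        try:
            check_case(rel_path, message)
            print(f"ok: {rel_path}")
        except AssertionError as exc:
            print(f"FAILED: {exc}")
```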

For more information about these two commands, see Introducing New Astro CLI Commands to Make DAG Testing Easier. In the second half of this year, we’re looking to expand testing capabilities to the individual DAG or task. We’re interested, for example, in what would happen if we just allowed you to run a more Pythonic astro test <mydag.py> command that returns top-notch output.

Apply Changes and Promote Code

All of the Astro CLI functionality we’ve described up until this section is free and available to the open source community. For many users, that functionality may very well be enough to deliver a valuable local development experience. Many others, however, have found that the Astro CLI is even more powerful when used in the context of the Astro platform.

Let’s say that you leverage the testing framework in the Astro CLI and you have a well-written data pipeline that runs perfectly on your laptop. How do you get it running in a development or staging environment on the cloud?

With the Astro CLI, the golden command to push code to Astro is: astro deploy. This command bundles your files (DAGs, Python packages, OS-level packages, utils) into a Docker image, pushes it to our Docker registry, and runs it in your data plane.

On Astro, you have a few options:

- Create a new Airflow environment on Astro (we call it a Deployment) that’s dedicated to your new pipeline and can act as a fresh development environment.

- Push code to an existing Deployment on Astro.

- Create a CI/CD pipeline that automates pushing code to Astro every time you make a change to your project.

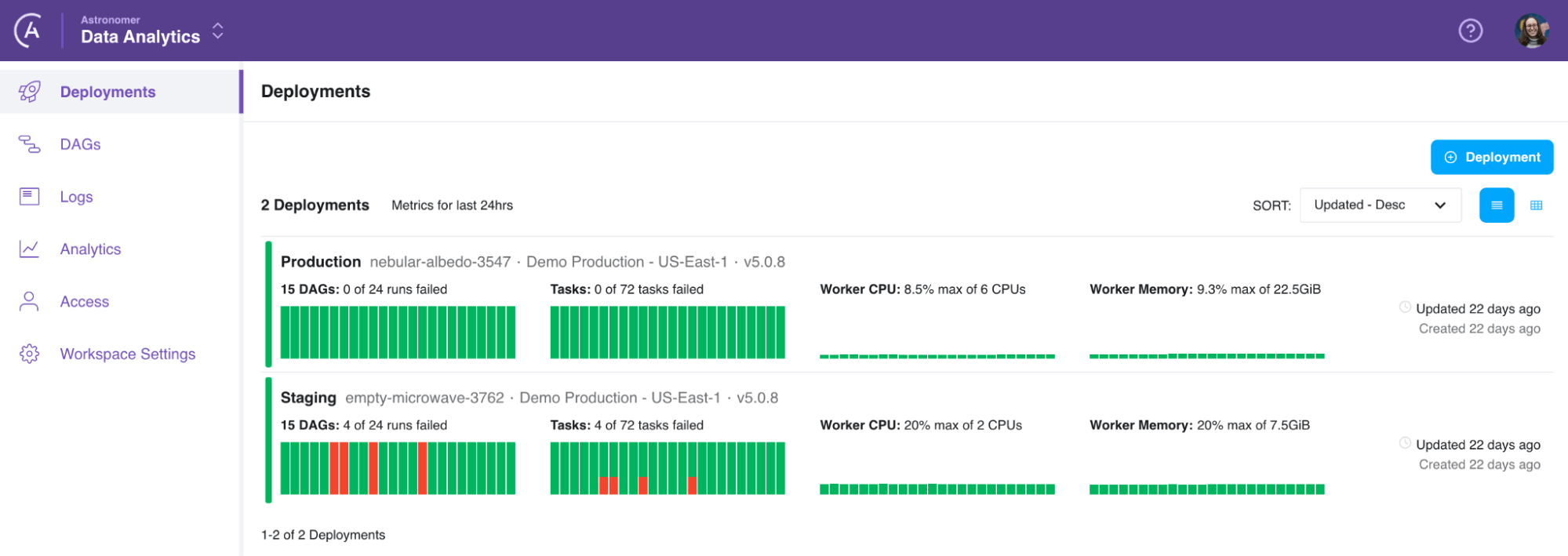

Once your code is on Astro, you can take full advantage of our flagship cloud service. View data pipelines across Deployments in a single place, optimize resource consumption, view data lineage, manage secrets, assign user permissions, and more: everything you need to make sure your data pipelines are production-ready.

Over the next few months, we’ll be enriching this experience with some exciting changes. Sneak peek: a new astro deploy --dags command will allow you to push only changes to your DAG files without including your dependencies, which means speedy code deploys that don’t rely on building a new Docker image and restarting workers. We also intend to expose the concept of “environments” as a native facet of Astro, and build a tighter integration with GitHub.
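The idea behind a DAG-only deploy can be sketched as a CI decision: if every changed file is under the project's dags/ folder, a DAG-only push is enough; anything else (new packages, a changed Dockerfile) still needs a full image build. The helper below is a hypothetical sketch of that check. It assumes DAGs live in a top-level dags/ folder and that your CI system supplies the list of changed paths, and it does not call the Astro CLI.

```python
from pathlib import PurePosixPath


def dags_only_change(changed_files: list[str], dags_dir: str = "dags") -> bool:
    """Return True if every changed path sits under the top-level dags_dir.

    Hypothetical CI helper: True means a DAG-only deploy would be enough;
    False (e.g. requirements.txt or the Dockerfile changed) means the Docker
    image has to be rebuilt.
    """
    if not changed_files:
        return False  # nothing changed, so nothing to deploy
    return all(PurePosixPath(path).parts[:1] == (dags_dir,) for path in changed_files)


if __name__ == "__main__":
    print(dags_only_change(["dags/etl.py", "dags/utils/helpers.py"]))  # True
    print(dags_only_change(["dags/etl.py", "requirements.txt"]))  # False
```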

Automate in the Cloud

Once your data pipeline is running on Astro, the Astro CLI has a variety of commands that allow you to automate almost everything. If you can do it manually in the Cloud UI, you can automate it with the Astro CLI and an Astro API key.

For example, you can:

- Export or import environment variables between the cloud and your local environment to avoid manually recreating or copy-pasting secrets across environments.

- Automate updating a Deployment as the requirements of your pipelines change.

- Automate creating a Deployment, deploying a few DAGs, and deleting that Deployment once the DAGs run. (Coming soon)

- Define your Deployment as code in a YAML file to make it that much easier to create new environments with those same configurations. (Coming soon)

View your deployments in a single place on Astro.
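The Astro CLI handles exporting and importing variables itself; the sketch below only illustrates the underlying idea of reconciling two environments. It parses simple KEY=VALUE lines, the format used by an Astro project's .env file, and lists variable names that exist on one side but not the other. parse_env and diff_env are hypothetical helpers, and the parser deliberately ignores quoting, export prefixes, and other dotenv edge cases.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)  # split on the first "=" only
        env[key.strip()] = value.strip()
    return env


def diff_env(local: dict[str, str], cloud: dict[str, str]) -> dict[str, list[str]]:
    """Report variable names present on only one side (values are not compared)."""
    return {
        "missing_locally": sorted(cloud.keys() - local.keys()),
        "missing_in_cloud": sorted(local.keys() - cloud.keys()),
    }


if __name__ == "__main__":
    local = parse_env("API_URL=http://localhost:8000\nDEBUG=true\n")
    cloud = parse_env("API_URL=https://api.example.com\nWAREHOUSE=prod\n")
    print(diff_env(local, cloud))  # {'missing_locally': ['WAREHOUSE'], 'missing_in_cloud': ['DEBUG']}
```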

In the coming months, we’ll expand our functionality here too. We’re thinking a lot about how we can make it easier for users to create, manage, and share secrets more securely.

Conclusion

To summarize, the essence of the Astro CLI is that it’s open source, free to use, and allows you to:

- Run Airflow locally.

- Test and parse your DAGs.

- Automate everything on the cloud.

There’s more coming, so stay tuned. To get started:

- Install the Astro CLI if you’ve used Airflow before.

- Try our get started tutorial if you’re new to Airflow.

If you’re running on the cloud today and looking for a development experience that’s optimized for cloud-based connectivity, observability, and governance — try Astro.

And if you’re curious about helping Astronomer solve some of the hardest and most exciting local development problems out there — reach out to us. We’re hiring.