What Exactly Is a DAG?

Directed Acyclic Graph (DAG)

A DAG is a Directed Acyclic Graph: a conceptual representation of a series of activities or, in other words, a mathematical abstraction of a data pipeline. The two terms, DAG and data pipeline, are used in different circles, but they describe nearly the same mechanism. In a nutshell, a DAG (or a pipeline) defines the sequence of execution stages in a process that never loops back on itself.

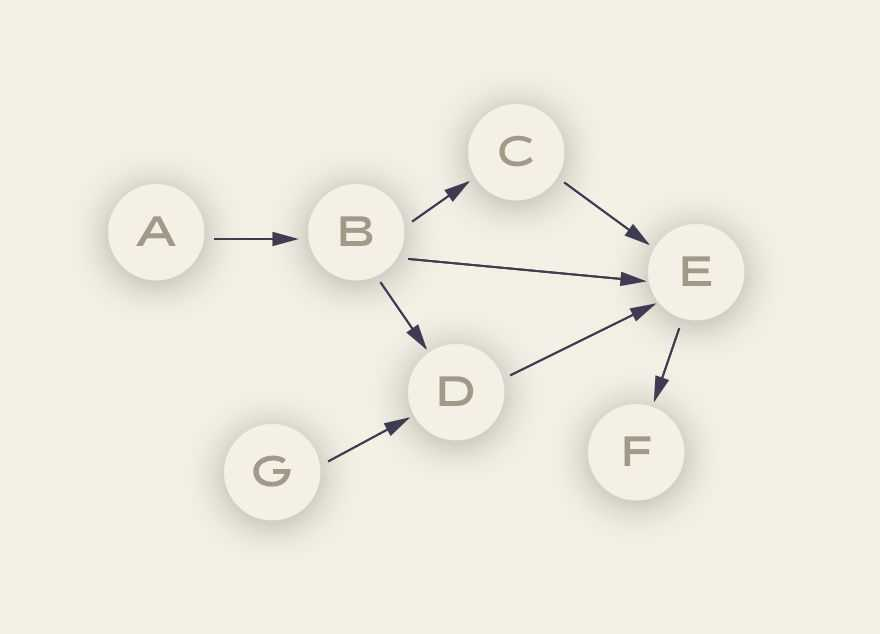

A DAG is a graph composed of circles and lines. Each circle, or “node”, represents a specific activity and is connected to other nodes by directed lines, known as “edges.” The graph is called directed because each edge points one way, so the activities must be completed in a specific order, and no activity can reference itself. Following the edges will never lead you back to a previous point, which makes the graph acyclic.

The DAG acronym stands for:

- Directed – Every edge points in one direction, from an upstream (previous) task to a downstream (subsequent) task. In general, if multiple tasks exist, each has at least one upstream or downstream task, or both. (Note, however, that some DAGs also contain parallel tasks that have no dependencies on each other.)

- Acyclic – No task can feed its output back into itself, either directly or through other tasks. Such a loop could run forever, so DAGs contain no cycles.

- Graph – In mathematics, a graph is a finite set of nodes (also called vertices), with edges connecting pairs of nodes. In the context of data engineering, each node in a graph represents a task. All tasks are laid out in a clear structure, with discrete processes occurring at set points and transparent relationships with other tasks.
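The three properties can be made concrete with a small sketch. The snippet below is a minimal illustration, not any particular library's API. It stores a graph as a Python dictionary that maps each node to the nodes its outgoing edges point to. It then checks the acyclic property with a depth-first search. The node names and the `is_acyclic` helper are hypothetical.

```python
# A directed graph as an adjacency list: each key is a node (a task),
# and each value lists the nodes its outgoing edges point to.
Graph = dict[str, list[str]]


def is_acyclic(graph: Graph) -> bool:
    """Return True if following edges can never lead back to a node on the current path."""
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, fully explored
    # Include nodes that only ever appear as edge targets.
    nodes = set(graph) | {n for targets in graph.values() for n in targets}
    color = {node: WHITE for node in nodes}

    def visit(node: str) -> bool:
        color[node] = GRAY
        for nxt in graph.get(node, []):
            if color[nxt] == GRAY:  # an edge back onto the current path is a cycle
                return False
            if color[nxt] == WHITE and not visit(nxt):
                return False
        color[node] = BLACK
        return True

    return all(visit(n) for n in nodes if color[n] == WHITE)


pipeline = {"extract": ["transform"], "transform": ["load"], "load": []}
looping = {"extract": ["transform"], "transform": ["extract"]}
print(is_acyclic(pipeline))  # True
print(is_acyclic(looping))   # False
```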

When Are DAGs Useful?

A DAG is a useful visualization of a data pipeline because it offers a high-level view of a workflow; that view can help you identify parts of the pipeline that could be made more efficient.
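A DAG can expose inefficiency by showing which tasks do not depend on each other and could therefore run in parallel. The sketch below uses Python's standard-library `graphlib` module to group the tasks of a hypothetical pipeline into stages. The task names are made up for illustration. Note that `graphlib` expects each task to be mapped to the set of tasks it depends on.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline, written as {task: set of upstream tasks it depends on}.
depends_on = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "clean_customers": {"extract_customers"},
    "join": {"clean_orders", "clean_customers"},
    "report": {"join"},
}


def parallel_stages(deps):
    """Group tasks into stages; tasks within one stage have no dependencies on each other."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the graph contains a cycle
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose upstream tasks are all done
        stages.append(ready)
        sorter.done(*ready)
    return stages


for number, stage in enumerate(parallel_stages(depends_on), start=1):
    print(number, stage)
# 1 ['extract_customers', 'extract_orders']
# 2 ['clean_customers', 'clean_orders']
# 3 ['join']
# 4 ['report']
```

Each stage could in principle run concurrently. Comparing the number of stages with the number of tasks gives a rough sense of how much parallelism the pipeline allows.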

Writing workflow code by hand costs engineers time, which is one reason there are many tools for automating the process, such as Apache Airflow®. A good first step toward efficient automation is recognizing that DAGs can model the movement of data in nearly every area of computing.

The Benefits of Code-Based Pipelines

“At Astronomer, we believe using a code-based data pipeline tool like Airflow should be a standard,” says Kenten Danas, Lead Developer Advocate at Astronomer. There are many reasons for this, but these high-level concepts are crucial:

- Code-based pipelines are extremely dynamic. If you can write it in code, then you can do it in your data pipeline.

- Code-based pipelines are highly extensible. You can integrate with basically every system out there, as long as it has an API.

- Code-based pipelines are more manageable. Since everything is in code, pipelines integrate seamlessly with your source control, CI/CD, and general developer workflows, so there’s no need to manage them separately.
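The following rough illustration shows what "dynamic" can mean in practice. It builds a pipeline's structure in an ordinary Python loop, so adding a source table means adding one string rather than drawing new boxes. The table names and the `build_pipeline` helper are hypothetical. Airflow DAG files can generate tasks in loops in a similar way, but this snippet does not use Airflow.

```python
def build_pipeline(tables: list[str]) -> dict[str, set[str]]:
    """Return {task: upstream tasks} with one extract and one load task per table."""
    depends_on: dict[str, set[str]] = {}
    for table in tables:
        depends_on[f"extract_{table}"] = set()
        depends_on[f"load_{table}"] = {f"extract_{table}"}
    # One final task that waits for every load to finish.
    depends_on["refresh_dashboard"] = {f"load_{t}" for t in tables}
    return depends_on


pipeline = build_pipeline(["orders", "customers", "payments"])
print(len(pipeline))                          # 7
print(sorted(pipeline["refresh_dashboard"]))  # ['load_customers', 'load_orders', 'load_payments']
```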

An Example of a DAG

Consider the DAG above. In this DAG, each edge (line) has a specific direction (denoted by the arrow) connecting different nodes. This is the key quality of a DAG: data can flow only in the direction of the edge. In this example, data can go from A to B, but never from B to A. In the same way that water flows through pipes in one direction, data must follow the direction defined by the graph. Nodes from which a directed edge extends are considered upstream, while nodes at the receiving end of an edge are considered downstream.
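The upstream/downstream vocabulary maps directly onto reachability in the graph. This minimal sketch hard-codes a hypothetical edge list. The edges are loosely based on the relationships described in the text (A→B, B→C/D/E, D→E, E→F, plus a second source G feeding E), and the exact edges in the figure may differ. The `downstream` and `upstream` helpers are illustrative names, not a library API.

```python
# Hypothetical edges loosely based on the example in the text; the figure may differ.
edges = {
    "A": ["B"],
    "B": ["C", "D", "E"],
    "D": ["E"],
    "E": ["F"],
    "G": ["E"],
}


def downstream(graph, node):
    """All nodes reachable by following edges forward from `node`."""
    seen, stack = set(), list(graph.get(node, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(graph.get(current, []))
    return seen


def upstream(graph, node):
    """All nodes from which `node` can be reached by following edges."""
    return {other for other in graph if node in downstream(graph, other)}


print(sorted(downstream(edges, "B")))  # ['C', 'D', 'E', 'F']
print(sorted(upstream(edges, "E")))    # ['A', 'B', 'D', 'G']
print("A" in downstream(edges, "B"))   # False: data never flows from B back to A
```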

In addition to moving in one direction, data never loops back: nodes never become self-referential. That is, they can never inform themselves, as this could create an infinite loop. So data can go from A to B to C/D/E, but once it reaches a node, no subsequent process can lead it back to that node or to any node upstream of it. Data coming from a new source, such as node G, can still lead to nodes that are already connected, but no downstream data can be passed back into G. This is the defining quality of a DAG.

Why must this be true for data pipelines? If F had a downstream process in the form of D, we would see a graph where D informs E, which informs F, which informs D, and so on. It creates a scenario where the pipeline could run indefinitely without ever ending. Like water that never makes it to the faucet, such a loop would be a waste of data flow. To put this example in real-world terms, imagine the DAG above represents a data engineering story:
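The D → E → F → D scenario can be checked mechanically. In this sketch, Python's standard-library `graphlib` returns a valid run order for the acyclic version. It raises `CycleError` once F is made upstream of D, because no valid order exists. The `execution_order` helper is a hypothetical name used for illustration.

```python
from graphlib import CycleError, TopologicalSorter


def execution_order(depends_on):
    """Return a valid run order, or None if the dependencies contain a cycle."""
    try:
        return list(TopologicalSorter(depends_on).static_order())
    except CycleError:
        return None


# {task: upstream tasks}: D informs E, which informs F (a hypothetical encoding of the text).
pipeline = {"E": {"D"}, "F": {"E"}}
print(execution_order(pipeline))  # ['D', 'E', 'F']

# Making D depend on F closes the loop D -> E -> F -> D, so no valid order exists.
looping = {**pipeline, "D": {"F"}}
print(execution_order(looping))  # None
```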

- Node A could be the code for pulling data out of an API.

- Node B could be the code for anonymizing the data and dropping any IP addresses.

- Node D could be the code for checking that no duplicate record IDs exist.

- Node E could be the code for loading that data into a database.

- Node F could be the code for running a SQL query on the new tables to update a dashboard.
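The sketch below wires the story's steps together and runs them in dependency order. It is a toy, not a real pipeline: the API call, database, and dashboard query are faked with in-memory data, and the tasks pass data through a shared `state` dictionary for simplicity. All function names are hypothetical. The dependency edges (A→B, B→D, B→E, D→E, E→F) are an assumption based on the example. Node C is omitted because the story does not assign it a role.

```python
from graphlib import TopologicalSorter

state = {}  # shared scratch space; a simplification for this sketch


def node_a_pull():       # A: pull records from an API (faked here)
    state["records"] = [{"id": 1, "ip": "10.0.0.1"}, {"id": 2, "ip": "10.0.0.2"}]


def node_b_anonymize():  # B: drop the IP address from every record
    state["records"] = [{k: v for k, v in r.items() if k != "ip"} for r in state["records"]]


def node_d_check_ids():  # D: fail loudly if any record ID is duplicated
    ids = [r["id"] for r in state["records"]]
    if len(ids) != len(set(ids)):
        raise ValueError("duplicate record IDs")


def node_e_load():       # E: "load" into an in-memory stand-in for a database table
    state["table"] = list(state["records"])


def node_f_refresh():    # F: a query-like aggregation that would feed a dashboard
    state["dashboard_row_count"] = len(state["table"])


tasks = {"A": node_a_pull, "B": node_b_anonymize, "D": node_d_check_ids,
         "E": node_e_load, "F": node_f_refresh}
# {task: upstream tasks}
depends_on = {"B": {"A"}, "D": {"B"}, "E": {"B", "D"}, "F": {"E"}}

order = list(TopologicalSorter(depends_on).static_order())
for name in order:
    tasks[name]()

print(order)                         # ['A', 'B', 'D', 'E', 'F']
print(state["dashboard_row_count"])  # 2
```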

DAGs in Airflow

In Airflow, a DAG is your data pipeline and represents a set of instructions that must be completed in a specific order. This is beneficial to data orchestration for a few reasons:

- DAG dependencies ensure that your data tasks are executed in the same order every time, making them reliable for your everyday data infrastructure.

- The graphing component of DAGs allows you to visualize dependencies in Airflow’s user interface.

- Because every path through a DAG runs in one direction and never loops, it’s easy to develop and test your data pipelines against expected outcomes.

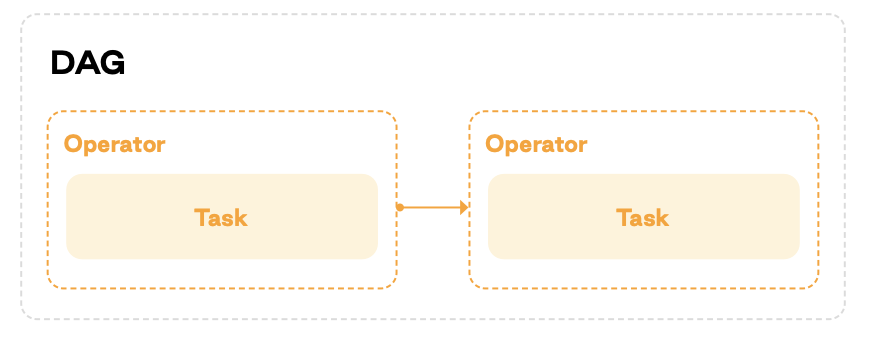

An Airflow DAG is defined in a Python file and built from tasks. You can think of tasks as the nodes of your DAG: each one represents a single action, and it can have both upstream tasks that it depends on and downstream tasks that depend on it.

Tasks are wrapped by Operators, which are the building blocks of Airflow and define the behavior of their tasks. For example, a task built with the PythonOperator executes a Python function, while a task built with a Sensor waits for a specific condition to be met before it completes.
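Here is a toy model in plain Python that illustrates the task/operator split without depending on Airflow. The classes `ToyOperator`, `ToyPythonOperator`, and `ToySensor` are hypothetical and deliberately not Airflow's classes, although some parameter names loosely echo Airflow's. They only mirror the idea that an operator defines what a task does: run a function, or wait until a condition holds.

```python
import time
from typing import Callable


class ToyOperator:
    """Base class: an operator defines behavior; each instance is one task."""

    def __init__(self, task_id: str):
        self.task_id = task_id

    def execute(self):
        raise NotImplementedError


class ToyPythonOperator(ToyOperator):
    """Runs a Python callable and returns its result."""

    def __init__(self, task_id: str, python_callable: Callable[[], object]):
        super().__init__(task_id)
        self.python_callable = python_callable

    def execute(self):
        return self.python_callable()


class ToySensor(ToyOperator):
    """Re-checks a condition until it is true, giving up after max_checks attempts."""

    def __init__(self, task_id: str, condition: Callable[[], bool],
                 poke_interval: float = 1.0, max_checks: int = 10):
        super().__init__(task_id)
        self.condition = condition
        self.poke_interval = poke_interval
        self.max_checks = max_checks

    def execute(self):
        for _ in range(self.max_checks):
            if self.condition():
                return True
            time.sleep(self.poke_interval)
        raise TimeoutError(f"{self.task_id}: condition never became true")


checks = iter([False, False, True])  # simulated: the awaited file appears on the third check
wait = ToySensor("wait_for_file", condition=lambda: next(checks), poke_interval=0)
greet = ToyPythonOperator("greet", python_callable=lambda: "hello")
print(wait.execute(), greet.execute())  # True hello
```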

The following diagram shows how these concepts work in practice. As you can see, by writing a single DAG file in Python, you can begin to define complex relationships between data and actions.

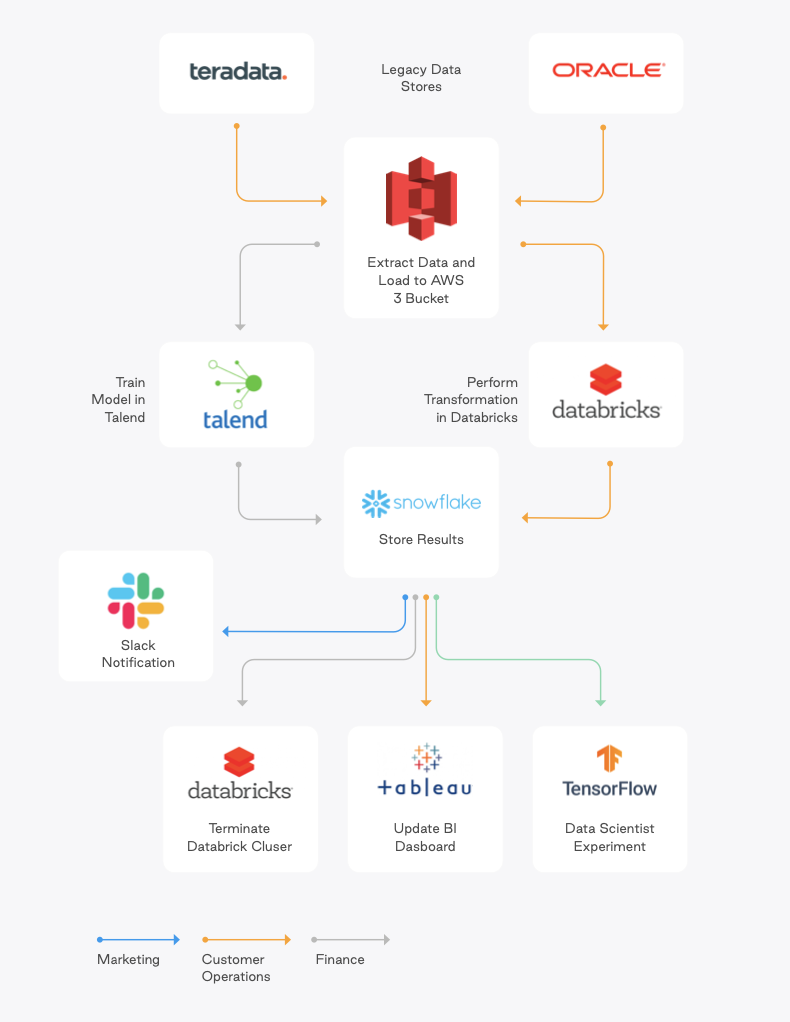

You can see the flexibility of DAGs in the following real-world example:

Using a single DAG, you are able to:

- Extract data from a legacy data store and load it into an AWS S3 bucket.

- Either train a machine learning model or complete a data transformation, depending on the data you’re using.

- Store the results of the previous action in a database.

- Send information about the entire process to various metrics and reporting systems.
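The branching step in the list above can be sketched as a dependency graph in which only one of two paths runs. In this minimal, hypothetical model, a boolean `use_for_training` stands in for whatever inspection of the data would choose the path, and the task names are illustrative. In Airflow itself, a task that joins two branches usually needs a non-default trigger rule so that it is not skipped along with the branch that was not chosen. This sketch ignores that detail.

```python
from graphlib import TopologicalSorter

# {task: upstream tasks} for the four steps listed above (task names are hypothetical).
depends_on = {
    "choose_branch": {"extract_to_s3"},
    "train_model": {"choose_branch"},
    "transform_data": {"choose_branch"},
    "store_results": {"train_model", "transform_data"},
    "report_metrics": {"store_results"},
}


def run(deps, use_for_training: bool) -> list[str]:
    """Return the tasks that actually run, skipping the branch that was not chosen."""
    skipped = {"transform_data"} if use_for_training else {"train_model"}
    return [t for t in TopologicalSorter(deps).static_order() if t not in skipped]


print(run(depends_on, use_for_training=True))
# ['extract_to_s3', 'choose_branch', 'train_model', 'store_results', 'report_metrics']
```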

Organizations use DAGs and pipelines that integrate with separate, interface-driven tools to extract, load, and transform data. But without an orchestration platform like Astronomer, these tools aren’t talking to each other. If there’s an error during loading, the other tools won’t know about it, so the transformation will run on bad data, or yesterday’s data, and deliver an inaccurate report. This is easy to avoid: a data orchestration platform can sit on top of everything, tying the DAGs together, orchestrating the data flow, and alerting you when something fails. Overseeing the end-to-end life cycle of data lets businesses manage the dependencies between all of their systems, which is vital for effective data management.
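The failure scenario described above can be sketched in a few lines. The orchestrator runs tasks in dependency order, marks everything downstream of a failed task as not run, and sends an alert. This is a minimal, hypothetical model of the idea, not Astronomer's or Airflow's implementation. The status strings loosely mirror Airflow task-state names, and the task functions and `alert` callback are made up for illustration.

```python
from graphlib import TopologicalSorter


def run_with_failure_handling(depends_on, tasks, alert):
    """Run tasks in dependency order; if a task fails, skip everything downstream of it."""
    status = {}
    for name in TopologicalSorter(depends_on).static_order():
        # Upstream tasks always appear earlier in the order, so their status is known here.
        if any(status.get(up) != "success" for up in depends_on.get(name, ())):
            status[name] = "upstream_failed"  # never run on bad or stale data
            continue
        try:
            tasks[name]()
            status[name] = "success"
        except Exception as exc:
            status[name] = "failed"
            alert(f"{name} failed: {exc}")
    return status


def failing_load():
    raise ConnectionError("warehouse unreachable")  # simulated loading error


alerts = []
status = run_with_failure_handling(
    {"load": {"extract"}, "transform": {"load"}, "report": {"transform"}},
    {"extract": lambda: None, "load": failing_load,
     "transform": lambda: None, "report": lambda: None},
    alert=alerts.append,
)
print(status)
# {'extract': 'success', 'load': 'failed', 'transform': 'upstream_failed', 'report': 'upstream_failed'}
print(alerts)  # ['load failed: warehouse unreachable']
```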

Frequently Asked Questions

What does DAG stand for?

DAG stands for “Directed Acyclic Graph”. DAGs represent a series of activities that happen in a specific order and do not self-reference (loop). It is these directed and non-cyclical properties that give the graph its name.

What is a DAG used for?

A DAG is used to visually represent a data workflow and the discrete processes applied to the data as it moves through the pipeline. This high-level view can improve understanding of the workflow, which in turn can help increase operational efficiency.

What is a DAG model?

A DAG is a graph that conceptually represents the discrete and directed relationships between variables. A DAG is often used to visually represent a data pipeline.