How an Improved DAG-Testing Command in the Astro CLI Made Its Way into Airflow

At Astronomer, we get that a great developer experience makes for a great overall Airflow experience.

The easier it is for authors to build and test DAGs on their local systems, the faster they can deploy them to production — and from there to rapidly deliver new reports, dashboards, and operational analytics, as well as integrate new sources of data to enrich existing analytics.

A great Airflow development experience also means that DAG authors can quickly build and test fixes for data outages, and refactor DAGs to accommodate growth. Smooth development leads to happier data engineers, data scientists, and analysts — along with satisfied business customers, who receive timely access to the data they need to do their jobs.

One of Airflow 2.5’s headline features is a product of Astronomer’s obsession with improving the Airflow development experience. The Airflow CLI’s rebuilt airflow dags test command came out of work by Astronomer’s Daniel Imberman to improve DAG testing in the Astro CLI, an open-source Airflow development environment that anyone can use to build and test DAGs on their local systems.

Imberman, a Strategy Engineer with Astronomer and a member of the Apache Airflow® project management committee, says he was looking for a faster way to test his DAGs in the Astro CLI under real-world conditions. His idea was simple enough: instead of scheduling and running DAGs in the Astro CLI’s virtual Airflow environment — which creates separate containers for Airflow, its scheduler, database, and triggerer daemon — why not build Airflow’s parsing and execution functions into a compact local library that users could run from the command line to test their DAGs?

Imberman teamed with David Koenitzer, the Astronomer Product Manager who oversees the Astro CLI, to build a new DAG-testing capability for that CLI. Once Imberman recognized his library could be used for the same purpose in the Airflow CLI, the rebuilt airflow dags test was born.

Making DAG Testing in the Astro CLI Even Faster

The Astro CLI’s built-in Python code linter (astro dev parse) already helps DAG authors debug common Python and Airflow syntax errors locally, but it cannot identify logical errors that only pop up when code actually runs in production.

Given this limitation, DAG authors need a way to see how their DAGs will behave under production-like conditions, ideally on their local systems. Previously, authors used the Astro CLI to bring up a full-blown local Airflow environment, and then manually run and debug their DAGs. This took a lot of time, especially when authors had to repeatedly retest complex DAGs.

This was the impetus for astro run, Imberman’s new DAG-testing implementation for the Astro CLI.

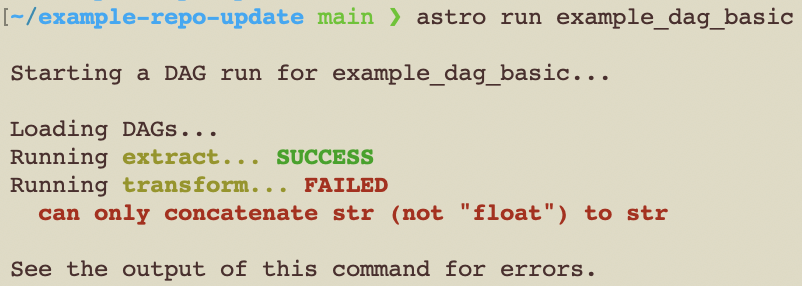

Imagine that a DAG contains an error in logic: say, a Python expression that tries to add a floating point (float) to a string (str) value. The DAG checks out okay using the Astro CLI’s built-in linter. However, using astro run to test the DAG triggers the following error in the Astro CLI’s console:

can only concatenate str (not "float") to str

The built-in linter, which only evaluates Python syntax errors, not errors in logic, won’t catch this.

An example of an error that wouldn’t be caught by the astro dev parse linter.
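
The gap between a parse-time check and a runtime check can be sketched in plain Python. This is a minimal illustration, not the Astro CLI's implementation: the function name build_report_line and its arguments are hypothetical, and ast.parse stands in here for a syntax-only check.

```python
import ast

# Hypothetical task body: syntactically valid, but it adds a float to a str.
TASK_SOURCE = '''
def build_report_line(label, amount):
    return "Total for " + label + ": " + amount
'''

# Step 1: a syntax-only check passes, because the code parses cleanly.
ast.parse(TASK_SOURCE)
syntax_ok = True

# Step 2: only executing the code exposes the type mismatch.
namespace = {}
exec(compile(TASK_SOURCE, "<task>", "exec"), namespace)
try:
    namespace["build_report_line"]("EMEA", 1234.5)
    runtime_error = None
except TypeError as err:
    runtime_error = str(err)

print("syntax check passed:", syntax_ok)
print("runtime error:", runtime_error)
```

The source parses without complaint, and the failure surfaces only when the function is called with a float, which is why a test that actually executes the DAG catches what a parser cannot.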

Until now, to find errors like this, DAG authors had to go outside of their workflows to run and test their DAGs in Airflow. This was not only distracting, but also time-consuming: it took far too long to cold-start a local Airflow instance, bring up the Airflow UI, find the DAG, manually run it, and navigate to the UI’s task-log view to debug errors. In too many cases, authors skipped this process and instead settled for running their local unit tests before promoting their code to QA testing, basically throwing the problem over the wall to testing engineers. This stretched out development cycles and delayed the deployment of code to production.

Now, with astro run — which rapidly parses and executes DAG code — authors can quickly identify and debug Python or Airflow errors that occur at runtime.

This makes for a drastic improvement over the status quo in Airflow, says Koenitzer. “The Astro CLI is now what Python development should be like: you do everything in one place — the local terminal — so you go much faster,” he says. “Plus, you know for certain your DAGs will run the way you expect in production.”

Two CLIs, Two Different Experiences

Both the Airflow CLI and the Astro CLI now use Imberman’s local library to run and test DAGs.

Users type either airflow dags test (Airflow CLI) or astro run (Astro CLI) to call the new Airflow library, which automatically picks up, parses, and runs the DAG they pass as a command-line parameter.
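
To make the idea of running a DAG in a single local process more concrete, here is a toy sketch using only the Python standard library. It is not Airflow's or the Astro CLI's code: the run_dag_locally function and the shape of the tasks and upstream dictionaries are assumptions made purely for illustration.

```python
from graphlib import TopologicalSorter


def run_dag_locally(tasks, upstream):
    """Run callables in dependency order, in-process, with no scheduler.

    tasks:    dict mapping task name -> zero-argument callable (hypothetical shape)
    upstream: dict mapping task name -> set of task names it depends on
    """
    results = {}
    for name in TopologicalSorter(upstream).static_order():
        # Any exception raised by a task propagates straight to the caller,
        # so the traceback lands in the terminal instead of a task log.
        results[name] = tasks[name]()
    return results


# A three-step pipeline: extract -> transform -> load.
tasks = {
    "extract": lambda: [1.5, 2.5],
    "transform": lambda: "transformed",
    "load": lambda: "loaded",
}
upstream = {"extract": set(), "transform": {"extract"}, "load": {"transform"}}

results = run_dag_locally(tasks, upstream)
print(list(results))
```

Because everything runs in one process, there is no scheduler or webserver to start, and a failing task stops the run at the point of failure.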

In both the Airflow CLI and Astro CLI, users can test against the same connections and environment variables they use in production Airflow. However, because they aren’t scheduling and running their DAGs in a full-blown Airflow environment, authors can cycle much faster. Both CLIs also output useful diagnostic information directly to the console — although the Astro CLI renders this in human-readable language.

And authors working in both CLIs can set debugging breakpoints, i.e., predefined places where their DAG stops executing, enabling them to observe its point-in-time state. Another commonality is that both commands start executing the DAG without first scheduling it in a full-blown Airflow environment, enabling users to iterate faster.

But there are a couple of crucial differences. The first is that the robust roadmap for the Astro CLI’s astro run capability lists a half dozen improvements planned for the next 12 months, including the ability to:

- Test individual tasks within DAGs

- Set a specific execution day and time, which is useful for simulating backfills

- Take input from dictionaries

- Run a selected group of tasks

Over the next year, astro run should evolve to support an interactive experience that allows authors to selectively run either DAGs or individual tasks, as well as start and stop DAG/task execution as needed.

The second difference is that, unlike the Airflow CLI, the Astro CLI is designed to provide a reproducible Airflow development experience. Authors can use the Astro CLI to customize a standard Airflow base image — a default Python environment and Airflow install, packed into a container — that runs the same way anywhere. By building DAGs, custom code, and other dependencies into this image, users can feel confident that DAGs they build locally will run reliably in their production Airflow environments.

This differs from the Airflow CLI, which uses the Python environment installed on the author’s local system. If this environment is 100% compatible with their production Airflow environment, authors can feel confident that DAGs built and tested locally will run as expected. This is almost never the case, however. The most likely scenario is that authors will use airflow dags test to fix errors before pushing DAGs to software testing, where engineers will fix other errors detected during real-world testing.

The Astro CLI Supports the Software Development Lifecycle

DAG testing in the Astro CLI compresses the time it takes for authors to build, test, and deploy DAG code, whether it’s destined for quality assurance testing (QA), user acceptance testing (UAT), or production.

When an author builds and tests with the Astro CLI and astro run, DAGs not only get promoted to production more quickly, but tend to run more reliably (without bugs or edge cases) in the production environment.

Because the Astro CLI is open source, and because it can work with custom-built Airflow runtime images, anyone can use it to build, deploy, and maintain their DAGs. The Astro CLI gives users a common command-line environment that supports the full software-development lifecycle — from local development and debugging, to QA testing and UAT, to staging and deployment.
