Accelerating ML Application Development: Production-Ready Airflow Integrations with Critical AI Tools

import FeaturedItem from "../@OLD_components_og/FeaturedItem.astro"; import PopupVideo from "../@OLD_components_og/PopupVideo.astro";

Generative AI and operational machine learning play crucial roles in the modern data landscape by enabling organizations to leverage their data to power new products and increase customer satisfaction. These technologies are used for virtual assistants, recommendation systems, content generation, and more. They help organizations build a competitive advantage through data-driven decision making, automation, enhanced business processes, and customer experiences.

Apache Airflow® is at the core of many teams’ ML operations, and with new integrations for Large Language Models (LLMs), Airflow enables these teams to build production-quality applications with the latest advances in ML and AI.

Simplifying ML Development

All too frequently, machine learning models and predictive analytics are created in silos, far removed from production systems and applications. Organizations face a perpetual challenge to turn a lone data scientist’s notebook into a production-ready application with stability, scaling, compliance, etc.

Organizations that standardize on one platform for orchestrating both their DataOps and MLOps workflows, however, are able to reduce not only the friction of end-to-end development but also infrastructure costs and IT sprawl. While it may seem counterintuitive, these teams also benefit from more choice. When the centralized orchestration platform, like Apache Airflow®, is open-source and includes integrations to nearly every data tool and platform, data and ML teams can pick the tools that work best for their needs while enjoying the benefits of standardization, governance, simplified troubleshooting, and reusability.

Apache Airflow® and Astro (Astronomer’s fully managed Airflow orchestration platform) is the place where data engineers and ML engineers meet to create business value from operational ML. With a massive number of data engineering pipelines running on Airflow every day across every industry and sector, it is the workhorse of modern data operations, and ML teams can piggyback off of this foundation for not only model inference but also training, evaluation, and monitoring.

Optimizing Airflow for Enhanced ML Applications

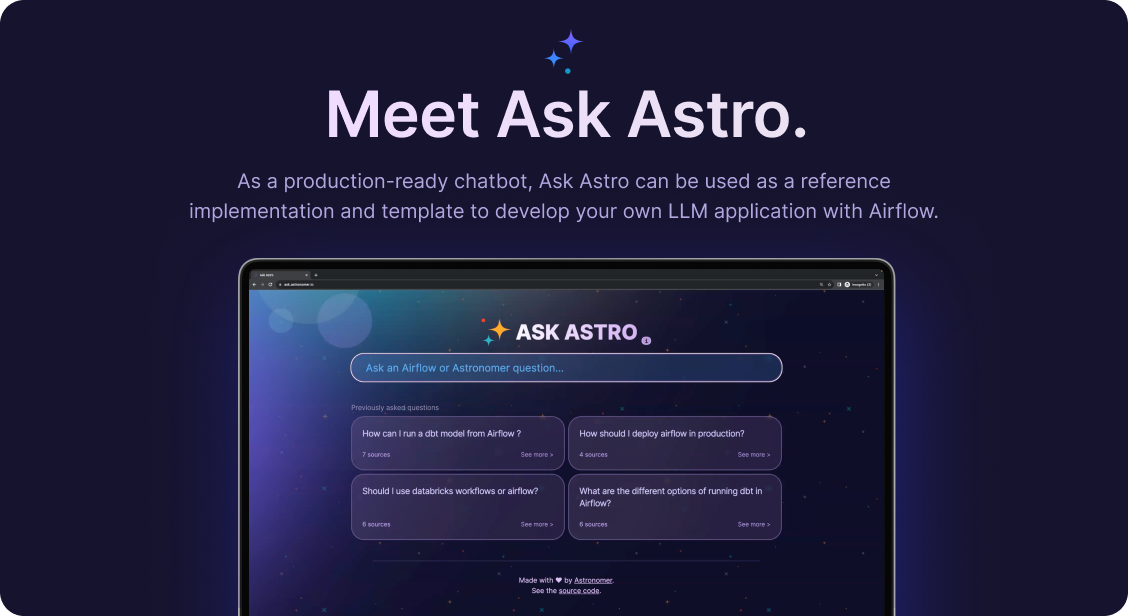

As organizations continue to find ways to leverage large language models, Airflow is increasingly front and center for the operationalization of things like unstructured data processing, Retrieval Augmented Generation (RAG), feedback processing, and fine-tuning of foundation models. To support these new use-cases and to provide a starting point for Airflow users, Astronomer has worked with the Airflow Community to create Ask Astro—as a public reference implementation of RAG with Airflow for conversational AI.

More broadly, Astronomer has led the development of new integrations with vector databases and LLM providers to support this new breed of applications and the pipelines that are needed to keep them safe, fresh, and manageable.

Connect to the Most Widely Used LLM Services and Vector Databases

Apache Airflow®, in combination with some of the most widely used vector databases (Weaviate, Pinecone, OpenSearch, pgvector) and natural language processing (NLP) providers (OpenAI, Cohere), offers extensibility through the latest in open-source development. Together, they enable a first-class experience in RAG development for applications like conversational AI, chatbots, fraud analysis, and more.

OpenAI

OpenAI is an AI research and deployment company that provides an API for accessing state-of-the-art models like GPT-4 and DALL·E 3. The OpenAI Airflow provider offers modules to easily integrate OpenAI with Airflow. Users can generate embeddings for data, a foundational step in NLP with LLM-powered applications.

View tutorial → Orchestrate OpenAI operations with Apache Airflow®

get_embeddings = OpenAIEmbeddingOperator.partial( task_id="get_embeddings", conn_id=OPENAI_CONN_ID, model="text-embedding-ada-002", ).expand(input_text=captain_responses)

Cohere

Cohere is an NLP platform that provides an API to access cutting-edge LLMs. The Cohere Airflow provider offers modules to easily integrate Cohere with Airflow. Users can leverage these enterprise-focused LLMs to easily create NLP applications using their own data.

View tutorial → Orchestrate Cohere LLMs with Apache Airflow®

get_embeddings = CohereEmbeddingOperator.partial( task_id="get_embeddings", conn_id=COHERE_CONN_ID, ).expand(input_text=recipes_list)

Weaviate

Weaviate is an open-source vector database, which stores high-dimensional embeddings of objects like text, images, audio, or video. The Weaviate Airflow provider offers modules to easily integrate Weaviate with Airflow. Users can process high-dimensional vector embeddings using an open-source vector database, which provides a rich set of features, exceptional scalability, and reliability.

View tutorial → Orchestrate Weaviate operations with Apache Airflow®

import_data = WeaviateIngestOperator( task_id="import_data", conn_id=WEAVIATE_USER_CONN_ID, class_name=CLASS_NAME, input_json=import_data_func( text_file_path=TEXT_FILE_PATH, class_name=CLASS_NAME ), trigger_rule="none_failed", )

pgvector

pgvector is an open-source extension for PostgreSQL databases that adds the capability to store and query high-dimensional object embeddings. The pgvector Airflow provider offers modules to easily integrate pgvector with Airflow. Users can unlock powerful functionalities for working with vectors in a high-dimensional space with this open-source extension for their PostgreSQL database.

View tutorial → Orchestrate pgvector operations with Apache Airflow®

import_embeddings_to_pgvector = PgVectorIngestOperator.partial( task_id="import_embeddings_to_pgvector", trigger_rule="none_failed", conn_id=POSTGRES_CONN_ID, sql=( f"INSERT INTO {TABLE_NAME} " "(book_id, title, year, author, description, vector) " "VALUES (%(book_id)s, %(title)s, %(year)s, " "%(author)s, %(description)s, %(vector)s) " "ON CONFLICT (book_id) DO NOTHING;" ), ).expand(parameters=book_embeddings)

Pinecone

Pinecone is a proprietary vector database platform designed for handling large-scale vector-based AI applications. The Pinecone Airflow provider offers modules to easily integrate Pinecone with Airflow.

View tutorial → Orchestrate Pinecone operations with Apache Airflow®

pinecone_vector_ingest = PineconeIngestOperator( task_id="pinecone_vector_ingest", conn_id=PINECONE_CONN_ID, index_name=PINECONE_INDEX_NAME, input_vectors=vectorized_data, )

OpenSearch

OpenSearch is an open-source distributed search and analytics engine based on Apache Lucene. It offers advanced search capabilities on large bodies of text alongside powerful machine learning plugins. The OpenSearch Airflow provider offers modules to easily integrate OpenSearch with Airflow.

View tutorial → Orchestrate OpenSearch operations with Apache Airflow®

search_for_keyword = OpenSearchQueryOperator( task_id=f"search_for_{KEYWORD_TO_SEARCH}", opensearch_conn_id=OPENSEARCH_CONN_ID, index_name=OPENSEARCH_INDEX_NAME, query={ "size": 0, "query": { "match": {"lines": {"query": KEYWORD_TO_SEARCH, "fuzziness": "AUTO"}} }, "aggs": { "most_mentions_person": {"terms": {"field": "speaker"}}, "most_mentions_song": {"terms": {"field": "title"}}, }, }, )

Additional Information

By enabling data-centric teams to more easily integrate data pipelines and data processing with ML workflows, organizations can streamline the development of operational AI, and realize the potential of AI and natural language processing in an operational setting.Ready to dive deeper on your own? Discover available modules designed for easy integration—visit the Astro Registry to see the latest AI/ML sample DAGs.