Debugging Airflow Made Easy: 3 Key Steps to Debug your DAGs

As developers, we would all love to live in a world where our code ran successfully the first time, every time. Unfortunately, that’s not the real world: errors in code, including your data pipelines, are inevitable. The better question is not how to avoid all errors entirely, but how to catch and debug them effectively before they cause you headaches in production.

Fortunately, Airflow (and the wider Airflow ecosystem) has you covered with a suite of tools, features, and community resources that make debugging your DAGs straightforward. In this post, we’ll walk through some high-level methods for easily debugging your DAGs. While we can’t cover every possible issue, following these tips will help you find and solve bugs and get to production faster.

Set up a good local development environment

Having a good local development environment is one of the best ways to make debugging your DAGs easier. Nobody wants to wait for a deployment to finish, only to find out that the new DAG has a simple syntax error. Local development allows you to see and test your changes in real time, so you get fast feedback and can catch and fix bugs early.

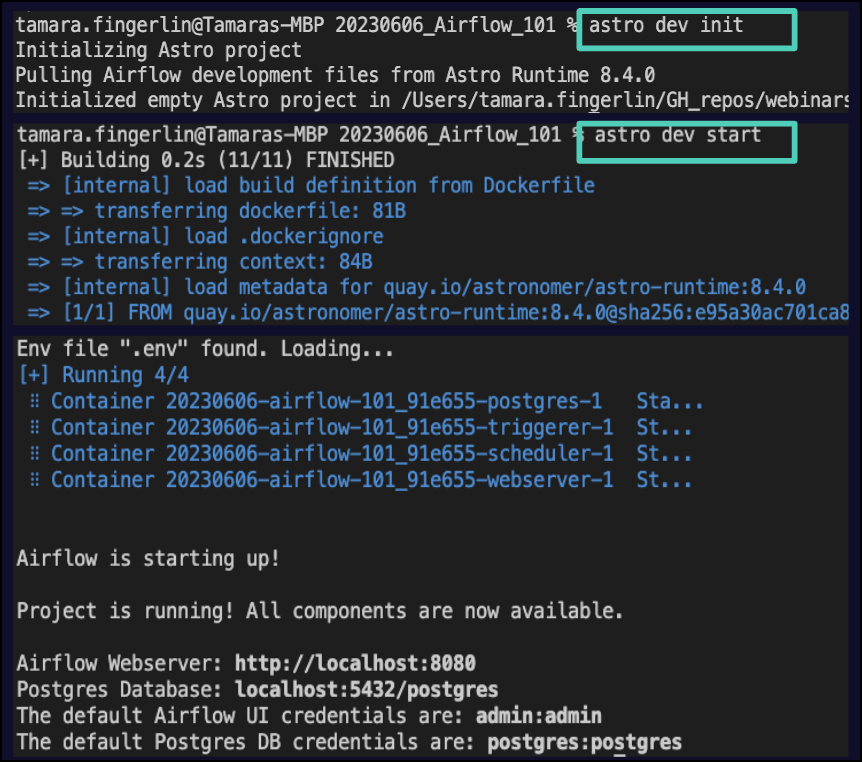

The open-source Astro CLI is a great option. It’s easy to install, runs on Docker, and you can get a local Airflow instance running with just two commands.

When working locally, any changes you make to your DAG Python files are picked up by your local Airflow instance without a redeploy. The Astro CLI also comes with built-in testing functionality (more on testing later), so you can debug programmatically if you prefer that to working in the Airflow UI.

Some organizations have restrictions around what code you can work with and what data you can access locally, so you may not be able to do all of your debugging in your local development environment. But even if you can’t test and debug your full pipeline, it can still be worth it to check your basic code locally first to catch simple errors like syntax issues, mis-named variables, etc., which tend to be the most common anyway.
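As a minimal sketch of that kind of basic local check, the snippet below parses every Python file in a DAG folder with the standard library's ast module and reports syntax errors. It never executes your DAG code and does not need Airflow installed. The function name find_syntax_errors and the folder name "dags" are assumptions made for illustration, not part of Airflow or the Astro CLI.

```python
import ast
from pathlib import Path


def find_syntax_errors(dag_folder):
    """Return {file path: error message} for every .py file that fails to parse."""
    errors = {}
    for path in sorted(Path(dag_folder).rglob("*.py")):
        try:
            # ast.parse only parses the source; it never runs the DAG file.
            ast.parse(path.read_text(encoding="utf-8"), filename=str(path))
        except SyntaxError as exc:
            errors[str(path)] = f"line {exc.lineno}: {exc.msg}"
    return errors


if __name__ == "__main__":
    # "dags" is an assumed folder name; point this at your own DAG directory.
    for file_path, message in find_syntax_errors("dags").items():
        print(f"{file_path}: {message}")
```

A check like this only catches syntax errors. Problems such as missing packages or mis-named variables surface only once Airflow actually imports the file.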

Eliminate common issues

Speaking of common issues, we’ve found that bugs in Airflow DAGs tend to follow the 80/20 rule: a small subset of issues accounts for a large portion of the bugs people encounter. Airflow is so widely used that we couldn’t possibly account for all of the potential bugs you might run into, but we can provide guidance on some of the more frequent ones. Eliminating these common issues is one of the best first steps to debugging your DAGs.

Some common DAG issues include:

- DAGs don’t appear in the Airflow UI: DAGs not being imported into Airflow is the first sign something is wrong. It’s also a great application for local development: typically you will see an import error in the Airflow UI that will tell you what’s going on. You can also check for import errors programmatically using the Astro CLI (astro dev run dags list-import-errors) or the Airflow CLI (airflow dags list-import-errors). If you don’t see an import error but your DAGs still don’t show up, there are a few other things you can check. More on that here.

- Dependency conflicts: This can be a headache whenever you’re working with Python applications. Your DAGs might fail to import because the proper packages aren’t installed in your Airflow environment, either because you didn’t add them, or because they conflict with core Airflow packages. There are several ways of managing dependency conflicts in Airflow. See this doc for more.

- DAGs are not running correctly: Maybe your DAG is showing up in the UI, but it isn’t running on the schedule you intended. Or it’s not showing the code you expected. Or maybe no DAGs are running because your scheduler isn’t functioning properly. For more issues like this, and tips to solve them, see this doc.

You may also have issues with specific tasks, such as tasks not showing up within a DAG, not running when you expected due to scaling limitations, unexpected failures, or issues with dynamic task mapping. For tips on how to debug all of these issues and more, see this doc.
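The import-error check from the list above can also be done from Python. The sketch below assumes an Airflow 2.x environment, where DagBag can be imported from airflow.models and exposes an import_errors dictionary mapping file paths to error text. The helper names collect_import_errors and format_import_errors, and the "dags" folder name used in the note below, are hypothetical.

```python
def collect_import_errors(dag_folder):
    """Parse dag_folder with Airflow's DagBag and return its import errors."""
    # Imported lazily so this module still loads where Airflow is not installed.
    from airflow.models import DagBag

    dag_bag = DagBag(dag_folder=dag_folder, include_examples=False)
    return dict(dag_bag.import_errors)


def format_import_errors(import_errors):
    """Turn {file path: error text} into a short, readable report."""
    if not import_errors:
        return "No import errors found."
    lines = []
    for file_path, error in sorted(import_errors.items()):
        stripped = error.strip()
        # Keep only the last line of the error text, which names the exception.
        last_line = stripped.splitlines()[-1] if stripped else "unknown error"
        lines.append(f"{file_path}: {last_line}")
    return "\n".join(lines)
```

With Airflow installed, calling print(format_import_errors(collect_import_errors("dags"))) should give roughly the same information as the CLI commands, in a form you can reuse inside a test.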

Implement effective DAG testing

Knowing that errors in your DAG code are inevitable, a crucial step to effectively running Airflow in production is setting up safeguards so your bugs are caught and dealt with early. Implementing effective testing of your DAGs, both during local development and during deployment using CI/CD, is always worth the effort.

There are multiple open source options for testing your DAGs. In Airflow 2.5+, you can use the dag.test() method, which allows you to run all tasks in a DAG within a single serialized Python process without running the Airflow scheduler. This allows for faster iteration and use of IDE debugging tools when developing DAGs. You can easily add the method to any DAG:

    from airflow.decorators import dag
    from pendulum import datetime
    from airflow.operators.empty import EmptyOperator


    @dag(
        start_date=datetime(2023, 1, 1),
        schedule="@daily",
        catchup=False,
    )
    def my_dag():
        t1 = EmptyOperator(task_id="t1")


    dag_object = my_dag()

    if __name__ == "__main__":
        dag_object.test()

dag.test() can also be used for testing variables, connections, etc. For more, see our guide.

The Astro CLI also has built-in testing functionality to check for import errors, standards set by your team (for example, every task needs at least two retries), and even whether your DAGs are ready to upgrade to the latest Airflow version. Tests with the Astro CLI can be run locally and integrated into your CI/CD pipeline so they run before your code is deployed.
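A team rule such as the two-retry requirement can be expressed as a small check. The sketch below is one possible shape for it, not the Astro CLI's own implementation. It assumes an Airflow 2.x environment in which each DAG exposes dag_id and tasks, and each task exposes task_id and retries. The names tasks_below_min_retries and assert_min_retries, and the "dags" folder, are hypothetical.

```python
def tasks_below_min_retries(dags, min_retries=2):
    """Return "dag_id.task_id" for every task with fewer than min_retries retries."""
    offenders = []
    for dag in dags:
        for task in dag.tasks:
            # Treat a missing or None retries value as zero retries.
            if (getattr(task, "retries", 0) or 0) < min_retries:
                offenders.append(f"{dag.dag_id}.{task.task_id}")
    return offenders


def assert_min_retries(dag_folder="dags", min_retries=2):
    """Load DAGs from dag_folder (requires Airflow) and fail if any task breaks the rule."""
    # Imported lazily so the pure helper above works without Airflow installed.
    from airflow.models import DagBag

    dag_bag = DagBag(dag_folder=dag_folder, include_examples=False)
    offenders = tasks_below_min_retries(dag_bag.dags.values(), min_retries)
    assert not offenders, f"Tasks with fewer than {min_retries} retries: {offenders}"
```

Calling assert_min_retries() from a pytest test function is one way to run the rule locally and again in your pipeline before deployment.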

More generally, testing is a complex topic, and needs will vary for every team. For more high-level guidance, check out our testing DAGs guide and read our Comprehensive Guide to Testing Airflow DAGs blog.

Get additional help

Debugging DAGs is something every Airflow developer will need to do, and it can get tricky given the wide range of use cases Airflow supports. But never fear: if the sections above don’t solve your problem, additional resources are available to you:

- Check out our Debug DAGs guide or watch our webinar recording for additional tips for fixing common issues.

- Read our blog 7 Common Errors to Check When Debugging Airflow DAGs

- Join the Airflow Slack and post in #newbie-questions, #troubleshooting, or any channel related to your specific issue. This is a great way to take advantage of the vast Airflow community, especially for more complex issues. Chances are, someone else has encountered it as well.

- If you think you’ve found a bug in Airflow, open an issue in the Airflow GitHub repo.

- If you are an Astronomer customer, reach out to Astronomer support with any Airflow or Astro related questions or issues.

And finally, if your Airflow headaches are slowing you down, consider a managed Airflow service like Astro. You can get started with a 14-day free trial.

Happy debugging!