Improving Ask Astro: The Journey to Enhanced Retrieval Augmented Generation (RAG) with Cohere Rerank, Part 4

Ask Astro: A RAG-based Chat Assistant using Large Language Models (LLM)

Ask Astro has been a popular tool for developers and customers seeking answers about Astronomer products and Apache Airflow® since its initial release. Built on a RAG-based LLM chat system, Ask Astro gives users quick, direct answers to their questions, along with the related documents used as sources. It was released in the #airflow-astronomer channel on Apache Airflow®’s Slack, as well as at ask.astronomer.io, and within a few days it was answering hundreds of questions a day. However, as the application gained popularity and the questions became more diverse, the need for more refined document retrieval and better answer accuracy became evident. To address this, we integrated Weaviate's hybrid search and Cohere Rerank into our existing system, significantly enhancing the quality of Ask Astro's answers.

Before Cohere: Utilizing an Embedding Model for Document Retrieval

Initially, Ask Astro ran on a blend of LangChain, GPT-3.5, GPT-4, and text-embedding-ada-002 (an OpenAI embedding model). While this system served as a robust foundation, we encountered accuracy limitations in document retrieval that occasionally led to unclear or incorrect responses.

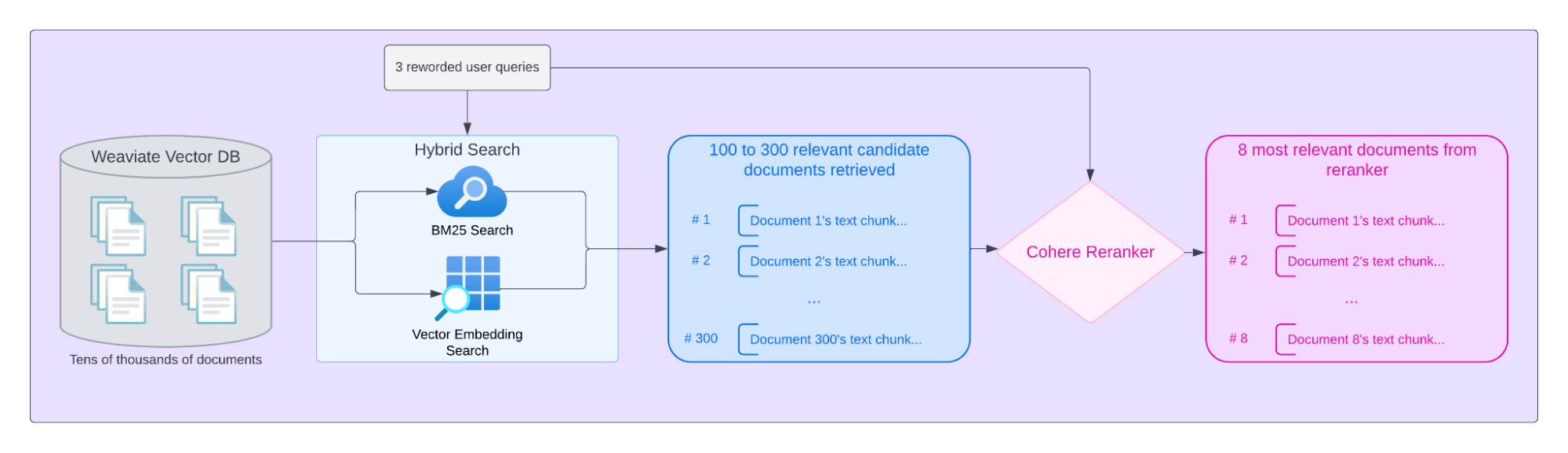

In the original setup, when a user asked a question, the question was reworded 3 times using GPT-3.5 and LangChain’s Multi-query retriever. For each rephrased query, the 4 most relevant documents were selected based on vector embeddings created by text-embedding-ada-002. The embeddings were stored in Weaviate’s vector database index using the Weaviate provider for Airflow, one of a collection of providers that are useful for building LLM applications with Airflow. The system then collated the 12 total documents from the 3 rephrased prompts and sent them, along with the original user query, to the GPT-4 model to generate a final response.
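
The flow described above can be sketched roughly as follows. This is a minimal illustration, not the actual Ask Astro code: `multi_query_retrieve`, `fake_rephrase`, and `fake_search` are hypothetical names, and the two stand-in functions replace the GPT-3.5 rewording step and the Weaviate vector search so the example runs on its own.

```python
from typing import Callable, List


def multi_query_retrieve(
    question: str,
    rephrase: Callable[[str, int], List[str]],
    search: Callable[[str, int], List[str]],
    n_rephrasings: int = 3,
    docs_per_query: int = 4,
) -> List[str]:
    """Collect the top documents for each rephrased query (no deduplication)."""
    collected: List[str] = []
    for query in rephrase(question, n_rephrasings):
        collected.extend(search(query, docs_per_query))
    return collected


# Hypothetical stand-ins so the sketch runs without an LLM or a vector database.
def fake_rephrase(question: str, n: int) -> List[str]:
    return [f"{question} (variant {i + 1})" for i in range(n)]


def fake_search(query: str, k: int) -> List[str]:
    return [f"doc {j + 1} for '{query}'" for j in range(k)]


docs = multi_query_retrieve("what is the Astro SDK", fake_rephrase, fake_search)
print(len(docs))  # 3 rephrasings x 4 documents = 12
```

In a setup like the one described, these 12 documents and the original question would then be passed to the answer-generating model.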

However, there were instances where certain queries and keywords did not align effectively with the embedding model, resulting in the retrieval of unrelated documents. A striking example of this was when a query like "what is the Astro SDK", which refers to an official Python SDK package provided by Astronomer, led to the retrieval of unrelated documents and elicited an inaccurate response suggesting that "no such thing exists."

A New Technique with Hybrid Search

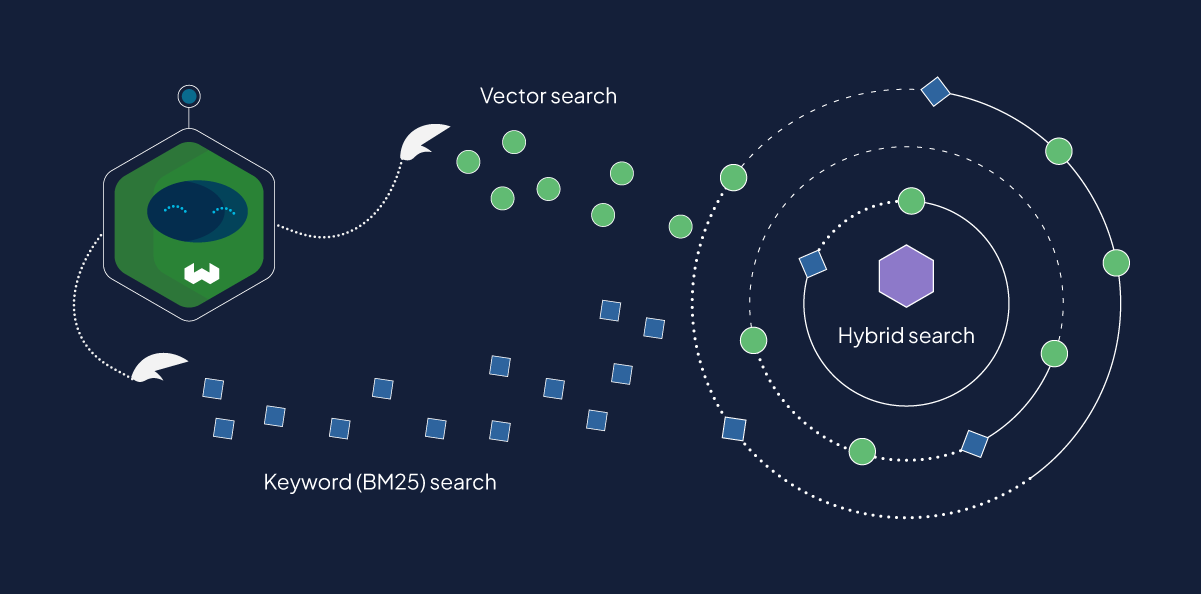

To improve precision, we introduced Weaviate's hybrid search into our retrieval chain. Hybrid search combines BM25 sparse vector search with dense vector similarity search from an embedding model, merging the two through a rank fusion scoring system. Unlike an embedding model, BM25 scores a document by how often the query terms appear in it, normalized by document length, which makes it a strong relevance signal for queries that hinge on exact keywords.
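
To make the BM25 idea concrete, here is a small self-contained sketch of the standard BM25 scoring formula. It is illustrative only and is not Weaviate's implementation: the function name `bm25_scores`, the whitespace tokenization, the sample documents, and the parameter values `k1=1.2` and `b=0.75` (commonly used defaults) are all assumptions. Besides term frequency and length normalization, BM25 also weights rare terms more heavily through an inverse document frequency factor.

```python
import math
from collections import Counter
from typing import List


def bm25_scores(query: str, docs: List[str], k1: float = 1.2, b: float = 0.75) -> List[float]:
    """Score each document against the query with the standard BM25 formula."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(tokens) for tokens in tokenized) / n_docs

    # Document frequency: in how many documents does each term occur?
    doc_freq: Counter = Counter()
    for tokens in tokenized:
        doc_freq.update(set(tokens))

    scores = []
    for tokens in tokenized:
        term_freq = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in term_freq:
                continue
            # Rare terms get a higher inverse document frequency weight.
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1.0)
            # Term frequency saturates via k1; b controls length normalization.
            length_norm = k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * term_freq[term] * (k1 + 1) / (term_freq[term] + length_norm)
        scores.append(score)
    return scores


docs = [
    "the astro sdk is a python package for writing pipelines",
    "airflow schedules and monitors workflows",
    "deploy code to astro with the astro cli",
]
scores = bm25_scores("astro sdk", docs)
best = max(range(len(docs)), key=scores.__getitem__)
print(docs[best])
```

The first document wins here because it contains the rarer term "sdk", even though the third document mentions "astro" twice.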

Hybrid search is particularly advantageous because it lets us use the strengths of both BM25 keyword-based search and embedding-based vector search. The results of the sparse and dense searches are combined through a weighted scoring calculation, which significantly improved the accuracy of our retrieval.
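
One simple way to picture a weighted combination of the two searches is shown below. This is a sketch under stated assumptions rather than Weaviate's actual fusion algorithm: it min-max normalizes each score set and blends them with a hypothetical weight `alpha` (1.0 means vector search only, 0.0 means keyword search only). The document ids and scores are made up for illustration.

```python
from typing import Dict, List


def normalize(scores: Dict[str, float]) -> Dict[str, float]:
    """Min-max normalize scores to [0, 1] so the two searches are comparable."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {doc_id: 1.0 for doc_id in scores}
    return {doc_id: (s - lo) / (hi - lo) for doc_id, s in scores.items()}


def hybrid_scores(
    keyword: Dict[str, float], vector: Dict[str, float], alpha: float = 0.5
) -> Dict[str, float]:
    """Blend normalized keyword and vector scores; missing scores count as 0."""
    kw, vec = normalize(keyword), normalize(vector)
    return {
        doc_id: alpha * vec.get(doc_id, 0.0) + (1 - alpha) * kw.get(doc_id, 0.0)
        for doc_id in set(kw) | set(vec)
    }


# Made-up scores: the keyword search strongly prefers the SDK document,
# while the vector search ranks it only second.
keyword = {"astro-sdk-docs": 7.2, "astro-cli-docs": 3.1}
vector = {"astro-cli-docs": 0.83, "astro-sdk-docs": 0.81, "airflow-intro": 0.80}

fused = hybrid_scores(keyword, vector, alpha=0.5)
ranking: List[str] = sorted(fused, key=fused.get, reverse=True)
print(ranking)  # ['astro-sdk-docs', 'astro-cli-docs', 'airflow-intro']
```

With equal weighting, the strong keyword match lifts the SDK document above the one the vector search alone would have ranked first.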

We also widened the search by retrieving 100 documents for each of the three prompts, which yields a pool of 100 to 300 candidate documents after deduplication. A larger pool matters when the system includes a re-ranking step, for three reasons. First, it gives a broader representation of potentially relevant documents, increasing the likelihood of capturing diverse information related to the prompts. Second, it makes the system more robust to noise or errors in the initial retrieval: a smaller pool is more easily dominated by false positives or irrelevant documents, which leaves the re-ranking phase less leeway. Third, it gives the re-ranker more options to choose from, so it can better discriminate between relevant and non-relevant documents and produce a higher-quality final selection.
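
The pool size follows directly from deduplication, as this small sketch shows. The function name `build_candidate_pool` and the `id` field are hypothetical; the real system may identify duplicates differently.

```python
from typing import Dict, Iterable, List


def build_candidate_pool(results_per_query: Iterable[List[Dict[str, str]]]) -> List[Dict[str, str]]:
    """Merge per-query results, keeping the first occurrence of each document id."""
    seen = set()
    pool = []
    for results in results_per_query:
        for doc in results:
            if doc["id"] not in seen:
                seen.add(doc["id"])
                pool.append(doc)
    return pool


def fake_results(start: int, count: int = 100) -> List[Dict[str, str]]:
    """Hypothetical search results with consecutive ids, for illustration."""
    return [{"id": f"doc-{i}", "content": f"content {i}"} for i in range(start, start + count)]


# Three prompts, 100 results each, with partial overlap between the result sets.
pool = build_candidate_pool([fake_results(0), fake_results(50), fake_results(120)])
print(len(pool))  # 220 unique documents (ids 0 through 219)
```

If all three prompts returned the same documents the pool would hold 100 candidates, and with no overlap at all it would hold 300.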

Refinement with Cohere Rerank

Traditionally, re-ranking, for example with cross-encoders, is a widely used technique in recommender systems for improving the quality of recommendations. It works by filtering a larger pool of candidates down to a smaller collection and adjusting the order in which items are presented to users. Re-ranking can be approached in several ways, such as applying filters, modifying the score returned by the ranking algorithm, or altering the order of the recommended items.

The Cohere Rerank endpoint acts as the final stage of a search flow.

In our RAG chat assistant, the re-ranker plays an integral role. Here the re-ranker is a more capable language model that processes one pair of inputs at a time: the user prompt and the content of a candidate document. It calculates a relevance score for each pair, and this is repeated for each of our 100 to 300 candidate documents. Finally, we retain only the 8 highest-scoring documents, which significantly improves the accuracy of the generated response.
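
The score-each-pair-then-keep-the-top-8 pattern can be sketched as follows. This is not the Cohere Rerank API: in the real system the relevance scores come from the Cohere Rerank endpoint, whereas `overlap_score` here is a deliberately crude, hypothetical stand-in (word overlap) so the example runs offline. The function name `rerank` and the sample documents are also assumptions.

```python
from typing import Callable, List, Tuple


def rerank(
    query: str,
    documents: List[str],
    score_pair: Callable[[str, str], float],
    top_n: int = 8,
) -> List[Tuple[float, str]]:
    """Score every (query, document) pair and keep the top_n highest-scoring documents."""
    scored = [(score_pair(query, doc), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_n]


def overlap_score(query: str, document: str) -> float:
    """Toy relevance score: fraction of query words that appear in the document."""
    query_words = set(query.lower().split())
    doc_words = set(document.lower().split())
    return len(query_words & doc_words) / len(query_words)


# The relevant document sits at the end of the candidate pool before re-ranking.
candidates = [f"unrelated document number {i}" for i in range(19)]
candidates.append("the astro sdk is a python package")

top = rerank("what is the astro sdk", candidates, overlap_score)
print(len(top))   # 8
print(top[0][1])  # the astro sdk is a python package
```

Only the documents that survive this cut are passed on to the answer-generating model.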

Beyond the Stars: Results and Conclusion

To evaluate the accuracy of Ask Astro, we run a set of carefully compiled Astronomer-related questions each time we change the data ingestion or the answer-generating chain. After integrating hybrid search and Cohere Rerank, we evaluated the system against the same dataset. Our most recent evaluation showed an improvement of around 13.5%, measured by the number of questions with notably more accurate responses and more relevant retrieved documents. The previously glaring issues with questions like the Astro SDK example described earlier were all resolved: the answers are now correct, and the most relevant documents are retrieved. A number of other questions also saw more relevant documents retrieved for answer generation. Subjectively, we also felt that the quality of the responses improved across the board.

Cohere Rerank was instrumental in these improvements. It raised the quality of Ask Astro's responses by addressing the earlier problem of irrelevant documents being supplied to the LLM during answer generation, a result of the inconsistent performance of vector similarity search with the embedding model. Together with hybrid search, this integration marks a significant milestone in the evolution of Ask Astro, setting a new standard for answer accuracy and for the relevance of the documents provided to the user as source information.