Ask Astro: An open source LLM Application with Apache Airflow®, Part 2

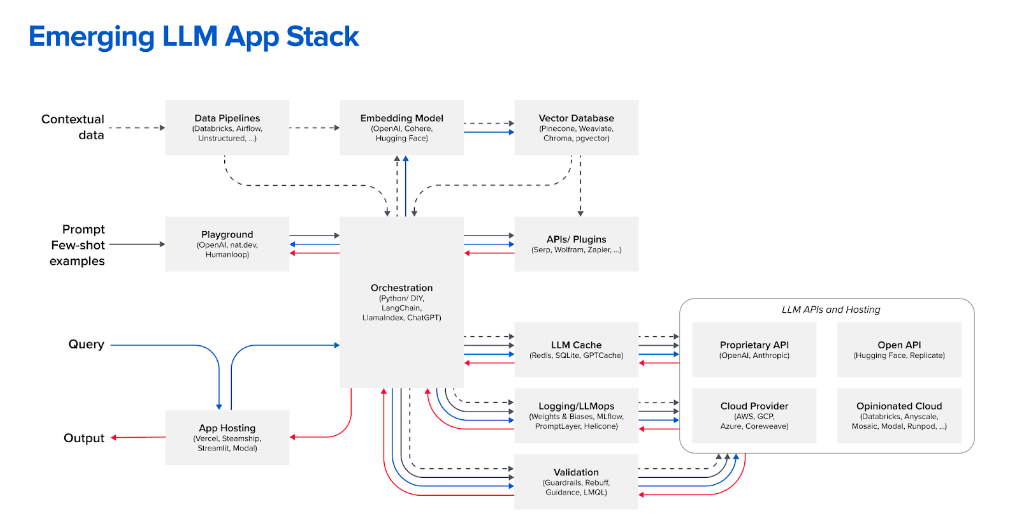

In the excitement surrounding Large Language Models (LLMs), very little is being said about how to operationalize LLM applications and the data pipelines that support them. This blog post is the first in a series of articles chronicling the development and operationalization of an LLM-powered application and shows a real-life example of overcoming the challenges of day-2 operations with LLMs. Astronomer has created a reference implementation of Andreessen Horowitz’s Emerging Architectures for LLM Applications, which this series will describe in detail. We’ll provide a recipe for building a production knowledge interface, along with insights into the synergy between LLM operations and Apache Airflow®.

In this blog post, we'll introduce you to "Ask Astro," an intelligent chatbot powered by LLMs. But this isn't just another chatbot story. This is the story of how Airflow is used to make Airflow more useful.

Meet our Airflow expertise assistant: Ask Astro

“Ask Astro” is an LLM-powered chatbot that, like many enterprise applications, started from a desire to leverage a wealth of knowledge in domain-specific documents.

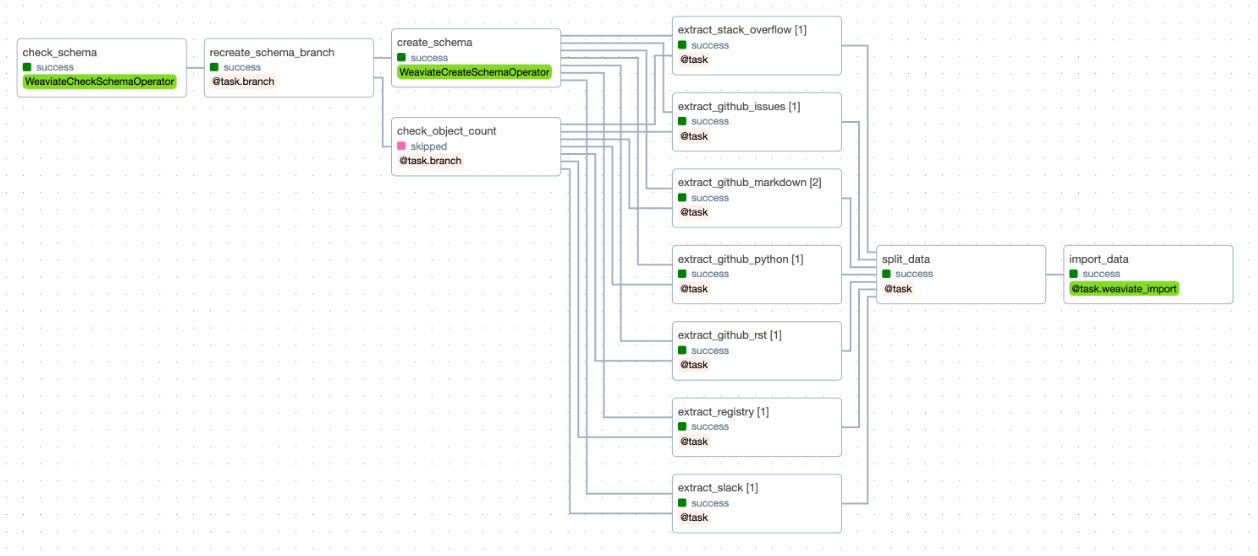

As the commercial entity behind Apache Airflow®, the biggest and most active community in the history of the Apache Software Foundation, the Astronomer team includes some of the most knowledgeable community members. They capture a wealth of information on Airflow and are deeply committed to making Airflow better. The Ask Astro application leverages Retrieval Augmented Generation with documents from GitHub, Stack Overflow, Slack, the Astronomer Registry, and blog posts to build a Weaviate vector store. Weaviate retrieval, along with OpenAI embedding and generative AI models, powers not only a Slackbot but also web-based search applications, Airflow DAG authoring assistance, and more to come.
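To make the Retrieval Augmented Generation pattern concrete, here is a minimal, self-contained sketch of the retrieve-then-prompt flow. It is not the Ask Astro implementation: the `InMemoryVectorStore` class, the bag-of-words `embed` function, and the sample documents are hypothetical stand-ins for Weaviate, an OpenAI embedding model, and the real document sources, chosen so the example runs without any external services.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: a term-count vector (assumed stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database such as Weaviate."""

    def __init__(self):
        self._items = []  # list of (source, text, vector)

    def add(self, source, text):
        self._items.append((source, text, embed(text)))

    def search(self, query, k=2):
        """Return the k stored documents most similar to the query."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[2]), reverse=True)
        return [(source, text) for source, text, _ in ranked[:k]]


def build_prompt(question, store, k=2):
    """Retrieve context, then assemble the prompt a generative model would receive."""
    hits = store.search(question, k)
    context = "\n".join(f"[{source}] {text}" for source, text in hits)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Illustrative documents only; real sources would be ingested by data pipelines.
store = InMemoryVectorStore()
store.add("docs", "A DAG is a collection of tasks with dependencies that Airflow schedules.")
store.add("slack", "Use the TaskFlow API decorator to define tasks as Python functions.")
store.add("blog", "Weaviate stores embedding vectors for similarity search.")

prompt = build_prompt("How do I define tasks as Python functions?", store, k=1)
print(prompt)
```

In a production setup, the ingestion step (`store.add`) is the part that would typically run on a schedule as a data pipeline, while retrieval and prompt assembly happen at question time.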

Ask Astro is more than just another chatbot. We chose to develop the application in the open and regularly post about challenges, ideas, and solutions in order to develop institutional knowledge on behalf of the community. As such, Ask Astro is also:

- A reference implementation: Andreessen Horowitz’s Emerging Architectures for LLM Applications has done a great job of summarizing the state of LLM architectural components. Apache Airflow®, of course, plays a central role in the architecture and Ask Astro is a real-life reference to show how to glue all the various pieces together.

- A conversation starter: We started with some informed opinions about this reference implementation, but we also believe strongly in the power of community development. We are committed to being open and transparent about the hurdles we encountered, how we overcame them, and why we made the choices we did. This is more about jointly growing ecosystem knowledge than about trying to create another “standard” from our opinions or boasting expertise.

- A template: We are also making all resources for Ask Astro available publicly. We believe that competitive advantage comes from leadership and curation. In addition to driving a dialogue around best practices and design principles, the various components of Ask Astro are available as templates. We would love to see broad usage of these templates to help organizations build high-quality, day-2 operations for their LLM applications.

Stay tuned…

Following the overall development process, we will be back with more details on document ingest, embedding and chunking, feedback loops, user interfaces and serving, monitoring, and observability.

Check out the GitHub repository and the demo. Most importantly, tell us what you think. We want to hear about what you are building, your ideas, and your comments.

Other articles in this series