In this webinar video we cover:

- Authoring DAGs that leverage AWS services

- Airflow Connections

- IAM roles vs Access Keys

- Connecting to multiple AWS accounts in a single DAG

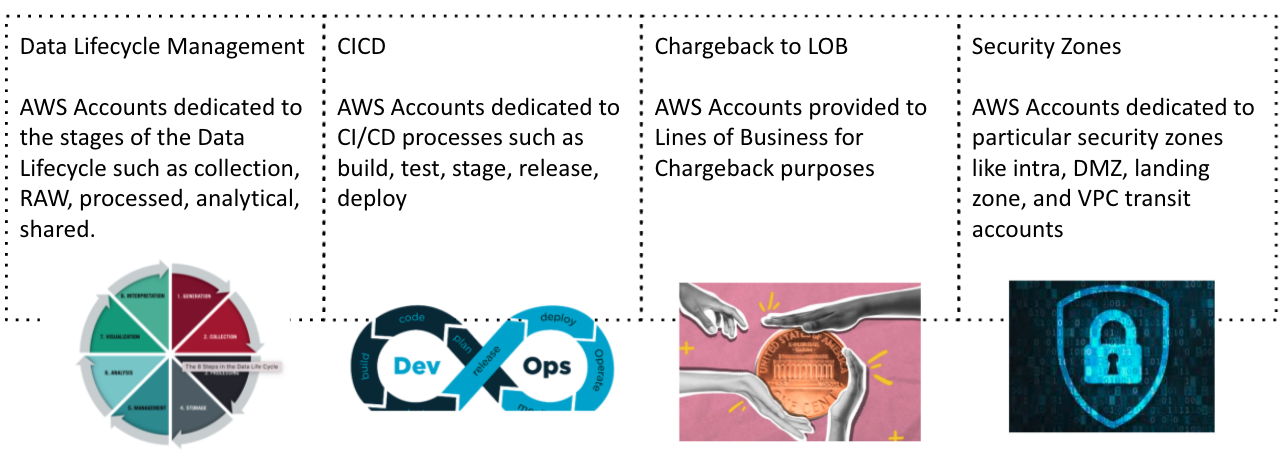

Why Would We Need Multiple AWS Accounts?

In AWS, it’s common for organizations to use multiple AWS accounts, whether to separate Dev, Stage, and Prod environments or to dedicate accounts to individual lines of business (LOBs). What do you do when your data pipeline needs to span AWS accounts? This webinar shows how you can run a single DAG across multiple AWS accounts securely.

DAG Overview

- Astronomer Airflow running on an EKS cluster in a shared-services AWS account (referred to as “AWS Account 3”)

- An EMR job running in an AWS account dedicated to raw data processing (“AWS Account 1”)

- An Athena query running in an AWS account dedicated to data querying (“AWS Account 2”)

- AWS permissions granted to Airflow through IAM cross-account roles, so no access keys or secret access keys are needed (although the same setup can be completed with an IAM user’s access key/secret access key if preferred)

Why are companies writing DAGs that span multiple AWS Accounts?

How to write DAGs that span multiple AWS Accounts

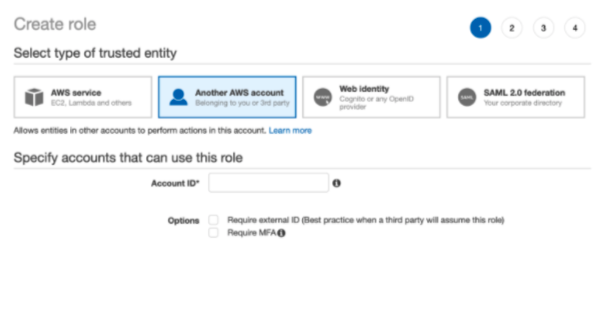

- In both AWS-Account-1 and AWS-Account-2:
  - Create a cross-account IAM role that trusts the account ID of the DS Shared Account (AWS-Account-3, where Airflow runs).
  - Attach an IAM policy to the role granting the appropriate permissions.
  - Make a note of the role ARN.
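For reference, the trust relationship on each cross-account role looks roughly like the document built below. This is a minimal sketch, assuming the whole shared-services account (its `root` principal) is trusted; you could narrow the principal to the specific Airflow role ARN instead. The helper name and the account ID are hypothetical placeholders.

```python
import json


def cross_account_trust_policy(trusted_account_id: str) -> dict:
    """Trust policy letting principals in `trusted_account_id` assume this role (hypothetical helper)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # Trusting the account root delegates the decision to that account's IAM:
                # only its principals that are themselves allowed sts:AssumeRole can use the role.
                "Principal": {"AWS": f"arn:aws:iam::{trusted_account_id}:root"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # 333333333333 is a placeholder for AWS-Account-3 (the Airflow account).
    print(json.dumps(cross_account_trust_policy("333333333333"), indent=2))
```

The printed JSON can be pasted into the role's trust relationship, or passed as `AssumeRolePolicyDocument=json.dumps(...)` to boto3's `iam.create_role`.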

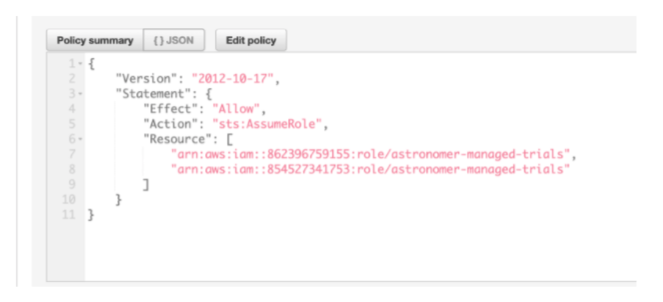

- In AWS-Account-3 (the Airflow account):
  - Find the IAM role that Airflow will be running as.
  - Attach an IAM policy granting permission to assume the cross-account roles (`sts:AssumeRole`).
  - In the policy's Resource field, list the ARNs of the cross-account roles created in AWS-Account-1 and AWS-Account-2.
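The identity policy attached to Airflow's role in AWS-Account-3 might look like the following. This is a sketch under assumptions: the helper name and the role ARNs are hypothetical placeholders for the ARNs you noted in AWS-Account-1 and AWS-Account-2.

```python
from __future__ import annotations

import json


def assume_cross_account_roles_policy(role_arns: list[str]) -> dict:
    """Identity policy for Airflow's own role in AWS-Account-3 (hypothetical helper)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sts:AssumeRole",
                # Only the cross-account roles listed here may be assumed.
                "Resource": list(role_arns),
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder ARNs for the roles noted in AWS-Account-1 and AWS-Account-2.
    arns = [
        "arn:aws:iam::111111111111:role/airflow-emr-access",
        "arn:aws:iam::222222222222:role/airflow-athena-access",
    ]
    print(json.dumps(assume_cross_account_roles_policy(arns), indent=2))
```

Listing explicit ARNs in `Resource`, rather than `*`, keeps Airflow from assuming any other role that happens to trust the shared-services account.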

Which IAM Role does Airflow Run as before assuming a role?

It depends on a few factors:

- If Airflow is running on standard AWS infrastructure (EC2), the default role is the role attached to the EC2 instance running Airflow.

- If Airflow is running on EKS, the default role is the role attached to the EC2 worker nodes that the EKS cluster runs on.

- The default role can be overridden by providing an access key/secret access key in the AWS connection.

- If Airflow is running outside AWS (e.g., on-prem), an access key is likely required.
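To confirm which identity Airflow actually resolves, you can call STS `GetCallerIdentity` from a scheduler or worker. The helpers below are a hypothetical sketch, not part of Airflow: `current_identity` needs boto3 and working credentials, while `describe_caller_arn` only parses the returned ARN so you can compare the role name with the role you attached the AssumeRole policy to.

```python
from __future__ import annotations


def describe_caller_arn(arn: str) -> tuple[str, str]:
    """Split an STS GetCallerIdentity ARN into (kind, name)."""
    # ARN layout: arn:partition:service:region:account-id:resource
    resource = arn.split(":", 5)[5]
    if resource.startswith("assumed-role/"):
        # assumed-role/<role-name>/<session-name>, e.g. an EC2 instance or node role
        return "role", resource.split("/")[1]
    if resource.startswith("user/"):
        # user/<optional-path>/<user-name>, e.g. when access keys are configured
        return "user", resource.rsplit("/", 1)[1]
    return "other", resource


def current_identity() -> tuple[str, str]:
    """Ask STS which identity boto3 resolves here (requires boto3 and credentials)."""
    import boto3

    arn = boto3.client("sts").get_caller_identity()["Arn"]
    return describe_caller_arn(arn)
```

On an EKS node without pod-level IAM roles, you would expect a result like `("role", "<node-role-name>")`.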

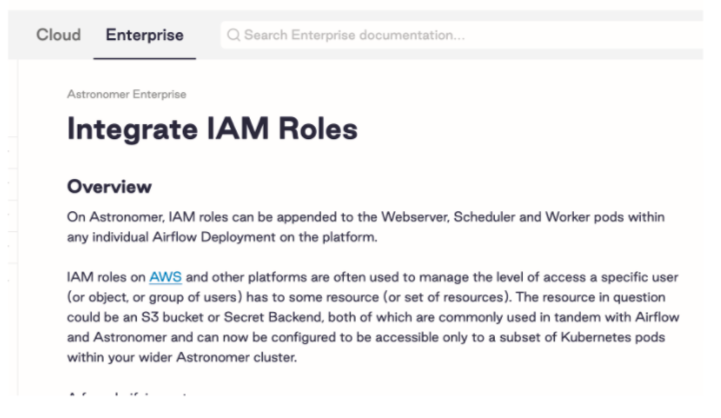

Astronomer Enterprise - Additional options for IAM on EKS

- With Astronomer Enterprise, you have additional options for providing specific IAM roles to specific pods running Airflow.

- This allows customers to assign IAM roles granularly to individual Airflow deployments, even as those deployments grow and shrink with auto-scaling.

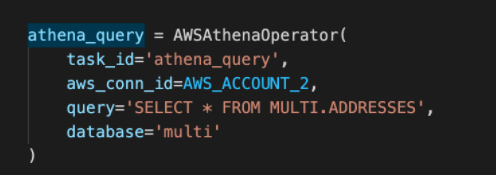

- In Airflow:
  - Under Admin > Connections, create a new connection.
  - In the Extra field, provide the role ARN of the cross-account role created in AWS-Account-1.
  - Repeat these steps for AWS-Account-2.
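Instead of the UI, an Airflow connection can also be supplied through an `AIRFLOW_CONN_<CONN_ID>` environment variable. A minimal sketch, assuming Airflow 2.3+ (which accepts JSON-serialized connections in these variables) and the Amazon provider's `role_arn` and `region_name` extra keys; the connection IDs, account IDs, role names, and region are hypothetical.

```python
import json


def aws_role_connection(conn_id: str, role_arn: str, region_name: str) -> tuple:
    """Return (env var name, JSON value) for an AWS connection that assumes `role_arn`."""
    connection = {
        "conn_type": "aws",
        # The Amazon provider reads these keys from the connection's Extra field.
        "extra": {"role_arn": role_arn, "region_name": region_name},
    }
    return f"AIRFLOW_CONN_{conn_id.upper()}", json.dumps(connection)


if __name__ == "__main__":
    for conn_id, arn in [
        ("aws_account_1", "arn:aws:iam::111111111111:role/airflow-emr-access"),
        ("aws_account_2", "arn:aws:iam::222222222222:role/airflow-athena-access"),
    ]:
        name, value = aws_role_connection(conn_id, arn, "us-east-1")
        print(f"export {name}='{value}'")
```

Tasks would then refer to these as `aws_conn_id="aws_account_1"` and `aws_conn_id="aws_account_2"`.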

- In DAGs:
  - Reference the appropriate AWS account in each task.
  - For most AWS operators, this is done with the `aws_conn_id` parameter.
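When a task's `aws_conn_id` points at a connection with a `role_arn`, the Amazon provider's hook obtains temporary credentials for the target account through STS AssumeRole. The sketch below roughly approximates that step with plain boto3; it is not the provider's actual code, and the helper and session names are assumptions. The STS client can be injected, so the function can be exercised without AWS access.

```python
def assumed_role_credentials(role_arn: str, session_name: str, sts_client=None) -> dict:
    """Call STS AssumeRole and return keyword arguments for boto3.Session."""
    if sts_client is None:
        import boto3  # only needed when no client is injected

        # Uses the default identity (e.g. the EC2/EKS node role) to call STS.
        sts_client = boto3.client("sts")
    response = sts_client.assume_role(RoleArn=role_arn, RoleSessionName=session_name)
    creds = response["Credentials"]
    # Temporary credentials scoped to the cross-account role; they expire at creds["Expiration"].
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }
```

For example, `boto3.Session(region_name="us-east-1", **assumed_role_credentials(arn, "airflow-athena")).client("athena")` would give an Athena client acting inside AWS-Account-2, assuming `arn` is that account's cross-account role.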

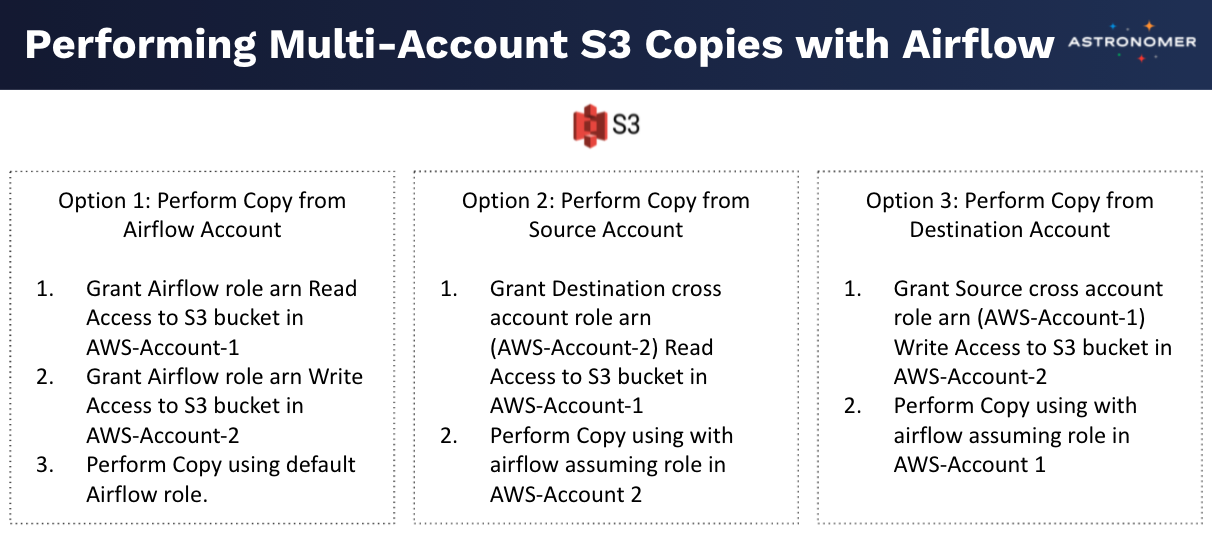

What about copying data between S3 buckets in two different AWS accounts?

What if we’d prefer to use Access Keys instead of Cross Account Roles?

Access Key Method

- In both DSC (account 1) and Pre-Prod (account 2):
  - Get an access key/secret access key for a specific IAM user.
  - Ensure the IAM policy assigned to the user grants the appropriate permissions in AWS.

- In Airflow:
  - Under Admin > Connections, create a new connection.
  - Paste the access key ID into the Login field.
  - Paste the secret access key into the Password field.
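The same access-key connection can be expressed as an `AIRFLOW_CONN_*` environment variable, with the access key ID as `login` and the secret as `password`. A minimal sketch assuming the Airflow 2.3+ JSON connection format; the connection ID, key values, and region are placeholders.

```python
import json


def aws_key_connection(conn_id: str, access_key_id: str, secret_access_key: str, region_name: str) -> tuple:
    """Return (env var name, JSON value) for an AWS connection using static keys."""
    connection = {
        "conn_type": "aws",
        "login": access_key_id,  # Login field: access key ID
        "password": secret_access_key,  # Password field: secret access key
        "extra": {"region_name": region_name},
    }
    # In practice, load real keys from a secrets backend rather than hard-coding them.
    return f"AIRFLOW_CONN_{conn_id.upper()}", json.dumps(connection)
```

Compared with the cross-account role approach, these keys are long-lived, so they need to be rotated and stored carefully.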
