Introducing Cosmos 1.0: the best way to run dbt Core in Airflow


Note: Since this post was first published in July 2023, thousands of data teams have adopted Cosmos and it is now downloaded over 1.3 million times per month.

Additionally, you can now deploy dbt projects to Astro, our managed data platform, significantly reducing the complexity and fragmentation associated with handling multiple data tools.

Apache Airflow®, often hailed as the “Swiss Army knife” of data engineering, is an open-source platform for creating, scheduling, and monitoring complex data pipelines. A typical pipeline extracts, transforms, and then loads data, which is where the name “ETL” comes from. Airflow has, and always will have, strong support for each of these operations through its rich ecosystem of providers and operators.

In recent years, dbt Core has emerged as a popular transformation tool in the data engineering and analytics communities. And this is for good reason: it offers users a simple, intuitive SQL interface backed by rich functionality and software engineering best practices. Many data teams have adopted dbt to support their transformation workloads, and dbt’s popularity is fast-growing.

The best part: Airflow and dbt are a match made in heaven. dbt equips data analysts with the right tool and capabilities to express their transformations. Data engineers can then take these dbt transformations and schedule them reliably with Airflow, putting the transformations in the context of upstream data ingestion. By using them together, data teams can have the best of both worlds: dbt’s analytics-friendly interfaces and Airflow’s rich support for arbitrary Python execution and end-to-end state management of the data pipeline.

Airflow and dbt: a short history

Despite the obvious benefit of using dbt to run transformations in Airflow, there has not been a method of running dbt in Airflow that’s become ubiquitous. For a long time, Airflow users would use the BashOperator to call the dbt CLI and execute a dbt project. While this worked, it never felt like a complete solution - with this approach, Airflow has no visibility into what it’s executing, and thus treats the dbt project like a black box. When dbt models fail, Airflow doesn’t know why; a user has to spend time manually digging through logs and hopping between systems to understand what happened. When the issue is fixed, the entire project has to be restarted, wasting time and compute re-running models that have already been run successfully. There are also Python dependency conflicts between Airflow and dbt that make getting dbt installed in the same environment very challenging.

In 2020, Astronomer partnered with Updater to release a series of three blog posts on integrating dbt projects into Airflow in a more “Airflow-native” way by parsing dbt’s manifest.json file and constructing an Airflow DAG as part of a CI/CD process. This was certainly a more powerful approach and solved some of the growing pains called out in the initial blog post. However, this approach was not scalable: an end user would download code (either directly from the blog post, or from a corresponding GitHub repository) and manage it themselves. As improvements or bug fixes were made to the code, there was no way to push updates to end users. Similarly, the code was somewhat opinionated: it worked very well for the use case it solved, but if you wanted to do something more complex or tailored to your use case, you were better off writing your own solution.
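To make the manifest-parsing idea concrete, here is a minimal sketch, using only the Python standard library, of how model dependencies might be read from a manifest.json and turned into a run order. The sample manifest is a hand-written fragment with jaffle_shop-style names, and the helper names `model_dependencies` and `run_order` are hypothetical; this is not the code from the blog series.

```python
from graphlib import TopologicalSorter

# Hand-written fragment shaped like dbt's manifest.json (illustrative only).
SAMPLE_MANIFEST = {
    "nodes": {
        "model.jaffle_shop.stg_customers": {
            "resource_type": "model",
            "depends_on": {"nodes": ["seed.jaffle_shop.raw_customers"]},
        },
        "model.jaffle_shop.stg_orders": {
            "resource_type": "model",
            "depends_on": {"nodes": ["seed.jaffle_shop.raw_orders"]},
        },
        "model.jaffle_shop.customers": {
            "resource_type": "model",
            "depends_on": {
                "nodes": [
                    "model.jaffle_shop.stg_customers",
                    "model.jaffle_shop.stg_orders",
                ]
            },
        },
    }
}


def model_dependencies(manifest: dict) -> dict:
    """Map each model's unique ID to the set of model IDs it depends on."""
    models = {
        node_id: node
        for node_id, node in manifest["nodes"].items()
        if node["resource_type"] == "model"
    }
    # Keep only model-to-model edges; seeds and sources are ignored here.
    return {
        node_id: {dep for dep in node["depends_on"]["nodes"] if dep in models}
        for node_id, node in models.items()
    }


def run_order(manifest: dict) -> list:
    """Return model IDs in an order that respects their dependencies."""
    return list(TopologicalSorter(model_dependencies(manifest)).static_order())
```

Each entry in the dependency map corresponds to one Airflow task and its upstream tasks, which is what lets an orchestrator rerun a single failed model instead of the whole project.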

Cosmos: the next generation of running dbt in Airflow

Our team has worked with countless customers to set up Airflow and dbt. At a company-wide hackathon in December, we decided to materialize our experience in the form of an Airflow provider. We gave it a fun, Astronomer-themed name - Cosmos - and because of overwhelming demand from our customers and the open source community, we’ve decided to continue developing the original idea and take it from hack week project to production-ready package. Cosmos, which is Apache 2.0 licensed, can be used with Airflow 2.3 or greater. In just the last 6 months it’s grown to 17,000 downloads per month, almost 200 GitHub stars, and 35+ contributors from Astronomer, Cosmos users, and the entire Airflow community.

Today, we’re shipping our first stable release, 1.0, and we feel strongly that Cosmos is the best way to run Airflow and dbt together.

It’s powerful. Seriously.

“With Cosmos, we could focus more on the analytical aspects and less on the operational overhead of coordinating tasks. The ability to achieve end-to-end automation and detailed monitoring within Airflow significantly improved our data pipeline’s reliability, reproducibility, and overall efficiency.”

- Péter Szécsi, Information Technology Architect, Data Engineer, Hungarian Post

Airflow is an extremely powerful and fully-functional orchestrator, and one of the driving principles behind Cosmos is to take full advantage of that functionality.

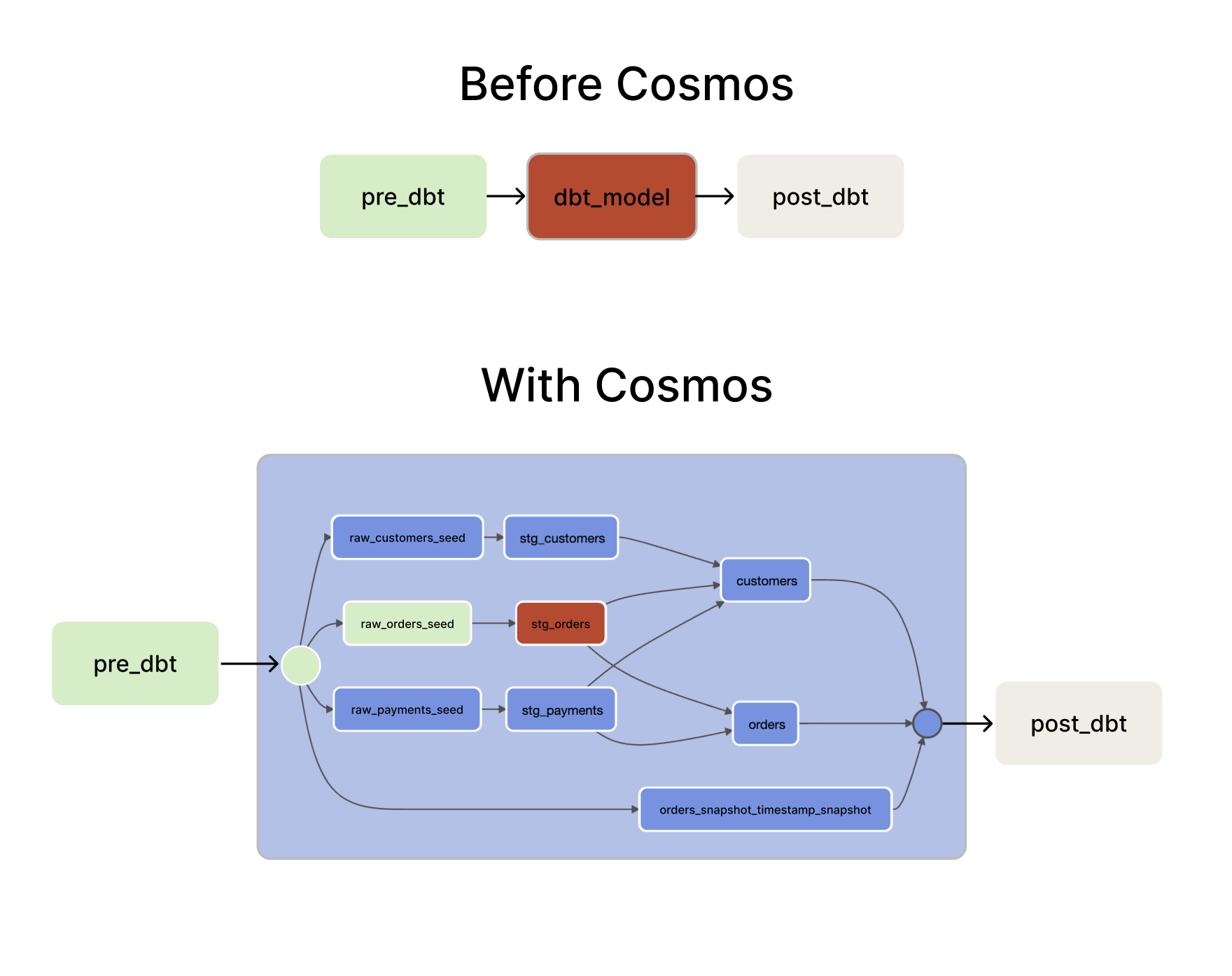

To do so, Airflow needs to understand what it’s executing. Cosmos gives Airflow that visibility by expanding your dbt project into a Task Group (using the DbtTaskGroup class) or a full DAG (using the DbtDag class). If one of your dbt models fails, you can immediately drill into the specific task that corresponds to the model, troubleshoot the issue, and retry the model. Once the model is successful, your project continues running as if nothing happened.

We’ve also built a tight coupling with Airflow’s connection management functionality. dbt requires a user to supply a profiles.yml file on execution with credentials to connect to your database. However, most of the time, Airflow is already interacting with that database through an Airflow connection. Cosmos will translate your Airflow connection into a dbt profile at runtime, meaning that you don’t have to manage two separate sets of credentials. You can also take advantage of secrets backends to manage your dbt profiles this way!
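As a rough illustration of the idea (not Cosmos’s actual implementation), the translation can be thought of as a field-by-field mapping from connection attributes to the keys dbt expects in a Postgres profile. The `ConnectionInfo` class and `to_dbt_profile` function below are hypothetical stand-ins introduced for this sketch; the output keys follow the layout of a dbt profiles.yml for Postgres.

```python
from dataclasses import dataclass


@dataclass
class ConnectionInfo:
    """Hypothetical stand-in for the fields of an Airflow Postgres connection."""

    host: str
    login: str
    password: str
    port: int
    database: str


def to_dbt_profile(
    conn: ConnectionInfo, profile_name: str, target_name: str, schema: str
) -> dict:
    """Build a dict with the same shape as a dbt profiles.yml for Postgres."""
    return {
        profile_name: {
            "target": target_name,
            "outputs": {
                target_name: {
                    "type": "postgres",
                    "host": conn.host,
                    "user": conn.login,  # dbt calls the login "user"
                    "password": conn.password,
                    "port": conn.port,
                    "dbname": conn.database,
                    "schema": schema,  # not stored on the connection in this sketch
                }
            },
        }
    }


# Example values are placeholders, not real credentials.
example_conn = ConnectionInfo(
    host="localhost", login="airflow", password="secret", port=5432, database="analytics"
)
example_profile = to_dbt_profile(example_conn, "my_profile", "my_target", "public")
```

The point of the design is that credentials live in one place: the connection is the source of truth, and the profile is derived from it each time dbt runs.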

It’s easy to use

“Astronomer Cosmos has allowed us to seamlessly orchestrate our dbt projects using Apache Airflow® for our start-up. The ability to render dbt models as individual tasks and run tests after a model has been materialized has been valuable for lineage tracking and verifying data quality. I was impressed with how quickly we could take our existing dbt projects and set up an Airflow DAG using Cosmos.”

- Justin Bandoro, Senior Data Engineer at Kevala Inc.

Running your dbt projects in Airflow shouldn’t be difficult; it should “just work”. Cosmos is designed to be a drop-in replacement for your current dbt Airflow tasks. All you need to do is import the class from Cosmos, point it at your dbt project, and tell it where to find credentials. That’s it! In most cases, it takes fewer than 10 lines of code to set up.

Here’s an example that uses Cosmos to render dbt’s jaffle_shop (their equivalent of a “hello world”) project and execute it against an Airflow Postgres connection:

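```python
from cosmos import DbtTaskGroup, ProfileConfig, ProjectConfig
from cosmos.profiles import PostgresUserPasswordProfileMapping

# then, in your DAG
jaffle_shop = DbtTaskGroup(
    # where the dbt project lives on disk
    project_config=ProjectConfig("/path/to/jaffle_shop"),
    profile_config=ProfileConfig(
        profile_name="my_profile",
        target_name="my_target",
        # build the dbt profile from the Airflow connection "my_postgres_dbt"
        profile_mapping=PostgresUserPasswordProfileMapping(
            conn_id="my_postgres_dbt",
            profile_args={"schema": "public"},
        ),
    ),
)
```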

While it’s easy to set up, Cosmos is also extremely flexible and can be customized to your specific use case. There’s a whole set of configuration options you can explore, and we’re actively adding more. For example, you can break up your dbt project into multiple sub-projects based on tags, configure tests to run after each model so you don’t run extra queries if the tests fail early, and run your dbt models using Kubernetes or Docker for extra isolation.

Most importantly, Cosmos has native support for executing dbt in a Python virtual environment. If you’ve tried to set up Airflow and dbt together, chances are you’ve experienced dependency hell. Airflow and dbt share Python requirements (looking at you, Jinja) and they’re typically not compatible with each other; Cosmos solves this by letting you install dbt into a virtual environment and execute your models using that environment.
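The isolation idea can be sketched with the standard library alone: dbt is installed into its own virtual environment, and the orchestrator invokes that environment’s dbt executable rather than importing dbt into its own process. The paths and the helper names `dbt_executable` and `dbt_run_command` are assumptions made for this example, the layout assumes a POSIX-style virtual environment, and this is not how Cosmos is implemented internally.

```python
from pathlib import Path


def dbt_executable(venv_dir: Path) -> Path:
    """Path to the dbt entry point inside a virtual environment.

    Assumes a POSIX layout, where executables live under <venv>/bin.
    """
    return venv_dir / "bin" / "dbt"


def dbt_run_command(venv_dir: Path, project_dir: Path, model: str) -> list:
    """Build the command that runs a single model with the isolated dbt."""
    return [
        str(dbt_executable(venv_dir)),
        "run",
        "--project-dir",
        str(project_dir),
        "--select",
        model,
    ]


# Hypothetical locations, used only to show the shape of the command.
command = dbt_run_command(
    Path("/opt/dbt_venv"), Path("/path/to/jaffle_shop"), "customers"
)
```

Passing `command` to `subprocess.run` would execute the model with the virtual environment’s own copies of dbt and Jinja, so neither has to agree with the versions Airflow needs.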

And it runs better on Astro!

“Cosmos has sped up our adoption of Astro for orchestrating our System1 Business Intelligence dbt Core projects without requiring deep knowledge of Airflow. We found the greatest time-saver in using the Cosmos DbtTaskGroup, which dynamically creates Airflow tasks while maintaining the dbt model lineage and dependencies that we already defined in our dbt projects.”

- Karen Connelly, Senior Manager, Business Intelligence at System1

While Cosmos works well wherever Airflow’s running - whether that’s on Astro, another managed Airflow service, or open-source Airflow - it runs extraordinarily well on Astro. With Astro’s lineage platform, Astro users can take advantage of the dbt OpenLineage integration without doing any extra work. You can use Astro to understand the relationships between your dbt models, Airflow tasks, and Spark jobs, which becomes increasingly important as your data team and ecosystem grow.

Astro users also get to take advantage of DAG-based deploys with their dbt projects to deploy new changes very quickly, set up alerting to be notified immediately if a dbt model fails or takes too long, and create virtual environments easily with the Astro CLI.

And finally, for Astro users running our hybrid, task-based pricing model, we’re only charging one task run per Cosmos Task Group/DAG run. This way, you can run your dbt models on Airflow using Cosmos without worrying about the price implications of running Airflow tasks per dbt model.

Cosmos is the culmination of years of experience working with dbt, and we truly feel that it’s the right way to run dbt with Airflow. It is the successor to the existing methods of running dbt in Airflow because it’s both easier to use and more functional.

| | BashOperator | Manifest Parsing | Cosmos |
|---|---|---|---|
| Ease of Use | Import and use the BashOperator | Download code and manage it yourself | Import and use the DbtTaskGroup or DbtDag class |
| Observability | One task per project | One task per model | Two tasks per model: one for the model, one for the tests |
| Retries | Can only rerun the entire project | Can rerun individual models | Can rerun individual models |
| Python Dependency Management | Have to install dbt Core into your Airflow environment | Have to install dbt Core into your Airflow environment | Can install dbt Core into a virtual environment and execute models using that environment |
| External Dependencies | Need to manually combine with data-driven scheduling | Need to manually combine with data-driven scheduling | Data-driven scheduling is built-in |
| Connection Management | Need to manage Airflow connections and dbt Profiles | Need to manage Airflow connections and dbt Profiles | Airflow connections are automatically translated into dbt Profiles |

This is just the beginning for Cosmos, and we hope you’re as excited as we are about the direction the product is heading. If you want to get started right away, check out our Learn resources on how to use Cosmos and head over to a free trial on Astro.

Don’t forget to star and watch the repository so you stay up to date with new releases!

–

I’d like to thank the entire Astronomer team for their help in building Cosmos. While the initial idea was born out of a hackathon project, the entire team has been instrumental in building Cosmos into what it is today - particularly Chris Hronek, Tatiana Al-Chueyr, Harel Shein, and Abhishek Mohan. I’d also like to thank the dbt community for their support and feedback on Cosmos. We’re excited to continue building Cosmos and making it the best way to run dbt in Airflow.