Introducing Airflow 2.6

Apache Airflow® 2.6 was released earlier this week, just a few months after Airflow 2.5. Both releases build on features started in earlier versions while also introducing brand new concepts. This release is a milestone for the community: it contains over 500 commits from over 130 contributors, adding up to 35 new features, 50 general improvements, and 27 bug fixes. It also coincides with another milestone for the project, surpassing 30K GitHub stars.

It would be remiss not to mention that in addition to the 2.6 release, the community also put out a new providers release which contains, among other things, an official Kafka Provider.

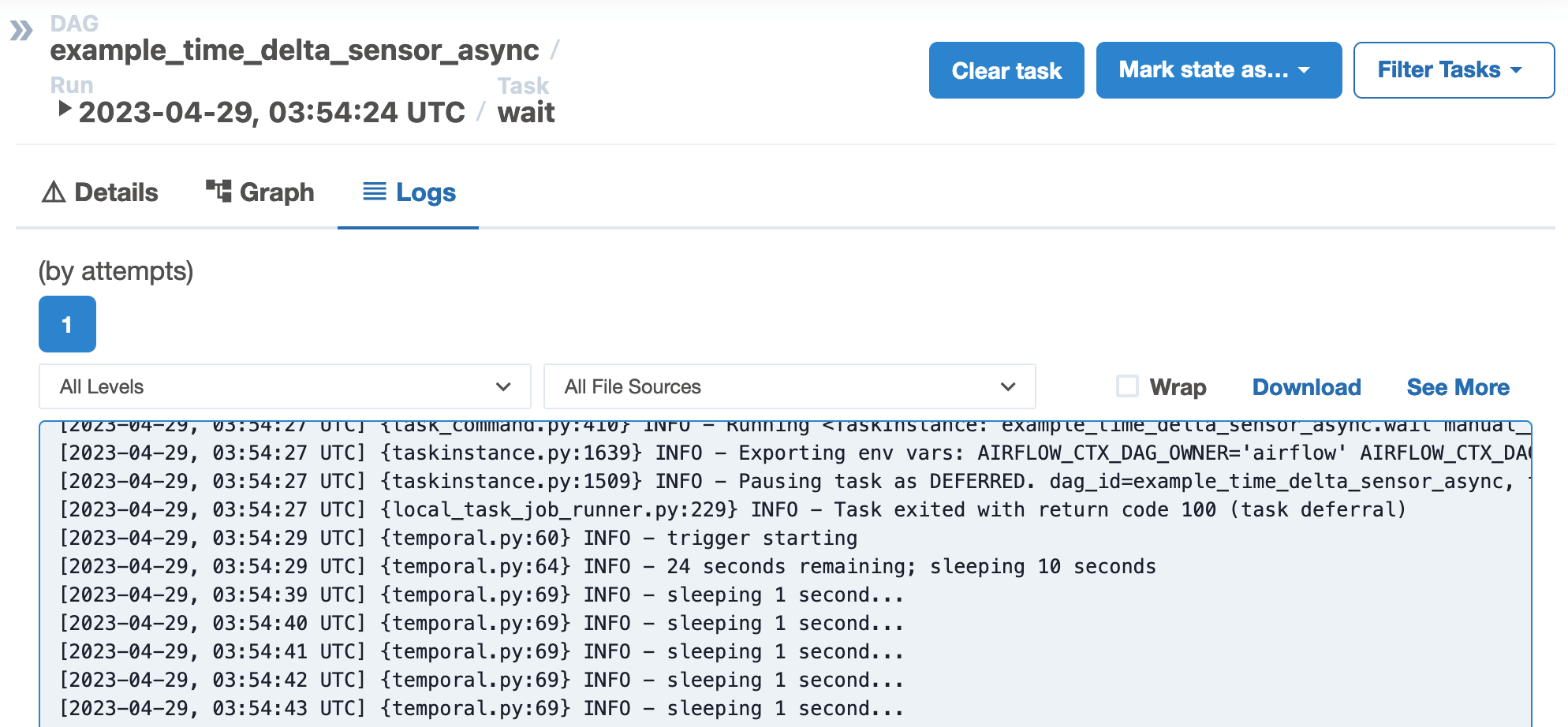

Consolidated Trigger Logs in the UI

Airflow 2.2 introduced deferrable tasks and added a new component to Airflow, the triggerer. The triggerer monitors tasks that have entered the deferred state and vacated their worker slot, which results in cost savings and more efficient infrastructure utilization. Previously, the triggerer logs lived outside of the task logs, so users had to switch between views when debugging tasks built with deferrable operators.

Airflow 2.6 brings the triggerer logs into the UI, right alongside the task logs, resulting in a much cleaner debugging experience.
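For readers new to deferral, here is a minimal sketch of the idea behind the triggerer: many waiting tasks can share one asynchronous event loop instead of each holding a worker slot. This is not Airflow code. The names `wait_trigger` and `triggerer` and the delays are hypothetical, chosen only for illustration.

```python
import asyncio


async def wait_trigger(name, delay):
    """Hypothetical trigger: waits without blocking, then reports which task is ready."""
    await asyncio.sleep(delay)
    return name


async def triggerer(triggers):
    """A single event loop watches every deferred task concurrently."""
    return await asyncio.gather(*(wait_trigger(n, d) for n, d in triggers))


# Two "deferred tasks" wait at the same time inside one loop.
fired = asyncio.run(triggerer([("task_a", 0.02), ("task_b", 0.01)]))
print(fired)
```

Because the waiting happens in a separate process from the worker, anything logged while waiting ends up in a different place than the task's own logs, which is the gap the consolidated view closes.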

Notifiers: A New, Extensible Way to Add Notifications

Airflow’s on_*_callback parameters allow DAGs to execute a Python callable based on the state of a DAG or task (e.g. on_failure_callback, on_success_callback). These parameters are a natural place for custom logic that sends notifications about the state of a DAG to email, Slack, or other systems.

Historically, these callbacks were generally written in an ad-hoc way. They also often required re-implementing an integration with a system (Slack, email, Teams, etc.) for which Airflow already had a pre-built integration (Provider), because that integration couldn’t intuitively be used within the scope of the callback.

Notifiers introduce a new object in Airflow, designed to be an extensible layer for adding notifications to DAGs. Users can build notification logic from a new base object, and call it directly from their DAG files.

For example, a notifier class might look something like this:

```python
from airflow.notifications.basenotifier import BaseNotifier
from my_provider import send_message


class MyNotifier(BaseNotifier):
    template_fields = ("message",)

    def __init__(self, message):
        self.message = message

    def notify(self, context):
        # Send notification here, below is an example
        title = f"Task {context['task_instance'].task_id} failed"
        send_message(title, self.message)
```

This can now be re-used in DAGs:

```python
from airflow import DAG
from airflow.operators.bash import BashOperator
from myprovider.notifier import MyNotifier
from datetime import datetime

with DAG(
    dag_id="example_notifier",
    start_date=datetime(2022, 1, 1),
    schedule_interval=None,
    # DAG-level notifications
    on_success_callback=MyNotifier(message="Success!"),
    on_failure_callback=MyNotifier(message="Failure!"),
):
    task = BashOperator(
        task_id="example_task",
        bash_command="exit 1",
        # Task-level notification
        on_success_callback=MyNotifier(message="Task Succeeded!"),
    )
```

Both of these can be found in the official Airflow docs.
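It may help to see why a notifier instance can be passed where a callback function is expected. The sketch below is a simplified stand-in, not Airflow's `BaseNotifier`: it assumes only that the callback is invoked as `callback(context)` and that the instance forwards that call to `notify`. The names `SketchNotifier` and `PrintNotifier` are hypothetical, and the sketch skips the template rendering that `template_fields` implies.

```python
class SketchNotifier:
    """Hypothetical stand-in for a notifier base class; not Airflow's BaseNotifier."""

    def __call__(self, context):
        # Callbacks are invoked as callback(context); forwarding to notify()
        # lets an instance be used wherever a plain callable is expected.
        self.notify(context)

    def notify(self, context):
        raise NotImplementedError


class PrintNotifier(SketchNotifier):
    """Hypothetical notifier that prints instead of calling an external system."""

    def __init__(self, message):
        self.message = message
        self.sent = []

    def notify(self, context):
        line = f"{context['dag_id']}: {self.message}"
        self.sent.append(line)
        print(line)


# The instance is configured once, then called later with the run's context.
on_failure_callback = PrintNotifier(message="Failure!")
on_failure_callback({"dag_id": "example_notifier"})
```

The design choice this illustrates is that configuration (the message, a connection ID, and so on) lives on the instance, while the run-specific context arrives at call time.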

Users can also create their own notifiers:

```python
from airflow.notifications.basenotifier import BaseNotifier


# all custom notifiers should inherit from the BaseNotifier
class MyS3Notifier(BaseNotifier):
    """A notifier that writes a text file to an S3 bucket containing a string with
    information about the DAG and task sending the notification, as well as
    the task status and a message.

    :param message: The message to be written into the uploaded text file.
    :param bucket_name: The name of the S3 bucket the notification file is written to.
    :param s3_key_notification: Key of the file into which the notification
        information should be written; it has to be able to receive string content.
    :param aws_conn_id: The connection ID used for the connection to AWS.
    :param include_metadata: Whether to include file metadata in the notification.
    :param s3_key_metadata: The full S3 key from which to retrieve file metadata.
    """

    template_fields = (
        "message",
        "bucket_name",
        "s3_key_notification",
        "aws_conn_id",
        "include_metadata",
        "s3_key_metadata",
    )
```

Full example here.

It’s going to be incredible to see what notifiers the community creates over the next few months and how users will fold them into their DAGs.

No More Tasks Stuck in Queued

“Why is my task stuck in queued?” is a question almost every Airflow user has had to ask themselves. The “stuck queued task” can be difficult to debug, and it can result in missed data SLAs and incorrect numbers on dashboards. Furthermore, because the task would be “stuck” in the queued state, users would often not even know there was a problem.

Airflow 2.6 introduces a consolidated task_queued_timeout parameter that can be used regardless of executor or environment. The new parameter fails a task if it stays in the queued state past a configured amount of time, allowing the user’s retry and failure logic to kick in (read more about it from the PR’s author here).
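The check itself is simple to picture. The following is a minimal sketch of the idea, not Airflow's scheduler code: `QueuedTask` and `find_timed_out` are hypothetical names, and the 600-second timeout is an assumed value used only for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class QueuedTask:
    """Hypothetical stand-in for a task instance sitting in the queued state."""

    task_id: str
    queued_at: datetime


def find_timed_out(tasks, now, task_queued_timeout):
    """Return the tasks that have been queued longer than the timeout (in seconds)."""
    cutoff = now - timedelta(seconds=task_queued_timeout)
    return [t for t in tasks if t.queued_at < cutoff]


now = datetime(2023, 5, 1, 12, 0, 0)
tasks = [
    QueuedTask("fresh_task", now - timedelta(seconds=30)),
    QueuedTask("stuck_task", now - timedelta(seconds=900)),
]

# Anything returned here would be failed, handing control to retries and callbacks.
stuck = find_timed_out(tasks, now, task_queued_timeout=600)
print([t.task_id for t in stuck])
```

Failing the task rather than leaving it queued is what turns a silent problem into a visible one: once the task fails, the usual retry and failure callbacks apply.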

Taking the Grid View to the Next Level

Almost a year ago, Airflow 2.3 introduced the Grid View, a much more intuitive interface to debug, monitor, and troubleshoot DAG runs over time. With the release of 2.6, the Grid View now shows the DAG graph, allowing users to see and interact with the shape of their DAG across subsequent DAG runs.

A lot can be said about how helpful this is, but a picture (gif) is worth a thousand words.

So Much More

All of this only begins to scratch the surface of what’s in Airflow 2.6. It doesn’t even include:

- A new Continuous Timetable, a perfect fit for that situation where “a DAG should always be running”

- A UI for triggering based on DAG-level params

- CLI commands for testing connections and getting DAG details

- Performance improvements made by preloading imports

Learn More and Get Started

Although 2.6 was just released, there’s already work underway for follow-up 2.6 releases (2.6.1, 2.6.2, etc.), as well as 2.7 and beyond. If you’re looking to learn more, we’ll be doing a What’s New in Airflow 2.6 webinar on May 9th where we’ll dive deeper into the new features. If you’re looking for an easy way to get your hands on the 2.6 release, you can get started with the Astro CLI. And for 2.6-specific examples, head over to this repo.

And as always, if you want some help upgrading to 2.6 or with your current Airflow environment, start a trial of Astro, and we’ll be in touch.

A special thank you to everyone who helped make this release possible. You can read more about it on the Apache Airflow® blog.