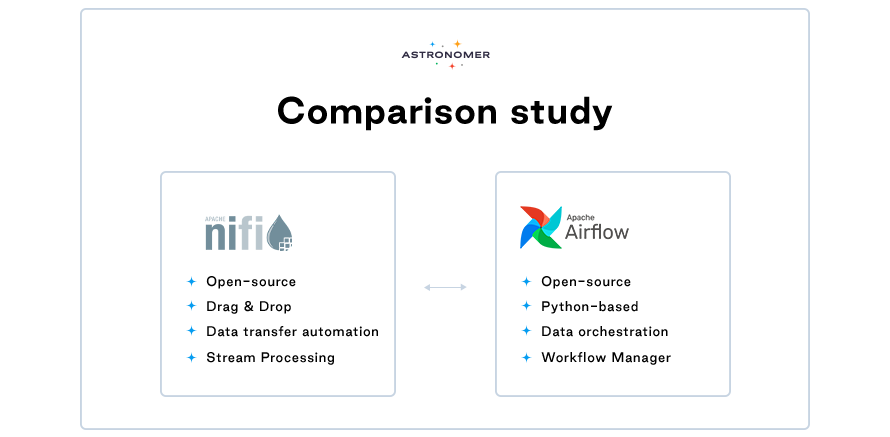

Apache NiFi vs. Apache Airflow®

Apache Airflow® and Apache NiFi are, in fact, two whistles to a somewhat different tune. Still, you may be wondering which one is better suited for your expectations and goals. By the end of this article, you will no longer have doubts.

Although essentially different, both Apache Airflow® and Apache NiFi are tools designed to manage the golden asset of most organizations: data.

As the data volumes keep expanding, enterprises create a rising need for data warehousing projects and advanced analytics solutions. ETL (Extract, Transform, Load) is a critical component of a modern data stack, as it guarantees that data is successfully integrated across many databases and applications. Both Apache Airflow® and Apache NiFi are crème de la crème among the most popular ETL tools. In order to choose the right tool for your needs, you have to ask yourself - what exactly are you going to do with your data? But before that, let's go through the background and get to know these two pets.

Apache NiFi Basics

NiFi is an abbreviation for Niagara Files, initially produced by the NSA. The platform is written in Java and designed to handle big amounts of data and automate dataflow. It's a simple, powerful data processing and distribution system, allowing for the creation of scalable directed graphs of data routing and transformation. Data may be filtered, adjusted, joined, divided, enhanced, and verified. Apache NiFi does not require any programming skills, which can be either a benefit or a limitation, and it runs on JVM, supporting JVM languages.

Apache NiFi is an ETL tool typically used for long-running jobs, suitable for processing both periodic batches and streaming data. Data acquisition, transportation, and a guarantee of delivery are all NiFi fortes.

Key Benefits of Apache NiFi

- Architecture, making NiFi simple yet powerful. As FlowFile incorporates meta-information, the tool's possibilities aren't restricted to CSV, but it can also handle binary files, among others.

- Data Provenance. It is a linked service that tracks nearly everything in your dataflows, making it possible to use different queue rules (FIFO, LIFO, and others). It's really useful since you can observe how the data was stored or processed, although it requires a large amount of storage space.

- Over 100 processors for downloading files via HTTP, Google Data Source, S3 and uploading them to data receivers such as MySQL.

- Simplistic UI, which can be either a pro or a con. Some users have expressed dissatisfaction with the Apache NiFi interface - indeed, it's not spectacular, but it's functional, basic, and clean, with no extra components. Not everyone likes the vintage 90s vibe, but the interface is web-based and highly configurable.

Apache NiFi Limitations

- It can be very difficult to manage at scale. Drag-and-drop is nice, but if you need to take an exact copy of your pipeline and put it in a different environment, you'd likely have to go back in the UI and recreate all the settings.

- For long SQL queries, there is no automatic adjustment of text fields. They have to be adjusted manually, and in NiFi, setting up manually managed jobs can be challenging.

Apache Airflow® Basics

Some people claim that Apache Airflow® is "cron on steroids", but to be more precise, Airflow is an open-source ETL tool for planning, generating, and tracking processes. It is compatible with cloud providers such as GCP, Azure, and AWS. Astronomer makes it possible to run Airflow on Kubernetes.

Apache Airflow® is a super-flexible task scheduler and data orchestrator suitable for most everyday tasks. Airflow can run ETL/ELT jobs, train machine learning models, track systems, notify, complete database backups, power functions within multiple APIs, and more. Organizations typically use the platform to create workflows as directed acyclic graphs (DAGs) of tasks. Sounds complicated? It really shouldn't - rich command-line utilities make conducting complex DAG operations a breeze. The Airflow scheduler performs tasks on an array of workers while adhering to specific requirements.

Key Benefits of Apache Airflow®

- Code-first: Airflow and all the workflows are written in Python (although each step can be written in any language), which allows to dynamically generate DAGs. Workflows defined as code are easier to test, maintain, and collaborate on. Customization of complex transformations doesn't get any simpler than this. Moreover, Python allows for effortless collaboration with data scientists.

- Rich UI: The user interface is really intuitive and a truly functional way to access the metadata. It makes it easy to turn schedules on and off, visualize DAG's progress, make SQL queries, watch pipelines in production, monitor them, and resolve emerging issues at once. Due to rich visualization components, you can see all of the running pipelines and follow their progress. Everything is very lean and elegant. A powerful Jinja engine for templating makes it possible to parametrize scripts.

- Scalability: It's easy to define operators and executors, and you can modify the library to meet the amount of abstraction that best suits your context. Airflow offers multiple methods for horizontal scaling.

- Very active, constantly growing open-source community.

Airflow Limitations

- Airflow is not the best choice for stream jobs. Streaming data workflows is not the purpose of this platform.

Summary

By nature, Apache Airflow® is an orchestration framework, not a data processing framework, whereas Apache NiFi's primary goal is to automate data transfer between two systems. Thus, Airflow is more of a "Workflow Manager" area, and Apache NiFi belongs to the "Stream Processing" category. These two tools aren't mutually exclusive, and they both offer some exciting capabilities and can help with resolving data silos. It's a bit like comparing oranges to apples - they are both (tasty!) fruits but can serve very different purposes.

What Apache Airflow® and Apache NiFi surely have in common is that they are open-source, community-based tools. Airflow seems to have a broader approval with 23.2K GitHub stars and 9.2k forks, and more contributors. It’s probably due to the fact that it has more applications, as by nature Airflow serves different purposes than NiFi. Still, both tools can offer lots of built-in operators, constant updates, and support from their communities.

Apache NiFi is a perfect tool for handling big data - extracting it and loading it to a given space. It's an extensible platform known for its great error handling and simple interface. There is no better choice when it comes to the "set it and forget it" kind of pipeline, as NiFi doesn't offer live monitoring nor statistics per record. It’s perfect if you don’t want to get into coding at all - NiFi is “drag-and-drop” based, and this tool is surely a "go-to" solution for live batch streaming. Scheduling capabilities are not very robust, but technically, NiFi is not a scheduler.

Apache Airflow®, on the other hand, is a perfect solution for scheduling specific tasks, setting up dependencies, and managing programmatic workflow. A big, vibrant community keeps updating the tool and making it better with every update. Airflow takes lots of work off your IT team's hands, because it is one of the most robust platforms for orchestrating workflows or pipelines. It allows you to easily see the dependencies, code, trigger tasks, progress, logs, and success status of your data pipelines. Airflow is a data orchestrator which goes way beyond managing data - it helps to deliver data-driven insights, as a result making businesses grow.

“Before Airflow, our pipelines were split, some things were done on Cron, some on NiFi, some on other tools. We wanted to bring it all together. With NiFi, CRED developers had to make a copy of all the pipelines and use it for their specific use cases. If they wanted to change something, for example, upgrade to a newer Airflow version, they needed to update both copies,“ said Omesh Patil, Data Architect at CRED.

Long story short, there is no "better" tool. It all depends on your exact needs - NiFi is perfect for basic big data ETL process, while Airflow is the “go-to” tool for scheduling and executing complex workflows, as well as business-critical processes.

Astronomer – Making Airflow Even Better

Astronomer enables you to centrally develop, orchestrate, and monitor your data workflows with best-in-class open source technology. With multiple provider packages in our registry, it's intuitive and straightforward to take off with your ETL pipelines and take full advantage of your data.

Would you like to get in touch with one of our experts? Let's connect and get you started!