1. What is Machine Learning?

One way of thinking about machine learning is as an inversion of the classical programming paradigm, in which you take rules plus data and combine the two to get answers. With machine learning, you start instead with the data plus the known answers and discover the rules. Those rules can then be used to predict answers for new data, and those predictions are what produce value.
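To make the inversion concrete, here is a minimal sketch. The "classical" function hard-codes the Celsius-to-Fahrenheit rule. The "learned" version recovers the same rule (slope and intercept) from example data and known answers using ordinary least squares. The function names and the temperature example are illustrative assumptions, not taken from any particular library.

```python
def classical_fahrenheit(celsius: float) -> float:
    """Classical programming: a human writes the rule; data in, answers out."""
    return celsius * 1.8 + 32


def learn_linear_rule(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Machine learning in its simplest form: discover slope and intercept
    from example inputs and known answers via ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Data plus known answers...
celsius_data = [-40.0, 0.0, 37.0, 100.0]
fahrenheit_answers = [classical_fahrenheit(c) for c in celsius_data]

# ...are used to discover the rule.
slope, intercept = learn_linear_rule(celsius_data, fahrenheit_answers)

# The learned rule then predicts answers for new inputs.
predicted = slope * 25.0 + intercept
print(f"slope={slope:.2f}, intercept={intercept:.2f}, prediction for 25C={predicted:.1f}")
```

Real models learn far more complex rules from noisy data, but the direction of the process is the same: answers and data go in, rules come out.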

2. What is MLOps?

Machine learning operations (or MLOps) can be thought of as getting your machine learning algorithm, which already solves a business need, to deliver its value at scale.

With MLOps, you need to:

- Design and build the service/platform that will host the machine learning model

- Orchestrate the steps of the pipeline

- Set up alerts that fire when the model's behavior crosses defined thresholds

- Deploy the model, making it available to end users

- Implement protocols for phased roll-outs and rollbacks

- Set up logging and trend visualization

- Allow for:

  - Hyperparameter optimization

  - Active learning

  - Transfer learning

Planning how to do each of these steps is what gets your machine learning model to produce its value both sustainably and at scale.
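As a rough illustration of two of these steps, alerting on behavior against thresholds and rolling back, the sketch below checks a set of live model metrics against configured limits and decides whether to continue a phased roll-out or roll back. The metric names, threshold values, `Threshold`, `check_thresholds`, and `rollout_decision` are all hypothetical; a real system would read metrics from your monitoring stack and send alerts to an external service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    """Acceptable range for one monitored metric (hypothetical example)."""
    metric: str
    min_value: float = float("-inf")
    max_value: float = float("inf")


def check_thresholds(metrics: dict[str, float], thresholds: list[Threshold]) -> list[str]:
    """Return one alert message per metric that is missing or out of range."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            alerts.append(f"{t.metric}: missing")
        elif not (t.min_value <= value <= t.max_value):
            alerts.append(f"{t.metric}={value} outside [{t.min_value}, {t.max_value}]")
    return alerts


def rollout_decision(metrics: dict[str, float], thresholds: list[Threshold]) -> str:
    """Continue the phased roll-out only if no threshold is breached."""
    return "rollback" if check_thresholds(metrics, thresholds) else "continue"


thresholds = [
    Threshold("accuracy", min_value=0.90),
    Threshold("p95_latency_ms", max_value=200.0),
]
# Metrics observed on the small slice of traffic served by the new model version.
canary_metrics = {"accuracy": 0.87, "p95_latency_ms": 150.0}

for alert in check_thresholds(canary_metrics, thresholds):
    print("ALERT:", alert)
print("decision:", rollout_decision(canary_metrics, thresholds))
```

Treating a missing metric as a breach is a deliberate, conservative choice here: if you cannot observe the new version, it is safer not to widen its roll-out.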

3. Example of an ML Workflow

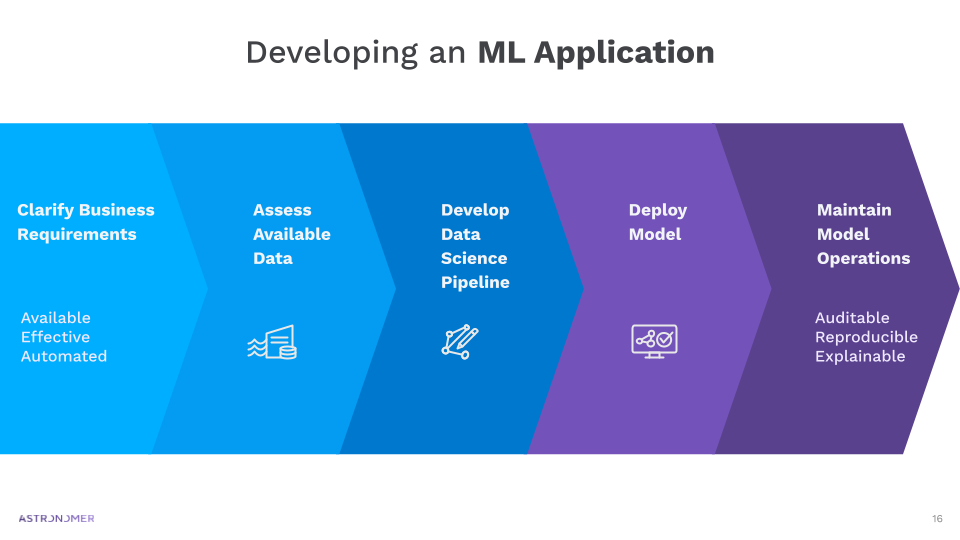

4. Lifecycle of an ML Application

To break down the steps of developing a machine learning application:

You start by clarifying the business requirements — not just the purpose of the model but also how available the model needs to be. Common questions you need to ask: How effective does the model need to be? What are the acceptable ranges of its performance? To what degree do the steps need to be automated?

Next, you assess the available data and begin to develop the pipeline. This part usually takes the longest.

Then you deploy the model and make sure that it is auditable and that the entire cycle is reproducible.
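One common way to support auditability and reproducibility is to fix every source of randomness and record a manifest of what went into each run. The sketch below assumes a toy "model" (the mean of a random sample) standing in for real training; `fingerprint`, `train_with_manifest`, and the manifest fields are illustrative names, not part of any specific tool.

```python
import hashlib
import json
import random


def fingerprint(obj) -> str:
    """Stable SHA-256 hash of a JSON-serializable object (keys sorted)."""
    payload = json.dumps(obj, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def train_with_manifest(data: list[float], params: dict, seed: int) -> dict:
    """Hypothetical training step that returns an audit manifest for the run."""
    rng = random.Random(seed)  # local RNG, so global random state cannot leak in
    sample = rng.sample(data, k=params["sample_size"])
    model = {"mean": sum(sample) / len(sample)}  # stand-in for a real model
    return {
        "data_sha256": fingerprint(data),    # exactly which data was used
        "params": params,                    # exactly which settings were used
        "seed": seed,                        # makes the randomness repeatable
        "model_sha256": fingerprint(model),  # identifies the resulting model
    }


data = [float(i) for i in range(100)]
params = {"sample_size": 10}

run_a = train_with_manifest(data, params, seed=42)
run_b = train_with_manifest(data, params, seed=42)
print(json.dumps(run_a, indent=2))
print("reproducible:", run_a == run_b)
```

Storing such a manifest next to each deployed model lets you answer "which data and settings produced this model?" and rerun the cycle to check that you get the same result.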

It’s also essential that your machine learning models be explainable, so that you can understand all the steps leading to the predicted output.

5. How Do We Achieve an ML Application in Production?

There isn’t currently a standard way to productionize a machine learning application; you can achieve the same value in different ways. The right approach depends on your needs and the standards you have to meet.

Different approaches include:

- Productionized Notebooks

- MLOps Platforms

- Custom Pipelines

You can use Airflow with any of the approaches above. It handles the orchestration layer of machine learning operationalization, including scheduling, task dependencies, and automatic retries, with a level of reliability not always offered by other tools.
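Part of that reliability comes from how an orchestrator handles failure. Airflow, for instance, lets you configure retry behavior per task through operator arguments such as `retries`, `retry_delay`, and `retry_exponential_backoff`. The plain-Python sketch below only illustrates the idea of retrying a failed task with exponential backoff. It is not Airflow's implementation, and `run_with_retries` and `flaky_ingest` are hypothetical names.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(task: Callable[[], T], retries: int = 3,
                     retry_delay: float = 0.01, backoff: float = 2.0) -> T:
    """Run `task`, retrying on any exception with exponential backoff.

    Illustration of per-task retries only; not Airflow's implementation.
    """
    delay = retry_delay
    for attempt in range(retries + 1):
        try:
            return task()
        except Exception as exc:
            if attempt == retries:
                raise  # out of retries: surface the failure so it can be alerted on
            print(f"attempt {attempt + 1} failed ({exc}); retrying in {delay:.2f}s")
            time.sleep(delay)
            delay *= backoff  # wait longer after each consecutive failure
    raise AssertionError("unreachable")


# A flaky task that fails twice, e.g. because a data source is briefly unavailable.
calls = {"n": 0}


def flaky_ingest() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source unavailable")
    return "ingested"


print(run_with_retries(flaky_ingest))
```

Transient failures such as a briefly unavailable source then resolve themselves, while persistent failures still surface after the last attempt instead of being silently swallowed.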

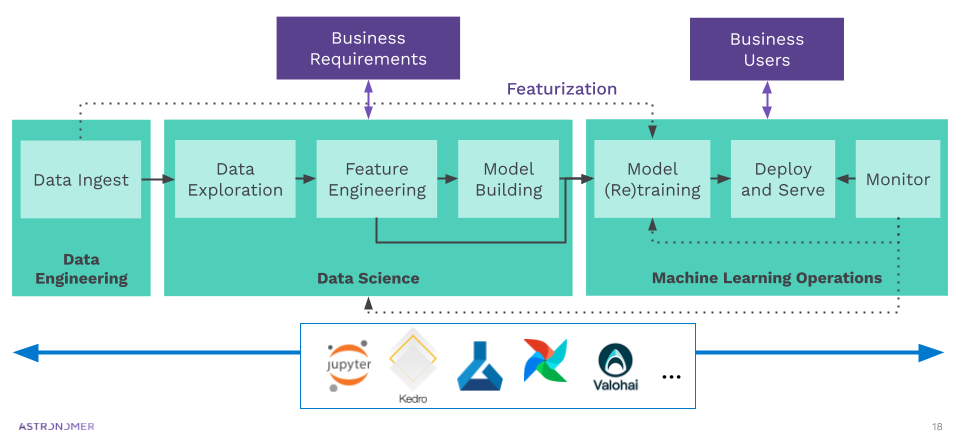

6. Typical End-to-End ML Pipeline

A typical end-to-end machine learning pipeline:

- Starts with data ingestion, which usually is the responsibility of data engineering.

- Advances to data exploration, feature engineering, and model building — all parts of the more experimental phase, which usually falls under the category of data science.

- Moves on to MLOps, where the model is trained, retrained, deployed, served, and monitored.

In each of the three steps above, it’s important to work with your business counterparts to define the requirements and ensure that your pipeline adds value for users. Once you have settled on a model, the next step is to automate featurization, so that the pipeline can go straight from data ingestion to model training, as illustrated in the example pipeline above.
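An orchestrator treats such a pipeline as a directed acyclic graph (DAG): each stage declares what it depends on, and the run order is derived from those dependencies. The toy sketch below uses the standard library's `graphlib` (Python 3.9+), not Airflow; the stage names are illustrative. In Airflow, each stage would be a task and the chain would be declared with the `>>` operator.

```python
from graphlib import TopologicalSorter

# Each stage lists the stages it depends on. Declaration order is deliberately
# scrambled: execution order comes from the dependencies, not from this listing.
pipeline: dict[str, set[str]] = {
    "monitor_model": {"serve_model"},
    "train_model": {"featurize_data"},
    "ingest_data": set(),
    "serve_model": {"deploy_model"},
    "featurize_data": {"ingest_data"},
    "deploy_model": {"train_model"},
}


def run_stage(name: str) -> None:
    """Placeholder for the real work each stage would do."""
    print(f"running {name}")


# static_order() yields each stage only after all of its dependencies.
order = list(TopologicalSorter(pipeline).static_order())
for stage in order:
    run_stage(stage)
```

Retraining, in this picture, is simply another run of the same graph, for example on a schedule or when monitoring detects degraded performance.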

7. Why Airflow?

Airflow has several features and qualities that make constructing and automating machine learning pipelines easier.

- It’s Python native

- It provides a standard interface for your entire data team

- It integrates with a wide range of data tools, many of them through ready-made provider packages

- It has built-in features to assist in logging, monitoring, and alerting to external systems

- It’s extensible via custom operators and templates

- It’s data agnostic

- It’s cloud-native (but cloud-neutral)