Set up Remote Execution Agents on Astro

Remote Execution is a feature in Airflow 3 that allows you to run your Airflow tasks on any machine, in the cloud or on-premises. When using Remote Execution, only the information that’s essential for running the task, such as scheduling details and heartbeat pings, is available to Airflow system components. Everything else stays within the remote environment, making this a key feature in highly regulated industries.

This tutorial covers when to use Remote Execution and how to set it up on Astro with Remote Execution Agents running on AWS EKS or on-premises infrastructure. While this guide focuses on these specific environments, you can adapt the concepts and steps for other Kubernetes clusters, such as clusters running on GCP or Azure.

Remote Execution on Astro is only available for Airflow 3.x Deployments on the Enterprise tier or above. See Astro Plans and Pricing.

When to use remote execution

You might want to use Remote Execution in the following situations:

- Running tasks that need to access and/or use sensitive data that cannot leave a particular environment, such as an on-premises server. This requirement is common in highly regulated industries like financial services and health care.

- Running tasks that require specialized compute, such as a GPU or TPU machine to train neural networks.

You can accomplish Remote Execution in two ways:

- When running Airflow on Astro, you can use Remote Execution Agents with the AstroExecutor.

- When running open source Airflow, you can use the EdgeExecutor, which is part of the edge3 provider package.

This tutorial covers the steps for setting up Remote Execution Agents on Astro to run on AWS EKS and on-premises.

Time to complete

This tutorial takes approximately one hour to complete.

Assumed knowledge

To get the most out of this tutorial, you should have an understanding of:

- The Airflow components and how they work together.

Prerequisites

- An Astronomer account on the Enterprise tier.

- Access to AWS EKS or an on-premises Kubernetes environment.

- kubectl and Helm installed.

- An S3 bucket to store XComs.

- eksctl and the AWS CLI installed, if you are using AWS EKS.

Step 1: Create a Remote Execution Deployment

To start registering Remote Execution Agents, you first need to create a dedicated Remote Execution Deployment on Astro.

Make sure you have a dedicated cluster in your Astro Workspace. If you don’t, create a new dedicated cluster. When creating a new cluster, you can leave the VPC Subnet range at its default setting (172.20.0.0/19) or customize it for your needs. Note that it can take up to an hour for a new cluster to be provisioned. If you later want to use a customer managed workload identity to read logs from Remote Execution Agents running on AWS EKS, you need to create your dedicated cluster on AWS.

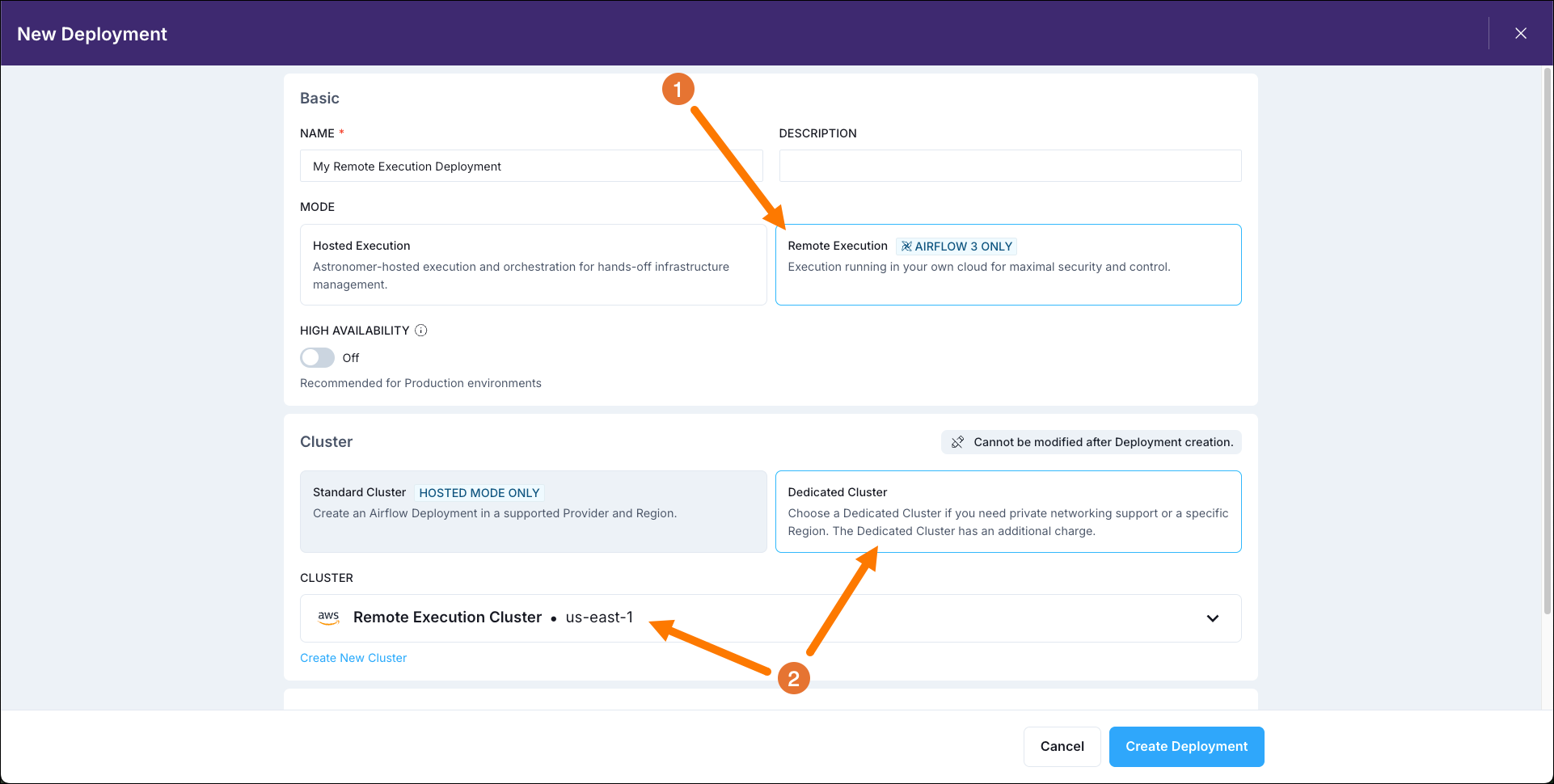

Create a Remote Execution Deployment in your Astro Workspace.

- Select Remote Execution as the execution mode.

- Select your dedicated cluster.

Step 2: Create an Agent Token

Your Remote Execution Agents will need to authenticate themselves to your Astro Deployment. To do this, you need to create an Agent Token.

-

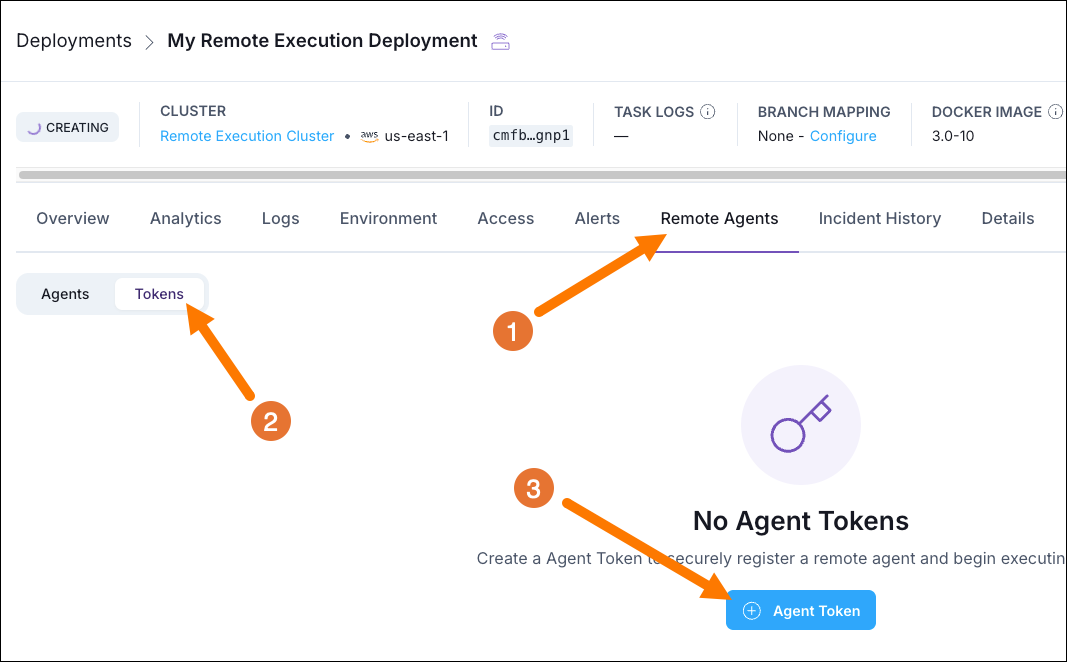

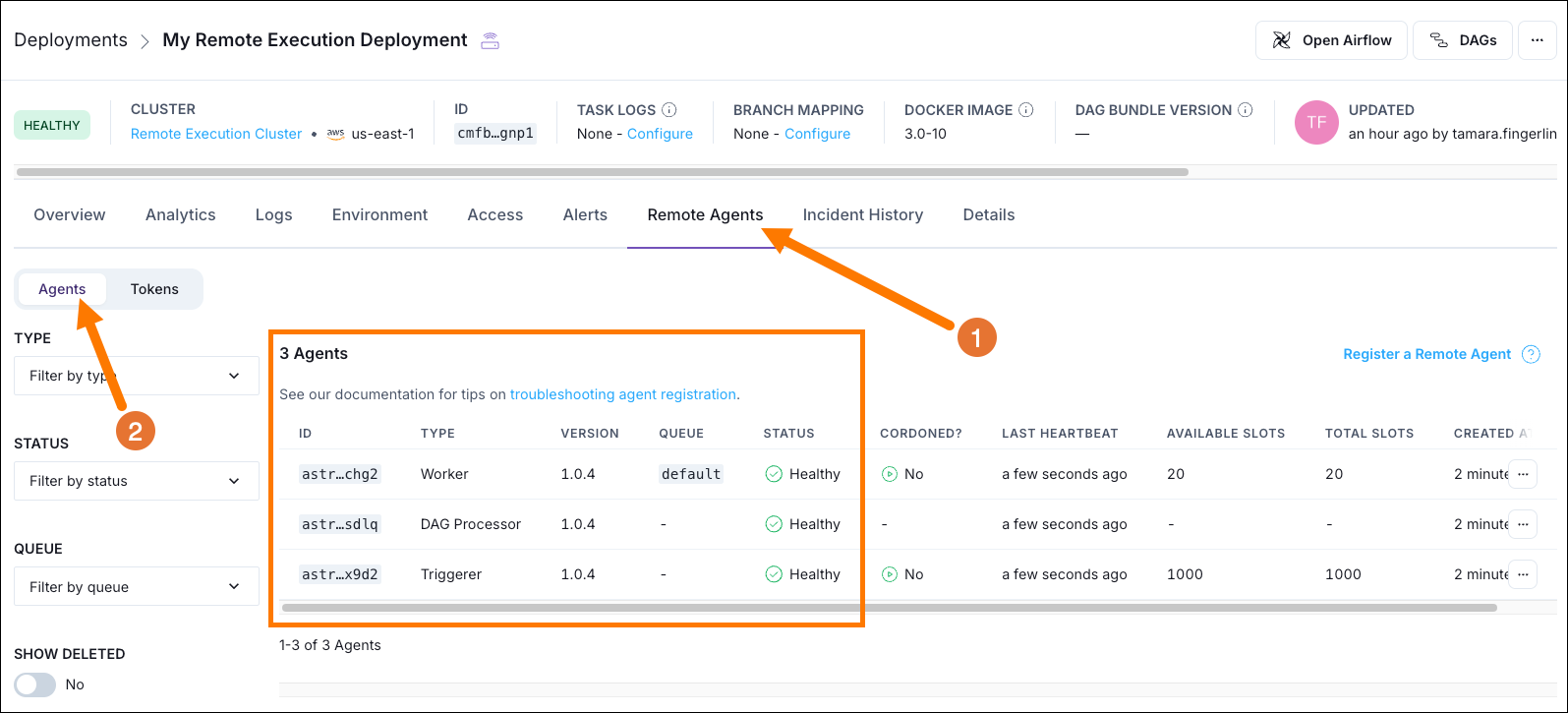

In the Astro UI, select the Remote Execution Deployment you created in the previous step and click on the Remote Agents tab.

-

Select Tokens.

-

Click on +Agent Token and create a new Agent Token.

-

Make sure to save the Agent Token in a secure location as you will need it later.

Step 3: Create a Deployment API Token

Your Remote Execution Agents will also need to fetch the right images from your Astro Deployment. To do this, you need to create a Deployment API Token.

-

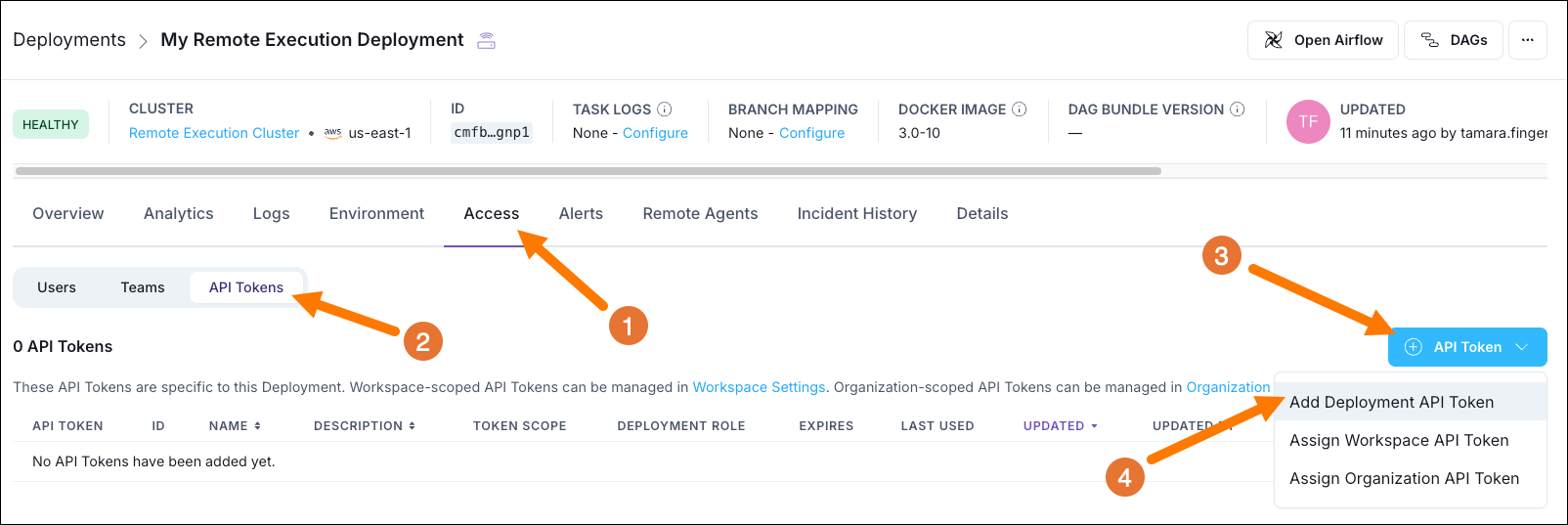

In the Astro UI, select the Remote Execution Deployment you created in Step 1 and click on the Access tab.

-

Select API Tokens.

-

Click on + API Token.

-

Select Add Deployment API Token and create a new Deployment API Token with Admin permissions.

-

Make sure to save the Deployment API Token in a secure location as you will need it later.

Step 4: Retrieve your values.yaml file

-

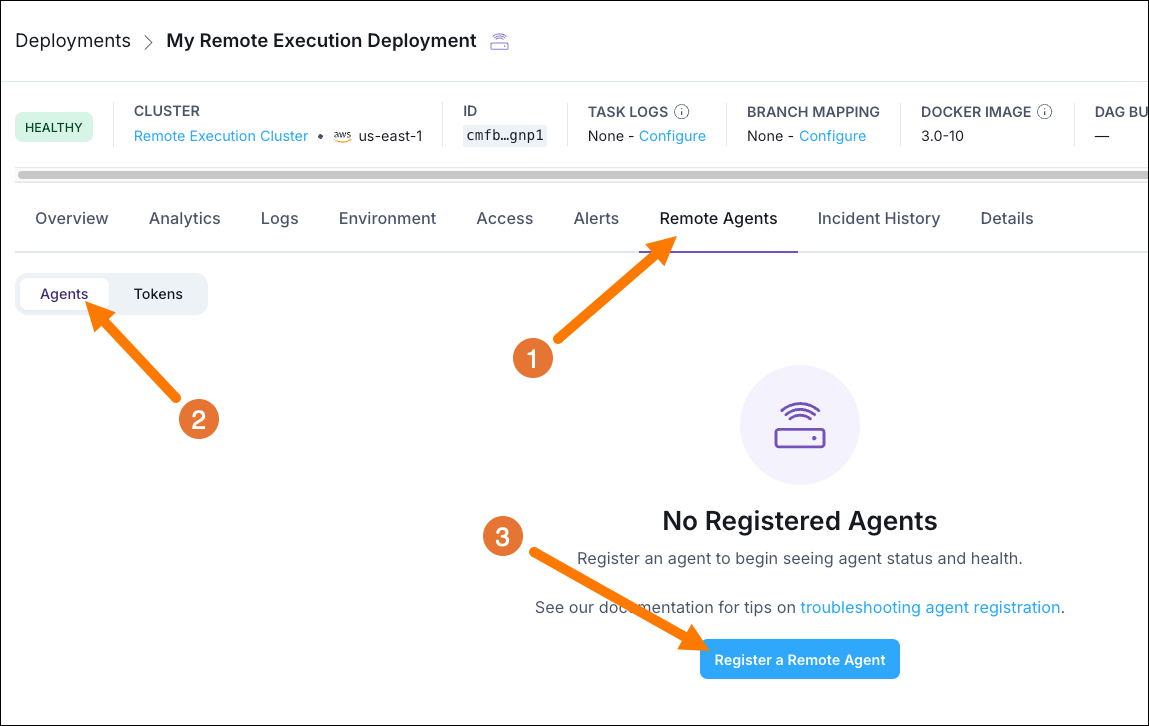

In the Astro UI, select the Remote Execution Deployment you created in Step 1 and click on the Remote Agents tab.

-

Click on Register a Remote Agent.

-

Download the

values.yamlfile you are given.

Note that no Remote Execution Agents show up in the list yet, they will only appear in the Remote Agents tab when they start heartbeating!

Step 5A: Set up your Kubernetes cluster on EKS

This step covers the setup for deploying the Remote Execution Agent on AWS EKS. For a simple on-premises setup, see Step 5B.

Authenticate your machine to your AWS account. If your organization uses SSO, run aws configure sso and log in via the browser. Make sure to set the AWS_PROFILE environment variable to the profile (CLI profile name) you used to log in by running export AWS_PROFILE=<your-profile-name>. You can verify your profile by running aws sts get-caller-identity.

To create a new EKS cluster, define its parameters in a my-cluster.yaml file. Make sure the workers node group is large enough to support your intended workload and the Agent specifications in your values.yaml file for all 3 Agents. You can use the example below as a starting point; make sure to replace <your-cluster-name> and <your-region> with your own values.
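The following Python sketch shows roughly what a my-cluster.yaml can contain. It builds an eksctl ClusterConfig with a single managed node group named workers and prints it as JSON, which YAML parsers generally accept. Several values are assumptions to adjust, not values from this tutorial:

- the instance type
- the node counts
- the iam.withOIDC setting, which the IAM role in Step 8 relies on

```python
import json


def build_cluster_config(name: str, region: str,
                         instance_type: str = "m5.xlarge",
                         nodes: int = 3) -> dict:
    """Return an eksctl ClusterConfig with one managed node group named 'workers'."""
    return {
        "apiVersion": "eksctl.io/v1alpha5",
        "kind": "ClusterConfig",
        "metadata": {"name": name, "region": region},
        # Assumption: create an IAM OIDC provider so service accounts can assume IAM roles later.
        "iam": {"withOIDC": True},
        "managedNodeGroups": [
            {
                "name": "workers",
                # Assumption: pick an instance type and count that fit your workload and all 3 Agents.
                "instanceType": instance_type,
                "desiredCapacity": nodes,
                "minSize": 1,
                "maxSize": nodes,
            }
        ],
    }


if __name__ == "__main__":
    config = build_cluster_config("<your-cluster-name>", "<your-region>")
    print(json.dumps(config, indent=2))
```

You could redirect the printed output into my-cluster.yaml before creating the cluster.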

Create the EKS cluster by running the following command. Note that cluster creation can take 15 to 25 minutes.

Configure kubectl to use your new EKS cluster by running the following command. Replace <your-cluster-name> with the name of your cluster.

Verify that kubectl is aimed at the right cluster by running the following command. The output should look similar to this:
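If you want to script this check, the following Python sketch does two things:

- It reads the active context with kubectl config current-context.
- It compares that context with the name aws eks update-kubeconfig assigns by default, which is the cluster ARN.

This assumes you did not pass --alias to update-kubeconfig.

```python
import subprocess


def current_context() -> str:
    """Return the name of the active kubectl context."""
    result = subprocess.run(
        ["kubectl", "config", "current-context"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def is_eks_context(context: str, cluster_name: str, region: str) -> bool:
    """Match the default context name written by `aws eks update-kubeconfig`:
    arn:aws:eks:<region>:<account-id>:cluster/<cluster-name>."""
    return (context.startswith(f"arn:aws:eks:{region}:")
            and context.endswith(f":cluster/{cluster_name}"))
```

For example, is_eks_context(current_context(), "<your-cluster-name>", "<your-region>") should return True once kubectl points at your new cluster.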

Step 5B: Set up your local Kubernetes cluster

Alternatively, you can deploy the Remote Execution Agent on your on-premises cluster. If you want to test Remote Execution locally, a good option is to use the Kubernetes feature of Orbstack or Docker Desktop. In this step we’ll use Orbstack as an example.

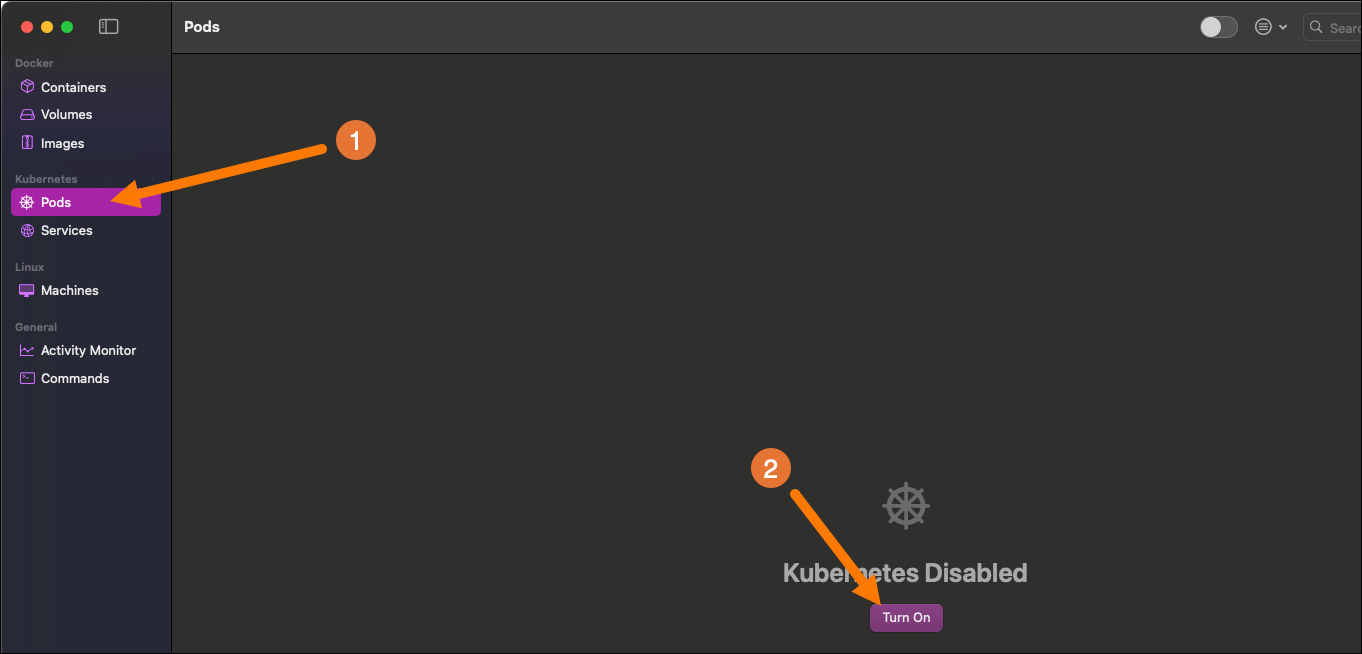

Enable the Kubernetes feature in Orbstack.

Switch to the orbstack context:

Step 6: Deploy the Remote Execution Agent

Create a new namespace for the Remote Execution Agent by running:

Create a secret named my-agent-token that contains the Agent Token by running the following command. Replace <your-agent-token> with the Agent Token you created in Step 2 and <your-namespace> with the namespace you created.

Create a secret named my-astro-registry-secret that contains the Deployment API Token by running the following command. Replace <your-deployment-api-token> with the Deployment API Token you created in Step 3 and <your-namespace> with your namespace.
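If you prefer declarative manifests over imperative kubectl commands, the following Python sketch builds both secrets as Kubernetes Secret objects. You can print them as a JSON List and apply them with kubectl apply -f. The following are assumptions to check against the commands and values.yaml file that Astro gives you:

- the data key token for the Agent Token
- the registry host images.astronomer.cloud
- the registry username cli

```python
import base64
import json


def agent_token_secret(namespace: str, token: str, key: str = "token") -> dict:
    """Opaque Secret named my-agent-token. The data key name is hypothetical."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": "my-agent-token", "namespace": namespace},
        "type": "Opaque",
        # stringData takes plain text; the API server stores it base64-encoded in data.
        "stringData": {key: token},
    }


def registry_secret(namespace: str, api_token: str,
                    registry: str = "images.astronomer.cloud",  # assumption
                    username: str = "cli") -> dict:             # assumption
    """Image pull Secret named my-astro-registry-secret in dockerconfigjson format."""
    auth = base64.b64encode(f"{username}:{api_token}".encode()).decode()
    docker_config = {
        "auths": {registry: {"username": username, "password": api_token, "auth": auth}}
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": "my-astro-registry-secret", "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        # Values under data must be base64-encoded.
        "data": {
            ".dockerconfigjson": base64.b64encode(json.dumps(docker_config).encode()).decode()
        },
    }


if __name__ == "__main__":
    manifests = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            agent_token_secret("<your-namespace>", "<your-agent-token>"),
            registry_secret("<your-namespace>", "<your-deployment-api-token>"),
        ],
    }
    print(json.dumps(manifests, indent=2))
```

Because the output contains your tokens, treat any file you save it to as sensitive.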

Modify your values.yaml file to add <your-namespace>, as well as the names of the secrets holding your Agent Token (agentTokenSecretName) and Deployment API Token (imagePullSecretName).

Modify your values.yaml file to add your Dag bundle configuration to the dagBundleConfigList section. Note that you need to store your Dags in a Dag bundle accessible to your Remote Execution Agents. Below is an example of a GitDagBundle configuration working with a Git connection named git_default (set in the commonEnv section later in this tutorial).
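The following Python sketch shows the general shape of two things:

- a GitDagBundle entry in Airflow's Dag bundle config list
- a git_default connection defined as a JSON environment variable

The bundle name, branch, and subdir values are hypothetical. How the chart's dagBundleConfigList section expects the list to be formatted is an assumption to verify against your values.yaml file.

```python
import json


def git_dag_bundle(name: str, tracking_ref: str = "main", subdir: str = "dags") -> dict:
    """One entry for Airflow's Dag bundle config list, pointing at a Git repository."""
    return {
        "name": name,
        "classpath": "airflow.providers.git.bundles.git.GitDagBundle",
        "kwargs": {
            "git_conn_id": "git_default",  # connection defined in commonEnv
            "tracking_ref": tracking_ref,  # branch or other ref to track (hypothetical default)
            "subdir": subdir,              # folder in the repo that holds the Dags (hypothetical)
        },
    }


def git_default_env(repo_url: str, access_token: str) -> dict:
    """AIRFLOW_CONN_GIT_DEFAULT as a JSON-serialized Airflow connection."""
    conn = {"conn_type": "git", "host": repo_url, "password": access_token}
    return {"AIRFLOW_CONN_GIT_DEFAULT": json.dumps(conn)}


if __name__ == "__main__":
    # Hypothetical bundle name and repository.
    print(json.dumps([git_dag_bundle("remote-dags")]))
    print(git_default_env("https://github.com/<your-org>/<your-repo>.git", "<your-token>"))
```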

Modify your values.yaml file to add your XCom backend configuration to the xcomBackend section. For this tutorial we’ll use the Object Storage XCom Backend. The credentials are set in the commonEnv section later in this tutorial.
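The Common IO provider's object storage XCom backend is configured through a few Airflow settings. The following Python sketch expresses them as environment variables. The following are assumptions, and your chart's xcomBackend section may take these values in a different shape:

- the aws_default connection ID
- the xcom prefix
- the threshold of 0, which should store every XCom in object storage

```python
def xcom_objectstorage_env(bucket: str, conn_id: str = "aws_default",
                           prefix: str = "xcom", threshold_bytes: int = 0) -> dict:
    """Airflow settings for the Common IO provider's object storage XCom backend."""
    return {
        "AIRFLOW__CORE__XCOM_BACKEND":
            "airflow.providers.common.io.xcom.backend.XComObjectStorageBackend",
        # Object storage URL in the form s3://<conn_id>@<bucket>/<prefix>.
        "AIRFLOW__COMMON_IO__XCOM_OBJECTSTORAGE_PATH": f"s3://{conn_id}@{bucket}/{prefix}",
        # Values larger than this many bytes go to object storage; 0 sends all of them there.
        "AIRFLOW__COMMON_IO__XCOM_OBJECTSTORAGE_THRESHOLD": str(threshold_bytes),
    }


if __name__ == "__main__":
    print(xcom_objectstorage_env("<your-xcom-bucket>"))
```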

Modify your values.yaml file to set a secrets backend. This tutorial uses the Local Filesystem Secrets Backend as a placeholder. Note that if you want to use an external secrets backend, you need to install the relevant provider packages in the worker containers and provide credentials in commonEnv. For more information on how to interact with secrets backends, see Configure a secrets backend.
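The Local Filesystem Secrets Backend reads connections and variables from files. The following minimal Python sketch shows the corresponding Airflow settings. The file paths inside the Agent containers are hypothetical.

```python
import json


def local_secrets_backend_env(connections_file: str, variables_file: str) -> dict:
    """Airflow settings that point the secrets backend at local files."""
    return {
        "AIRFLOW__SECRETS__BACKEND": "airflow.secrets.local_filesystem.LocalFilesystemBackend",
        "AIRFLOW__SECRETS__BACKEND_KWARGS": json.dumps({
            "connections_file_path": connections_file,
            "variables_file_path": variables_file,
        }),
    }


if __name__ == "__main__":
    # Hypothetical paths; the files must exist in the Agent containers.
    print(local_secrets_backend_env("/opt/airflow/secrets/connections.json",
                                    "/opt/airflow/secrets/variables.json"))
```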

Modify your values.yaml file to add the necessary environment variables to the commonEnv section. Make sure to replace all placeholders with your own values.
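If you assemble your settings in a script, a small helper like the following turns several groups of environment variables into the name/value list format that Kubernetes uses for container environment variables. Whether commonEnv uses this format is an assumption. Compare it with the commonEnv entries already in your values.yaml file.

```python
def to_common_env(*groups: dict) -> list:
    """Merge env var dicts (later groups win) into Kubernetes-style name/value entries."""
    merged: dict = {}
    for group in groups:
        merged.update(group)
    # Kubernetes env values must be strings, so convert everything explicitly.
    return [{"name": key, "value": str(value)} for key, value in sorted(merged.items())]


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    print(to_common_env({"AIRFLOW__CORE__XCOM_BACKEND": "<your-xcom-backend>"},
                        {"AWS_DEFAULT_REGION": "<your-region>"}))
```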

Install the Helm chart by running the following command. Replace <your-namespace> with your namespace.

Verify that the 3 Remote Execution Agent pods are running with the following command. Replace <your-namespace> with your namespace. The output should look similar to this:
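To check the pods from a script rather than by eye, the following Python sketch reads the output of kubectl get pods -o json. It confirms that the namespace has at least 3 pods and that all of them report the Running phase. Keep two limitations in mind:

- Running only means the containers have started, not that they are ready.
- The count of 3 assumes nothing else runs in <your-namespace>.

```python
import json
import subprocess


def phases_from_pod_list(pod_list: dict) -> dict:
    """Map each pod name to its status.phase from `kubectl get pods -o json` output."""
    return {
        pod["metadata"]["name"]: pod["status"]["phase"]
        for pod in pod_list.get("items", [])
    }


def get_pod_phases(namespace: str) -> dict:
    """Run kubectl and return the pod phases for the namespace."""
    out = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return phases_from_pod_list(json.loads(out))


def all_agents_running(phases: dict, expected: int = 3) -> bool:
    """True if there are at least `expected` pods and every one of them is Running."""
    return len(phases) >= expected and all(p == "Running" for p in phases.values())
```

For example, all_agents_running(get_pod_phases("<your-namespace>")) should return True once the Agents are up.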

On Astro, you can now see the 3 Remote Execution Agents heartbeating to your Astro Deployment in the Remote Agents tab. When you open the Airflow UI on this Astro Deployment, you’ll be able to see and interact with all Dags contained in the configured Dag bundles.

You can now run tasks on your remote Kubernetes cluster! To use XCom, see Step 7.

If you need to update the Helm chart later, use the following command. Replace <your-namespace> with your namespace.

Step 7: Configure XCom

If you want to use XCom to pass information between tasks that run with Remote Execution, you need to configure a custom XCom backend. You already laid the foundation for this in Step 6 when you configured the xcomBackend section of your values.yaml file and its credentials in commonEnv.

However, for the worker pods to use the XCom backend, you also need to install the necessary Airflow provider packages on them. To make installation faster, we recommend using a constraints file.

Create your constraints.txt file (see GitHub for an example). Make sure that it includes the Airflow Common IO provider and the Amazon provider with the s3fs extra.
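Before adding the file to the cluster, you can sanity-check it. The following Python sketch parses name==version lines and reports which required packages have no pin. It assumes the s3fs extra is reflected as its own s3fs pin, since constraints files pin packages rather than extras.

```python
import re

# Packages the worker needs for the object storage XCom backend on S3.
REQUIRED = {
    "apache-airflow-providers-common-io",
    "apache-airflow-providers-amazon",
    "s3fs",
}


def pinned_packages(constraints_text: str) -> dict:
    """Parse 'name==version' lines, normalizing names as pip does (PEP 503)."""
    pins = {}
    for raw in constraints_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if "==" not in line:
            continue
        name, version = line.split("==", 1)
        name = name.split("[", 1)[0].strip()  # ignore any extras on the name
        pins[re.sub(r"[-_.]+", "-", name).lower()] = version.strip()
    return pins


def missing_requirements(constraints_text: str) -> set:
    """Return the required packages that have no pin in the constraints file."""
    return REQUIRED - set(pinned_packages(constraints_text))
```

For example, missing_requirements(open("constraints.txt").read()) should return an empty set when all three packages are pinned.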

Add the constraints file as a ConfigMap to your Kubernetes cluster. Replace <your-namespace> with the namespace you created in Step 6.

Update your values.yaml file to install the necessary provider packages in the workers section, adjusting the versions as needed. You also need to update the PYTHONPATH environment variable to include the shared packages. Note that your image version likely differs from the one in the example below.

Update your values.yaml file to mount the constraints file.

Update the Helm chart by running the following command. Replace <your-namespace> with your namespace.

Run a Dag that uses XCom to verify the setup. Remember that you need to push the Dag to your Dag bundle location for it to be accessible to the Remote Execution Agent.

If you’d like to see your task logs displayed in the Airflow UI, see our docs on Task logging for remote Deployments.

Step 8: (optional, AWS only) Use a secrets backend

If you want to use a secrets backend to store your connections and variables, you need to configure the Remote Execution Agent to use it.

First, you need an IAM role to attach the Secrets Manager access policy to. The IAM role’s trust policy needs to include the EKS OIDC ID, so you need to fetch that first. Replace <YOUR_EKS_CLUSTER_NAME> with the name of your EKS cluster and <YOUR_AWS_REGION> with the region of your EKS cluster.
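The OIDC ID is the last path segment of the cluster's OIDC issuer URL. The following minimal Python sketch fetches the issuer with aws eks describe-cluster and extracts the ID.

```python
import subprocess
from urllib.parse import urlparse


def fetch_oidc_issuer(cluster_name: str, region: str) -> str:
    """Return the cluster's OIDC issuer URL using the AWS CLI."""
    result = subprocess.run(
        ["aws", "eks", "describe-cluster",
         "--name", cluster_name, "--region", region,
         "--query", "cluster.identity.oidc.issuer", "--output", "text"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()


def oidc_id(issuer_url: str) -> str:
    """Extract <ID> from https://oidc.eks.<region>.amazonaws.com/id/<ID>."""
    return urlparse(issuer_url).path.rstrip("/").rsplit("/", 1)[-1]
```

For example, oidc_id(fetch_oidc_issuer("<YOUR_EKS_CLUSTER_NAME>", "<YOUR_AWS_REGION>")) returns the value to use as <your-cluster-oidc-id>.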

Create a new file called my-airflow-trust-policy.json and add the following trust policy. Replace <your-account-id> with your AWS account ID, <your-region> with the region of your EKS cluster, <your-namespace> with the namespace you created in Step 6, and <your-cluster-oidc-id> with the EKS OIDC ID you fetched in the previous substep.
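The following Python sketch illustrates the structure such a trust policy typically has for IAM roles for service accounts (IRSA), and you can write its output to my-airflow-trust-policy.json. The sketch allows every service account in the namespace by using a wildcard in a StringLike condition. That choice is an assumption; you can narrow it to the exact service account names your chart creates.

```python
import json


def irsa_trust_policy(account_id: str, region: str, oidc_id: str, namespace: str) -> dict:
    """Trust policy letting service accounts in `namespace` assume the role via the EKS OIDC provider."""
    provider = f"oidc.eks.{region}.amazonaws.com/id/{oidc_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{provider}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {f"{provider}:aud": "sts.amazonaws.com"},
                    # Assumption: allow any service account in the namespace.
                    "StringLike": {
                        f"{provider}:sub": f"system:serviceaccount:{namespace}:*"
                    },
                },
            }
        ],
    }


if __name__ == "__main__":
    policy = irsa_trust_policy("<your-account-id>", "<your-region>",
                               "<your-cluster-oidc-id>", "<your-namespace>")
    print(json.dumps(policy, indent=2))
```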

Create a new IAM role called RemoteAgentsRole with the trust policy you created in the previous substep.

Create a new file called my-airflow-secrets-policy.json and add the following policy. Replace <your-region> with the region of your EKS cluster and <your-account-id> with your AWS account ID. Create the policy using the following command.
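The following Python sketch generates a read-only Secrets Manager policy scoped to secrets whose names start with airflow/. Two choices are assumptions:

- the set of actions
- the airflow/ prefix, which matches the connection and variable prefixes this step uses later

```python
import json


def secrets_manager_read_policy(region: str, account_id: str,
                                name_prefix: str = "airflow/") -> dict:
    """Allow reading secrets whose names start with name_prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "secretsmanager:GetSecretValue",
                    "secretsmanager:DescribeSecret",
                ],
                # Secrets Manager appends a random suffix to secret ARNs, hence the wildcard.
                "Resource": f"arn:aws:secretsmanager:{region}:{account_id}:secret:{name_prefix}*",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(secrets_manager_read_policy("<your-region>", "<your-account-id>"), indent=2))
```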

Attach the AirflowSecretsManagerAccess policy to the RemoteAgentsRole role.

Update the serviceAccount section in your values.yaml file to annotate your service accounts with the role. Replace <your-account-id> with your AWS account ID.
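On EKS, IAM roles for service accounts rely on the eks.amazonaws.com/role-arn annotation on a Kubernetes service account. The following small Python sketch builds that annotation. Where exactly it goes under serviceAccount in your values.yaml file is an assumption to verify against the chart.

```python
def irsa_annotations(account_id: str, role_name: str = "RemoteAgentsRole") -> dict:
    """Service account annotation that binds the service account to an IAM role."""
    return {"eks.amazonaws.com/role-arn": f"arn:aws:iam::{account_id}:role/{role_name}"}


if __name__ == "__main__":
    print(irsa_annotations("<your-account-id>"))
```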

Update the commonEnv section in your values.yaml file to configure the secrets backend. Replace <your-role-arn> with the ARN of the RemoteAgentsRole IAM role you created earlier in this step.
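Two Airflow settings select and configure the Amazon provider's Secrets Manager backend. The following Python sketch expresses them as environment variables, using the airflow/connections and airflow/variables prefixes from this tutorial. Passing region_name in the backend kwargs is an assumption, and the sketch does not include the role ARN setting.

```python
import json


def secrets_manager_backend_env(region: str) -> dict:
    """Airflow settings selecting the Amazon provider's Secrets Manager backend."""
    return {
        "AIRFLOW__SECRETS__BACKEND": (
            "airflow.providers.amazon.aws.secrets.secrets_manager.SecretsManagerBackend"
        ),
        "AIRFLOW__SECRETS__BACKEND_KWARGS": json.dumps({
            "connections_prefix": "airflow/connections",
            "variables_prefix": "airflow/variables",
            # Assumption: the region is passed through the backend kwargs.
            "region_name": region,
        }),
    }


if __name__ == "__main__":
    print(secrets_manager_backend_env("<your-region>"))
```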

Because the secrets backend is part of the Airflow Amazon provider and is also used by the Dag processor and Triggerer components, you need to install the necessary provider packages on these components as well, as you did for the worker pods when configuring the XCom backend in Step 7. Note that your image version likely differs from the one in the example below.

Update the Helm chart with the new values.yaml file.

Now your tasks have access to the secrets backend! You can store connections under airflow/connections and variables under airflow/variables.
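With these prefixes, the backend should look up a connection or variable by appending its ID to the prefix, for example airflow/connections/my_postgres. The following Python sketch computes secret names and a JSON connection value. The my_postgres connection and its fields are hypothetical. Storing connections as JSON rather than as a URI is one option and is an assumption here.

```python
import json


def connection_secret(conn_id: str, conn: dict,
                      prefix: str = "airflow/connections") -> tuple:
    """Return (secret name, secret string) for an Airflow connection."""
    return f"{prefix}/{conn_id}", json.dumps(conn)


def variable_secret(key: str, value: str,
                    prefix: str = "airflow/variables") -> tuple:
    """Return (secret name, secret string) for an Airflow variable."""
    return f"{prefix}/{key}", value


if __name__ == "__main__":
    # Hypothetical connection for illustration only.
    name, value = connection_secret("my_postgres", {
        "conn_type": "postgres", "host": "db.example.internal",
        "login": "airflow", "password": "<password>", "port": 5432,
    })
    print(name, value)
```

You could then create each secret with the AWS CLI, for example aws secretsmanager create-secret --name <name> --secret-string <value>.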

Step 9: (optional, AWS only) Configure logs in the Airflow UI

When both your Astro Deployment and your Remote Execution Agents run on AWS, you can configure your task logs to be read from an S3 bucket using a customer managed workload identity.

Create a new IAM policy called AirflowS3Access. Save the following policy in a my-airflow-s3-policy.json file, replacing <your-logging-bucket> with the name of your logging bucket, and create the policy from it. Make sure to record the policy ARN, arn:aws:iam::<your-account-id>:policy/AirflowS3Access, from the output of the command.
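The following Python sketch shows a policy that lets the Agents write and read task logs in the bucket. The exact set of S3 actions is an assumption; grant only what your logging setup needs.

```python
import json


def s3_log_bucket_policy(bucket: str) -> dict:
    """Allow listing the bucket and reading/writing objects in it."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(s3_log_bucket_policy("<your-logging-bucket>"), indent=2))
```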

Attach the AirflowS3Access policy to the RemoteAgentsRole role you created and added to the service account annotations in Step 8. Replace <your-account-id> with your AWS account ID.

Update the commonEnv section in your values.yaml file to write the logs to S3. Replace <your-logging-bucket> with the name of your logging bucket and <your-deployment-id> with the ID of your Deployment.
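A few Airflow logging settings drive remote logging to S3. The following Python sketch shows them as environment variables. The base folder matches the s3://<your-logging-bucket>/<your-deployment-id> layout used for the Bucket URL in this step. The aws_default connection ID is an assumption.

```python
def s3_remote_logging_env(bucket: str, deployment_id: str,
                          conn_id: str = "aws_default") -> dict:
    """Airflow logging settings that write task logs under s3://<bucket>/<deployment_id>."""
    return {
        "AIRFLOW__LOGGING__REMOTE_LOGGING": "True",
        "AIRFLOW__LOGGING__REMOTE_BASE_LOG_FOLDER": f"s3://{bucket}/{deployment_id}",
        # Assumption: this connection resolves credentials from the environment,
        # such as the IAM role attached to the service account.
        "AIRFLOW__LOGGING__REMOTE_LOG_CONN_ID": conn_id,
    }


if __name__ == "__main__":
    print(s3_remote_logging_env("<your-logging-bucket>", "<your-deployment-id>"))
```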

Update the Helm chart with the new values.yaml file. After the next Dag run, you should be able to see the logs in your S3 bucket.

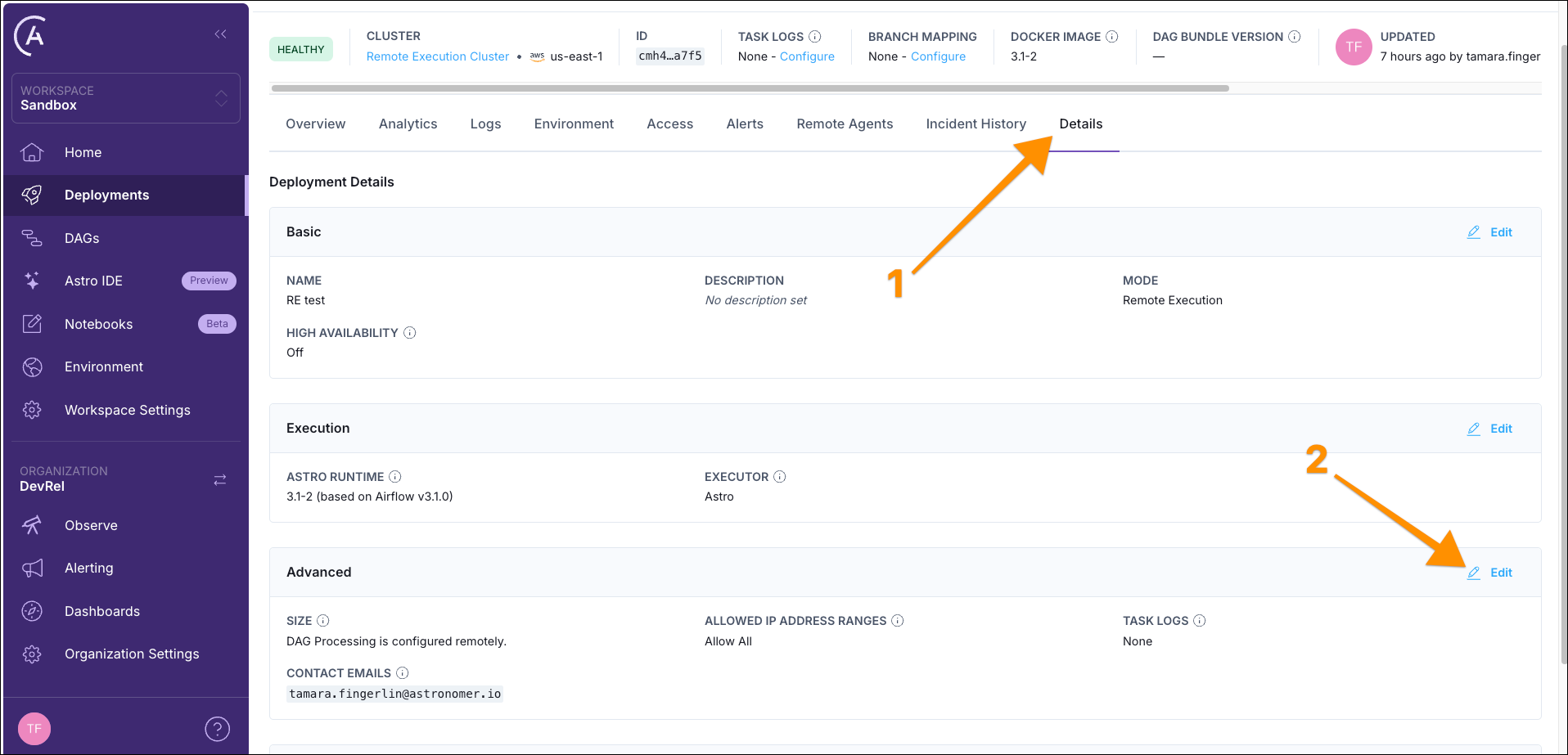

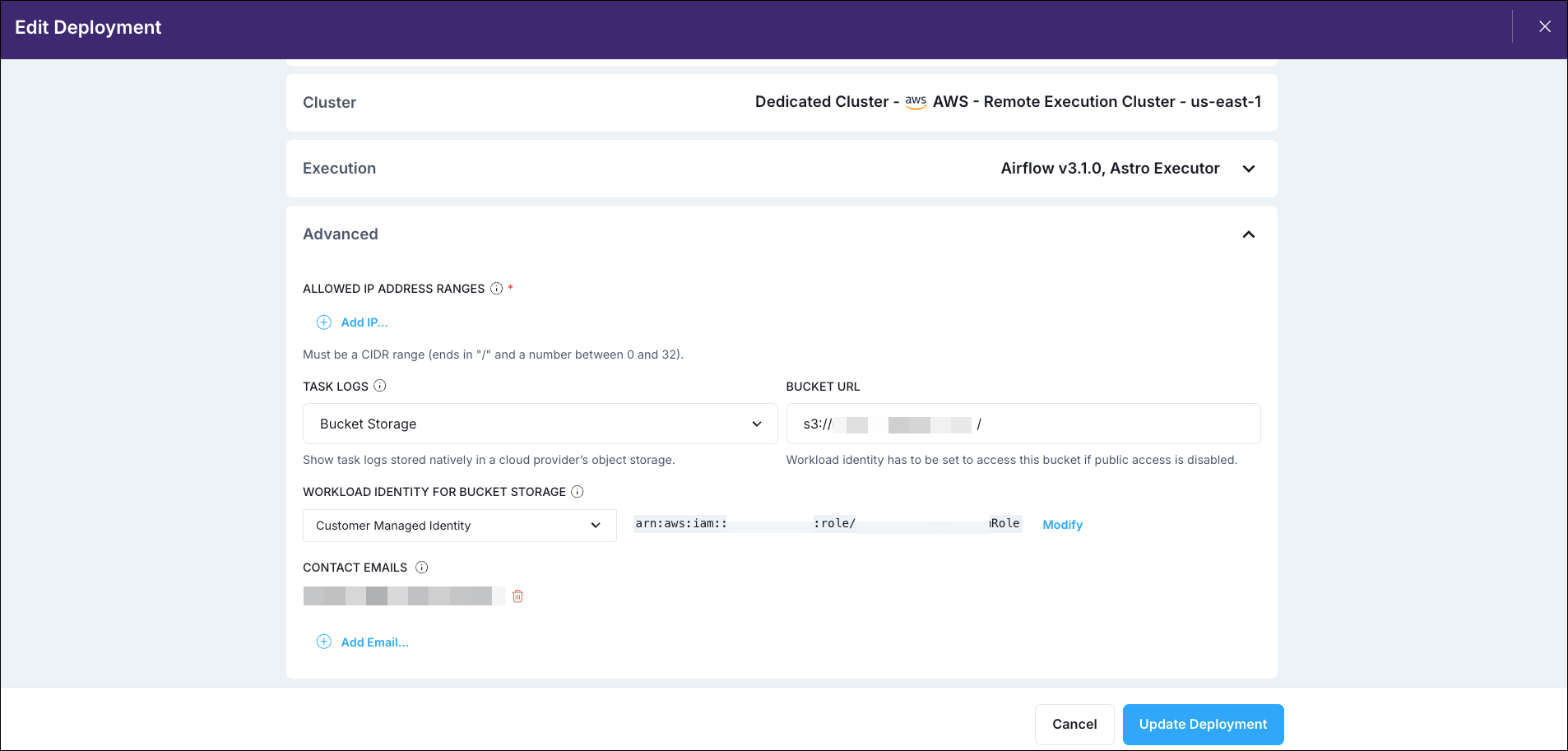

To see the logs in the Airflow UI, you need to configure the Astro Deployment to use the S3 bucket for task logs. In the Astro UI, navigate to your Deployment and click the Details tab. Click Edit in the Advanced section.

Select Bucket Storage in the Task Logs field and add the Bucket URL as s3://<your-logging-bucket>/<your-deployment-id>. Select Customer Managed Identity in the Workload Identity for Bucket Storage field, enter your RemoteAgentsRole IAM role ARN as the Workload Identity ARN, and then run the provided bash script.

Now you should be able to see the task logs in the Airflow UI.