Revolutionizing Data Orchestration and MLOps with Apache Airflow® and Astronomer at Chiper

Introduction to Pioneering Data Orchestration at Chiper

At Chiper, we are dedicated to empowering local commerce by providing a digital ecosystem that connects and enhances the operations of small and medium-sized stores. Our mission is to revolutionize the traditional retail market through technology, offering a seamless and efficient way for store owners to manage their inventory, pricing, and distribution.

As leaders in the digitalization of retail in Latin America, our journey to enhance data processes and implement robust MLOps practices has been central to our innovative approach. Traditional methods were becoming inefficient for our rapidly expanding business, which led us to embrace Apache Airflow® for its robustness, scalability, and community support. The integration of Airflow, along with Astronomer's platform, has significantly improved our data orchestration and workflow management systems, setting a new standard in retail technology. (Visit our website: https://growth.chiper.co/co/stores).

In this post, we cover how we implemented a solid MLOps pipeline and data orchestration with the help of Airflow across multiple use cases.

Advanced Cronjob Orchestration with Airflow

The integration of Apache Airflow® significantly transformed our approach to cronjob orchestration. Airflow's DAGs (Directed Acyclic Graphs) provided a flexible way to define and manage workflows, allowing us to easily visualize task dependencies and progress. We leveraged Airflow’s extensive operator library, particularly the KubernetesPodOperator, to dynamically create and manage Kubernetes pods. This enabled us to run isolated tasks in containers, improving resource utilization and scalability.
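
Airflow derives the execution order of a DAG's tasks from their declared dependencies. As a standard-library illustration only (this is not Airflow's scheduler, and the task names are hypothetical), the sketch below uses Python's `graphlib` (3.9+) to group tasks into batches that could run in parallel. This is the same ordering that a DAG written as `[extract_orders, extract_routes] >> transform >> load_warehouse >> notify` expresses.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each key maps to the set of tasks it depends on
# (its upstream tasks), like "[a, b] >> c" in an Airflow DAG file.
dependencies = {
    "extract_orders": set(),
    "extract_routes": set(),
    "transform": {"extract_orders", "extract_routes"},
    "load_warehouse": {"transform"},
    "notify": {"load_warehouse"},
}


def execution_batches(graph):
    """Group tasks into batches whose members could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for deterministic output
        batches.append(ready)
        sorter.done(*ready)  # mark the batch finished to unlock downstream tasks
    return batches


for number, batch in enumerate(execution_batches(dependencies), start=1):
    print(f"batch {number}: {batch}")
```

In an actual DAG, each node would be an operator, for example a KubernetesPodOperator that runs its step in an isolated pod. The `CycleError` raised by `prepare()` corresponds to the "acyclic" requirement in a DAG.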

Further, we implemented custom sensors and hooks in Airflow to monitor external triggers and system states, so that our workflows respond to real-time events and changes. For example, one process has to email our allied carriers the cost of each day’s shipping routes, plus a weekly cost invoice. In the past, this was a tedious manual task: if a carrier ran no shipping routes on a given day, we had to remove it from the report by hand. It could also become confusing, because there were many reports to send, some daily and some weekly. With Airflow, this process is now automated.
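
The core of that automation is deciding what each report contains. The following is a minimal sketch that assumes route records arrive as `(carrier, date, cost)` tuples; the record shape and carrier names are hypothetical. It totals costs per carrier for one day or one week and drops carriers with no routes, which is the step that used to be done by hand. Building and sending the emails is left out.

```python
from datetime import date, timedelta


def _totals(records, carriers, in_period):
    """Sum route costs per carrier for records whose date satisfies in_period."""
    totals = {carrier: 0.0 for carrier in carriers}
    counts = {carrier: 0 for carrier in carriers}
    for carrier, route_date, cost in records:
        if carrier in totals and in_period(route_date):
            totals[carrier] += cost
            counts[carrier] += 1
    # Drop carriers that ran no routes in the period (previously removed by hand).
    return {c: round(totals[c], 2) for c in carriers if counts[c] > 0}


def daily_report(records, carriers, day):
    """Cost per carrier for a single day."""
    return _totals(records, carriers, lambda d: d == day)


def weekly_invoice(records, carriers, week_start):
    """Cost per carrier for the seven days starting at week_start."""
    week_end = week_start + timedelta(days=7)
    return _totals(records, carriers, lambda d: week_start <= d < week_end)


# Hypothetical sample data.
carriers = ["CarrierA", "CarrierB", "CarrierC"]
routes = [
    ("CarrierA", date(2024, 3, 4), 120.0),
    ("CarrierA", date(2024, 3, 4), 80.0),
    ("CarrierB", date(2024, 3, 5), 95.5),
]
print(daily_report(routes, carriers, date(2024, 3, 4)))    # {'CarrierA': 200.0}
print(weekly_invoice(routes, carriers, date(2024, 3, 4)))  # {'CarrierA': 200.0, 'CarrierB': 95.5}
```

In Airflow, the daily and weekly reports could live in separate DAGs scheduled with the `@daily` and `@weekly` presets, each calling the matching function.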

Streamlining CI/CD with Jenkins Integration

Our CI/CD pipeline, powered by Jenkins, plays a pivotal role in maintaining a smooth and efficient deployment process. Jenkins' versatility in handling various build and test environments made it an ideal choice for our needs. Integrating Jenkins with Airflow allowed us to automate the testing and deployment of our Airflow DAGs and related components.
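
A Jenkins stage can run a simple check over the DAG folder before deploying. The sketch below is an assumed example rather than our actual pipeline. It compiles every `.py` file without executing it, so it catches syntax errors but not import errors. If Airflow is installed in the CI image, a more thorough check is to load the folder with `airflow.models.DagBag` and inspect its `import_errors`.

```python
import sys
from pathlib import Path


def find_syntax_errors(dag_folder):
    """Compile every .py file under dag_folder; return {path: error message}.

    compile() parses the source without executing it, so this catches syntax
    errors only. Missing imports or bad DAG arguments are not detected.
    """
    errors = {}
    for path in sorted(Path(dag_folder).rglob("*.py")):
        try:
            compile(path.read_text(encoding="utf-8"), str(path), "exec")
        except SyntaxError as exc:
            errors[str(path)] = f"line {exc.lineno}: {exc.msg}"
    return errors


def main(argv):
    """Return a process exit code: 1 if any DAG file fails to compile, else 0."""
    folder = argv[0] if argv else "dags"  # hypothetical default folder name
    problems = find_syntax_errors(folder)
    for file, message in problems.items():
        print(f"{file}: {message}", file=sys.stderr)
    return 1 if problems else 0
```

A Jenkins stage could run this as a script that ends with `sys.exit(main(sys.argv[1:]))`. A non-zero exit code then fails the build before anything is deployed.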

To ensure the robustness of our code, we incorporated unit tests that verify the internal logic of the code. These tests are crucial in identifying and resolving issues at an early stage, thus maintaining the high standards of our software development process.
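
The sketch below shows the kind of test involved by checking a small scheduling helper. The helper `reports_due` and its Monday rule are hypothetical examples, not code from our repository.

```python
import unittest
from datetime import date


def reports_due(run_date):
    """Hypothetical helper: the daily report always runs; the weekly invoice only on Mondays."""
    due = ["daily_cost_report"]
    if run_date.weekday() == 0:  # Monday
        due.append("weekly_invoice")
    return due


class ReportsDueTest(unittest.TestCase):
    def test_weekday_only_daily(self):
        # 2024-03-05 is a Tuesday.
        self.assertEqual(reports_due(date(2024, 3, 5)), ["daily_cost_report"])

    def test_monday_includes_weekly(self):
        # 2024-03-04 is a Monday.
        self.assertEqual(
            reports_due(date(2024, 3, 4)),
            ["daily_cost_report", "weekly_invoice"],
        )
```

Both `python -m unittest` and `pytest` discover `TestCase` subclasses like this one. A Jenkins stage can therefore run either command and fail the build on a non-zero exit code.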

Additionally, we integrated a SonarQube stage into our Jenkins pipeline to enforce compliance with the policies established by our MLOps team. SonarQube scans our code for quality issues and vulnerabilities and checks that it follows software development best practices. This extra layer of quality assurance streamlines our deployment process and aligns it more closely with our organizational standards for quality and reliability.

Optimizing Vertex AI Tasks Using Airflow

Incorporating Vertex AI into our data processing pipeline opened new possibilities in handling compute-intensive machine learning tasks. Airflow’s ability to trigger and monitor Vertex AI processes provided us with a high degree of control and visibility over these tasks. We utilized custom operators in Airflow to interface with Vertex AI, enabling the seamless initiation of model training and evaluation jobs. This integration was particularly beneficial for managing long-running and resource-intensive tasks, as Airflow’s scheduling and monitoring capabilities ensured optimal resource utilization and timely completion of these processes.

For example, a typical Vertex AI workflow might involve the following sequence of tasks: data preprocessing, model training, model evaluation, and model deployment. In this context, long-running or resource-intensive tasks often include training deep learning models on large datasets or running extensive hyperparameter optimization to refine model performance. Vertex AI lets us monitor each step of the process, but doing so is a manual task. It quickly becomes inefficient because these pipelines can take a long time to complete. Airflow’s monitoring capabilities remove that burden: developers no longer need to keep watching Vertex AI and can wait for Airflow to notify them of the outcome of each Vertex AI pipeline run.
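
Airflow sensors follow a poll-until-done pattern controlled by parameters such as `poke_interval` and `timeout` on `BaseSensorOperator`. The framework-free sketch below shows that pattern for a long-running Vertex AI pipeline. `get_state` is a hypothetical callable that returns the pipeline state as a string, for instance a thin wrapper around the Vertex AI SDK. The terminal state names are modelled on Vertex AI's `PipelineState` values.

```python
import time

# Terminal states, modelled on the names in Vertex AI's PipelineState enum.
TERMINAL_STATES = frozenset({
    "PIPELINE_STATE_SUCCEEDED",
    "PIPELINE_STATE_FAILED",
    "PIPELINE_STATE_CANCELLED",
})


def wait_for_pipeline(get_state, timeout_s=6 * 3600, poll_interval_s=60,
                      sleep=time.sleep, clock=time.monotonic):
    """Poll get_state() until the pipeline reaches a terminal state.

    Returns the terminal state, or raises TimeoutError once timeout_s has
    elapsed. get_state is a hypothetical zero-argument callable.
    """
    deadline = clock() + timeout_s
    while True:
        state = get_state()
        if state in TERMINAL_STATES:
            return state
        if clock() >= deadline:
            raise TimeoutError(f"pipeline still {state} after {timeout_s} s")
        sleep(poll_interval_s)
```

Injecting `sleep` and `clock` keeps the function testable without real waiting. Once it returns, a downstream task can send the notification. For multi-hour runs, a sensor in `reschedule` mode or a deferrable operator avoids holding a worker slot while waiting.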

Implementing Multiple Airflow Instances for Strategic Environment Management

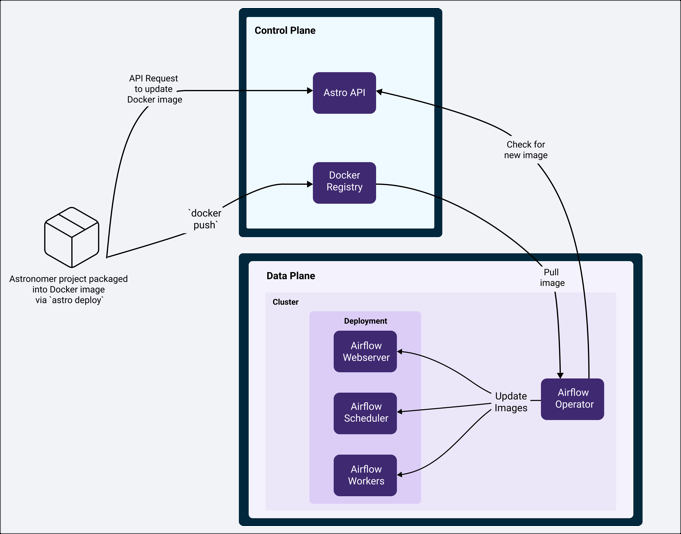

Deploying multiple Airflow instances on Astronomer was a strategic decision aimed at creating a robust and isolated environment setup. Astronomer, for those who may not be familiar, is a platform designed to simplify the deployment, management, and scaling of Apache Airflow®. It provides a cloud-native environment that is optimized for running Airflow, making it easier for teams to implement, monitor, and scale their data workflows.

Using Astronomer, we were able to maintain separate Airflow instances for different stages of development and operational needs. This architecture allowed for a clear distinction between environments. For instance, our development instance was configured with additional logging and debugging tools, tailored for a development setting where experimentation and testing are frequent. In contrast, the production instance was optimized for performance and reliability, ensuring that our critical data processing tasks run smoothly and efficiently.
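
One way to express per-instance differences in code is to select settings from an environment variable that is set differently on each Astronomer deployment. The variable name `DEPLOYMENT_ENV` and the values below are hypothetical. Airflow's own log level can also be set per deployment through the `AIRFLOW__LOGGING__LOGGING_LEVEL` environment variable.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvSettings:
    """Settings that differ between Airflow instances (illustrative fields)."""
    log_level: str
    retries: int
    debug_tools: bool


# Hypothetical per-environment values; tune them to your own deployments.
SETTINGS = {
    "dev": EnvSettings(log_level="DEBUG", retries=0, debug_tools=True),
    "prod": EnvSettings(log_level="INFO", retries=3, debug_tools=False),
}


def current_settings(environ=None):
    """Return settings for the environment named by the hypothetical DEPLOYMENT_ENV variable."""
    environ = os.environ if environ is None else environ
    # Default to dev so a missing variable never silently enables prod behaviour.
    name = environ.get("DEPLOYMENT_ENV", "dev")
    if name not in SETTINGS:
        raise ValueError(f"unknown environment {name!r}; expected one of {sorted(SETTINGS)}")
    return SETTINGS[name]


settings = current_settings()
default_args = {"retries": settings.retries}  # e.g. passed as a DAG's default_args
```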

This separation ensured that our development activities did not impact the production workflows. It enabled us to test new features and updates in the development instance without risking the stability of our production environment. The flexibility and scalability provided by Astronomer played a crucial role in this strategic implementation, allowing us to manage our Airflow instances in a way that best suited our organizational needs and workflow demands.

Real-Time Monitoring and Alerting with Slack Integration

Implementing real-time monitoring and alerting was a critical aspect of our Airflow deployment. Airflow’s native monitoring capabilities were augmented with custom alerting mechanisms that integrated with Slack. We developed a series of custom plugins and hooks that allowed us to send real-time alerts to specific Slack channels based on the status of our workflows. These alerts included detailed information such as task failure reasons, logs, and retry attempts. This level of detail was instrumental in enabling our team to quickly diagnose and resolve issues, thereby maintaining high uptime and reliability of our data processes.
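
The sketch below shows the general shape of such an alert. The message format and function names are hypothetical, and it posts a plain JSON `text` payload to a Slack incoming webhook. In Airflow, a helper like this would typically be called from a task's `on_failure_callback`, which receives the task context. `context["task_instance"]` exposes `dag_id`, `task_id`, `try_number`, and `log_url`, and `context["exception"]` holds the failure reason.

```python
import json
import urllib.request


def build_failure_alert(dag_id, task_id, try_number, log_url, error,
                        log_lines=(), max_log_lines=5):
    """Build a Slack incoming-webhook payload describing a failed task."""
    lines = [
        f":red_circle: Task failed: *{dag_id}.{task_id}*",
        f"Attempt {try_number}",
        f"Reason: {error}",
        f"Logs: <{log_url}|open in Airflow>",  # Slack's <url|label> link syntax
    ]
    # Keep only the most recent log lines so the message stays readable.
    tail = list(log_lines)[-max_log_lines:] if max_log_lines > 0 else []
    if tail:
        lines.append("Last log lines:")
        lines.extend(tail)
    return {"text": "\n".join(lines)}


def post_to_slack(webhook_url, payload, timeout_s=10):
    """POST the payload as JSON to a Slack incoming webhook; return the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        return response.status
```

Each incoming webhook is bound to one channel, so routing alerts to specific channels comes down to choosing the right webhook URL. That URL is best kept in an Airflow connection or secret rather than in code.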

MLOps Consistency: From Simple Cronjobs to Complex ML Models

Establishing a consistent and reliable MLOps pipeline was a key objective in our adoption of Airflow. The platform’s flexibility in handling various types of workflows made it an ideal choice for deploying both simple cronjobs and complex machine learning models. We developed custom ML operators and sensors in Airflow to handle the various stages of the ML model lifecycle, including data preprocessing, model training, evaluation, and deployment. This approach ensured that our ML models were not only deployed efficiently but also monitored and managed throughout their lifecycle, giving us insight into model performance and opportunities for optimization.
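
One lifecycle step such operators handle is deciding whether a newly trained model should replace the one in production. The sketch below is a hypothetical promotion rule, not our actual policy. The metric names, the improvement threshold, and the task ids are illustrative assumptions. In a DAG, `choose_next_task` could back a branching task, for example one defined with Airflow's `@task.branch` decorator, which returns the id of the task to run next.

```python
def should_deploy(candidate, production, metric="f1", min_improvement=0.01,
                  higher_is_better=True):
    """Return True if the candidate beats production on `metric` by at least min_improvement.

    candidate and production are dicts of evaluation metrics; production is
    None when no model has been deployed yet.
    """
    if production is None:
        return True  # nothing to compare against on the first deployment
    gain = candidate[metric] - production[metric]
    if not higher_is_better:  # e.g. RMSE, where lower values are better
        gain = -gain
    return gain >= min_improvement


def choose_next_task(candidate, production, **kwargs):
    """Map the decision to downstream task ids (hypothetical names) for a branching task."""
    if should_deploy(candidate, production, **kwargs):
        return "deploy_model"
    return "skip_deployment"


print(choose_next_task({"f1": 0.90}, {"f1": 0.85}))   # deploy_model
print(choose_next_task({"f1": 0.855}, {"f1": 0.85}))  # skip_deployment
```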

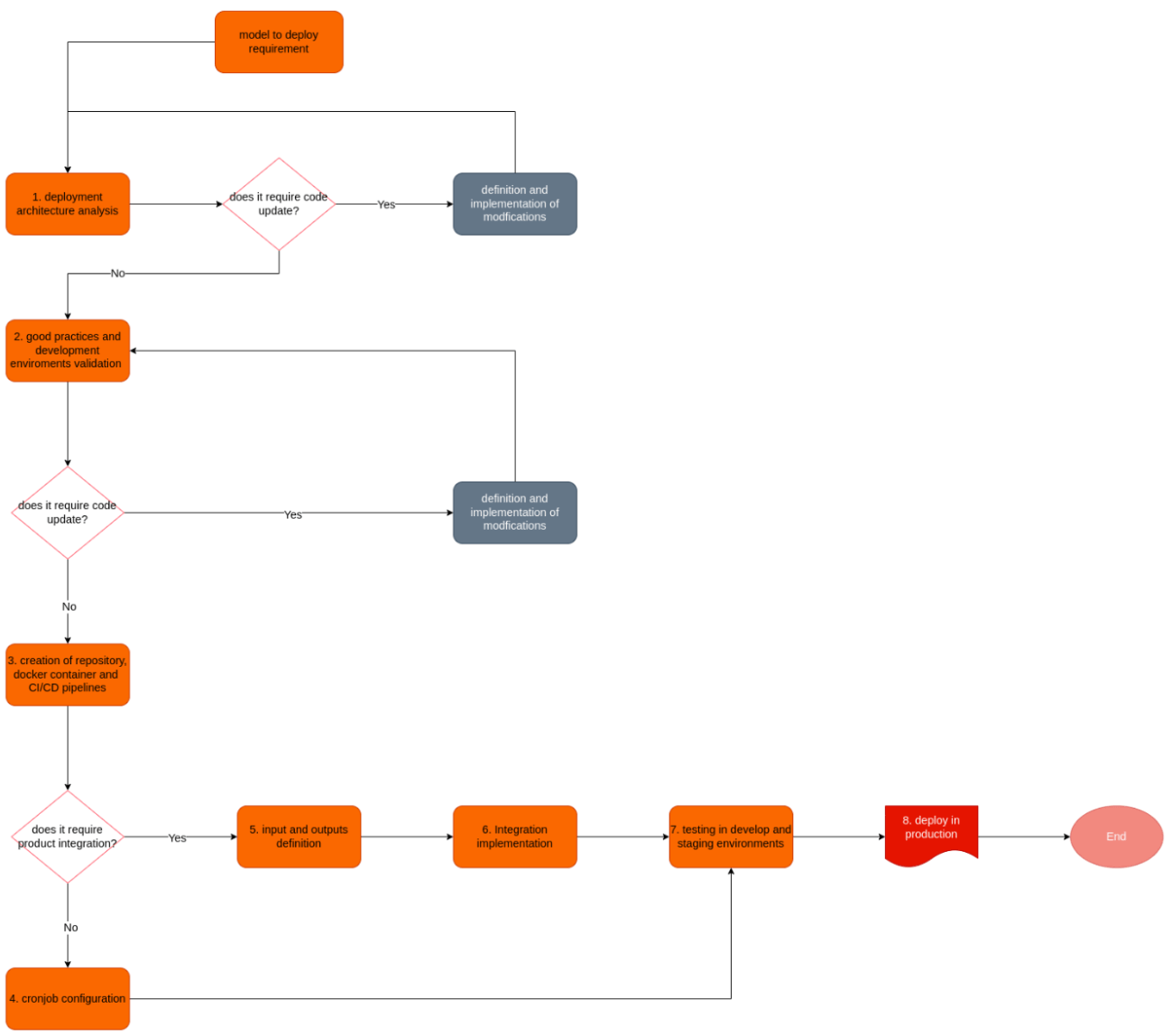

Cronjob planning, design, and deployment workflow

Continuous deployment cycle for models

Impact on Key Business Operations

The adoption of Airflow and Astronomer has had a profound impact on our key business operations. The automation and efficiency brought by these tools have enabled more accurate and timely updates to our inventory management systems, optimized our distribution and routing algorithms, and allowed for more dynamic and responsive pricing strategies. These enhancements have translated into significant business value, driving growth, improving customer satisfaction, and giving us a competitive edge in the market.

Conclusion: Setting a New Standard in Data Orchestration and Machine Learning

Our journey with Apache Airflow® and Astronomer has not only transformed our data orchestration capabilities but also set a new standard in how we approach machine learning operations. This system is a cornerstone of our continuous pursuit of operational excellence and business innovation, always guided by our objective: empowering small shopkeepers.