Data Orchestration: The Dividing Line Between Generative AI Success and Failure

As organizations strive to leverage generative AI (GenAI), they often encounter a gap between its promise and the business value they actually realize. At Astronomer, we’ve seen firsthand how integrating GenAI into operational processes can transform businesses. But we’ve also observed that the key to success lies in orchestrating the valuable enterprise data needed to fuel these AI models.

This blog post outlines the critical role of data orchestration in deploying generative AI at scale. I’ll highlight real-world customer use cases where Apache Airflow®, managed by Astronomer’s Astro platform, has been instrumental, before wrapping up with useful next steps to get you started.

What’s the Role of Data Orchestration in the GenAI Stack?

Generative AI models, with their extensive pre-trained knowledge and impressive ability to generate content, are undeniably powerful. However, their true value emerges when they are combined with the institutional knowledge captured in your rich, proprietary datasets and operational data streams. Successful deployment of GenAI involves orchestrating workflows that feed valuable data from sources across the enterprise into the AI models, grounding their outputs in relevant, up-to-date business context.

Integrating data into GenAI models (for inference, prompting, or fine-tuning) involves complex, resource-intensive tasks that need to be optimized and executed repeatedly. Data orchestration tools sit at the center of the emerging AI application stack, providing a framework that not only simplifies these tasks but also makes it easier for engineering teams to experiment with the latest innovations from the AI ecosystem.

Orchestrating these tasks ensures that computational resources are used efficiently, that workflows run in the right order and can be adjusted as requirements change, and that deployments are stable and scalable. This capability is especially valuable where generative models must be frequently updated or retrained on new data, or where multiple experiments and model versions must be managed at the same time.
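To make task orchestration concrete, here is a toy sketch in plain Python (deliberately not Airflow) of two things an orchestrator handles for you: running tasks in dependency order and retrying transient failures. The task names (`extract_docs`, `embed_chunks`, `refresh_index`) and the `run_pipeline` helper are hypothetical; in Airflow, the same structure would be declared as a DAG, with ordering and retries handled by the scheduler.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Callable, Dict, List, Set


def run_pipeline(
    tasks: Dict[str, Callable[[], None]],
    deps: Dict[str, Set[str]],
    max_retries: int = 2,
) -> List[str]:
    """Run each task only after all of its upstream tasks have succeeded.

    `deps` maps a task name to the set of task names it depends on.
    A failing task is retried up to `max_retries` extra times; if it still
    fails, the exception propagates and no downstream task runs.
    Returns the task names in the order they completed.
    """
    graph = {name: deps.get(name, set()) for name in tasks}
    completed: List[str] = []
    for name in TopologicalSorter(graph).static_order():
        attempts = 0
        while True:
            try:
                tasks[name]()
                break
            except Exception:
                attempts += 1
                if attempts > max_retries:
                    raise  # give up: downstream tasks never start
        completed.append(name)
    return completed


# Hypothetical usage: a three-step GenAI data pipeline with one flaky step.
calls = {"embed_chunks": 0}


def flaky_embed() -> None:
    # Fails on the first attempt to simulate a transient API error.
    calls["embed_chunks"] += 1
    if calls["embed_chunks"] == 1:
        raise RuntimeError("transient failure")


order = run_pipeline(
    tasks={
        "extract_docs": lambda: None,
        "embed_chunks": flaky_embed,
        "refresh_index": lambda: None,
    },
    deps={"embed_chunks": {"extract_docs"}, "refresh_index": {"embed_chunks"}},
)
print(order)  # ['extract_docs', 'embed_chunks', 'refresh_index']
```

The flaky embedding step succeeds on its second attempt, so the index refresh still runs; an orchestrator adds scheduling, parallelism, and monitoring on top of this basic contract.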

Apache Airflow® has become the standard for this kind of data orchestration: it manages complex workflows and enables teams to take AI applications from prototype to production efficiently. When run as part of Astronomer’s managed service, Astro, it also provides the scalability and reliability that business applications demand, along with a layer of governance and transparency essential for managing AI and machine learning operations.

The following examples illustrate the role of data orchestration in GenAI applications.

Conversational AI for Support Automation

A leading digital travel platform already used Airflow on Astro to orchestrate the data flows behind its analytics and machine learning pipelines. Keen to realize the potential of GenAI across the business, the company’s engineers extended Astro to support a new trip-planning tool, powered by large language models (LLMs) and streams of operational data, that recommends destinations and accommodations to millions of users daily.

This type of conversational AI, often deployed as chat or voice bots, requires well-curated data to avoid low-quality responses and deliver a meaningful user experience. Because the company has standardized on Astro to orchestrate both its existing ML and operational pipelines and its new GenAI pipelines, the trip-planning tool can surface more relevant recommendations while offering a seamless browse-to-booking experience.

Astronomer’s own support application, Ask Astro, uses LLMs and retrieval-augmented generation (RAG) to provide domain-specific answers by integrating knowledge from multiple data sources. By publishing Ask Astro as an open-source project, we show how Airflow simplifies both the management of data streams and the monitoring of AI performance in production.
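The retrieval step at the heart of RAG can be sketched in a few lines. This is not Ask Astro’s implementation: to stay within the Python standard library, it swaps the embedding-based vector search a real system would use for simple word-overlap scoring, and the function names and sample documents are hypothetical. In a production setup, Airflow tasks would keep the document collection fresh, while an embedding model and a vector database would handle the ranking.

```python
import re
from typing import Dict, List, Set, Tuple


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: Dict[str, str], k: int = 2) -> List[Tuple[str, str]]:
    """Return the k (source, text) pairs that share the most words with the question.

    Word overlap is a stand-in for embedding similarity. Ties keep the
    original document order because Python's sort is stable.
    """
    q = tokenize(question)
    ranked = sorted(
        docs.items(),
        key=lambda item: len(q & tokenize(item[1])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, passages: List[Tuple[str, str]]) -> str:
    """Ground the LLM by placing retrieved passages, tagged with their sources, before the question."""
    context = "\n".join(f"[{source}] {text}" for source, text in passages)
    return (
        "Answer using only the context below and cite sources.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical sample documents drawn from different sources.
docs = {
    "docs/retries.md": "Set retries on a task to rerun it after a failure.",
    "docs/pools.md": "Pools limit how many tasks run at once.",
    "forum/123": "Deferrable operators free up worker slots while waiting.",
}
question = "How do I rerun a task after a failure?"
top = retrieve(question, docs, k=1)
print(build_prompt(question, top))
```

Replacing word overlap with embeddings changes only the sort key in `retrieve`; the prompt-assembly step, which is what grounds the model’s answer, stays the same.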

Content Generation

Laurel, an AI company focused on automating timekeeping and billing for professional services, illustrates content generation, another common GenAI use case. The company employs AI to create timesheets and billing summaries from detailed documentation and transactional data. Managing these upstream data flows and maintaining client-specific models is complex and requires robust orchestration.

Astro serves as a "single pane of glass" for Laurel’s data, handling massive quantities of user data efficiently. By incorporating machine learning into its Airflow pipelines, Laurel not only automates critical processes for its clients but also makes them twice as efficient.

Reasoning and Analysis

Several support organizations use AI models orchestrated by Airflow to reroute support tickets to agents with the right expertise, significantly reducing resolution times. This shows how AI reasoning can supply the business logic behind more efficient operations.

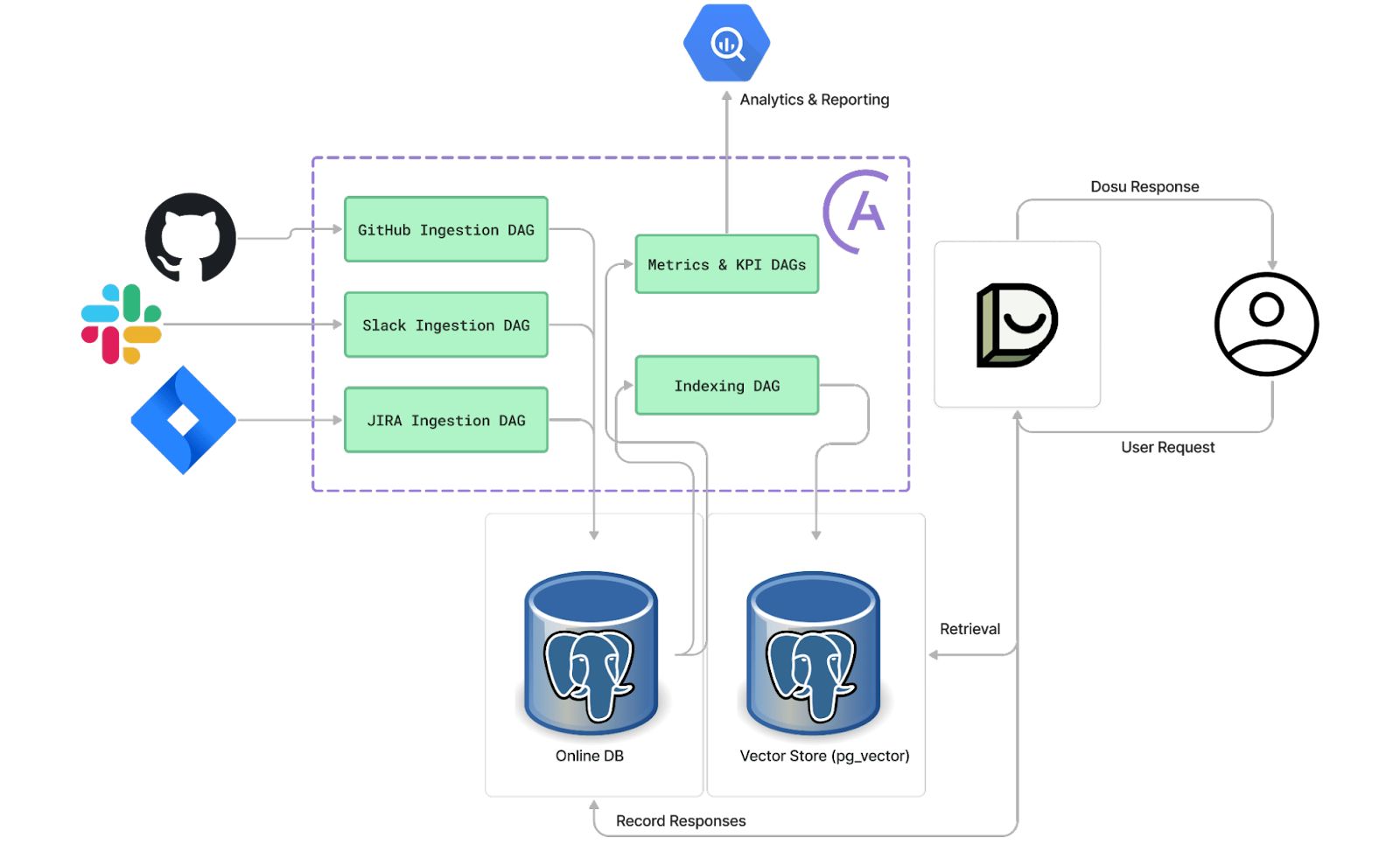

Dosu, an AI platform for software engineering teams, uses similar orchestration to manage data pipelines that ingest and index information from Slack, github, Jira, and so on. Reliable, maintainable, and monitorable data pipelines are crucial for Dosu’s AI applications, which help categorize and assign tasks automatically for major software projects like LangChain.

Figure 1: Dosu’s AI workflows orchestrated by Airflow running in Astro
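Ingestion pipelines that pull from tools like Slack, GitHub, and Jira run on a schedule, so a rerun or retry should never duplicate work. Here is a minimal sketch of one common pattern: hash each record’s content and re-index only records that are new or changed. It is not Dosu’s implementation. The `(source, record_id, text)` record format, the `ingest` function, and the in-memory `index` dict are hypothetical stand-ins for fetching from each tool’s API and writing to a persistent search or vector index.

```python
import hashlib
from typing import Dict, Iterable, List, Tuple

Record = Tuple[str, str, str]  # (source, record_id, text): hypothetical format


def content_hash(text: str) -> str:
    """Stable fingerprint of a record's content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def ingest(records: Iterable[Record], index: Dict[str, Tuple[str, str]]) -> List[str]:
    """Upsert records into `index`, keyed by "source:record_id".

    Returns the keys that were (re)indexed. Records whose content hash is
    unchanged are skipped, so re-running the same batch does no new work.
    """
    updated: List[str] = []
    for source, record_id, text in records:
        key = f"{source}:{record_id}"
        digest = content_hash(text)
        existing = index.get(key)
        if existing is not None and existing[0] == digest:
            continue  # identical content is already indexed
        index[key] = (digest, text)  # a real pipeline would re-embed `text` here
        updated.append(key)
    return updated


# Hypothetical usage with one record from each source.
index: Dict[str, Tuple[str, str]] = {}
batch = [
    ("slack", "msg-1", "Deploy failed on staging"),
    ("github", "issue-42", "Add retry to the ingestion task"),
    ("jira", "PROJ-7", "Triage flaky tests"),
]
print(ingest(batch, index))  # ['slack:msg-1', 'github:issue-42', 'jira:PROJ-7']
print(ingest(batch, index))  # []
batch[1] = ("github", "issue-42", "Add retry and alerting to the ingestion task")
print(ingest(batch, index))  # ['github:issue-42']
```

Keying on content hashes is what makes scheduled reruns and retries safe: a task that re-sends the same batch leaves the index unchanged.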

Streamlining Application Development with AI and Airflow

Large language models also aid in code generation and analysis. Dosu and Astro use LLMs for generating code suggestions and managing cloud IDE tasks, respectively. These applications require careful management of data drawn from sources such as GitHub and Jira, ensuring that organizational boundaries are respected and sensitive data is anonymized. Airflow’s orchestration capabilities provide transparency and lineage, giving teams confidence in their data management processes.
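Anonymization is typically a transformation step that runs before any text reaches a model. Here is a minimal sketch using regular-expression redaction. The patterns, placeholder tokens, and `redact` function are illustrative assumptions, not a complete PII solution; production pipelines usually add dedicated PII-detection tooling and review what the patterns miss.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; order matters (emails are replaced before IP addresses).
REDACTIONS: List[Tuple[re.Pattern, str]] = [
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "<EMAIL>"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "<IP_ADDRESS>"),
    # Keeps the key name (group 1) but hides the value that follows ':' or '='.
    (re.compile(r"(?i)\b(api[_-]?key|token)\s*[:=]\s*\S+"), r"\1=<SECRET>"),
]


def redact(text: str) -> str:
    """Replace each pattern match with a placeholder before text is sent to an LLM."""
    for pattern, placeholder in REDACTIONS:
        text = pattern.sub(placeholder, text)
    return text


print(redact("Contact jane.doe@example.com from 10.0.0.12; api_key=abc123 leaked"))
# Contact <EMAIL> from <IP_ADDRESS>; api_key=<SECRET> leaked
```

Because redaction runs before the prompt is assembled, the model only ever sees the placeholders, never the raw values.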

Next Steps for Getting Started with Data Orchestration

By leveraging Airflow’s workflow management and Astronomer’s deployment and scaling capabilities, development teams don’t need to worry about managing infrastructure or the complexities of MLOps. Instead, they are free to focus on data transformation and model development, which accelerates the deployment of GenAI applications while improving their performance and governance.

To help you get started, we recently published our Guide to Data Orchestration for Generative AI. The guide covers the key capabilities required for data orchestration and includes a cookbook of reference architectures for a variety of generative AI use cases.

For those looking to dive deeper into Airflow best practices, we also recommend this resource: 10 Best Practices for Modern Data Orchestration with Airflow.

Our teams are ready to run workshops with you on how Airflow and Astronomer can accelerate your GenAI initiatives. Contact us to schedule your session.