Astro Observe Data Quality: Now in Public Preview with Event-Driven Monitoring


When we introduced data quality monitoring in Astro Observe this past spring, we started with a simple premise: data quality checks work best when they’re connected to the pipelines that create and move your data. Today, we’re excited to announce that Data Quality monitoring in Astro Observe is now available in public preview with powerful new capabilities that fundamentally change when and how you validate data.

Most data quality tools operate independently of orchestration, monitoring your warehouse after data arrives and piecing together context through complex integrations. Astro Observe takes a different approach by embedding data quality monitoring directly at the orchestration layer where your pipelines run. Because quality checks share context with pipeline execution, table lineage, and data product SLAs, you can validate data the moment a job completes, scope monitoring to the tables that power your business-critical data products, and immediately see which data products are at risk when quality issues surface.

The Problem with Waiting

Here’s a scenario most data teams know too well: Your fraud detection pipeline runs at 3:15 AM, processing overnight transactions and flagging suspicious activity. Your scheduled data quality checks don’t run until 8:00 AM. By the time those checks catch that reference data failed to load properly, your fraud model has been scoring transactions against an empty lookup table for hours, missing genuine fraud while falsely flagging legitimate customers. Support tickets are piling up, actual fraudulent transactions have cleared, and you’re now investigating why your model’s accuracy tanked overnight.

The gap between when data arrives and when quality checks run creates a window where silent failures slip through unnoticed. Without visibility into pipeline execution, traditional data quality tools can only check tables on fixed schedules. They don’t know when your pipelines complete or which tables actually power your critical data products. This disconnect forces teams to either monitor everything (creating overwhelming alert noise) or rely on scheduled checks that catch problems too late to prevent downstream impact.

Custom SQL Monitors in Observe: Define Your Logic, Choose Your Timing

Standard data quality checks (monitoring row volumes, tracking null percentages, detecting schema drift) catch common problems and work well on fixed schedules. But every organization has unique business rules that determine whether data is actually trustworthy, and those rules often need validation the moment data lands, not hours later on some predetermined schedule.

Custom SQL monitors in Astro Observe change this dynamic. You define the exact validation logic that matters for your business: checking for duplicate transaction IDs, verifying referential integrity between tables, flagging records where business rules are violated, or ensuring critical timestamp fields fall within expected ranges. You write the SQL that encodes what quality means in your context.
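As a rough illustration of the kind of logic such a monitor might encode, the sketch below runs two of the checks named above (duplicate transaction IDs and referential integrity) as plain SQL. It uses Python's built-in `sqlite3` as a stand-in for a warehouse. The table names (`transactions`, `merchants`), the column names, and the convention that a check passes when its query returns zero rows are all assumptions made for this example, not Astro Observe's configuration format.

```python
import sqlite3

# Hypothetical checks: each query returns the offending rows,
# so an empty result means the check passed.
CHECKS = {
    "duplicate_transaction_ids": """
        SELECT transaction_id, COUNT(*) AS n
        FROM transactions
        GROUP BY transaction_id
        HAVING COUNT(*) > 1
    """,
    "orphaned_merchant_ids": """
        SELECT t.transaction_id
        FROM transactions AS t
        LEFT JOIN merchants AS m ON m.merchant_id = t.merchant_id
        WHERE m.merchant_id IS NULL
    """,
}


def run_checks(conn):
    """Return {check_name: list_of_violating_rows} for every check."""
    return {name: conn.execute(sql).fetchall() for name, sql in CHECKS.items()}


# In-memory SQLite stands in for the warehouse in this sketch.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE merchants (merchant_id INTEGER PRIMARY KEY);
    CREATE TABLE transactions (transaction_id INTEGER, merchant_id INTEGER);
    INSERT INTO merchants VALUES (1), (2);
    INSERT INTO transactions VALUES (100, 1), (101, 2), (101, 2), (102, 9);
""")

results = run_checks(conn)
failed = sorted(name for name, rows in results.items() if rows)
print(failed)
```

Writing each check so that it selects the violating rows, rather than returning a single pass/fail flag, means a failure comes with the records needed to start investigating.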

But here’s what makes custom SQL monitors fundamentally different: you can choose when they run. Configure them on a schedule if that makes sense for your use case (daily aggregation tables, weekly reporting snapshots, monthly reconciliation processes). Or configure them to trigger automatically when data lands in a table. The moment a pipeline writes records to your monitored table, Astro Observe executes your validation logic, catching issues in real time before downstream systems consume bad data.

This event-driven execution works because Astro Observe sits at the orchestration layer with direct visibility into pipeline execution. When an Airflow task completes and writes to Snowflake, Observe knows. It can trigger your custom quality checks immediately rather than waiting for the next scheduled run. For data powering critical use cases like real-time dashboards, operational analytics, or ML model training, this means detecting problems in minutes instead of hours and preventing downstream impact rather than cleaning up after it.
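To make the difference from a fixed schedule concrete, here is a minimal sketch of the event-driven pattern in plain Python. It is not how Astro Observe is implemented. The `MonitorRegistry` class, the event fields (`tables_written`, `row_counts`), and the table name `fraud.reference_data` are hypothetical and exist only to show checks firing in response to a "task completed" signal instead of a clock.

```python
from collections import defaultdict


class MonitorRegistry:
    """Hypothetical registry mapping table names to validation callables."""

    def __init__(self):
        self._monitors = defaultdict(list)

    def on_table_update(self, table, check):
        """Register `check` to run whenever `table` is written."""
        self._monitors[table].append(check)

    def handle_task_completed(self, event):
        """Run every check registered for the tables the finished task wrote."""
        outcomes = {}
        for table in event["tables_written"]:
            for check in self._monitors[table]:
                outcomes[(table, check.__name__)] = check(event)
        return outcomes


def lookup_table_not_empty(event):
    # Assumed event field: row counts reported per written table.
    return event["row_counts"]["fraud.reference_data"] > 0


registry = MonitorRegistry()
registry.on_table_update("fraud.reference_data", lookup_table_not_empty)

# Simulated completion event; the field names are invented for illustration.
event = {
    "task_id": "load_reference_data",
    "tables_written": ["fraud.reference_data"],
    "row_counts": {"fraud.reference_data": 0},
}
outcomes = registry.handle_task_completed(event)
print(outcomes)
```

In the fraud scenario described earlier, a check like this would fail as soon as the reference data load finished with zero rows, rather than at the next scheduled run hours later.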

Beyond Custom Logic: Out-of-the-Box Monitoring for Common Patterns

While custom SQL monitors unlock unlimited flexibility and event-driven execution, you don’t need to write SQL to start monitoring data quality in Astro Observe. Observe offers out-of-the-box monitors that handle the most common validation patterns.

- Data volume checks track row counts over time, alerting when unexpected spikes or drops suggest upstream pipeline failures or data loss.

- Schema consistency monitors detect column type changes that break downstream processing before errors cascade through your data products.

- Data completeness checks track null value percentages across critical fields, surfacing data integrity issues early.

These out-of-the-box monitors run on configurable schedules and require no custom code. Just point them at the tables you want to monitor, set alert thresholds, and Astro Observe handles the rest. They’re ideal for broad coverage across many tables where event-driven execution isn’t critical, letting you reserve custom SQL monitors for the high-value use cases where business-specific validation logic and real-time detection matter most.
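For readers who want a feel for what these three monitor types compute, the sketch below expresses each one as a small Python function. The thresholds, function names, and in-memory data shapes are assumptions chosen for illustration and do not reflect how Astro Observe computes or configures its built-in monitors.

```python
from statistics import mean


def volume_alert(history, today, tolerance=0.5):
    """Flag today's row count if it deviates from the recent mean
    by more than `tolerance` (a fraction of the baseline)."""
    baseline = mean(history)
    return abs(today - baseline) > tolerance * baseline


def null_pct(rows, column):
    """Percentage of rows in which `column` is missing or None."""
    if not rows:
        return 0.0
    nulls = sum(1 for row in rows if row.get(column) is None)
    return 100.0 * nulls / len(rows)


def schema_changes(expected, observed):
    """Return {column: (expected_type, observed_type)} for every column
    whose type changed or that disappeared (observed type is None)."""
    return {
        col: (typ, observed.get(col))
        for col, typ in expected.items()
        if observed.get(col) != typ
    }


# Example inputs (all invented).
volume_flag = volume_alert(history=[1000, 1020, 980], today=400)
email_nulls = null_pct(
    [{"email": "a@x.io"}, {"email": None}, {"email": "b@x.io"}, {"email": None}],
    "email",
)
drift = schema_changes(
    expected={"id": "INTEGER", "amount": "NUMBER"},
    observed={"id": "INTEGER", "amount": "VARCHAR"},
)
print(volume_flag, email_nulls, drift)
```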

Note: Custom SQL monitors are currently available for Snowflake. Out-of-the-box monitors work for both Snowflake and Databricks today.

Full Pipeline Context When Issues Surface

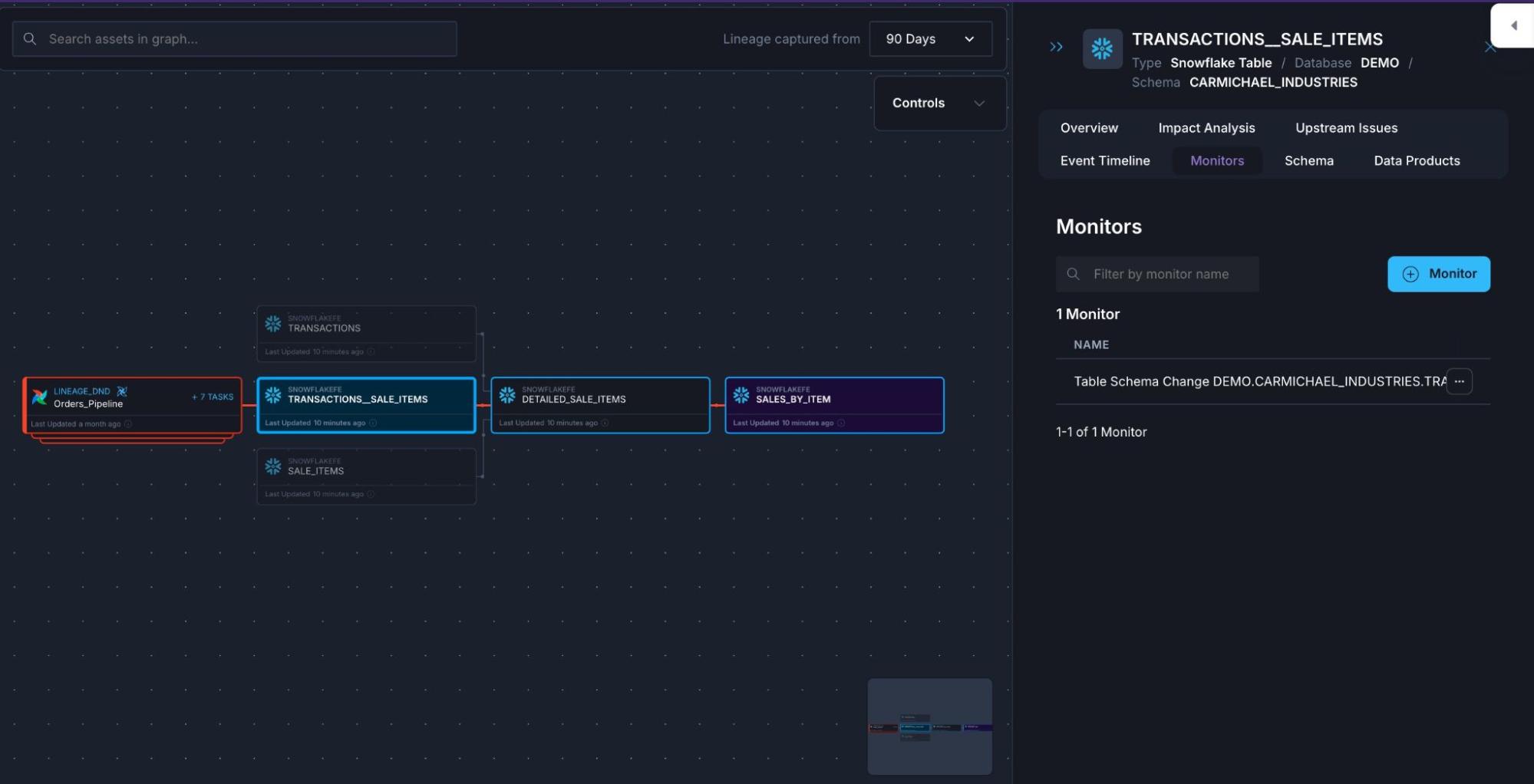

When you configure a custom SQL monitor or set up quality checks on a table in Astro Observe, you immediately see how that table connects to your Airflow Dags—which tasks produce it, what schedule drives its refresh, what upstream datasets it depends on, and which downstream data products consume it. This isn’t inferred through complex integrations. It’s native context that comes from operating where orchestration happens.

When a data quality alert fires, Astro Observe shows you which Airflow Dag and task wrote the failing table, what upstream dependencies fed into it, and which data products downstream are now at risk. Table-level lineage traces data flow not just through Airflow-orchestrated pipelines but across your warehouse tables, helping you understand both upstream data sources and downstream dependencies, including tables not directly managed by your Dags. You’re not hunting for context or bouncing between tools. The connection between pipeline execution, data quality metrics, and downstream impact is right there, making root cause analysis faster and blast radius assessment clearer.
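Blast radius assessment over table lineage amounts to a graph traversal. The sketch below, with an invented lineage graph and a made-up `product:` naming prefix, shows one simple way to list everything downstream of a failing table using a breadth-first walk. It illustrates the idea only and is not Astro Observe's lineage model or API.

```python
from collections import deque

# Hypothetical lineage: each key feeds the tables or data products in its list.
LINEAGE = {
    "raw.transactions": ["staging.transactions_clean"],
    "staging.transactions_clean": [
        "analytics.fraud_scores",
        "analytics.daily_revenue",
    ],
    "analytics.fraud_scores": ["product:fraud_dashboard"],
    "analytics.daily_revenue": ["product:exec_report"],
}


def downstream(lineage, start):
    """Breadth-first walk returning every node reachable from `start`,
    in the order visited (excluding `start` itself)."""
    seen, order, queue = {start}, [], deque([start])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            if child not in seen:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


# A quality check failed on this table; what is at risk?
at_risk = downstream(LINEAGE, "staging.transactions_clean")
products = [node for node in at_risk if node.startswith("product:")]
print(products)
```

Tracking visited nodes in `seen` keeps the walk from revisiting a table that is reachable through more than one path.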

Prioritizing What Matters with the Asset Catalog

Not every table deserves the same level of monitoring attention. Some tables power critical executive dashboards refreshed multiple times per day. Others are staging tables that only live for minutes before being transformed and discarded. Treating everything equally leads to alert fatigue and wasted engineering effort.

Astro Observe’s Asset Catalog discovers tables from your data warehouse connections and surfaces them alongside Airflow Dags, tasks, and datasets. But discovery alone isn’t enough. You need to know which assets actually matter. Table popularity scoring highlights the most frequently accessed tables based on query patterns, helping you prioritize where quality monitoring delivers the highest return.

Use event-driven custom SQL monitors on the high-value tables that power your most critical data products. Apply scheduled out-of-the-box checks for broader coverage across everything else. Let less-critical staging tables run without monitors at all. This prioritization matters at scale. When you’re managing hundreds or thousands of tables, you can’t monitor everything with the same rigor.

Getting Started and What’s Next

If you’re already using Astro Observe, you can connect your Snowflake or Databricks warehouse today and start configuring monitors: scheduled out-of-the-box checks for broad coverage on either warehouse, and event-driven custom SQL monitors (currently Snowflake only) for business-critical validations.

For teams still managing data quality through disconnected tools or fighting fires after bad data has already propagated downstream, Astro Observe offers a different approach: catch issues at the source, validate quality when data lands, and resolve problems faster with full pipeline context built in.

If you’re interested in learning more about data quality in Astro Observe, schedule a demo with a member of our team.
