Astro IDE and Human-in-the-Loop: A First Look at Airflow 3.1 and an AI Agent Comparison


Some of the most exciting moments for the Airflow community are releases. Each one brings new features that enhance the developer experience and expand how we can use Airflow, and last week’s 3.1 release was no different. Alongside UI enhancements and React plugin support, it added a foundational new feature: a first-class way to add human-in-the-loop interaction to dags. For Airflow users, this opens up a whole new area of use cases, from AI workflows to operational business management.

As part of the Astronomer DevRel team, I get to explore new features in the weeks before a release to produce educational content, both for humans and for our friendly, helpful robots: AI coding assistants.

With Astronomer’s in-browser AI-assisted Astro IDE entering public preview and 37% of Astro customers already running at least one Airflow 3 Deployment, it was now time for a test run.

Does the Astro IDE really know all about the latest Airflow features?

Has it learned from my guides and example dags? Is it really better at Airflow than other AI-powered IDEs? And what does it get wrong?

The prompt

Just a week after the 3.1 release, I opened the Astro IDE (you can try it too in a free Astro trial!) and entered a short prompt:

Can you write me a dag with a human in the loop task?

My goal was to evaluate whether the Astro IDE knew about the new feature and would produce a dag using at least one human-in-the-loop operator, so that users could learn about the feature through direct interaction.

I gave no other context to the Astro IDE and used the default “Learning Airflow” project, which at the time of writing is identical to the Astro project created by the astro dev init Astro CLI command. It contains one simple example dag that does not reference human-in-the-loop at all.

The human-in-the-loop feature introduced in Airflow 3.1 (released on 2025-09-25) brings 4 new deferrable operators to the Airflow standard provider that wait until a human (or another system) provides input. This input may be a choice between options, a choice between branches in the dag, or additional data entered through params. The feature is fully integrated into the Airflow UI, which renders forms for humans to fill out, and it also comes with a set of API endpoints so that other systems like Slack or email can be used to gather responses. Learn more about human-in-the-loop and other 3.1 features in our upcoming webinar, What’s new in Airflow 3.1.
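Because the operators are deferrable, a task waiting for a response is handed off to Airflow's triggerer instead of occupying a worker slot. As a rough mental model only (this is plain asyncio, not Airflow code, and every function name below is hypothetical), a deferred human-in-the-loop task behaves like a coroutine that awaits an event set by whoever responds, rather than a loop that keeps polling. The response keys chosen_options and params_input mirror the XCom keys the human-in-the-loop operators use; the values are illustrative.

```python
import asyncio
from typing import Any


async def wait_for_human_response(
    response_ready: asyncio.Event, response: dict[str, Any]
) -> dict[str, Any]:
    """Hypothetical stand-in for a deferred HITL task: suspend until a response exists.

    Nothing polls in a loop here; the coroutine simply awaits the event.
    """
    await response_ready.wait()
    return response


async def simulate_human(
    response_ready: asyncio.Event, response: dict[str, Any], delay: float
) -> None:
    """Hypothetical responder: pretend a person fills out the form after `delay` seconds."""
    await asyncio.sleep(delay)
    response["chosen_options"] = ["Approve"]  # illustrative value
    response["params_input"] = {"batch_size": 1000}  # illustrative value
    response_ready.set()


async def main() -> dict[str, Any]:
    response_ready = asyncio.Event()
    response: dict[str, Any] = {}
    # Start the "task" first; it suspends on the event instead of busy-waiting.
    waiter = asyncio.create_task(wait_for_human_response(response_ready, response))
    await simulate_human(response_ready, response, delay=0.1)
    return await waiter


result = asyncio.run(main())
print(result)
```

In Airflow itself, the waiting happens in a trigger and the response arrives through the UI or the API endpoints; the sketch only illustrates why deferral is cheaper than a sensor that keeps poking.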

Testing the AI-written dag

The Astro IDE made a plan, spent some time reading the docs and looking at our best-practice dags, and after a minute, I had my dag. To my delight, it included several of the new human-in-the-loop operators and even referenced the guide I wrote on the topic in its docstring!

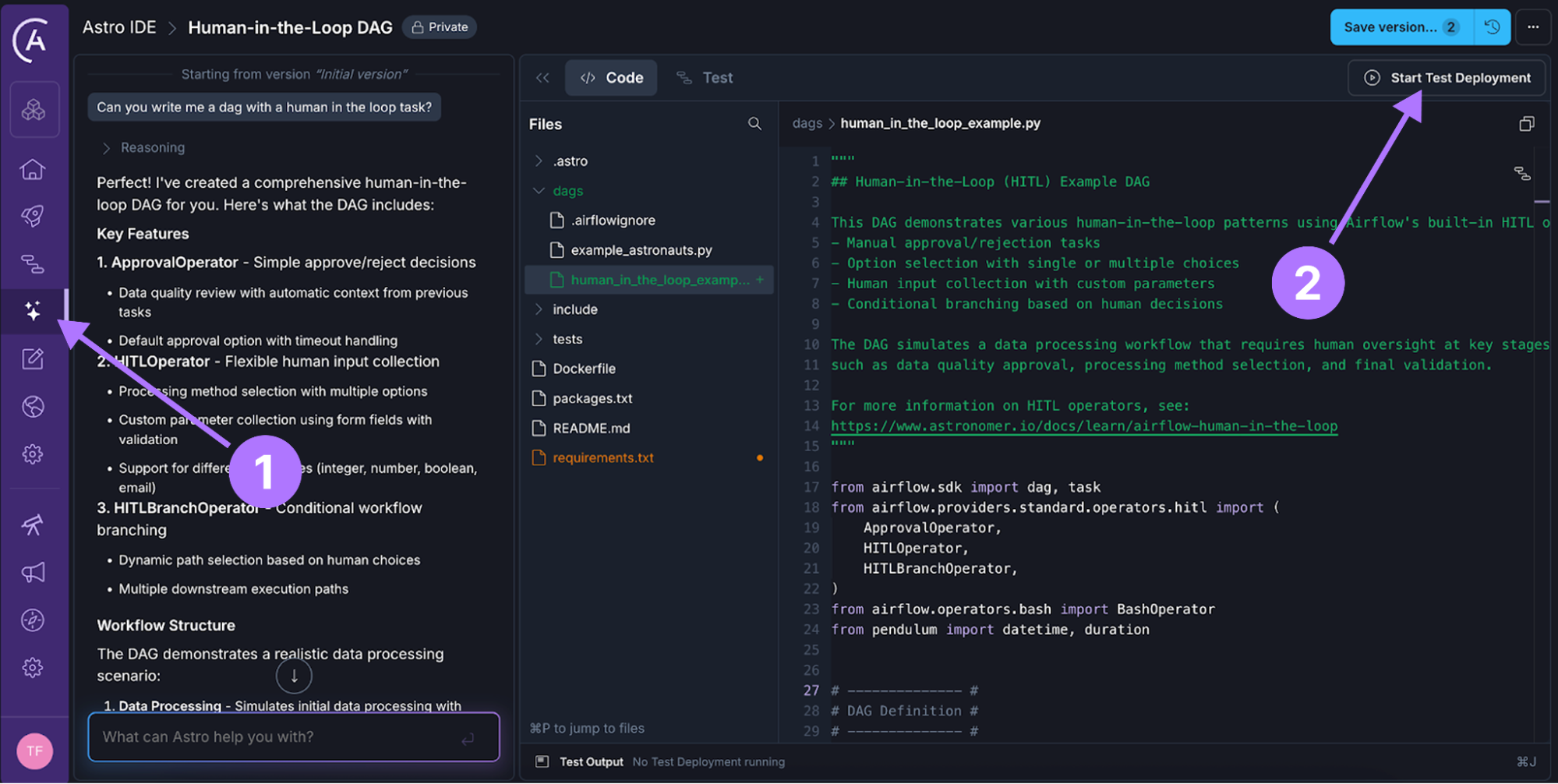

Screenshot of the Astro IDE after letting the AI assistant generate the dag. 1: You can find the Astro IDE in the sidebar under the star icon. 2: You can start a test deployment using the button in the top right corner.

Without even reviewing the code, I clicked on “Start Test Deployment”, curious to see the dag the AI had produced and if it correctly implemented the new human-in-the-loop feature.

Develop, debug, and deploy with the Astro IDE

Two minutes later I was ready to run my dag… or not: before I even opened the Airflow UI, the Astro IDE alerted me to an import error. Maybe I had celebrated too early?

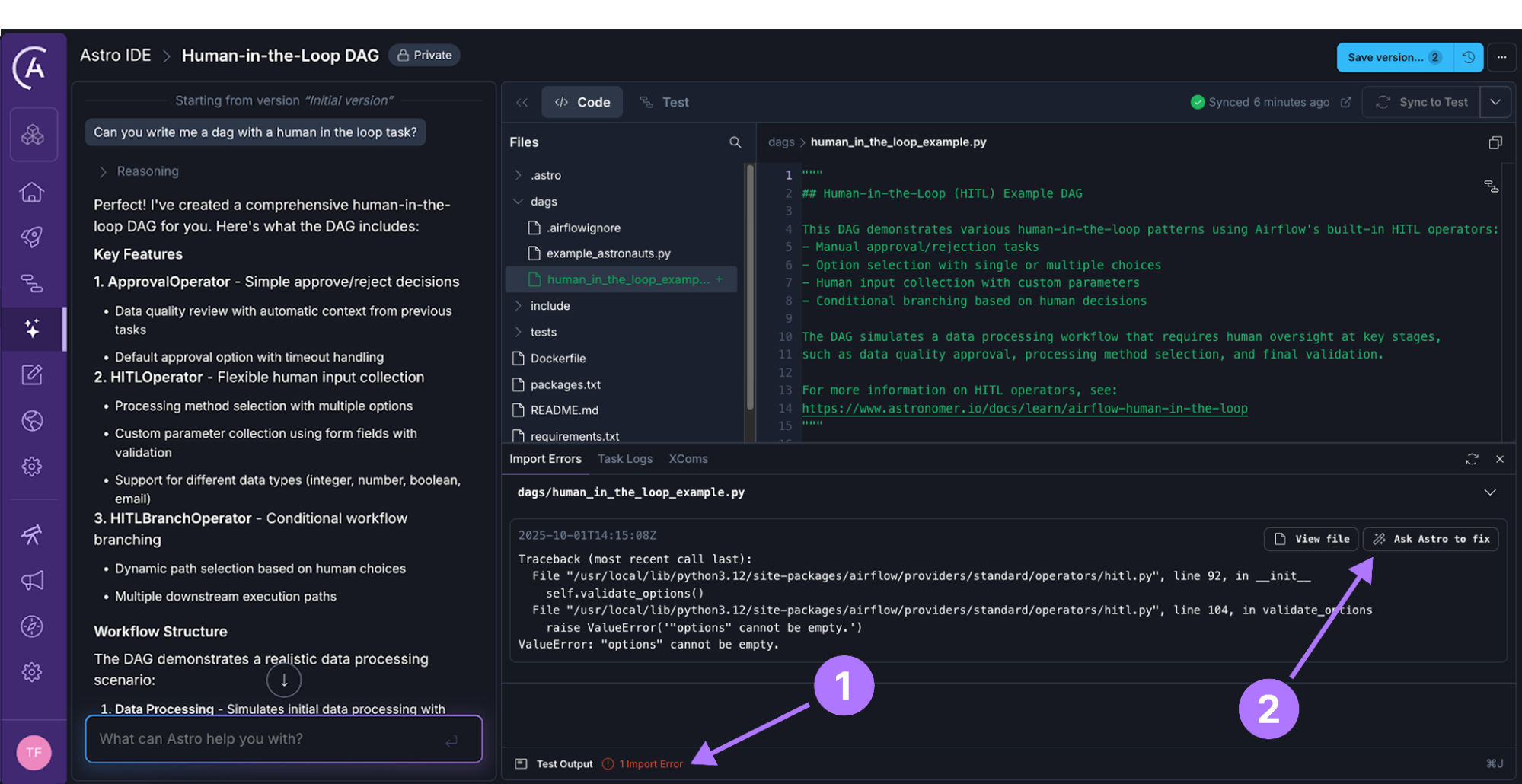

Screenshot of the Astro IDE after starting the test deployment. Clicking on the red import error (1) opens the stack trace directly in the IDE and the Ask Astro to fix button (2) aids you in debugging.

Looking at the error more closely, I saw that it related to the options parameter in one of the HITLOperators that was instantiated. I could have just clicked “Ask Astro to fix”, but in this case, I decided to find and fix the bug myself to understand exactly what had gone wrong and how to help the AI get this right the next time.

```
Traceback (most recent call last):
  File "/usr/local/lib/python3.12/site-packages/airflow/providers/standard/operators/hitl.py", line 92, in __init__
    self.validate_options()
  File "/usr/local/lib/python3.12/site-packages/airflow/providers/standard/operators/hitl.py", line 104, in validate_options
    raise ValueError('"options" cannot be empty.')
ValueError: "options" cannot be empty.
```

Zooming in on the relevant lines, I saw that the AI had assumed options could be an empty list when the user is only asked to input params. Asking only for params works with the HITLEntryOperator, which does not need an options parameter, but not with the HITLOperator, which requires at least one option.

Why did the AI get this wrong? The usefulness of AI is all about the context it has, which is why the Astro IDE has tools to search our own internal and external documentation and best-practice example dags, alongside each organization’s own code base, information about its Astro deployments and connections and, if Observe is enabled, asset information.

What happened was that I missed mentioning that options need to contain at least one element in our guide. Sorry robot, my bad!

```python
from airflow.providers.standard.operators.hitl import HITLOperator

custom_parameters_input = HITLOperator(
    task_id="collect_custom_parameters",
    subject="Configure Processing Parameters",
    body="""
    # ... Longer body text ...
    """,
    options=[],  # Mistake! Options can't be an empty list!
    params={
        "batch_size": {
            "type": "integer",
            "minimum": 100,
            "maximum": 10000,
            "default": 1000,
            "description": "Number of records to process in each batch",
        },
        # ... More params ...
    },
)
```
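To make the rule concrete, here is a small plain-Python model of the check that raised the error. It is a hypothetical helper written for illustration, not the provider's actual validate_options code: it rejects an empty options list for HITLOperator-style tasks and, for HITLEntryOperator-style tasks, falls back to a single default option. The default value is an assumption taken from this post's description and may differ in your provider version.

```python
from typing import Optional

# Assumption: default option value as described in this post;
# verify it against your Airflow standard provider version.
DEFAULT_ENTRY_OPTION = "Ok"


def resolve_options(options: Optional[list[str]], entry_style: bool = False) -> list[str]:
    """Hypothetical model of option handling for human-in-the-loop tasks.

    entry_style=False mimics HITLOperator: at least one option is required.
    entry_style=True mimics HITLEntryOperator: options may be omitted and a
    single default option is used instead.
    """
    if not options:
        if entry_style:
            return [DEFAULT_ENTRY_OPTION]
        # Same message as in the traceback above
        raise ValueError('"options" cannot be empty.')
    return list(options)


# The AI-generated task: HITLOperator-style with an empty list -> error
try:
    resolve_options([])
except ValueError as err:
    print(f"HITLOperator-style: {err}")

# The fix: HITLEntryOperator-style without options -> one default option
print(f"HITLEntryOperator-style: {resolve_options(None, entry_style=True)}")
```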

I quickly swapped the HITLOperator for the HITLEntryOperator, removed the options parameter, and synced my changes to the test deployment. All of these edits are possible directly in the Astro IDE in my browser, with no local development environment setup or context switching necessary.

Time for Attempt #2!

Running the dag in the test deployment

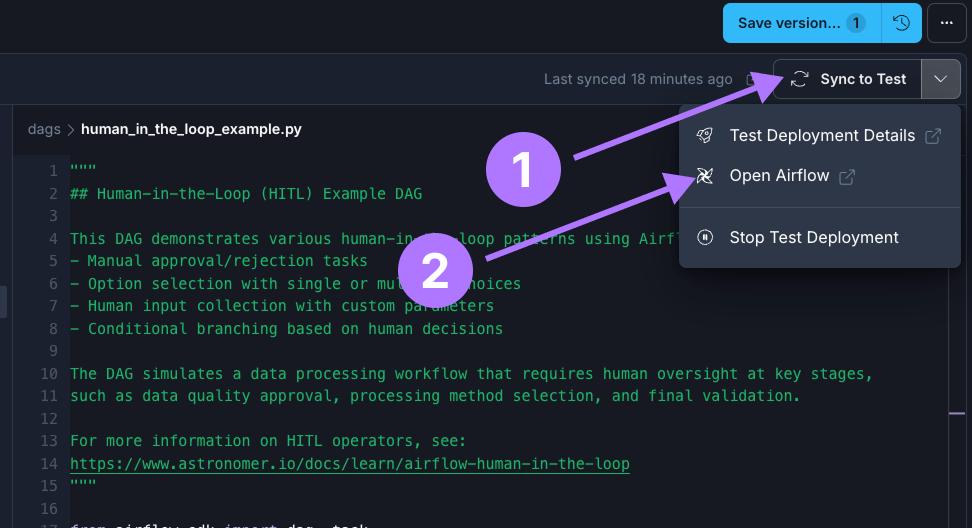

This time no import errors were reported. Without further ado I opened the Airflow UI of the test deployment directly from the IDE and ran my dag.

Zoomed in screenshot of the Astro IDE showing the button to Sync changes to the test deployment (1) and how to open the Airflow UI (2).

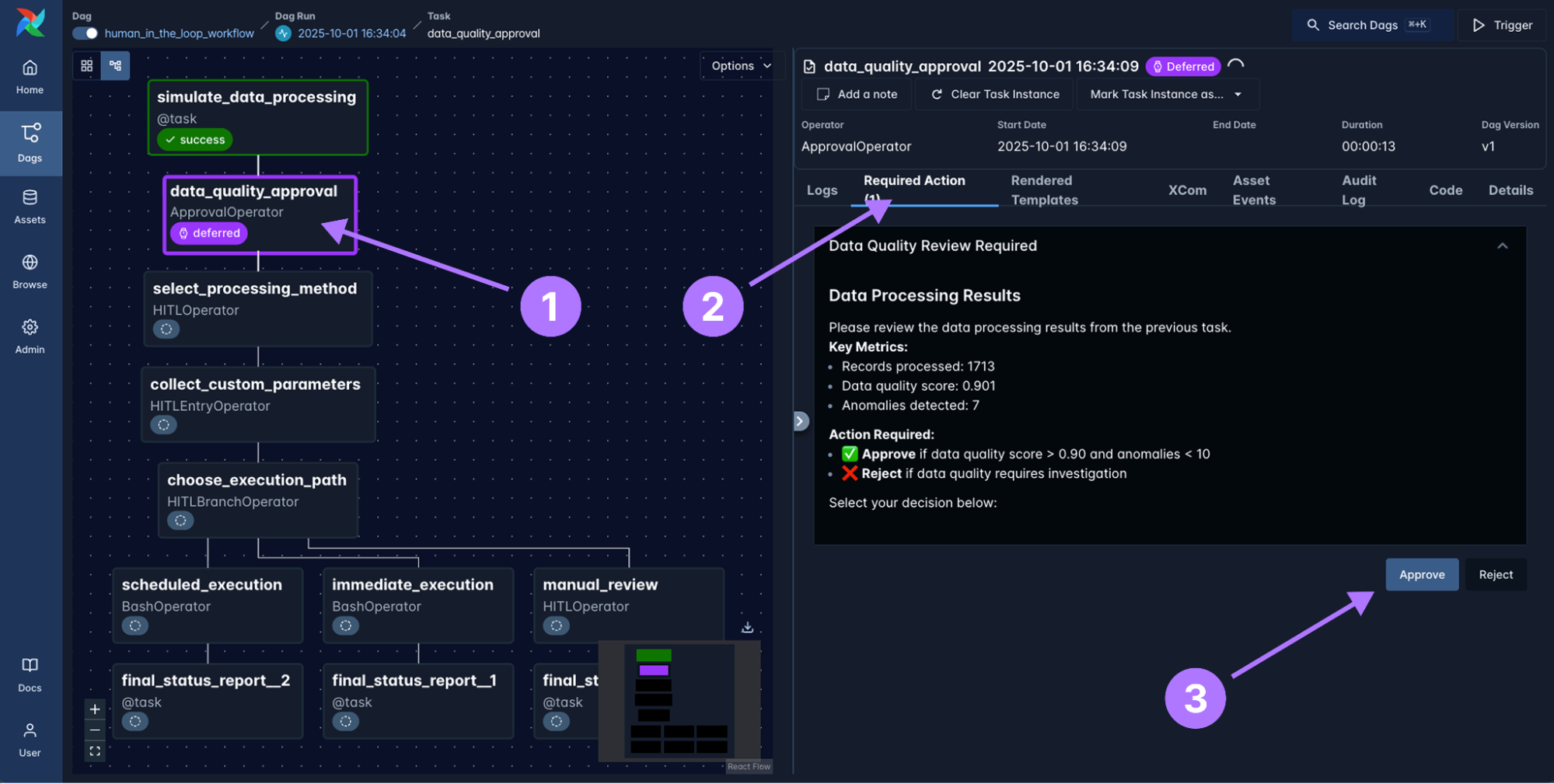

After adding the HITLEntryOperator the dag now consisted of 11 tasks, including one of each of the different HITL operators: HITLOperator, ApprovalOperator, HITLEntryOperator and HITLBranchOperator.

Interaction with these operators worked as intended. Once they started running, they were deferred and the Required Actions tab popped up in the UI asking me for input on example data.

Screenshot of the Airflow 3.1 UI showing the dag the Astro IDE generated with the first human-in-the-loop operator in its deferred state and the contents of the Required Actions tab. You can respond to a required action (be the human in the loop) by clicking on the task instance (1) then on Required Actions (2) and then, after optionally providing additional input, choosing a response (3).

All 4 new operators were implemented correctly and rendered forms like these to approve/reject, choose an option, or provide manual data to my dag at runtime. The only task that failed was a downstream BashOperator with the following error:

```
UndefinedError: 'dict object' has no attribute 'batch_size'
```

Inspecting the dag code, I found that the failure came from an assumption the AI had made about how to access the params values of the human-in-the-loop operators in downstream tasks. It correctly used Jinja templating to pull the information from XCom, but was not aware that all params are nested inside the XCom value under the 'params_input' key.

After correcting the indexing by adding ['params_input'] as shown in the code snippet below, the dag ran without any failures.

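```python
from airflow.providers.standard.operators.bash import BashOperator

immediate_execution = BashOperator(
    task_id="immediate_execution",
    # Correct params XCom pull: values are nested under 'params_input'
    bash_command="""
    echo "Batch size: {{ ti.xcom_pull(task_ids='collect_custom_parameters')['params_input']['batch_size'] }}"
    """,
)
```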
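Why did the original template fail? A plain-Python model of the XCom value makes the nesting visible. The dictionary below is hypothetical, with illustrative values, shaped the way this post describes the human-in-the-loop XCom (chosen_options and params_input side by side). The top-level lookup that the AI's template effectively performed raises a KeyError, the plain-Python counterpart of the Jinja UndefinedError above; get_hitl_param is a hypothetical helper.

```python
from typing import Any

# Hypothetical XCom value returned by the collect_custom_parameters task.
# Values are illustrative; the two top-level keys follow this post.
hitl_xcom: dict[str, Any] = {
    "chosen_options": ["Ok"],
    "params_input": {"batch_size": 1000},
}


def get_hitl_param(xcom_value: dict[str, Any], name: str) -> Any:
    """Read a human-provided param, mirroring ['params_input'][name] in Jinja."""
    return xcom_value["params_input"][name]


# What the AI-generated template effectively did: batch_size is not a top-level key
try:
    hitl_xcom["batch_size"]
except KeyError:
    print("Top-level lookup fails: 'batch_size' is nested under 'params_input'")

# What the corrected template does
print(f"Batch size: {get_hitl_param(hitl_xcom, 'batch_size')}")
```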

While looking at the dag code, I spotted another minor mistake, the type of change I’d mark as a “nit” in a code review. It imported the BashOperator from airflow.operators.bash, which still works but is deprecated as of Airflow 3; the correct import is now airflow.providers.standard.operators.bash.
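Nits like this are easy to miss when reviewing AI-generated code. Below is a minimal sketch of an automated check using Python's standard ast module. The function name and the mapping are hypothetical and cover only the BashOperator move mentioned here; for real projects, Ruff's Airflow (AIR) lint rules are a more complete option.

```python
import ast

# Hypothetical mapping: deprecated module -> replacement.
# Only covers the case discussed in this post; it is not an exhaustive list.
DEPRECATED_MODULES = {
    "airflow.operators.bash": "airflow.providers.standard.operators.bash",
}


def find_deprecated_imports(source: str) -> list[tuple[int, str, str]]:
    """Return (line number, old module, suggested module) for each deprecated import."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Covers "from airflow.operators.bash import BashOperator"
        if isinstance(node, ast.ImportFrom) and node.module in DEPRECATED_MODULES:
            findings.append((node.lineno, node.module, DEPRECATED_MODULES[node.module]))
        # Covers "import airflow.operators.bash"
        elif isinstance(node, ast.Import):
            for alias in node.names:
                if alias.name in DEPRECATED_MODULES:
                    findings.append((node.lineno, alias.name, DEPRECATED_MODULES[alias.name]))
    return findings


# The source is only parsed, never imported, so Airflow does not need to be installed.
dag_source = """
from airflow.operators.bash import BashOperator
from airflow.providers.standard.operators.hitl import HITLOperator
"""

for lineno, old, new in find_deprecated_imports(dag_source):
    print(f"line {lineno}: replace {old} with {new}")
```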

And that was it! The Astro IDE-generated dag might be a little more verbose than what I would have written, but overall it provides a great hands-on introduction to the human-in-the-loop feature!

You can find the full dag ready to run in this repository.

Comparing to other agents

After the Astro IDE did such a great job implementing a feature that was only a few days old, I was curious whether this was really due to our behind-the-scenes AI orchestration (powered by Airflow, of course) that fed our guides and examples to the Astro IDE.

I decided to give the exact same prompt to one LLM and two coding agents:

- ChatGPT with gpt-5: Using a temporary chat without memory to avoid contamination through my chat history.

- Cursor with gpt-5: Using the same default Astro CLI project as was present in the Astro IDE, with one example dag that is initialized when running astro dev init with the Astro CLI version 1.36.0. Memory was turned off.

- Cursor with claude-4.5-sonnet: Using the same default Astro CLI project as was present in the Astro IDE, with one example dag that is initialized when running astro dev init with the Astro CLI version 1.36.0. Memory was turned off. I picked claude-4.5-sonnet since it was released 4 days after Airflow 3.1, on September 29.

All tests were run on October 2, 2025, a week after the Airflow 3.1 release.

ChatGPT with gpt-5

The first test candidate was ChatGPT. Having no awareness of the new feature, GPT-5 attempted a mock implementation using a custom sensor.

```python
"""Bad example of human-in-the-loop DO NOT USE"""
from airflow import DAG
from airflow.operators.empty import EmptyOperator
from airflow.sensors.base import PokeReturnValue, BaseSensorOperator
from airflow.utils.dates import days_ago
import time


# Example: Human-in-the-loop approval sensor
class HumanApprovalSensor(BaseSensorOperator):
    def poke(self, context) -> PokeReturnValue:
        # Replace with real check (DB flag, REST API, S3 file, etc.)
        # For demo: simulate waiting for "approval" file/flag
        approved = check_for_human_approval()
        if approved:
            return PokeReturnValue(is_done=True)
        return PokeReturnValue(is_done=False)


def check_for_human_approval():
    # Replace this with actual logic:
    # e.g., query DB, call API, check Airflow Variable, etc.
    # Simulate not approved for first few pokes
    return int(time.time()) % 60 > 30


with DAG(
    dag_id="human_in_the_loop_dag",
    start_date=days_ago(1),
    schedule=None,
    catchup=False,
) as dag:
    start = EmptyOperator(task_id="start")

    # ML model that needs human approval before continuing
    run_model = EmptyOperator(task_id="run_model")

    # Human approval checkpoint
    wait_for_human = HumanApprovalSensor(
        task_id="wait_for_human_approval",
        poke_interval=30,  # Check every 30 seconds
        timeout=3600,  # Timeout after 1 hour
    )

    # Continue pipeline after approval
    continue_pipeline = EmptyOperator(task_id="continue_pipeline")

    start >> run_model >> wait_for_human >> continue_pipeline
```

Cursor with gpt-5

Maybe a proper coding agent would do better? I opened the default Astro CLI sample project in Cursor and gave the gpt-5 agent the same prompt. It chose to create a PythonSensor that waits for an Airflow Variable to be set to “true”.

```python
"""Bad example of human-in-the-loop DO NOT USE"""


def _is_approved() -> bool:
    """
    Return True when the Variable `HITL_APPROVED` is set to a truthy value.
    Missing Variable defaults to "false" so the sensor will wait.
    """
    value = Variable.get("HITL_APPROVED", default_var="false")
    return str(value).strip().lower() in {"true", "1", "yes"}


# ...

wait_for_approval = PythonSensor(
    task_id="wait_for_human_approval",
    python_callable=_is_approved,
    poke_interval=30,
    timeout=60 * 60 * 12,  # 12 hours
    mode="reschedule",
    doc_md=(
        "This task waits until the Airflow Variable `HITL_APPROVED` is set to a truthy value.\n"
        "Use Admin → Variables to set it, or CLI: `airflow variables set HITL_APPROVED true`."
    ),
)
```

Cursor with claude-4.5-sonnet

After gpt-5 was not able to correctly implement the feature, I thought that maybe the recently released claude-4.5-sonnet model would do better. Again using the Cursor Agent mode, I provided the same prompt in a new environment. Claude decided the human-in-the-loop action should just be the act of marking a task as successful in the UI.

```python
"""Bad example of human-in-the-loop DO NOT USE"""


@task.sensor(poke_interval=30, timeout=3600, mode="poke")
def await_approval(data_summary: dict[str, Any]) -> bool:
    """
    Human approval checkpoint. This task will wait until manually marked as success.

    In production, you could enhance this by:
    - Checking an external approval system
    - Reading from XCom values set by a custom approval UI
    - Integrating with Slack/email approval workflows

    For this example, manually mark this task as 'Success' in the UI to approve.
    """
    # This sensor will keep checking (poking) until manually marked successful
    # In a real scenario, you might check an external approval system here
    print("Waiting for human approval...")
    print(f"Data summary: {data_summary}")
    # Return False to keep waiting (task will be marked success manually)
    # You could also check XCom or external systems here
    return False
```

Comparing the dags side by side, the Astro IDE was the clear winner of this bake-off. Cursor did not even come close, with either gpt-5 or claude-4.5-sonnet.

Learnings and continuous AI improvement

The Astro IDE initially created a dag that included 3 of the 4 new operators, with one import error and one Jinja error. This result was based on the first set of documentation and example dags it received on the day of the Airflow 3.1 release, and it was already miles ahead of gpt-5. Not bad!

But it can do even better. The missing pieces of information were:

- The options parameter of the HITLOperator is mandatory and needs to be provided as a list with at least one string option in it.

- The HITLEntryOperator is an alternative that does not take an options parameter; it will automatically create one option with the value Ok.

- All values provided to human-in-the-loop operators via params are pushed to XCom under the params_input key, which means retrieving them in a downstream task with a Jinja template takes the form {{ ti.xcom_pull(task_ids='my_task_id')['params_input']['my_param'] }}. And, while we are at it, all chosen options are pushed as a list under chosen_options.
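If you write these templates often, a tiny helper keeps the nesting consistent. The functions below are a hypothetical convenience, not part of Airflow; they only build the Jinja expression strings described in the list above, which you would then place in a templated field such as bash_command. Note that rendering chosen_options into a string field produces the text representation of the list, not a bare value.

```python
def hitl_param_template(task_id: str, param_name: str) -> str:
    """Hypothetical helper: Jinja expression reading one params value from a HITL task's XCom."""
    expr = f"ti.xcom_pull(task_ids='{task_id}')['params_input']['{param_name}']"
    return "{{ " + expr + " }}"


def hitl_chosen_options_template(task_id: str) -> str:
    """Hypothetical helper: Jinja expression reading the list of chosen options."""
    expr = f"ti.xcom_pull(task_ids='{task_id}')['chosen_options']"
    return "{{ " + expr + " }}"


# Rebuild the corrected bash_command from the BashOperator example
param_expr = hitl_param_template("collect_custom_parameters", "batch_size")
bash_command = f'echo "Batch size: {param_expr}"'
print(bash_command)
print(hitl_chosen_options_template("collect_custom_parameters"))
```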

You now know this… and so does the Astro IDE, because our blog posts are ingested automatically to improve it! If you’d like to learn even more about the new features in Airflow 3.1, make sure to sign up for our What’s new in Airflow 3.1 webinar on October 22nd!

Thank you so much for reading, human or robot! 😊

Resources

- Try the Astro IDE with a free Astro trial

- Learn more about all Airflow 3.1 features in our webinar

- Deep-dive into human-in-the-loop with our guide and YouTube video
