Apache Airflow® vs. Apache Beam: A Comparative Guide

11 min read |

The need to compare data tools and to keep hunting for the perfect one seems never-ending. We get it - choosing the right data management tool for a business is a virtually final decision. Once you go all-in with a data orchestrator, moving to another tool would be a waste of time, money, and resources. That’s why it’s worthwhile to find out which workflow manager is ideally suited for your specific needs and ready to grow with you. Today, we will help you choose by looking into the differences and similarities between two of our favorites: Apache Airflow® and Apache Beam.

Airflow vs. Beam

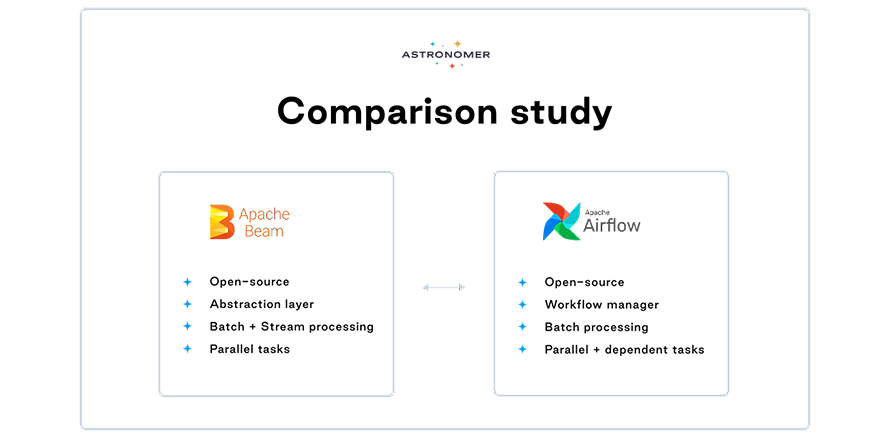

On the surface, Apache Airflow® and Apache Beam may look similar. Both were designed to organize steps of processing the data, to ensure that these steps are executed in the correct order. Both tools visualize the stages and dependencies in the form of directed acyclic graphs (DAGs) through a graphical user interface (GUI). Both are open-source, too. Airflow seems to have a broader reach with 23.5K GitHub stars and 9.5k forks, and more contributors. It’s probably because it has more applications, as by nature Airflow serves different purposes than Beam.

When digging a little deeper, we will find significant differences in the capabilities of these two tools and the programming models they support. Although they have some overlapping use cases, there are many things that only one can handle well. Let’s have a look!

Apache Airflow® Basics

Heavy users claim that Airflow can do anything, but more precisely, Airflow is an open-source workflow management tool for planning, generating, and tracking processes.

The tool is a super-flexible task scheduler and data orchestrator suitable for most everyday tasks. Airflow can orchestrate ETL/ELT jobs, train machine learning models, track systems, notify, complete database backups, power functions within multiple APIs, and more. Using BashOperator and PythonOperator Airflow can run any bash or Python script. It doesn’t get any more customizable than that. Python also makes orchestration flows easy to set up (for people familiar with Python, of course).

In Airflow, every task is part of a DAG. Tasks can consist of Python code you write yourself, or it may use built-in operators designed to interact with external systems. These tasks may depend on one another, meaning that one task can only be triggered once the task it depends on has finished running. The Airflow scheduler runs your tasks on an array of workers while adhering to the requirements you specify.

Key Benefits of Airflow

- Code-first: Airflow and all the workflows are written in Python (although each step can be written in any language), allowing users great flexibility in defining their DAGs. Workflows defined as code are easier to test, maintain, and collaborate on. Customization of complex transformations doesn’t get any simpler than this. Moreover, Python allows for effortless collaboration with data scientists.

- Rich UI: The user interface is really intuitive and a truly practical way to access task metadata. It makes it easy to turn schedules on and off, visualize DAG’s progress, watch pipelines in production, access logs, and resolve emerging issues at once. Due to rich visualization components, you can see all of the running pipelines and follow their progress. Everything is very lean and elegant. A powerful Jinja engine for templating makes it possible to parametrize scripts.

- Scalability: Airflow users have lots of control over their supporting infrastructure and multiple choices of executors that make scaling possible for each individual use case. Airflow provides a world-class, highly available scheduler that allows scaling your orchestration workloads horizontally and vertically as well - in the latest version, Airflow 2.2 enables you to leverage asyncio framework to scale asynchronous orchestration tasks almost infinitely with minimal use of computing resources

- Very active, constantly growing open-source community. Recently, the community has launched Airflow 2.2, and now customizable timetables are available to the joy of all the Airflow users. The tool is constantly growing and adapting to users’ needs. In September 2021, Airflow surpassed Apache Spark as the Apache Software Foundation tool with the highest number of contributors.

- Availability: you can set up and run Airflow on-premises, but you can also choose among multiple managed services: Astronomer, Google Cloud Composer, Amazon MWAA.

- Being data processing agnostic: Airflow does not make any assumptions on how the data is processed by any of the myriads of services it uses; thus it makes it easy to change and replace any of the particular services it uses (today we use Spark, to process this step, tomorrow we can change to, for example, Flink). This can be both a pro and a con - each task has to be tailored to the specific service, but on the other hand, you can quickly catch up with new releases of those services. Plus, whenever there are other new processing engines you would like to use, as long as they have an API, you can start using them in Airflow in a blink of an eye - with native speed and performance.

Airflow Limitations

-

Airflow was designed as a batch orchestrator, meaning streaming use cases are not thoroughly supported. Quoting the Airflow docs page: “Airflow is not a data streaming solution.”

-

Airflow is somewhat complex to set up and manage following its distributed nature; however, this has been mitigated by using managed services. Moreover, the community also did a great job releasing Helm Chart that allows you to set up distributed Kubernetes deployment with ease.

Apache Beam Basics

Apache Beam is more of an abstraction layer than a framework. It serves as a wrapper for Apache Spark, Apache Flink, Google Cloud Dataflow, and others, supporting a more or less similar programming model. The intent is that once someone learns Beam, they can run on multiple backends without getting to know them well. Beam creates batch and streaming data processing jobs, becoming an engine for dataflow, also basing the process on DAGs. The DAG nodes create a (potentially branching) pipeline. The DAG nodes are all active simultaneously, passing data pieces from one to the next as each performs some processing on it. Because of the unified model, batch and stream data are processed within Beam in the same way.

One of the main reasons to use Beam is the ability to switch between multiple runners such as Apache Spark, Apache Flink, Samza, and Google Cloud Dataflow. Without Beam, a unified programming model, varied runners have different capabilities, making it difficult to provide a portable API. Beam attempts to strike a delicate balance by actively incorporating advances from these runners into the Beam model while simultaneously engaging with the community to influence these runners’ roadmaps. The Direct Runner runs pipelines to ensure that they comply with the Apache Beam paradigm as precisely as possible. Runners are provided with smarts - the more, the better.

Key Benefits of Beam

- Unifying batch and streaming: many systems can perform batch and streaming operations, but they usually do so via distinct APIs. However, with Beam, batch and streaming are merely two points on a continuum of latency, completeness, and cost. There is no learning/rewriting cliff when moving from batch to streaming. So, if you construct a batch pipeline today but your latency requirements change tomorrow, you can easily alter it to streaming within the same API. A valuable tool for windowing (splitting data during stream processing), watermarks, and triggers is included as an added benefit (handling events that come late or out-of-order).

- Portability across runtimes: the same pipeline may be operated in numerous ways since data forms and runtime requirements are separated. That implies there is no need to rewrite code when migrating from on-premises to the cloud or from a legacy system to something cutting-edge. It’s possible to quickly compare choices to discover the optimal combination of environment and performance for current needs. It may be a combination of things, such as processing sensitive data on-premise with an open-source runner and processing other data in the cloud with a managed service. Beam can handle it.

- APIs with a higher level of abstraction: as data, code, and environments constantly shift, even the most meticulous hand tweaking may fail. So instead of leaking knowledge about the underlying runtime, Beam’s APIs concentrate on capturing aspects of your data and logic. This is important for portability, but it also provides runtimes with a lot of freedom in how they execute. Beam’s Source APIs, for example, are designed to avoid overspecifying sharding inside a pipeline. Instead, they provide runners with the necessary hooks to dynamically redistribute work across available machines. By effectively removing straggler shards, it can make a significant impact on performance.

- Availability as a managed service in Google Cloud Platform.

Beam Limitations

- If you would like to use the R programming language for developing applications, Beam is not a good choice, as it doesn’t support R.

- Beam Model has to be updated whenever capabilities of underlying execution engines (Spark, Flink, Samza, etc.) change. This means that it will always be a bit behind when those release new, significant features. Moreover, some of the features might simply not be available or possible to use due to the unified model of Beam. Plus, you are limited to using only the tools that Beam supports.

- Due to the unified model, the performance of Beam might not be the same as using the underlying services directly. Almost by definition, such unification brings some performance penalty (although the Beam community has done a great job improving that in recent years).

- Quite often, Beam uses a sledgehammer to kill a fly. The resources need to be provisioned from fresh VMs in Google Cloud Platform dataflow, the most commonly used Beam runtime, which takes 3-4 mins. DAGs running operators, like in Airflow, can often do the work in much less time.

Summary

Indeed, Airflow and Apache Beam can both be classified as “Workflow Manager” tools. However, the better you get to know them, the more different they become. Airflow shines in data orchestration and pipeline dependency management, while Beam is a unified tool for building big data pipelines, which can be executed in the most popular data processing systems such as Spark or Flink. Beam is said to be more machine learning focused, while Airflow doesn’t have a specific purpose - it can do any data orchestration.

You should definitely consider using Beam if you want streaming and batch jobs with the same API; it is easily adjustable. Beam is also really effective for parallel data processing jobs, where the issue may be divided into several smaller data bundles. Beam may also be used for ETL (Extract, Transform, and Load) activities and pure data integration, but in this case, it’s just one of the suitable tools, not the best one. If you need to use, for example, Flink and Spark, Beam is a perfect integrator for them. Overall, Beam is a fantastic option for autoscaled real-time pipelines and huge batches in machine learning.

Airflow, on the other hand, is perfect for data orchestration. It works particularly well when the data awareness is kept in the source systems, and modern databases are obviously highly aware of their data content. Airflow creates robust pipelines, and it is the absolute best tool for tasks that can only be triggered if their dependencies are met (contrary to parallel tasks). With Airflow you can also get the data lineage from when data enters the database.

It’s important to remember that Airflow and Beam can work together, too. Airflow can be used to schedule and trigger Beam jobs, alongside the rest of the tasks it triggers. Long story short: the choice of your tool always depends on your specific needs, and Airflow is not Beam’s competition in any sense - instead, they complement each other.

Astronomer, Making Airflow Even Better

Astronomer enables you to centrally develop, orchestrate, and monitor your data workflows with best-in-class open source technology. With multiple provider packages in our registry, it’s intuitive and straightforward to take off with your tasks and pipelines, and take full advantage of your data.

Would you like to get in touch with one of our experts? Let’s connect and get you started!

Co-authors: Kenten Danas, Field Engineer at Astronomer, and Jeff Lewis, Senior Solutions Architect at Astronomer.

Get started free.

OR

By proceeding you agree to our Privacy Policy, our Website Terms and to receive emails from Astronomer.