Airflow in Action: Qualcomm Chip Design Orchestration at Million-Task Scale with Airflow 3


At the Airflow Summit, Dheeraj Turaga and Nicholas Redd walked through how Qualcomm’s Snapdragon CPU team uses Apache Airflow® to orchestrate chip design workflows at extreme HPC scale. You will learn how they replaced a Jenkins-heavy setup with Airflow, using it to schedule Electronic Design Automation (EDA) workloads, handle failures, and route execution across multiple data centers and lab hardware.

Million-task reality of Snapdragon SoC

Qualcomm’s Oryon CPU team builds the CPU cores that ship in the Snapdragon lineup. These chips power Android smartphones, automotive applications, and more, and Airflow plays a critical role in their design.

Their Airflow instance orchestrates compute-heavy EDA workloads that routinely demand 8 to 16 CPU cores, hundreds of GB of RAM, and massive I/O. At Qualcomm scale, this is not just a handful of jobs. They run millions of tasks per day across multiple globally distributed data centers, with some cloud capacity in the mix.

HPC makes the rules

To run those workloads on shared compute farms, they rely on HPC schedulers like SLURM and LSF. These schedulers allocate resources like CPU and memory, enforce fair-share policies, and provide resource tracking for long-running, high-throughput EDA jobs.

“Before orchestration, there was chaos”

Prior to adopting Airflow, the team leaned heavily on Jenkins. Nicholas sums it up with the framing they used internally: “Before there was automation, before there was orchestration, there was chaos. And before there was chaos, there was Jenkins.”

They described brittle infrastructure, high maintenance overhead, and lots of unversioned freestyle workflows that were hard to generalize across teams and rarely maintained after the original author moved on. Jobs failed frequently. Scaling quality and consistency across the org was painful.

Why Airflow?

They selected Airflow because it gave them a stable, scalable platform for Python-authored pipelines that could be reviewed and versioned, with a web UI that improved auditability, debugging, and visibility for both engineers and non-engineers.

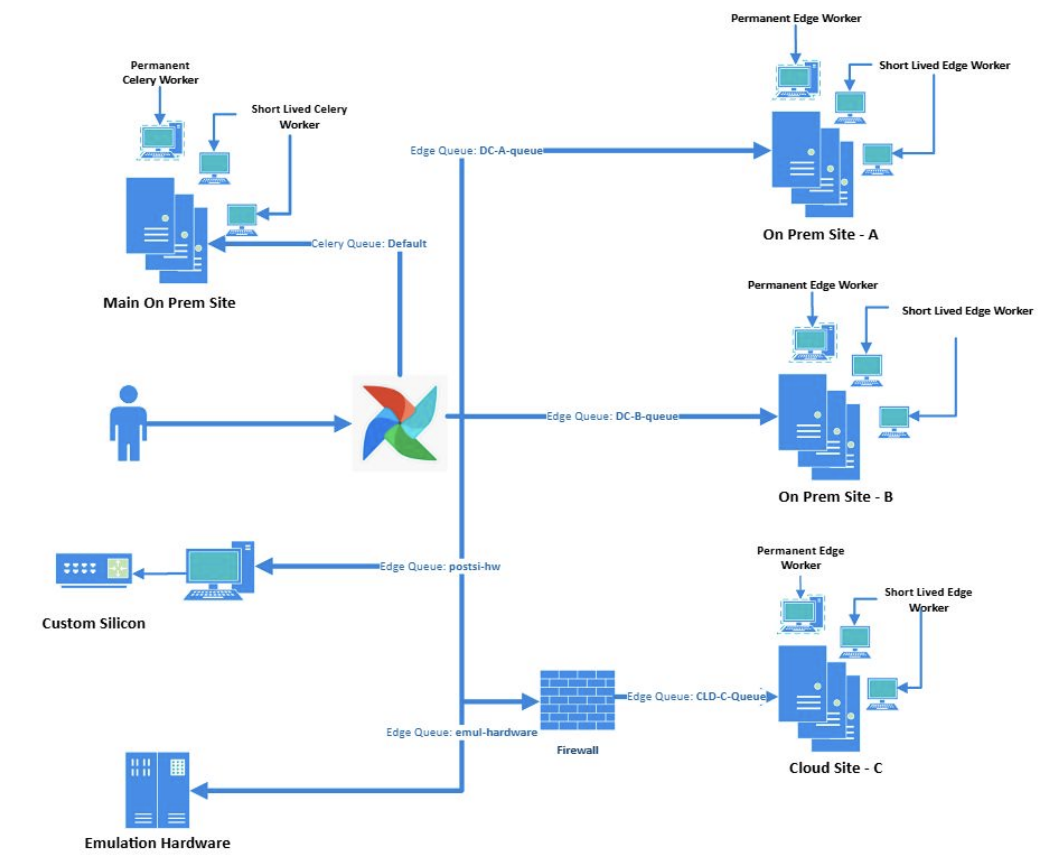

Their production journey started on Airflow 2.x, and in the talk they highlight that they are now on Airflow 3.1 with both Celery and Edge workers deployed across multiple data centers.

Figure 1: Qualcomm’s multi-data center Airflow architecture. Image source.

Workers on demand, not on payroll

A core integration point is how they connect Airflow to SLURM and LSF. Rather than permanently managing hundreds of worker nodes, they built dynamic Celery workers launched on demand through the HPC scheduler.
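As a rough illustration of the on-demand pattern, the sketch below assembles a SLURM `sbatch` command that launches a short-lived Celery worker consuming from one Airflow queue. The queue name, resource numbers, and the `WorkerRequest` / `build_worker_submission` helpers are hypothetical and not Qualcomm's launcher; only the `sbatch` flags and the `airflow celery worker --queues/--concurrency` options are standard. An LSF setup would need the equivalent `bsub` options instead.

```python
import shlex
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkerRequest:
    """Hypothetical resource request for one short-lived Celery worker."""
    queue: str          # Airflow queue the worker will consume from
    cpus: int           # cores requested from SLURM
    mem_gb: int         # memory requested from SLURM, in GB
    lease_minutes: int  # wall-time lease; SLURM ends the job at this limit
    concurrency: int = 1


def build_worker_submission(req: WorkerRequest) -> list[str]:
    """Return an sbatch argv that starts a Celery worker inside a SLURM allocation."""
    hours, minutes = divmod(req.lease_minutes, 60)
    worker_cmd = [
        "airflow", "celery", "worker",
        "--queues", req.queue,
        "--concurrency", str(req.concurrency),
    ]
    return [
        "sbatch",
        f"--job-name=airflow-worker-{req.queue}",
        f"--cpus-per-task={req.cpus}",
        f"--mem={req.mem_gb}G",
        f"--time={hours:02d}:{minutes:02d}:00",
        f"--wrap={shlex.join(worker_cmd)}",
    ]


if __name__ == "__main__":
    req = WorkerRequest(queue="eda_sim", cpus=16, mem_gb=256, lease_minutes=240)
    # Print instead of executing, so the sketch runs without a SLURM cluster.
    print(shlex.join(build_worker_submission(req)))
```

Encoding the lease as SLURM's own `--time` limit means the scheduler enforces the upper bound even if nothing else cleans up the worker.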

One always-on Celery worker anchors the pool, and short-lived workers spin up with explicit resource requests (CPU, memory) and a wall-time lease. A housekeeping Dag checks lease expiry and decommissions workers automatically. They also contributed upstream improvements, including extending the Celery CLI to better support remote queue management and worker shutdown.
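The housekeeping step can be pictured as a periodic pass over a registry of leased workers. This is a minimal sketch that assumes a hypothetical in-memory record per worker (start time, lease length) and an always-on anchor worker that is never reclaimed; the `decommission` callback is a stub standing in for whatever actually cancels the HPC job or shuts the worker down.

```python
from collections.abc import Callable
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class LeasedWorker:
    """Hypothetical bookkeeping record for one Celery worker."""
    name: str
    started: datetime
    lease: timedelta
    always_on: bool = False  # the anchor worker is never decommissioned

    def expired(self, now: datetime) -> bool:
        return not self.always_on and now >= self.started + self.lease


def find_expired(workers: list[LeasedWorker], now: datetime) -> list[str]:
    """Return the names of workers whose wall-time lease has run out."""
    return [w.name for w in workers if w.expired(now)]


def housekeeping(
    workers: list[LeasedWorker],
    now: datetime,
    decommission: Callable[[str], None],
) -> list[LeasedWorker]:
    """One housekeeping pass: decommission expired workers, return the rest."""
    expired = find_expired(workers, now)
    for name in expired:
        decommission(name)  # stub: e.g. cancel the HPC job or shut the worker down
    return [w for w in workers if w.name not in expired]


if __name__ == "__main__":
    t0 = datetime(2025, 1, 1, 8, 0, tzinfo=timezone.utc)
    pool = [
        LeasedWorker("anchor", t0, timedelta(0), always_on=True),
        LeasedWorker("burst-1", t0, timedelta(hours=2)),
        LeasedWorker("burst-2", t0, timedelta(hours=6)),
    ]
    now = t0 + timedelta(hours=3)
    remaining = housekeeping(pool, now, decommission=lambda n: print("decommission", n))
    print([w.name for w in remaining])
```

In Airflow, a pass like this would run as a task in a scheduled Dag, so lease cleanup is itself orchestrated and visible in the UI.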

Scale breaks single data centers

Scale creates two hard problems: throughput and placement. The speakers shared that they run 1 to 2 million simulations per day and often consume 500,000 to 800,000 CPUs at any given moment, which exceeds the capacity of any single data center.
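To put the throughput figure in perspective, converting a daily volume into an average arrival rate is simple arithmetic. The sketch below uses only the numbers quoted in the talk (1 to 2 million simulations per day) and assumes work is spread evenly over 24 hours; real arrivals are bursty, so peak rates will be higher.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def average_rate_per_second(tasks_per_day: int) -> float:
    """Average task arrival rate, assuming work is spread evenly over the day."""
    return tasks_per_day / SECONDS_PER_DAY


if __name__ == "__main__":
    for daily in (1_000_000, 2_000_000):
        rate = average_rate_per_second(daily)
        print(f"{daily:>9,} simulations/day ~ {rate:.1f} per second")
```

Even the lower figure means the orchestration layer must dispatch roughly a dozen simulations every second, around the clock.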

To break the “data center handcuffs,” they adopted the EdgeExecutor and worked with the Airflow community to mature it. They landed on a distributed model with one persistent edge worker per data center that spawns short-lived workers to execute tasks. This lets them send work wherever compute is available simply by changing which queue a task targets.
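Because each data center's persistent edge worker serves its own queue, placement reduces to choosing a queue name. The sketch below shows one simple policy, picking the site with the most free cores that can fit the request; the site names, capacity numbers, and the `Site` / `pick_queue` helpers are hypothetical, and this is not the policy Qualcomm described. In Airflow, the chosen name would be passed as a task's `queue` argument.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Site:
    """Hypothetical snapshot of one data center's edge queue and spare capacity."""
    queue: str
    free_cores: int


def pick_queue(sites: list[Site], cores_needed: int) -> Optional[str]:
    """Choose the queue of the site with the most free cores that fits the request."""
    candidates = [s for s in sites if s.free_cores >= cores_needed]
    if not candidates:
        return None  # nothing fits right now; leave the task waiting and retry later
    return max(candidates, key=lambda s: s.free_cores).queue


if __name__ == "__main__":
    sites = [
        Site("dc_east", free_cores=12),
        Site("dc_west", free_cores=4_000),
        Site("dc_asia", free_cores=900),
    ]
    print(pick_queue(sites, cores_needed=16))
```

Keeping placement as a pure function of queue names means the Dag code never changes when capacity moves between sites; only the routing decision does.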

They also extend the same Airflow instance into the lab by running edge workers on hosts connected to custom silicon boards, enabling post-silicon tests to reuse the same orchestration patterns as pre-silicon runs.
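The lab extension can be pictured the same way: keep the workflow definition fixed and change only the queue it targets. In this minimal sketch, the step names, the queue names (`dc_west`, `lab_board_07`), and the `plan_run` helper are all hypothetical; the point is only that pre-silicon and post-silicon runs share one step list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    name: str
    queue: str


# Hypothetical shared test steps, reused unchanged before and after silicon.
TEST_STEPS = ("prepare_workspace", "build_test", "run_test", "collect_results")

# Hypothetical mapping from run target to the queue that serves it.
QUEUE_FOR = {
    "pre_silicon": "dc_west",        # simulation in a data center
    "post_silicon": "lab_board_07",  # edge worker on a lab host wired to a board
}


def plan_run(target: str, queue_for: dict[str, str]) -> list[Step]:
    """Bind the shared steps to the queue that serves the given target."""
    queue = queue_for[target]
    return [Step(name, queue) for name in TEST_STEPS]


if __name__ == "__main__":
    for target in ("pre_silicon", "post_silicon"):
        print(target, [(s.name, s.queue) for s in plan_run(target, QUEUE_FOR)])
```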

Multiple workflows, one control plane

They grounded the talk with practical workflow examples:

-

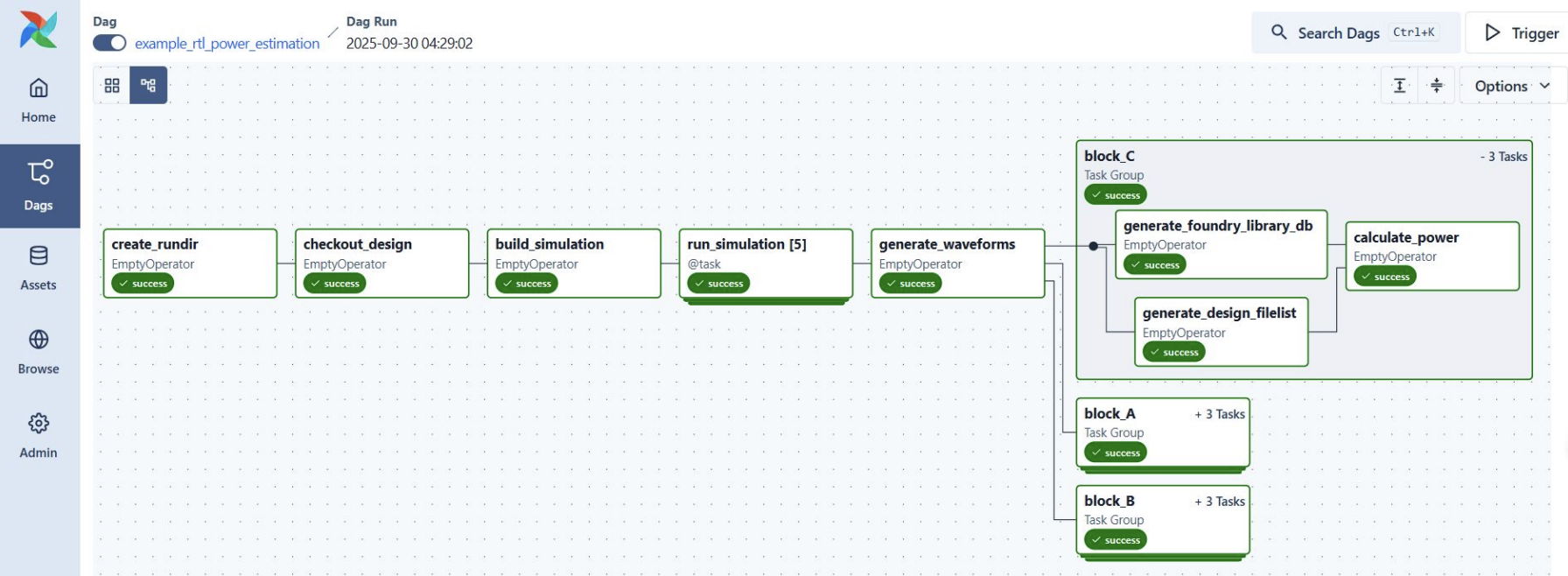

A common design verification flow creates a run directory, checks scratch space, checks out design sources from version control, generates design file lists, and runs EDA tools and simulations.

-

Power estimation workflows build on simulation outputs by generating waveform data and using signal-level time series to estimate power across scenarios and validate power budgets.

-

Physical design workflows focus on fitting modules into silicon area constraints, using assumptions to validate that designs can be placed within allotted die space.

Figure 2: The structure of Qualcomm’s chip design Airflow Dag for power estimation. Image source.
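The design verification steps described above form a small dependency graph. The sketch below expresses them with Python's standard-library `graphlib` rather than Airflow, so it runs anywhere; the step names and edges are a hypothetical reading of the flow (it assumes, for example, that the scratch-space check and run-directory creation are independent and both precede the checkout). In an Airflow Dag, the same edges would be declared between tasks.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each step -> the steps that must finish first.
VERIFICATION_FLOW = {
    "create_run_dir": set(),
    "check_scratch_space": set(),
    "checkout_sources": {"create_run_dir", "check_scratch_space"},
    "generate_file_lists": {"checkout_sources"},
    "run_simulations": {"generate_file_lists"},
}


def execution_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group steps into waves; every step in a wave could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for deterministic output
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    for i, wave in enumerate(execution_waves(VERIFICATION_FLOW), start=1):
        print(i, wave)
```

Grouping into waves mirrors what an orchestrator does: independent setup checks run concurrently, and the expensive simulation step starts only after everything it depends on has succeeded.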

Steal this playbook

The big takeaway is that Airflow can act as a unified orchestration layer for semiconductor workflows that need HPC scheduling, reproducibility, and multi-site execution, without forcing teams to develop and maintain custom orchestrators.

Watch the Semiconductor (Chip) Design Workflow Orchestration with Airflow replay to see the full production architecture, the deployment model, and the concrete patterns Qualcomm uses to templatize workflows, test Dags, and run EDA at scale.

Edge execution and Remote Execution on Astro

Astro, Astronomer’s fully managed Airflow service, serves the same use cases as the EdgeExecutor through Remote Execution, removing the need to provision, run, and manage remote workers yourself.

Remote Execution separates orchestration from execution: you get a fully managed Airflow control plane while keeping full control over the workers in your own environment. Astronomer maintains, scales, and secures the control plane, while all task execution, data, and secrets stay inside your own cloud or on-premises environment and within your compliance boundary.

Astro’s Remote Execution lets you run multiple distributed Airflow environments cleanly, so you can isolate workloads for reliability, security, and compliance without losing a unified control plane. Each environment can use its own Remote Execution Agents with purpose-built configuration, including container images and dependencies, Dag sources, secrets and XCom backends, and workload identities.

Remote Execution uses only outbound encrypted connections and needs no inbound firewall exceptions, which is especially valuable in regulated environments. Astro’s exclusive Remote Execution Agents authenticate with the organization’s IAM role and policy and run tasks under customer-managed identities. This aligns with zero-trust principles and removes the need to trade security for operational efficiency.

You can learn more by downloading our whitepaper: Remote Execution: Powering Hybrid Orchestration Without Compromise.