Airflow in Action: Inside Deutsche Bank’s Regulated Data Workflows

In his Airflow Summit session, Christian Förnges, Data Engineer at Deutsche Bank, walked through how Apache Airflow® is used to orchestrate critical data workflows inside one of the most tightly regulated banking environments in the world. The Learn from Deutsche Bank talk showed attendees how the bank balances compliance, security, and operational reliability while running Airflow at scale across hybrid on-prem and cloud infrastructure.

Deutsche Bank’s operating reality: regulation first, speed second

Deutsche Bank is one of the world’s largest global clearing banks, operating payment and corporate banking services across 35 countries. That global footprint brings significant complexity, including dozens of payment systems and decades-old legacy platforms, where failures can disrupt payment flows across the broader financial network and are therefore subject to strict, multi-jurisdictional regulatory oversight.

Early in the talk, Christian set expectations clearly when it comes to getting approval on new tech:

“What takes you guys a week to do, takes us three months to half a year.”

Tooling decisions, architectural changes, and even basic access require multiple layers of approval, auditability, and regulatory sign-off. Engineers need special permission to access GitHub, and common SaaS tools are entirely off-limits due to data leakage risk.

The regulatory bar is uncompromising. Deutsche Bank must demonstrate completeness, accuracy, and timeliness of data processing with effectively zero error budget, especially for anti-financial crime (AFC), regulatory reporting, and account statement generation. Add country-specific data residency rules, conflicting retention requirements (GDPR vs. tax regulations), and strict access controls, and the result is a highly controlled and mission-critical data environment.

How Deutsche Bank uses Airflow in practice

Operating with tight regulatory constraints, Deutsche Bank uses Airflow as its dependable orchestration layer, valuing the control, visibility, and repeatability it provides.

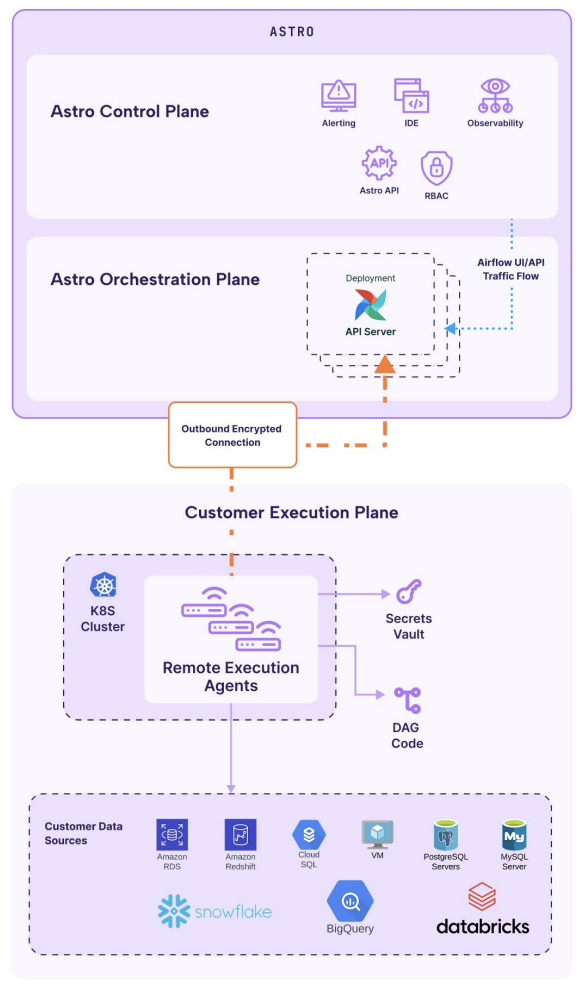

A core use case is End of Day (EOD) loading. Deutsche Bank receives large volumes of batch files from upstream systems after daily processing completes, often originating from legacy platforms. Airflow orchestrates ingestion, transformation, and publication of this data into standardized, JSON-based datasets, while enforcing immutability, one-time execution, and full auditability required for regulatory review.

Figure 1: Deutsche Bank’s End of Day loading workflow using Airflow run IDs to enforce exactly-once loading. Image source.

A key design principle of EOD loading is data immutability and exactly-once execution. Instead of overwriting data, each pipeline run is tied to a unique Airflow Dag run ID, ensuring a given file can only be loaded once. Every dataset is versioned and tagged with that run identifier, allowing auditors to trace precisely which data was processed, when it ran, and how a specific result at a specific point in time was produced, even after later data corrections.
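The talk summary does not show Deutsche Bank's implementation, so the following is only a minimal sketch of the idea, assuming a small load ledger in SQLite. The table names, the `load_file` helper, and the sample run ID string are hypothetical and chosen for illustration; a uniqueness constraint on the file name is what blocks a second load, and every stored row carries the run ID that produced it.

```python
import sqlite3


class AlreadyLoadedError(Exception):
    """Raised when a file was already loaded by an earlier run."""


def open_ledger(path=":memory:"):
    # Hypothetical schema: one ledger row per file, append-only data rows.
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS load_ledger ("
        " file_name TEXT PRIMARY KEY,"
        " run_id TEXT NOT NULL)"
    )
    conn.execute(
        "CREATE TABLE IF NOT EXISTS records ("
        " run_id TEXT NOT NULL,"
        " payload TEXT NOT NULL)"
    )
    return conn


def load_file(conn, file_name, rows, run_id):
    """Append rows tagged with run_id; refuse a second load of the same file."""
    try:
        with conn:  # one transaction: ledger entry and rows commit together
            conn.execute(
                "INSERT INTO load_ledger (file_name, run_id) VALUES (?, ?)",
                (file_name, run_id),
            )
            conn.executemany(
                "INSERT INTO records (run_id, payload) VALUES (?, ?)",
                [(run_id, row) for row in rows],
            )
    except sqlite3.IntegrityError as exc:
        # The primary key on file_name rejects the duplicate; nothing is written.
        raise AlreadyLoadedError(file_name) from exc


def rows_for_run(conn, run_id):
    """Return exactly the rows a given run produced, in insertion order."""
    cur = conn.execute(
        "SELECT payload FROM records WHERE run_id = ? ORDER BY rowid",
        (run_id,),
    )
    return [row[0] for row in cur]
```

In an Airflow task, the `run_id` argument would come from the task's runtime context rather than being hard-coded. Because existing rows are never updated or deleted, a later correction arrives as a new file under a new run ID, and an auditor can still query what an earlier run wrote.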

The bank runs intraday pipelines with streaming workflows under Airflow’s control. The processing is event-driven, with Airflow acting as the single pane of glass for operations and support. Given the strict “four-eyes” principle and recorded production access, Airflow’s centralized UI becomes critical for monitoring, controlled restarts, and audited interventions.

Airflow is also used as a controlled job runner for sensitive operational tasks where direct access to infrastructure is intentionally restricted. This includes running Spark jobs, executing DDL operations, managing certificates, and triggering one-off recovery or maintenance workflows. In several cases, Airflow is the only approved path to safely execute these actions in production.

Where regulations permit, Deutsche Bank runs data workloads on cloud platforms; in other countries, data residency and sovereignty rules require processing to remain strictly on-premises. Airflow acts as a unifying control plane across these environments, giving teams the flexibility to run the same orchestration patterns in cloud or on-prem infrastructure without changing how pipelines are built or operated.

Key lessons learned from Deutsche Bank

- In regulated environments, orchestration value comes from auditability, control, and transparency, not just feature velocity.

- Centralized orchestration provides operational leverage when access is restricted and every action must be provable.

- Designing for immutability and traceability upfront pays dividends during audits and incident reviews.

To hear the full story, including architectural diagrams and real-world examples, watch the replay of Christian’s Airflow Summit session: Learn from Deutsche Bank.

Freedom to Run Anywhere with Astronomer

For teams in regulated industries, adopting Apache Airflow often comes with a tradeoff. Many want commercial support, operational guarantees, or relief from managing Airflow at scale, but cannot move sensitive data or workloads outside tightly defined compliance boundaries.

Astronomer is designed to support those realities. Rather than forcing a single deployment model, it provides options that allow organizations to standardize on Airflow while respecting regulatory, security, and data residency constraints.

This flexibility is especially important in hybrid environments, where some workloads can run in the cloud while others must remain on-premises. Astronomer’s architecture allows teams to adopt Airflow consistently across these environments, even as regulatory requirements differ by country, workload, or data domain.

Remote Execution: Enabling Secure, Cloud-Native Orchestration

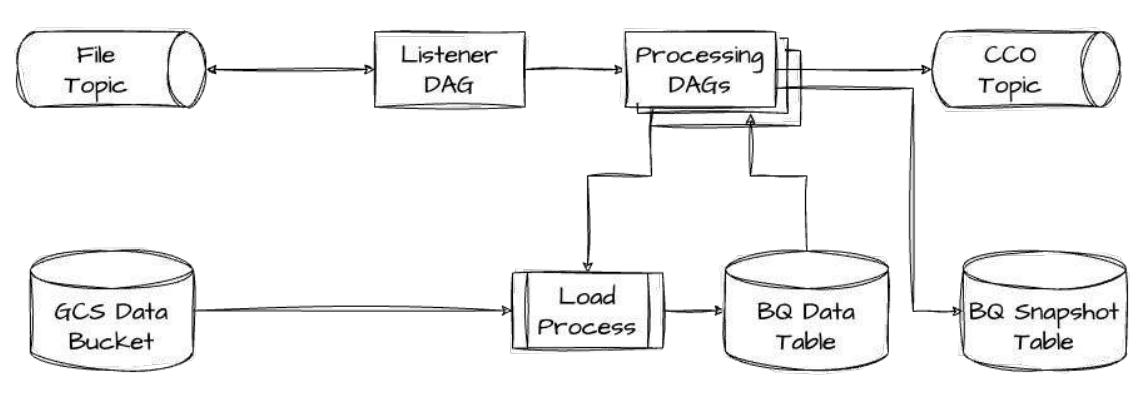

Remote Execution, available with the Astro managed Airflow service, cleanly separates orchestration from execution. Astronomer operates the Airflow control plane, handling scaling, security, and reliability, while all task execution and data remain inside the customer’s own cloud or on-premises environment and within their compliance boundary. It does this through a three-plane architecture:

- The control plane manages users and metadata but never sees your data.

- The orchestration plane schedules workflows in a single-tenant environment.

- The execution plane (fully yours) runs the tasks using your infra, secrets, and workload identities.

Figure 2: Stepping through Remote Execution’s architecture and traffic flow

All communication between the execution plane and Astro’s orchestration plane uses outbound-only, encrypted connections. The Remote Execution Agents communicate only to report task status and agent health and to retrieve the next task to execute. Neither proprietary data nor code ever leaves the customer’s execution environment.

There is no need for inbound firewall exceptions. Astro’s exclusive Remote Execution Agents authenticate with your IAM and run jobs under customer-managed identities, in line with zero-trust principles.
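The outbound-only pattern described above can be illustrated generically. The sketch below is not Astro's agent protocol or API; `OrchestrationPlane`, `Agent`, and `poll_once` are hypothetical names, and the network is replaced by an in-process stand-in. It shows only the shape of the exchange: the agent initiates every call, receives a task identifier, runs locally held code, and sends back a status.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class OrchestrationPlane:
    """In-process stand-in for the scheduling side; it never calls the agent."""

    queue: deque = field(default_factory=deque)
    statuses: dict = field(default_factory=dict)

    def next_task(self):
        # Hands out a task identifier only, never data or code.
        return self.queue.popleft() if self.queue else None

    def report(self, task_id, state):
        self.statuses[task_id] = state


class Agent:
    """Runs inside the customer environment and initiates every exchange."""

    def __init__(self, plane, handlers):
        self.plane = plane
        self.handlers = handlers  # task_id -> local callable; code stays local

    def poll_once(self):
        task_id = self.plane.next_task()  # outbound request for work
        if task_id is None:
            return False
        try:
            self.handlers[task_id]()  # executes locally, on local data
            state = "success"
        except Exception:
            state = "failed"
        self.plane.report(task_id, state)  # outbound request, status only
        return True
```

Because the orchestration side holds no reference to the agent, it has no way to open a connection into the execution environment, which is the property that removes the need for inbound firewall rules.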

With remote execution, Astro delivers the benefits of managed Airflow without customer data ever leaving their secured and approved environment, making it suitable for highly regulated and sensitive workloads. You can learn more by downloading our whitepaper: Remote Execution: Powering Hybrid Orchestration Without Compromise.

Astro Private Cloud

For organizations that cannot adopt any managed services, Astro Private Cloud delivers enterprise-grade Airflow-as-a-Service entirely within your own environment. It runs exclusively on customer-managed infrastructure—across private cloud, on-premises, or fully air-gapped deployments—providing complete ownership over data, network boundaries, and security controls.

Astro Private Cloud consolidates fragmented Airflow usage into a centrally governed platform with isolated, multi-tenant deployments. A unified control plane enables teams to standardize orchestration, enforce security and governance policies, and manage multiple Airflow environments while individual teams operate independently within dedicated namespaces.

By combining centralized governance with full infrastructure control, Astro Private Cloud reduces operational overhead, strengthens security and compliance, and enables organizations to reliably scale orchestration across the enterprise.

Conclusion

Christian Förnges’ session offers a grounded look at what it takes to run Apache Airflow in production when regulatory scrutiny is constant and failure is not an option. Deutsche Bank’s experience shows that Airflow’s strength extends well beyond scheduling workflows, to providing a transparent, auditable, and reliable control plane for data operations at scale.