Note: There is a newer version of this webinar available: Airflow 101: How to get started writing data pipelines with Apache Airflow®.

Topics to be discussed:

- What is Airflow

- Core Components

- Core Concepts

- Flexibility of Pipelines as Code

- Getting Airflow up and Running

- Demo DAGs

What is Airflow?

Definition: Apache Airflow® is a way to programmatically author, schedule and monitor data pipelines.

- Airflow is the de facto standard for data orchestration

- Born inside Airbnb, open-sourced, and graduated to a top-level Apache Software Foundation project

- Leveraged by 1M+ data engineers around the globe to programmatically author, schedule, and monitor data pipelines

- Deployed by thousands of companies as the unbiased data control plane, translating business rules to power their data processing fabric

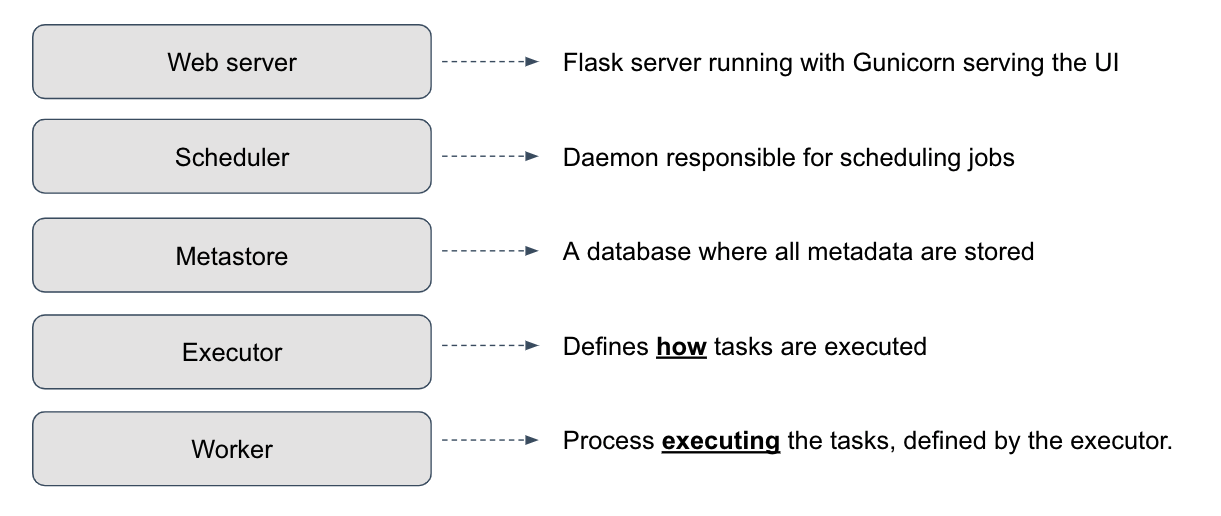
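To make "programmatically author" concrete, here is a minimal sketch of the pipelines-as-code idea using only the Python standard library. It is not Airflow's API: the task functions, the `tasks` mapping, and `run_pipeline` are hypothetical names chosen for illustration. It only shows tasks written as plain functions, dependencies declared as a directed acyclic graph, and execution in dependency order.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


# Hypothetical task functions; in Airflow these would be operators.
def extract():
    return [1, 2, 3]


def transform(rows):
    return [r * 10 for r in rows]


def load(rows):
    return f"loaded {len(rows)} rows"


# Each task name maps to (function, list of upstream task names).
tasks = {
    "extract": (extract, []),
    "transform": (transform, ["extract"]),
    "load": (load, ["transform"]),
}


def run_pipeline(tasks):
    """Run every task after its upstream tasks and collect the results."""
    graph = {name: set(upstream) for name, (_, upstream) in tasks.items()}
    order = list(TopologicalSorter(graph).static_order())
    results = {}
    for name in order:
        func, upstream = tasks[name]
        # Pass each upstream result to the task as a positional argument.
        results[name] = func(*(results[u] for u in upstream))
    return order, results


if __name__ == "__main__":
    order, results = run_pipeline(tasks)
    print(order)
    print(results["load"])
```

Airflow adds scheduling, retries, monitoring, and distributed execution on top of this basic idea, which is what the components below provide.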

Core Components

Executors

- Local: good for local and development environments

- Celery: good for a high volume of short tasks

- Kubernetes: good for autoscaling and task-level configuration

There is also the Sequential executor, but it offers no parallelism.

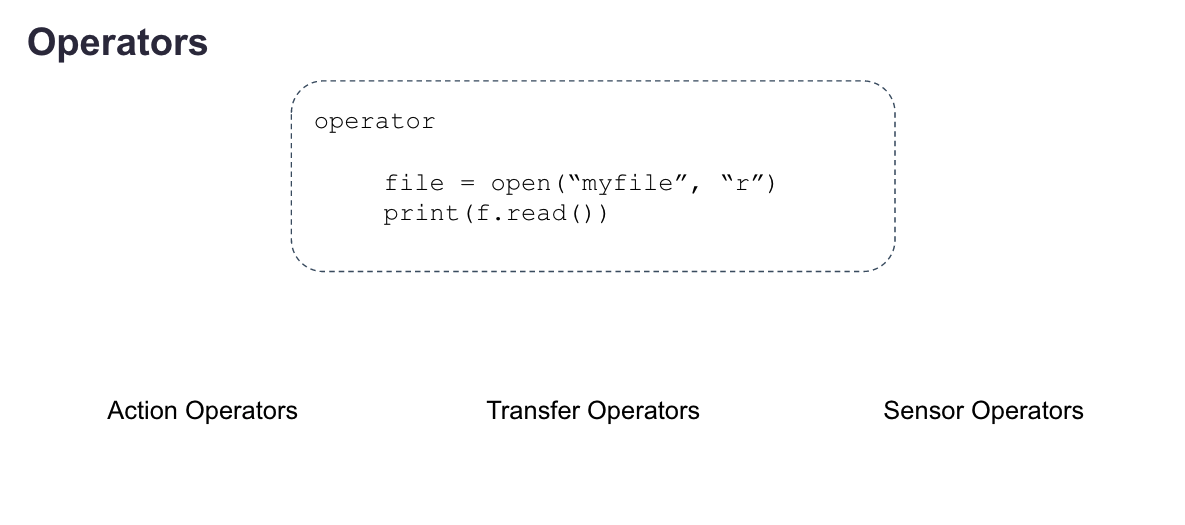

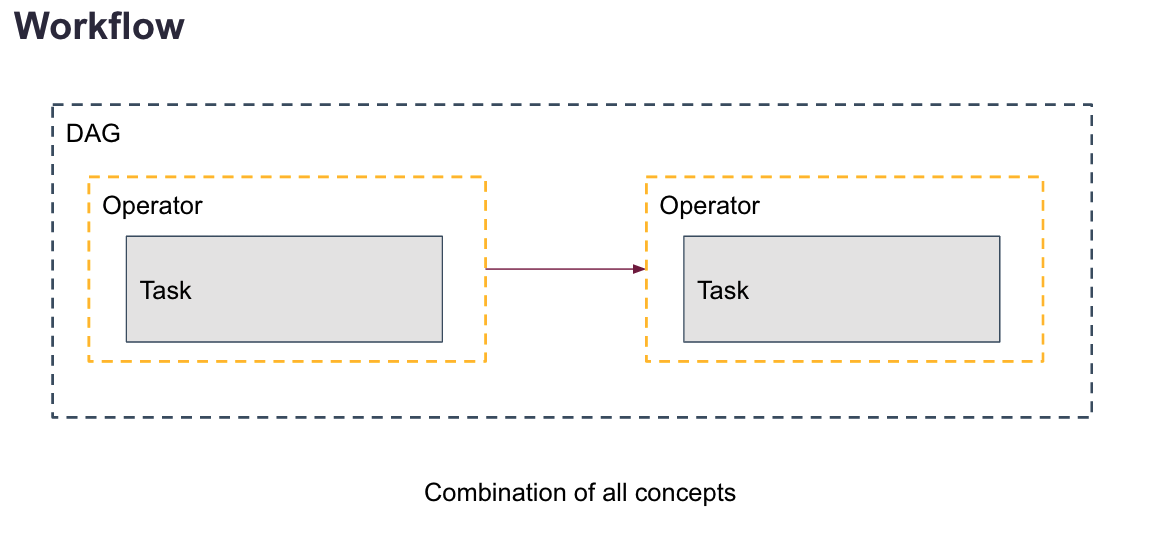

Task

- Instance of an Operator

Task Instance

- Represents a specific run of a task: DAG + task + point in time
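The "DAG + task + point in time" idea can be sketched with a small data class. This is an illustrative model only, not Airflow's own `TaskInstance` class: `TaskInstanceKey`, its field names, and `task_instances` are assumptions made for the example, as is the daily interval.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class TaskInstanceKey:
    """Illustrative only: identifies one run of one task in one DAG."""
    dag_id: str
    task_id: str
    point_in_time: datetime


def task_instances(dag_id, task_ids, start, runs, interval=timedelta(days=1)):
    """Enumerate one key per task for each scheduled point in time."""
    return [
        TaskInstanceKey(dag_id, task_id, start + i * interval)
        for i in range(runs)
        for task_id in task_ids
    ]


# Two tasks scheduled for three daily runs yield six distinct task instances.
keys = task_instances(
    "example_dag", ["extract", "load"], datetime(2021, 1, 1), runs=3
)

if __name__ == "__main__":
    for key in keys:
        print(key)
```

The same task appears once per run, so the task definition stays fixed while each task instance carries its own point in time.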

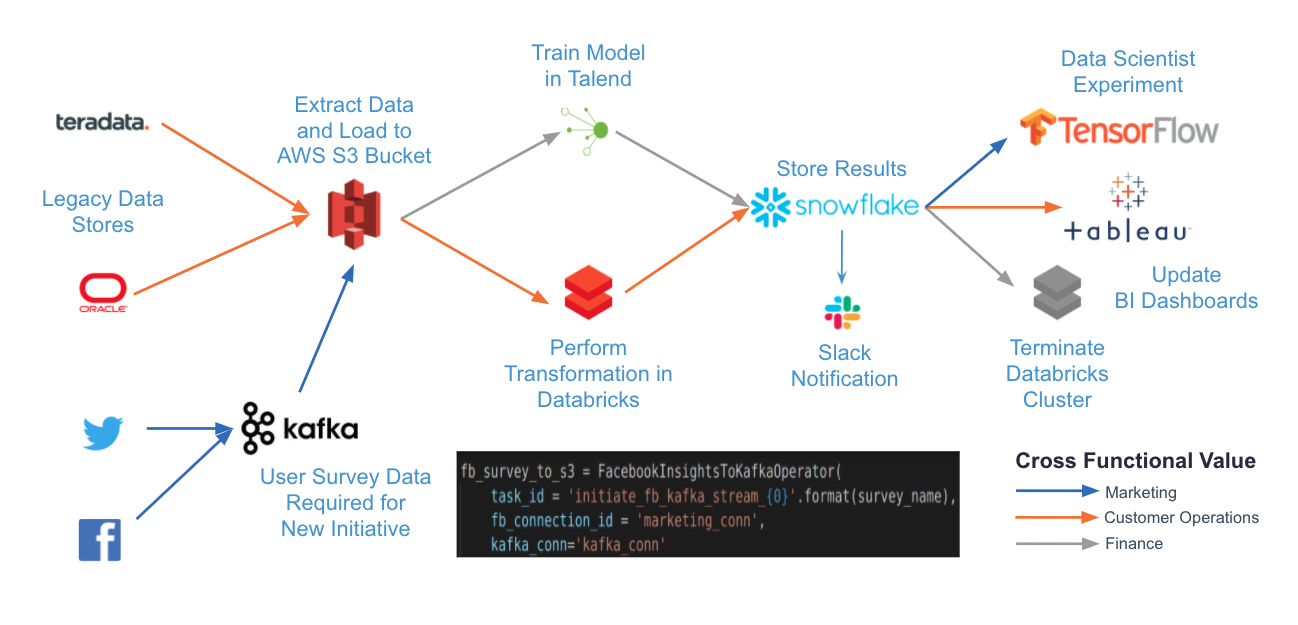

Flexibility of Data Pipelines-as-Code

Getting Started with Apache Airflow®

The easiest way to get started with providers and Apache Airflow® 2.0 is the Astronomer CLI. Our Quickstart Guide walks you through getting Airflow up and running.

Join the thousands of other data engineers who have received the Astronomer Certification for Apache Airflow® Fundamentals. This exam assesses your understanding of the basics of the Airflow architecture and your ability to create basic data pipelines for scheduling and monitoring tasks.

To access the demo DAGs used in the Intro to Airflow webinar, visit this GitHub repository.