Use Cohere and OpenSearch to analyze customer feedback in an MLOps pipeline

Info

This page has not yet been updated for Airflow 3. The concepts shown are relevant, but some code may need to be updated. If you run any examples, take care to update import statements and watch for any other breaking changes.

With recent advances in the field of Large Language Model Operations (LLMOps), you can now combine different language models to answer a query more efficiently. This use case shows how you can use Apache Airflow to orchestrate an MLOps pipeline using two different models: you’ll use embeddings and text classification by Cohere with an OpenSearch search engine to analyze synthetic customer feedback data.

Before you start

Before trying this example, make sure you have:

- The Astro CLI.

Clone the project

Clone the example project from the Astronomer GitHub. To keep your credentials secure when you deploy this project to your own Git repository, create a file called .env with the contents of the .env_example file in the project root directory.

The repository is configured to create and use a local OpenSearch instance, accessible on port 9200. If you already have a cloud-based OpenSearch instance, you can update the value of AIRFLOW_CONN_OPENSEARCH_DEFAULT in .env to connect to your own instance.
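As a rough sketch of what such a connection value could look like, the snippet below builds a JSON-formatted value for AIRFLOW_CONN_OPENSEARCH_DEFAULT. The host, login, password, and extra settings are placeholders and assumptions, not values from the example repository; check the .env_example file for the format the project actually uses.

```python
import json

# Hypothetical values: replace them with the details of your own OpenSearch instance.
connection = {
    "conn_type": "opensearch",
    "host": "my-opensearch.example.com",
    "port": 9200,
    "login": "admin",
    "password": "<your-password>",
    # Assumed extras for a TLS-enabled cluster; adjust or drop them as needed.
    "extra": {"use_ssl": True, "verify_certs": True},
}

# Airflow can parse AIRFLOW_CONN_* environment variables that contain JSON.
env_line = f"AIRFLOW_CONN_OPENSEARCH_DEFAULT='{json.dumps(connection)}'"
print(env_line)
```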

To use the Cohere API, you need to create an account and get an API key. A free tier key is sufficient for this example. To use your API key, replace <your-cohere-api-key> in the .env file with your API key value.

Run the project

To run the example project, open your project directory and run the Astro CLI command astro dev start.

This command builds your project and spins up 6 containers on your machine to run it:

- The Airflow webserver, which runs the Airflow UI and can be accessed at https://localhost:8080/.

- The Airflow scheduler, which is responsible for monitoring and triggering tasks.

- The Airflow triggerer, which is an Airflow component used to run deferrable operators.

- The Airflow metadata database, which is a Postgres database that runs on port 5432.

- A Python container running a mock API that generates synthetic customer feedback data, accessible at port 5000.

- A local OpenSearch instance, running on port 9200.

To run the pipeline, run the analyze_customer_feedback DAG by clicking the Play button. Note that the get_sentiment and get_embeddings tasks can take a few minutes to complete while the Cohere API processes the text. If you want to quickly test the DAG, set the NUM_CUSTOMERS variable at the beginning of the DAG to a lower number.

You can also adjust the other parameters at the beginning of the DAG, such as TESTIMONIAL_SEARCH_TERM or FEEDBACK_SEARCH_TERMS, to change parts of the OpenSearch queries in the DAG. If you adjust these parameters, make sure to also change the feedback_options in the app.py file of the mock API so that the generated customer feedback matches your updated search terms.

Project contents

Data source

The data in this example is generated using the app.py script included in the project. The script creates synthetic customer reviews based on a list of examples and a set of randomized customer parameters.
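The following is a minimal sketch of how this kind of generator could work. It is not the code from app.py: the example texts, field names, and parameter values are all hypothetical and only illustrate combining a list of examples with randomized customer parameters.

```python
import random
from typing import Optional

# Hypothetical example texts and parameter values, not the ones used in app.py.
FEEDBACK_OPTIONS = [
    "The user experience is intuitive and fast.",
    "Setup took longer than I expected.",
    "Support resolved my ticket quickly.",
]
LOCATIONS = ["Switzerland", "Germany", "Canada"]
PRODUCT_TYPES = ["cloud service A", "cloud service B"]
AB_TEST_GROUPS = ["A", "B"]


def generate_feedback(num_customers: int, seed: Optional[int] = None) -> list:
    """Create one synthetic feedback record per customer."""
    rng = random.Random(seed)  # a seed makes the output reproducible
    return [
        {
            "customer_id": customer_id,
            "customer_location": rng.choice(LOCATIONS),
            "product_type": rng.choice(PRODUCT_TYPES),
            "ab_test_group": rng.choice(AB_TEST_GROUPS),
            "customer_feedback": rng.choice(FEEDBACK_OPTIONS),
        }
        for customer_id in range(num_customers)
    ]


rows = generate_feedback(5, seed=1)
print(len(rows), "records generated")
```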

Project overview

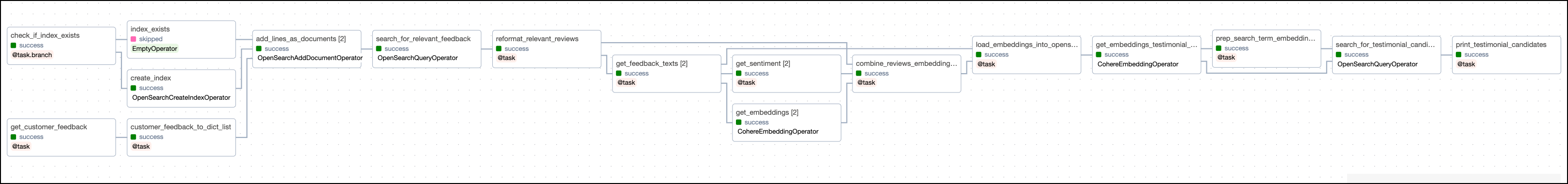

This project contains two DAGs: one for the MLOps pipeline and one that deletes the index in OpenSearch for testing purposes.

The analyze_customer_feedback DAG ingests data from the mock API and loads it into an OpenSearch index. The DAG then uses the Cohere API to get sentiment scores and embeddings for a subset of the customer feedback returned by a keyword OpenSearch query. The embeddings and sentiment analysis scores are ingested back into OpenSearch and a final query is performed to get the positive feedback most similar to a target testimonial. The DAG ends by printing the retrieved testimonial to the logs.

The delete_opensearch_index DAG deletes the INDEX_TO_DELETE in OpenSearch. This DAG is used during development to allow the analyze_customer_feedback DAG to create the index from scratch. Run this DAG if you would like to start from a clean slate, for example when making changes to the index schema to adapt the project to your own use case.

Project code

This use case showcases how you can use the OpenSearch and Cohere Airflow providers to analyze customer feedback in an MLOps Airflow pipeline.

The tasks in the analyze_customer_feedback DAG can be grouped into four sections:

- Ingest customer feedback data into OpenSearch

- Query customer feedback data from OpenSearch

- Perform sentiment analysis on relevant customer feedback and get embeddings using the Cohere API

- Query OpenSearch for the most similar testimonial using a k-nearest neighbors (k-NN) algorithm on the embeddings and filter for positive sentiment

Several parameters are set at the beginning of the DAG. You can adjust the number of pieces of customer feedback returned by the mock API by changing the NUM_CUSTOMERS variable.

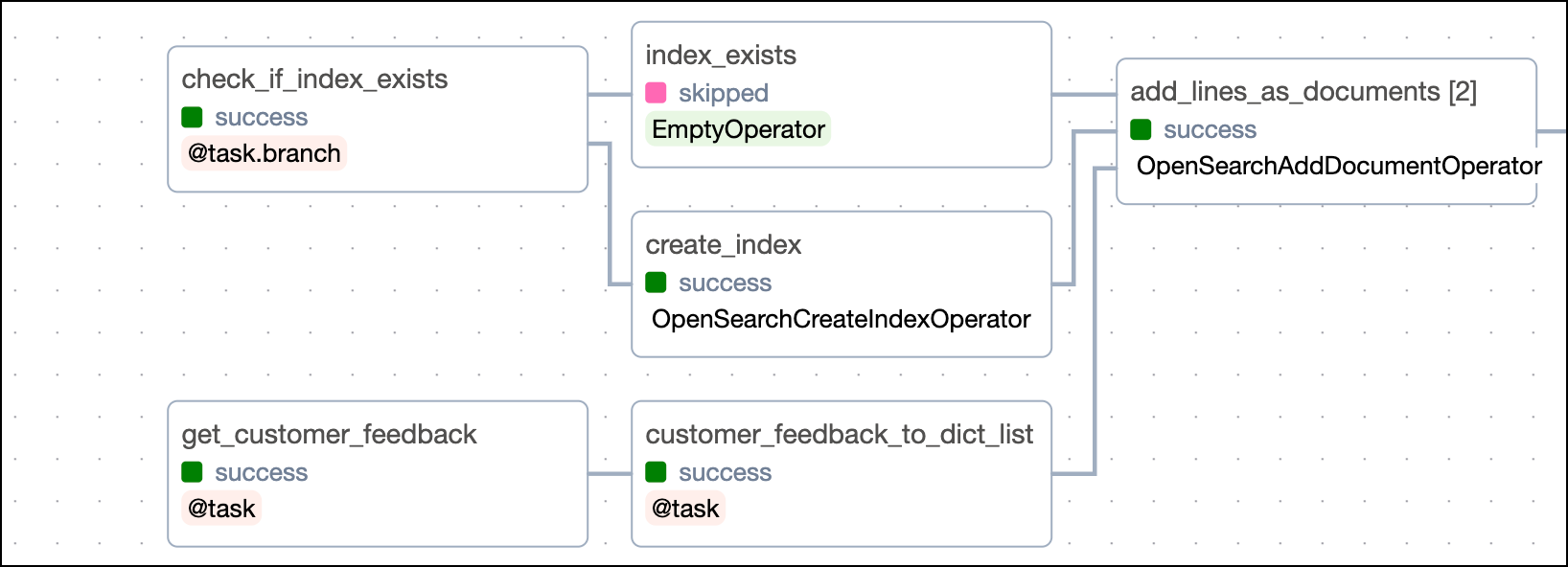

Ingest customer feedback data into OpenSearch

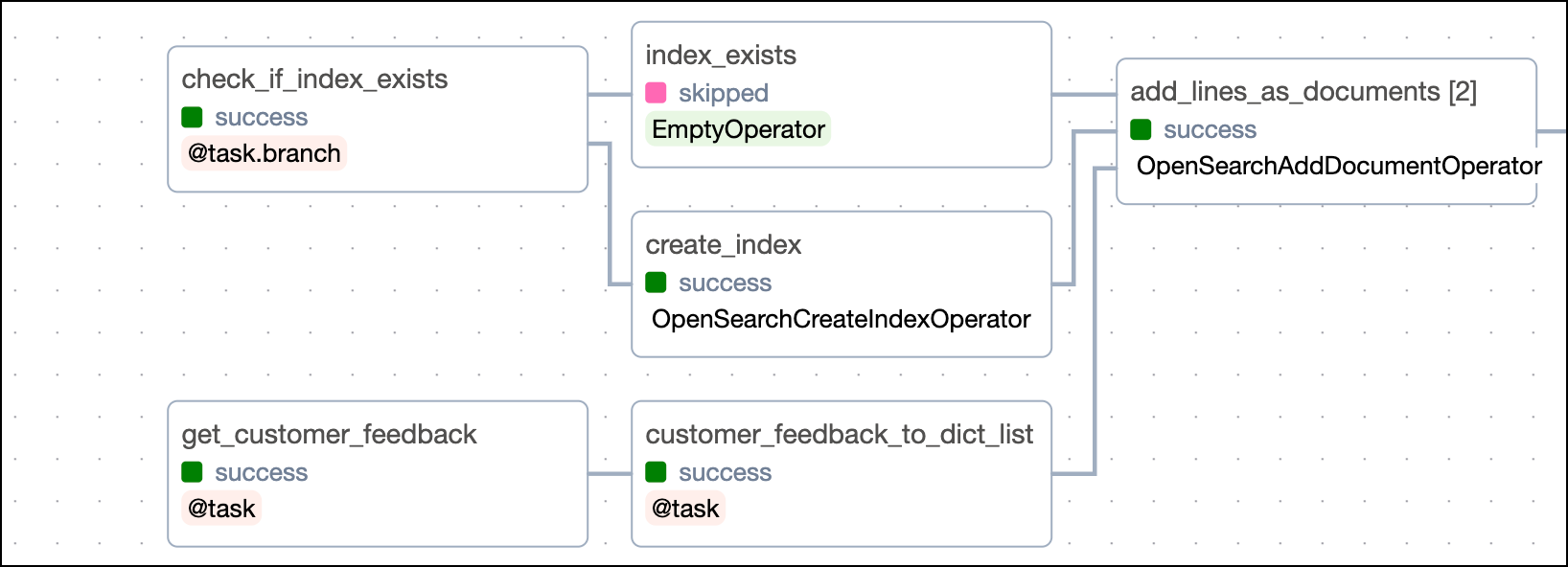

The first six tasks in the analyze_customer_feedback DAG perform the necessary steps to ingest data from the mock API into OpenSearch.

First, the check_if_index_exists task uses the OpenSearchHook to check if an index of the name OPEN_SEARCH_INDEX already exists in your OpenSearch instance. The task is defined using the @task.branch decorator and returns a different task_id depending on the result of the check. If the index already exists, the empty index_exists task will be executed. If the index does not exist, the create_index task will be executed.
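The branching logic can be sketched as a plain function. This is an illustration rather than the DAG's code: it assumes the hook exposes an opensearch-py client whose indices.exists method is called with the index name, and it uses a small stand-in client so the sketch runs without an OpenSearch instance. In the DAG, a function like this would be decorated with @task.branch.

```python
def choose_next_task(client, index_name: str) -> str:
    """Return the task_id to run next, the way a @task.branch function would."""
    if client.indices.exists(index=index_name):
        return "index_exists"
    return "create_index"


# Stand-ins for the OpenSearch client, used only to make the sketch runnable.
class _FakeIndices:
    def __init__(self, existing):
        self._existing = set(existing)

    def exists(self, index):
        return index in self._existing


class FakeClient:
    def __init__(self, existing):
        self.indices = _FakeIndices(existing)


# "customer_feedback" is a hypothetical index name.
print(choose_next_task(FakeClient(["customer_feedback"]), "customer_feedback"))
print(choose_next_task(FakeClient([]), "customer_feedback"))
```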

The create_index task performs index creation using the OpenSearchCreateIndexOperator. Note how the dictionary passed to the index_body parameter includes the properties of the customer feedback documents, including fields for the embeddings and sentiment scores.
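An index body along these lines could be passed to index_body. The field names and the embedding dimension are assumptions made for illustration; the dimension has to match the output size of the Cohere embedding model you use, and the project's actual schema may differ.

```python
# Assumed vector size; set it to the output dimension of your embedding model.
EMBEDDING_DIMENSION = 1024

index_body = {
    # Enables k-NN search on this index (requires the k-NN plugin).
    "settings": {"index": {"knn": True}},
    "mappings": {
        "properties": {
            # Hypothetical field names for the customer feedback documents.
            "customer_feedback": {"type": "text"},
            "customer_location": {"type": "keyword"},
            "ab_test_group": {"type": "keyword"},
            "product_type": {"type": "keyword"},
            "sentiment": {"type": "keyword"},
            "sentiment_confidence": {"type": "float"},
            "embeddings": {"type": "knn_vector", "dimension": EMBEDDING_DIMENSION},
        }
    },
}

print(sorted(index_body["mappings"]["properties"]))
```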

Running in parallel, the get_customer_feedback task makes a call to the mock API exposed at customer_ticket_api:5000.

The payload of the mock API is in the format of:

Next, the customer_feedback_to_dict_list task transforms the above payload into a list of dictionaries to be provided to the OpenSearchAddDocumentOperator document and doc_id parameters.

The resulting list of dictionaries takes the form of:

Lastly, the add_lines_as_documents task uses the OpenSearchAddDocumentOperator to add the customer feedback data to the OpenSearch index. This task is dynamically mapped over the list of dictionaries returned by the customer_feedback_to_dict_list task to create one mapped task instance per set of doc_id and document parameter inputs. To map over a list of dictionaries, the .expand_kwargs method is used.
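The transformation step can be sketched as follows. The input record shape is hypothetical, since it depends on the mock API; only the doc_id and document keys come from the operator parameters named above.

```python
def customer_feedback_to_dict_list(records: list) -> list:
    """Turn API records into one {"doc_id", "document"} dict per record."""
    return [
        {"doc_id": record["customer_id"], "document": record}
        for record in records
    ]


# Hypothetical records, standing in for the mock API payload.
records = [
    {"customer_id": 0, "customer_feedback": "Great user experience."},
    {"customer_id": 1, "customer_feedback": "Setup was confusing."},
]

kwargs_list = customer_feedback_to_dict_list(records)

# Passing this list to .expand_kwargs creates one mapped task instance per
# dictionary, using each dictionary's keys as keyword arguments.
for kwargs in kwargs_list:
    print(kwargs["doc_id"], kwargs["document"]["customer_feedback"])
```

Using .expand_kwargs rather than .expand keeps each doc_id paired with its own document, instead of mapping over every combination of the two lists.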

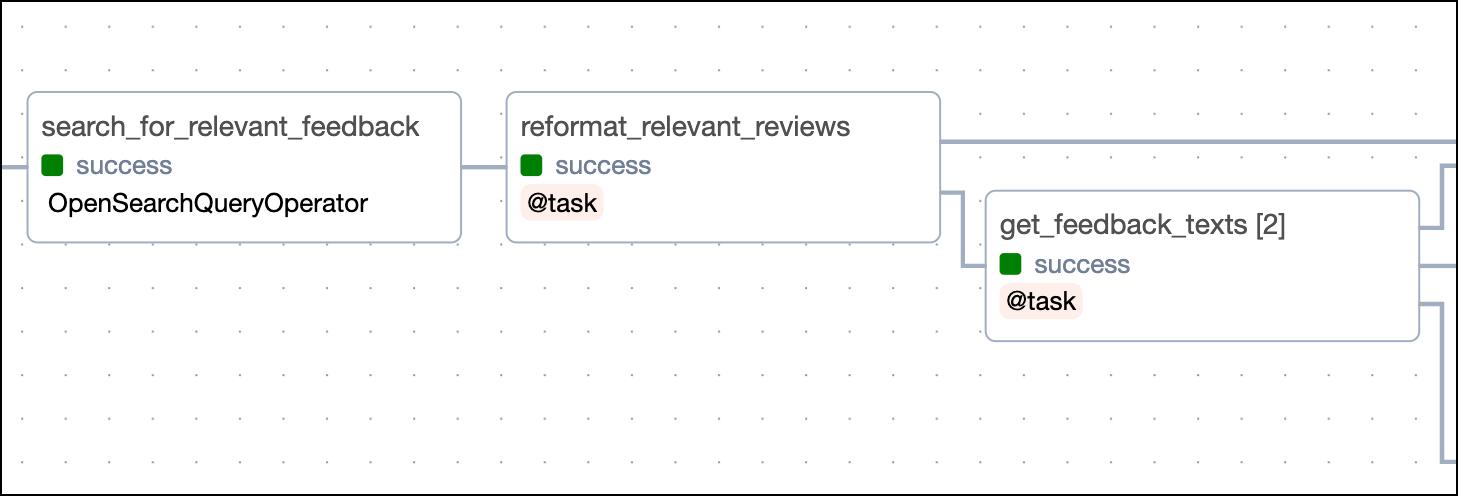

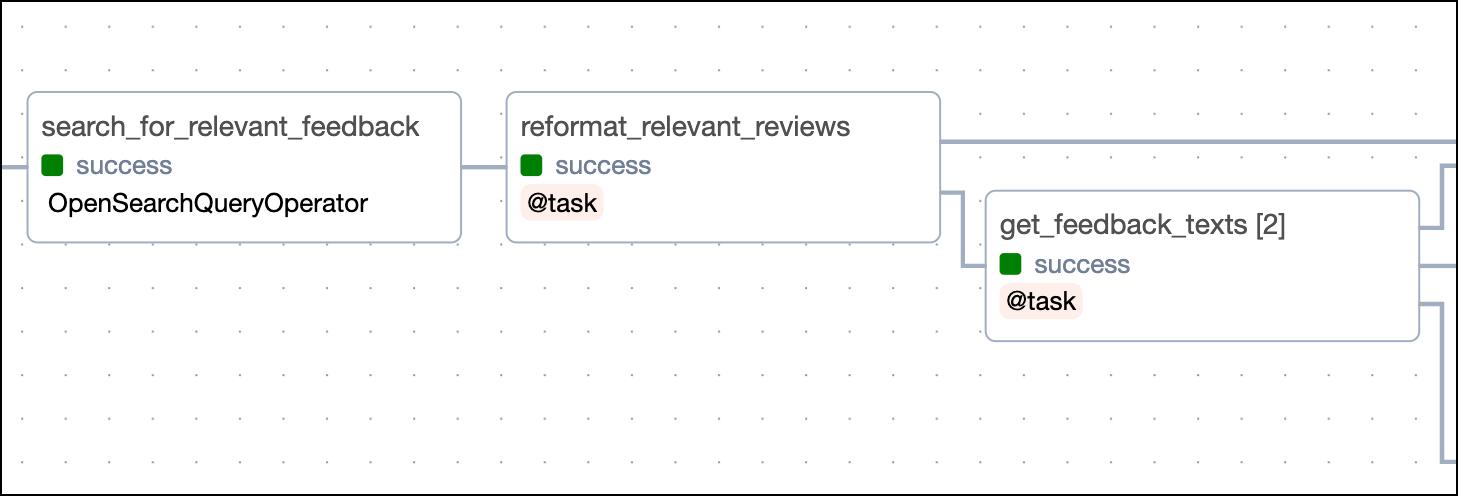

Query customer feedback data from OpenSearch

In the second part of the DAG, OpenSearch is queried to get the subset of customer feedback data we are interested in. For this example, we chose feedback from Swiss customers in the A test group using cloud service A who mentioned the user experience in their feedback.

The search_for_relevant_feedback task uses the OpenSearchQueryOperator to query OpenSearch for the relevant customer feedback data. The query is defined using query domain-specific language (DSL) and passed to the query parameter of the operator. OpenSearch fuzzy matches the terms provided in the FEEDBACK_SEARCH_TERMS variable while filtering for the CUSTOMER_LOCATION, AB_TEST_GROUP, and PRODUCT_TYPE variables.
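A query of this kind might look like the sketch below, which combines a fuzzy match with exact-value filters in a bool query. The field names and example values are assumptions, and the term filters presume that those fields are mapped as keyword fields.

```python
def build_feedback_query(
    search_terms: str,
    location: str,
    ab_test_group: str,
    product_type: str,
    size: int = 10,
) -> dict:
    """Build a query DSL body: fuzzy match on the text, exact filters on the rest."""
    return {
        "size": size,
        "query": {
            "bool": {
                "must": [
                    {
                        "match": {
                            "customer_feedback": {
                                "query": search_terms,
                                "fuzziness": "AUTO",
                            }
                        }
                    }
                ],
                # Filters narrow the result set without affecting the score.
                "filter": [
                    {"term": {"customer_location": location}},
                    {"term": {"ab_test_group": ab_test_group}},
                    {"term": {"product_type": product_type}},
                ],
            }
        },
    }


query = build_feedback_query("UX OR user-experience", "Switzerland", "A", "cloud service A")
print(query["query"]["bool"]["filter"])
```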

The returned customer feedback data is then transformed using the reformat_relevant_reviews task to get a list of dictionaries.

The dictionaries returned contain a flattened version of the verbose output from the search_for_relevant_feedback task. They take the following format:

A second task, get_feedback_texts, is dynamically mapped over the list of dictionaries returned by the reformat_relevant_reviews task to extract the customer_feedback field from each dictionary.
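These two steps can be sketched as plain functions. The sketch assumes the query task returns the standard OpenSearch search response, where each hit carries _id, _score, and _source. The flattened key names and the sample response are hypothetical.

```python
def reformat_relevant_reviews(search_response: dict) -> list:
    """Flatten each hit of a search response into a single dictionary."""
    return [
        {"doc_id": hit["_id"], "score": hit["_score"], **hit["_source"]}
        for hit in search_response["hits"]["hits"]
    ]


def get_feedback_text(review: dict) -> str:
    """Extract the text to send to the Cohere API."""
    return review["customer_feedback"]


# Hypothetical response with a single hit.
sample_response = {
    "hits": {
        "hits": [
            {
                "_id": "7",
                "_score": 1.3,
                "_source": {
                    "customer_feedback": "Great user experience.",
                    "customer_location": "Switzerland",
                },
            }
        ]
    }
}

reviews = reformat_relevant_reviews(sample_response)
texts = [get_feedback_text(review) for review in reviews]
print(texts)
```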

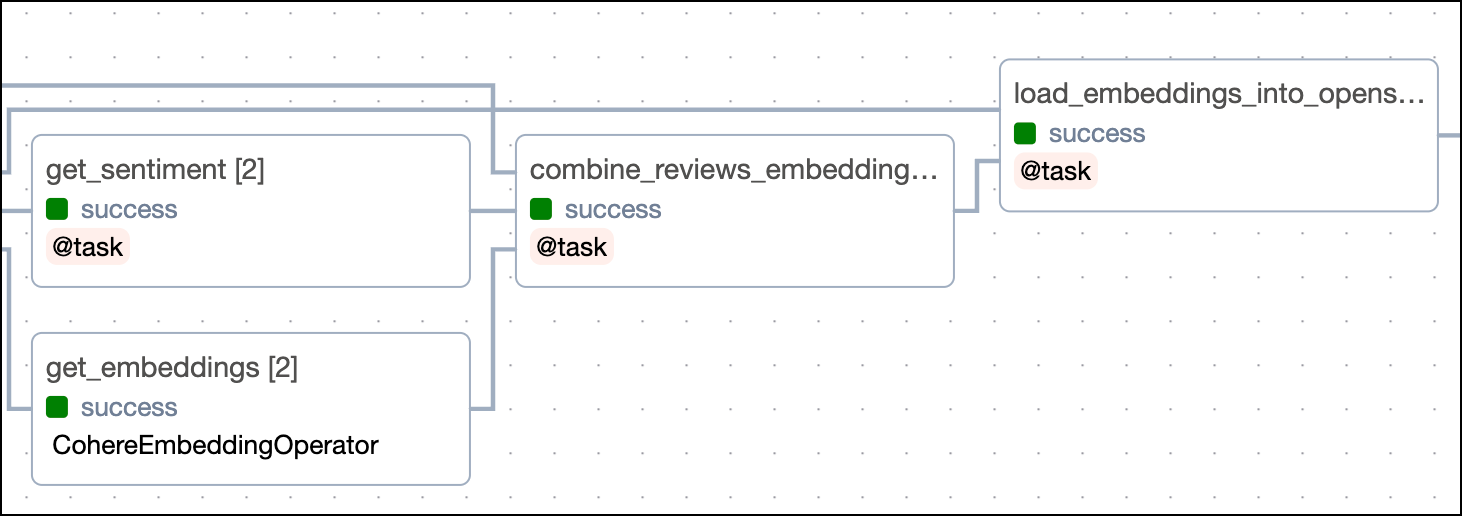

Perform sentiment analysis on relevant customer feedback and get embeddings using the Cohere API

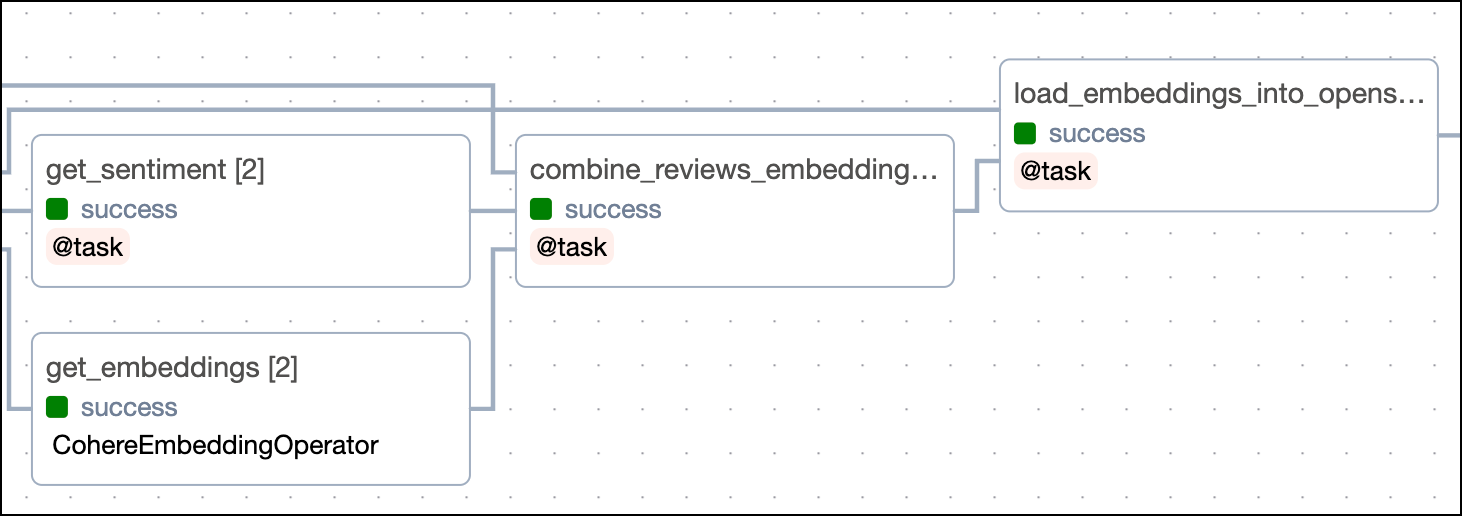

The third section of the DAG consists of four tasks that perform sentiment analysis, get vector embeddings, and load the results back into OpenSearch.

The first task in this section, get_sentiment, uses the CohereHook to get the sentiment of the customer feedback using the Cohere API text classification endpoint. The task is dynamically mapped over the list of feedback texts returned by the get_feedback_texts task to create one mapped task instance per customer feedback to be analyzed in parallel. Sentiment examples are stored in the classification_examples file in the include folder.

Sentiment scores are returned in the format of:

In parallel, the CohereEmbeddingOperator defines the get_embeddings task which uses the embedding endpoint of the Cohere API to get vector embeddings for customer feedback. Similar to the get_sentiment task, the get_embeddings task is dynamically mapped over the list of feedback texts returned by the get_feedback_texts task to create one mapped task instance per customer feedback to be embedded in parallel.

Next, the combine_reviews_embeddings_and_sentiments task combines the embeddings and sentiment scores into a single list of dictionaries.
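One way to combine the three lists is to zip them position by position, as in the sketch below. It assumes that reviews, embeddings, and sentiments arrive in the same order, and the dictionary keys and the shape of the sentiment result are hypothetical.

```python
def combine_reviews_embeddings_and_sentiments(
    reviews: list, embeddings: list, sentiments: list
) -> list:
    """Merge the three lists position by position into one list of dicts."""
    if not (len(reviews) == len(embeddings) == len(sentiments)):
        raise ValueError("Inputs must have the same length to be combined.")
    return [
        {
            **review,
            "embeddings": embedding,
            "sentiment": sentiment["prediction"],
            "sentiment_confidence": sentiment["confidence"],
        }
        for review, embedding, sentiment in zip(reviews, embeddings, sentiments)
    ]


# Hypothetical inputs with tiny vectors for readability.
combined = combine_reviews_embeddings_and_sentiments(
    reviews=[{"doc_id": "7", "customer_feedback": "Great user experience."}],
    embeddings=[[0.1, 0.2, 0.3]],
    sentiments=[{"prediction": "positive", "confidence": 0.97}],
)
print(combined[0]["sentiment"])
```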

For each combined dictionary, the load_embeddings_into_opensearch task uses the OpenSearchHook to update the relevant document in OpenSearch with the embeddings and sentiment scores.
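The update step could look roughly like this. The sketch assumes the hook provides an opensearch-py client and uses its update call with a partial document. The index name and field names are hypothetical, and a recording stand-in replaces the real client so the example runs on its own.

```python
def load_embeddings_into_opensearch(client, index_name: str, combined: dict) -> None:
    """Partially update one document with its embeddings and sentiment."""
    client.update(
        index=index_name,
        id=combined["doc_id"],
        # A "doc" body merges these fields into the existing document.
        body={
            "doc": {
                "embeddings": [float(x) for x in combined["embeddings"]],
                "sentiment": combined["sentiment"],
                "sentiment_confidence": combined["sentiment_confidence"],
            }
        },
    )


# Stand-in client that records calls instead of contacting OpenSearch.
class RecordingClient:
    def __init__(self):
        self.calls = []

    def update(self, index, id, body):
        self.calls.append({"index": index, "id": id, "body": body})


client = RecordingClient()
load_embeddings_into_opensearch(
    client,
    "customer_feedback",
    {
        "doc_id": "7",
        "embeddings": [1, 0.5],
        "sentiment": "positive",
        "sentiment_confidence": 0.97,
    },
)
print(client.calls[0]["body"]["doc"]["sentiment"])
```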

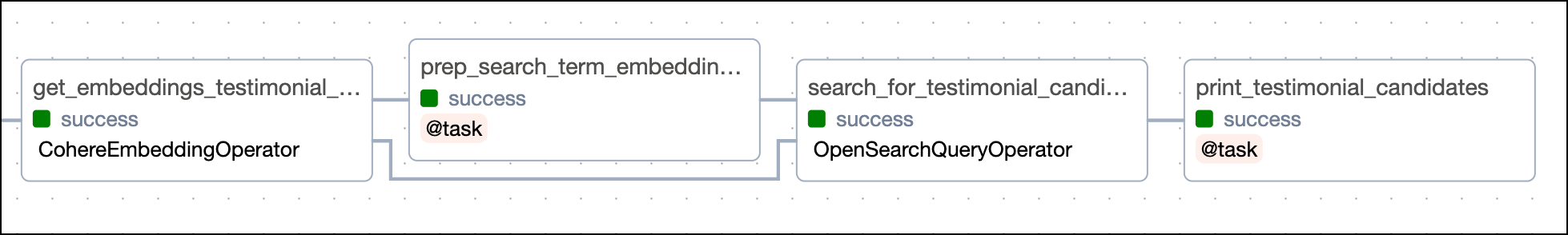

Query OpenSearch for the most similar testimonial using k-NN on the embeddings and filter for positive sentiment

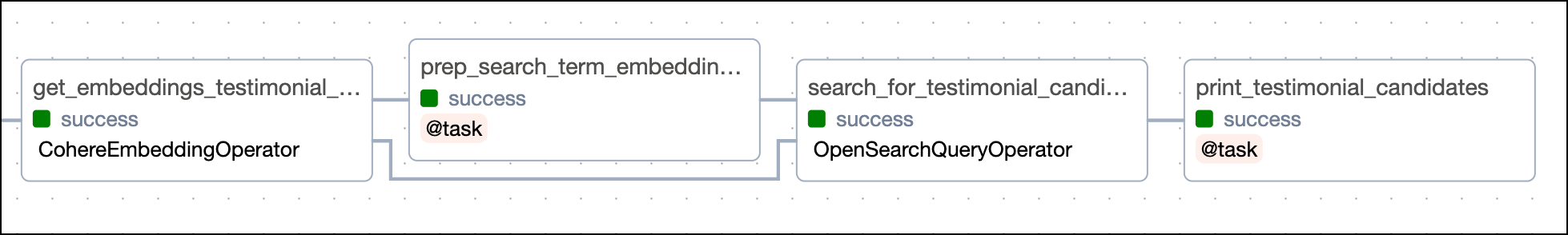

The final section of the DAG queries OpenSearch using both k-NN on the embeddings and a filter on the sentiment scores to get the positive customer feedback that is most similar to a target testimonial.

First, the get_embeddings_testimonial_search_term task converts the target testimonial to vector embeddings using the CohereEmbeddingOperator.

Next, the prep_search_term_embeddings_for_query task converts the embeddings returned by the get_embeddings_testimonial_search_term task to a list of floats to be used in the OpenSearch query.
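A minimal sketch of this conversion is shown below. It assumes the embedding task returns a list with one embedding per input text, so the single testimonial's vector is the first element; the exact shape in the DAG may differ.

```python
def prep_search_term_embeddings(embeddings_output: list) -> list:
    """Return the first embedding as a flat list of floats."""
    if not embeddings_output:
        raise ValueError("No embeddings were returned for the search term.")
    return [float(value) for value in embeddings_output[0]]


# Hypothetical output for a single input text, with a tiny vector.
query_vector = prep_search_term_embeddings([[1, 0.25, -0.5]])
print(query_vector)
```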

The search_for_testimonial_candidates task uses the OpenSearchQueryOperator to query OpenSearch for the customer feedback most similar to the target testimonial, using a k-NN algorithm on the embeddings and a filter for positive sentiment. Note that k-NN search requires the knn plugin to be installed in OpenSearch.
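A query body combining both conditions might be built as in the following sketch. The embeddings and sentiment field names and the value of k are assumptions and need to match your index schema.

```python
def build_testimonial_query(vector: list, k: int = 5, sentiment: str = "positive") -> dict:
    """Build a k-NN query on the embeddings with a sentiment filter."""
    return {
        "size": k,
        "query": {
            "bool": {
                # Keep only documents with the requested sentiment label.
                "filter": [{"term": {"sentiment": sentiment}}],
                # Rank documents by vector similarity to the target testimonial.
                "must": [{"knn": {"embeddings": {"vector": vector, "k": k}}}],
            }
        },
    }


query = build_testimonial_query([0.1, 0.2, 0.3], k=3)
print(query["query"]["bool"]["must"][0]["knn"]["embeddings"]["k"])
```

With a bool filter like this one, the filter may be applied after the nearest neighbors are retrieved, so the query can return fewer than k results.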

Lastly, the print_testimonial_candidates task prints to the logs the positive customer feedback that is closest to the target testimonial and mentions the user experience of cloud service A.

You can review the output of the task in the task logs. They should look similar to the following:

Congratulations! You’ve successfully run a full MLOps pipeline with Airflow, Cohere, and OpenSearch which efficiently finds a customer feedback testimonial based on a set of parameters, including its sentiment and similarity to a target testimonial.

See also

- Tutorial: Orchestrate OpenSearch operations with Apache Airflow.

- Tutorial: Orchestrate Cohere LLMs with Apache Airflow.

- Documentation: Airflow OpenSearch provider documentation.

- Documentation: Airflow Cohere provider documentation.