Use Airflow object storage to interact with cloud storage in an ML pipeline

Info

This page has not yet been updated for Airflow 3. The concepts shown are relevant, but some code may need to be updated. If you run any examples, take care to update import statements and watch for any other breaking changes.

Airflow 2.8 introduced the Airflow object storage feature to simplify how you interact with remote and local object storage systems.

This tutorial demonstrates the object storage feature using a simple machine learning pipeline. The pipeline trains a classifier to predict whether a sentence is more likely to have been said by Star Trek’s Captain Kirk or Captain Picard.

Why use Airflow object storage?

Object stores are ubiquitous in modern data pipelines. They are used to store raw data, model artifacts, image, video, text and audio files, and more. Because each object storage system has its own file naming and path conventions, working with data across many different object stores can be challenging.

Airflow’s object storage feature allows you to:

- Abstract your interactions with object stores using a Path API. Some limitations apply because of the nature of different remote object storage systems; see "Cloud Object Stores are not real file systems" in the Airflow documentation.

- Switch between different object storage systems without having to change your DAG code.

- Transfer files between different object storage systems without needing a dedicated XToYTransferOperator for each pair of systems.

- Transfer large files efficiently. For object storage, Airflow uses shutil.copyfileobj() to stream files in chunks instead of loading them into memory in their entirety.
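To make the streaming point concrete, here is a minimal standard-library sketch of chunked copying with shutil.copyfileobj(). It copies between two in-memory file objects and records the size of each read, using an arbitrary 64 KiB chunk size. This only illustrates the copy pattern; it is not Airflow's internal code, and RecordingReader is a hypothetical helper written for this example.

```python
import io
import shutil


class RecordingReader(io.BytesIO):
    """In-memory file that records the size of every read() call."""

    def __init__(self, data: bytes) -> None:
        super().__init__(data)
        self.read_sizes = []  # bytes returned by each read() call

    def read(self, size=-1):
        chunk = super().read(size)
        self.read_sizes.append(len(chunk))
        return chunk


# Stand-ins for a source and a destination object; in a real pipeline these
# would be file-like objects opened on two (possibly different) storage systems.
source = RecordingReader(b"Make it so. " * 10_000)  # 120,000 bytes
destination = io.BytesIO()

# copyfileobj() reads and writes at most `length` bytes per iteration, so the
# copy itself only buffers one chunk at a time.
CHUNK_SIZE = 64 * 1024  # arbitrary chunk size for this example
shutil.copyfileobj(source, destination, length=CHUNK_SIZE)

print(source.read_sizes)  # [65536, 54464, 0]
```

The final zero-length read is how copyfileobj() detects the end of the source file.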

Time to complete

This tutorial takes approximately 20 minutes to complete.

Assumed knowledge

To get the most out of this tutorial, make sure you have an understanding of:

- Airflow fundamentals, such as writing DAGs and defining tasks. See Get started with Apache Airflow.

- TaskFlow API. See Introduction to the TaskFlow API and Airflow decorators.

- The basics of pathlib.

Prerequisites

- The Astro CLI.

- An object storage system to interact with. This tutorial uses Amazon S3, but you can also use Google Cloud Storage, Azure Blob Storage, or local file storage.

Step 1: Configure your Astro project

- Create a new Astro project by running astro dev init in an empty directory.

- Add the Amazon provider (apache-airflow-providers-amazon) with the s3fs extra, as well as the scikit-learn package, to your Astro project's requirements.txt file. If you are using Google Cloud Storage or Azure Blob Storage, install the Google provider or Azure provider instead.

- To create an Airflow connection to AWS S3, add an environment variable that defines the connection to your .env file. Make sure to replace <your-aws-access-key-id> and <your-aws-secret-access-key> with your own AWS credentials. Adjust the connection type and parameters if you are using a different object storage system.
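Airflow can read connections from environment variables named AIRFLOW_CONN_<CONN_ID>, and since Airflow 2.3 the value can be a JSON document. The sketch below generates such a .env line for an AWS connection using only the standard library. The connection ID my_aws_conn is a hypothetical name (use whatever conn_id your DAG references), and the placeholders are the ones mentioned above. Check the Amazon provider documentation for any additional fields your setup needs.

```python
import json

# Hypothetical connection ID; use whatever ID your DAG passes as conn_id.
CONN_ID = "my_aws_conn"

connection = {
    "conn_type": "aws",
    # For the AWS connection type, login holds the access key ID and
    # password holds the secret access key.
    "login": "<your-aws-access-key-id>",
    "password": "<your-aws-secret-access-key>",
}

# Airflow looks for connections in variables named AIRFLOW_CONN_<CONN_ID>,
# with the connection ID upper-cased.
env_var_name = f"AIRFLOW_CONN_{CONN_ID.upper()}"
env_line = f"{env_var_name}='{json.dumps(connection)}'"
print(env_line)
```

Paste the printed line into .env after replacing the placeholders with real credentials, and keep that file out of version control.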

Step 2: Prepare your data

In this example pipeline, you will train a classifier to predict whether a sentence is more likely to have been said by Captain Kirk or Captain Picard. The training set consists of three quotes from each captain, stored in .txt files.

- Create a new bucket in your S3 account called astro-object-storage-tutorial.

- In the bucket, create a folder called ingest with two subfolders, kirk_quotes and picard_quotes.

- Upload the files from Astronomer’s GitHub repository into the respective folders.
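If you want to try the pipeline against local file storage instead of S3, you need the same folder layout on disk. The sketch below recreates it under a temporary directory with pathlib. The file names and their placeholder text are hypothetical, not the files from Astronomer's repository; copy the real quote files in instead.

```python
import tempfile
from pathlib import Path

# Local stand-in for the astro-object-storage-tutorial bucket.
root = Path(tempfile.mkdtemp()) / "astro-object-storage-tutorial"

for captain in ("kirk", "picard"):
    folder = root / "ingest" / f"{captain}_quotes"
    folder.mkdir(parents=True, exist_ok=True)
    # Hypothetical file names with placeholder text; replace them with the
    # real quote files from Astronomer's GitHub repository.
    for i in range(1, 4):
        (folder / f"{captain}_quote_{i}.txt").write_text(
            f"placeholder quote {i} for {captain}", encoding="utf-8"
        )

for path in sorted(root.rglob("*.txt")):
    print(path.relative_to(root))
```

With Airflow's object storage feature, the ingest base path could then point at a file:// URL for this directory instead of an s3:// URL.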

Step 3: Create your DAG

- In your dags folder, create a file called object_storage_use_case.py.

- Copy the following code into the file.

This DAG uses three different object storage locations. You can point each location at a different object storage system by changing its OBJECT_STORAGE_X, PATH_X and CONN_ID_X values.

- base_path_ingest: The base path for the ingestion data. This is the path to the training quotes you uploaded in Step 2.

- base_path_train: The base path for the training data. This is the location from which the data for training the model is read.

- base_path_archive: The base path for the archive location, to which data that has already been used for training is moved.
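The naming pattern above can be sketched as a small set of constants. The values below (the s3 scheme, the bucket paths, and the my_aws_conn connection ID) are hypothetical, since the tutorial's DAG defines its own. This sketch only builds and inspects the URLs with the standard library. In the DAG, each URL and connection ID would be wrapped in an ObjectStoragePath, for example ObjectStoragePath(url, conn_id=CONN_ID_INGEST).

```python
from urllib.parse import urlsplit

# Hypothetical values following the OBJECT_STORAGE_X / PATH_X / CONN_ID_X pattern.
OBJECT_STORAGE_INGEST = "s3"
PATH_INGEST = "astro-object-storage-tutorial/ingest/"
CONN_ID_INGEST = "my_aws_conn"

OBJECT_STORAGE_TRAIN = "s3"
PATH_TRAIN = "astro-object-storage-tutorial/train/"
CONN_ID_TRAIN = "my_aws_conn"

OBJECT_STORAGE_ARCHIVE = "s3"
PATH_ARCHIVE = "astro-object-storage-tutorial/archive/"
CONN_ID_ARCHIVE = "my_aws_conn"


def base_url(scheme: str, path: str) -> str:
    """Combine a storage scheme and a path into a URL such as s3://bucket/prefix/."""
    return f"{scheme}://{path}"


base_urls = {
    "ingest": base_url(OBJECT_STORAGE_INGEST, PATH_INGEST),
    "train": base_url(OBJECT_STORAGE_TRAIN, PATH_TRAIN),
    "archive": base_url(OBJECT_STORAGE_ARCHIVE, PATH_ARCHIVE),
}

for name, url in base_urls.items():
    parts = urlsplit(url)
    # netloc is the bucket (or container); path is the key prefix inside it.
    print(f"{name}: scheme={parts.scheme} bucket={parts.netloc} prefix={parts.path}")
```

Under this layout, moving only the archive to another system would mean changing the three ARCHIVE constants (for example, a gs scheme, a Google Cloud Storage bucket path, and a Google Cloud connection ID) while the task code stays the same.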

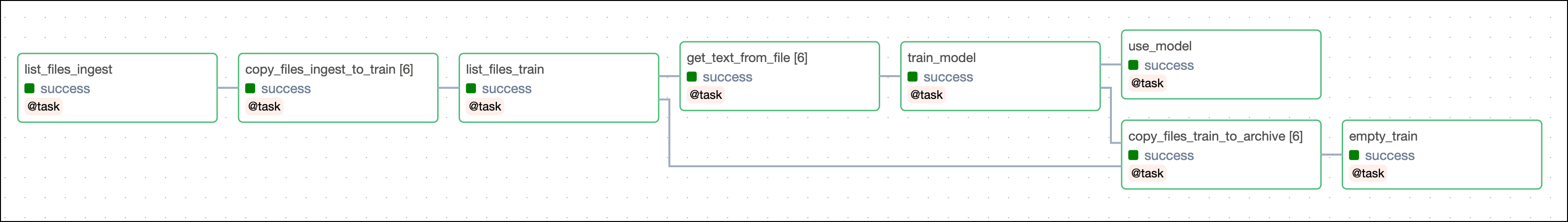

The DAG consists of eight tasks that together form a simple MLOps pipeline.

- The list_files_ingest task takes base_path_ingest as an input and iterates through the subfolders kirk_quotes and picard_quotes to return all files in the folders as individual ObjectStoragePath objects. The object storage feature lets you use the .iterdir(), .is_dir() and .is_file() methods to list and evaluate object storage contents, regardless of which object storage system they are stored in.

- The copy_files_ingest_to_train task is dynamically mapped over the list of files returned by the list_files_ingest task. It takes base_path_train as an input and copies the files from the base_path_ingest location to the base_path_train location, showing how to transfer files between different object storage systems with the .copy() method of the ObjectStoragePath object. Under the hood, this method uses shutil.copyfileobj() to stream files in chunks instead of loading them into memory in their entirety.

- The list_files_train task lists all files in the base_path_train location.

- The get_text_from_file task is dynamically mapped over the list of files returned by the list_files_train task and reads the text from each file using the .read_block() method of the ObjectStoragePath object. Because of the object storage abstraction, you can switch the object storage system, for example to Azure Blob Storage, without changing this code. The file name provides the label for the text, and both the label and the full quote are returned as a dictionary to be passed via XCom to the next task.

- The train_model task trains a Naive Bayes classifier on the data returned by the get_text_from_file task. The fitted model is serialized as a base64-encoded string and passed via XCom to the next task.

- The use_model task deserializes the trained model and runs a prediction on a user-provided quote to determine whether the quote is more likely to have been said by Captain Kirk or Captain Picard. The prediction is printed to the logs.

- The copy_files_train_to_archive task copies the files from the base_path_train location to the base_path_archive location, analogous to the copy_files_ingest_to_train task.

- The empty_train task deletes all files from the base_path_train location.
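To trace how data flows between these tasks without S3 credentials or an Airflow installation, here is a local sketch that mirrors the task logic with plain functions. Several substitutions are assumptions made for this example and are not the tutorial's code:

- pathlib.Path stands in for ObjectStoragePath, since both offer iterdir(), is_dir() and is_file().
- shutil.copyfile stands in for .copy(), and read_bytes() stands in for .read_block().
- A per-label word-count dictionary stands in for the scikit-learn Naive Bayes model.
- pickle plus base64 is assumed as the serialization format.

The file-naming scheme (label prefix before an underscore) and the placeholder text are hypothetical.

```python
import base64
import pickle
import shutil
import tempfile
from collections import Counter
from pathlib import Path

# Local stand-ins for base_path_ingest, base_path_train and base_path_archive.
root = Path(tempfile.mkdtemp())
ingest, train, archive = root / "ingest", root / "train", root / "archive"

# Hypothetical placeholder files named <label>_<n>.txt (not the real quotes).
for captain in ("kirk", "picard"):
    folder = ingest / f"{captain}_quotes"
    folder.mkdir(parents=True)
    for n in (1, 2):
        (folder / f"{captain}_{n}.txt").write_text(f"{captain} sample {n}", encoding="utf-8")


def list_files_ingest(base: Path) -> list:
    """Collect every file in the subfolders of base (cf. list_files_ingest)."""
    return sorted(
        f for folder in base.iterdir() if folder.is_dir()
        for f in folder.iterdir() if f.is_file()
    )


def copy_file(src: Path, dst_dir: Path) -> Path:
    """Copy one file into dst_dir; shutil.copyfile stands in for ObjectStoragePath.copy()."""
    dst_dir.mkdir(parents=True, exist_ok=True)
    dst = dst_dir / src.name
    shutil.copyfile(src, dst)
    return dst


def get_text_from_file(path: Path) -> dict:
    """Read a quote and take its label from the file-name prefix."""
    return {"label": path.name.split("_")[0], "quote": path.read_bytes().decode("utf-8")}


def train_model(records: list) -> str:
    """Fit a stand-in model (word counts per label) and return it as base64 text."""
    model = {}
    for record in records:
        model.setdefault(record["label"], Counter()).update(record["quote"].lower().split())
    return base64.b64encode(pickle.dumps(model)).decode("ascii")


def use_model(serialized: str, quote: str) -> str:
    """Deserialize the model and return the label whose words best match the quote."""
    model = pickle.loads(base64.b64decode(serialized))
    words = quote.lower().split()
    return max(model, key=lambda label: sum(model[label][w] for w in words))


# Wire the steps together in the same order as the DAG's tasks.
train_files = [copy_file(f, train) for f in list_files_ingest(ingest)]
records = [get_text_from_file(f) for f in sorted(train.iterdir()) if f.is_file()]
serialized = train_model(records)
prediction = use_model(serialized, "a kirk sample")
print(prediction)  # kirk

for f in train_files:  # cf. copy_files_train_to_archive
    copy_file(f, archive)
for f in list(train.iterdir()):  # cf. empty_train
    f.unlink()
```

Encoding the pickled model as base64 text lets it travel through XCom as an ordinary string. Only unpickle data that your own pipeline produced, because pickle can execute arbitrary code when loading untrusted input.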

Step 4: Run your DAG

- Run astro dev start in your Astro project to start Airflow, then open the Airflow UI at localhost:8080.

- In the Airflow UI, run the object_storage_use_case DAG by clicking the play button. Enter any quote you like for the my_quote Airflow param.

- After the DAG run completes, go to the task logs of the use_model task to see the prediction made by the model.

Conclusion

Congratulations! You just used Airflow’s object storage feature to interact with files in different locations. To learn more about other methods and capabilities of this feature, see the OSS Airflow documentation.