The Astronomer Providers Package — A Better Option for Long-Running Tasks

DAG authors often use Airflow operators to schedule long-running tasks, whether that means queries in a database like Snowflake, Databricks, or Redshift, or the transfer of a large volume of data between S3 buckets, geographical regions, or cloud storage providers. These tasks take 15 minutes, 30 minutes, an hour, or sometimes much longer to finish. For that entire time, each task occupies an Airflow worker slot, even though the slot does nothing except wait for the external system to complete the work.

As a best practice, organizations try to schedule long-running tasks to kick off during periods of light activity, so they won’t monopolize all of the available Airflow resources. For many common use cases, however, at least some long-running tasks need to repeat several times each day. And self-service practitioners like data scientists and machine learning (ML) engineers expect to be able to trigger long-running tasks on demand. The result is that organizations that depend on Airflow tend to over-provision their Airflow infrastructure, often at considerable expense.

The new Astronomer Providers package is a collection of “deferrable” operators, hooks, and sensors that give organizations the ability to schedule long-running tasks to run asynchronously. This not only minimizes the Airflow resources these tasks consume, but also reduces the cost of provisioning capacity, both on-premises and in public cloud infrastructure.

As of version 1.6.0, the Astronomer Providers package includes almost 50 open source, Apache 2.0-licensed async providers for Kubernetes (K8s), Snowflake, Redshift, Databricks, S3, and many other services. We designed the new deferrable operators to function as drop-in replacements for Airflow’s standard synchronous operators, meaning that in most cases, organizations can easily convert their existing synchronous tasks to run asynchronously.

The Trouble With Long-Running Tasks

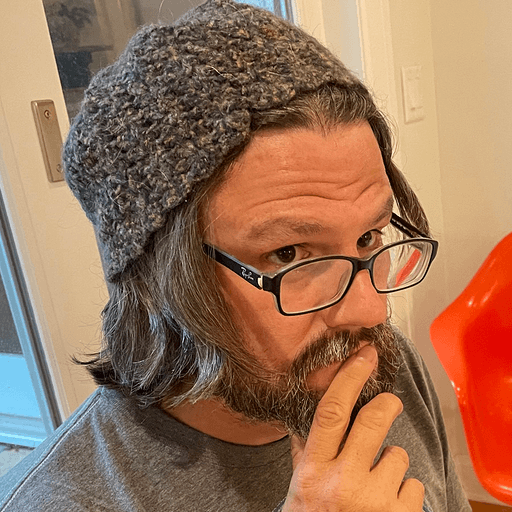

If you design your DAGs to use standard sync operators to schedule long-running tasks, and if you have several such tasks executing concurrently, they will take up multiple worker slots and could prevent other tasks from running.

In larger deployments, Airflow often runs dozens, sometimes hundreds, of concurrent DAGs. In a pool of 100 Airflow worker slots with 98 already occupied, only two slots are free to run new tasks. This may not be a problem when tasks are short-lived, running and terminating in the space of a few seconds. But when ML engineers decide to schedule a half-dozen or more long-running SQL data processing jobs at the same time, or a weekly ETL process initiates a protracted data-copying task between S3 buckets, each of these jobs spawns tasks that hold worker slots for their full duration, leaving those slots unavailable to other tasks.

On the Airflow side, these tasks are simply idling while the external services (Snowflake, Databricks, Redshift, S3, etc.) do the actual work.
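To make the slot arithmetic above concrete, here is a minimal sketch of a worker pool as a toy queueing model. It is not how Airflow's scheduler works internally; the function name `short_task_waits` and the figures beyond the 100-slot, 98-occupied pool (one-hour long tasks, ten queued 5-second tasks) are assumptions chosen for illustration.

```python
import heapq


def short_task_waits(total_slots, busy_slots, busy_for, short_tasks, short_runtime):
    """Return how long each queued short task waits for a free slot (toy model)."""
    # One entry per slot: the time (in seconds) at which that slot becomes free.
    free_at = [float(busy_for)] * busy_slots + [0.0] * (total_slots - busy_slots)
    heapq.heapify(free_at)

    waits = []
    for _ in range(short_tasks):
        start = heapq.heappop(free_at)   # earliest slot to open up
        waits.append(start)              # every short task is queued at t=0
        heapq.heappush(free_at, start + short_runtime)
    return waits


# 100 slots, 98 held for an hour by long-running tasks, ten 5-second tasks queued.
crowded = short_task_waits(100, 98, 3600, 10, 5)
# The same pool if the long-running tasks held no slots while they waited.
freed = short_task_waits(100, 0, 3600, 10, 5)

print(max(crowded))  # 20.0
print(max(freed))    # 0.0
```

In this model the ten short tasks have to take turns on the two free slots, so the last one waits 20 seconds for five seconds of work. With more queued tasks, or fewer free slots, the delay grows accordingly.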

Astronomer Providers Free Up Resources

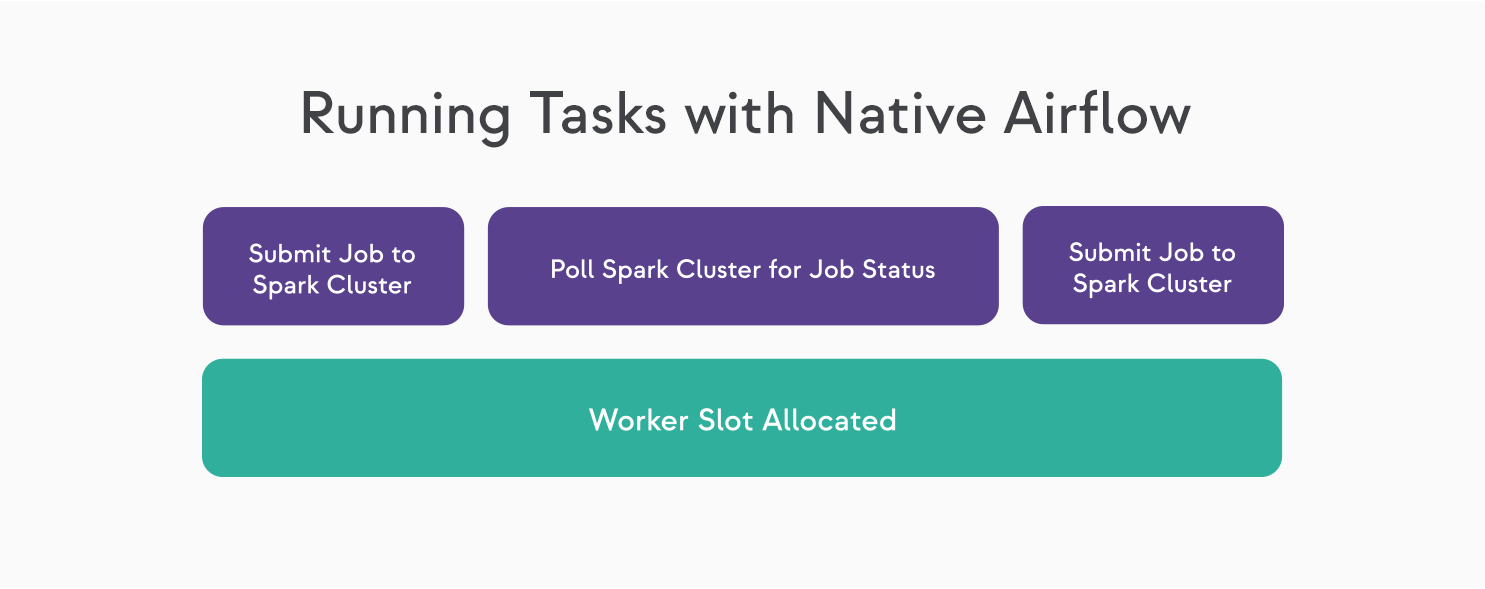

With Astronomer’s new async providers, long-running tasks no longer monopolize available Airflow workers, because these tasks automatically “defer” themselves, and the worker slots they occupy are released back to the pool. This is made possible by two features introduced in Airflow 2.2: support for deferrable hooks, operators, and sensors, and the “triggerer,” a background process that manages deferred tasks.

With the new async providers, when your task has to wait for work to complete in an external system, it automatically defers itself, defining a “trigger” (a specific condition under which it will resume) that gets handed off to the triggerer. Once this handoff is complete, the worker slot that the task was using is returned to the pool. When the triggerer detects that the trigger’s condition has been met (typically, that the remote job has finished), Airflow resumes the deferred task, which occupies a worker slot again only until it finishes.
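The defer-and-resume lifecycle can be sketched with nothing but Python's `asyncio`. This is a simulation under stated assumptions, not Airflow or Astronomer Providers code: `SlotPool`, `remote_job`, `blocking_task`, `deferrable_task`, and `slots_held_mid_run` are hypothetical names, and the "remote job" is just a timed sleep. The sketch counts how many slots are held while the remote work is still in progress.

```python
import asyncio


class SlotPool:
    """Hypothetical stand-in for a pool of worker slots."""

    def __init__(self, size):
        self._slots = asyncio.Semaphore(size)
        self.in_use = 0

    async def __aenter__(self):
        await self._slots.acquire()
        self.in_use += 1
        return self

    async def __aexit__(self, exc_type, exc, tb):
        self.in_use -= 1
        self._slots.release()


async def remote_job(seconds):
    """Pretend external work (a query, a copy) that Airflow only waits on."""
    await asyncio.sleep(seconds)
    return "success"


async def blocking_task(pool, seconds):
    # Synchronous style: the slot is held for the whole remote job.
    async with pool:
        return await remote_job(seconds)


async def deferrable_task(pool, seconds):
    async with pool:
        # First run: submit the job and define the condition to resume on.
        trigger = asyncio.ensure_future(remote_job(seconds))
    # Deferred: the slot is back in the pool while the trigger is pending.
    event = await trigger
    async with pool:
        # Resumed: take a slot again just long enough to finish up.
        return event


async def slots_held_mid_run(task_fn, n_tasks=5, seconds=0.2):
    """Start n_tasks, then count occupied slots halfway through the remote jobs."""
    pool = SlotPool(size=n_tasks)
    tasks = [asyncio.ensure_future(task_fn(pool, seconds)) for _ in range(n_tasks)]
    await asyncio.sleep(seconds / 2)
    held = pool.in_use
    results = await asyncio.gather(*tasks)
    return held, results


if __name__ == "__main__":
    print(asyncio.run(slots_held_mid_run(blocking_task)))
    print(asyncio.run(slots_held_mid_run(deferrable_task)))
```

In the blocking version every task is still holding its slot at the midpoint; in the deferrable version none is. In Airflow itself, a deferrable operator achieves this by calling `self.defer(...)` with a trigger object and the name of the method to resume in, rather than by awaiting anything inside the worker.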

The triggerer aggregates and manages all the waiting tasks. It polls the services in which these tasks are running, asking: “Are you finished yet?” You don’t have to sacrifice any workers or worker slots to do this: the triggerer runs as a separate process in Airflow itself, and because its triggers are asynchronous, a single triggerer can watch many waiting tasks at once.
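The idea of one process asking many services “Are you finished yet?” can be illustrated with a single `asyncio` event loop. This is a rough sketch, not the triggerer's actual implementation: `FakeService`, `poll_until_done`, `run_triggers`, and the job names are all hypothetical, and each fake service simply reports completion after a fixed number of polls.

```python
import asyncio


class FakeService:
    """Hypothetical remote system that reports 'finished' after N polls."""

    def __init__(self, polls_until_done):
        self.polls_until_done = polls_until_done
        self.polls = 0

    async def is_finished(self):
        self.polls += 1
        await asyncio.sleep(0)  # stand-in for a non-blocking status request
        return self.polls >= self.polls_until_done


async def poll_until_done(name, service, interval=0.01):
    """One trigger: keep asking the service until it says it is finished."""
    while not await service.is_finished():
        await asyncio.sleep(interval)  # yields control so other triggers can run
    return name


async def run_triggers(jobs):
    """Watch every job concurrently on one event loop; return names as they finish."""
    waiting = [
        asyncio.ensure_future(poll_until_done(name, service))
        for name, service in jobs.items()
    ]
    finished = []
    for next_done in asyncio.as_completed(waiting):
        finished.append(await next_done)  # here a deferred task would be resumed
    return finished


def demo():
    jobs = {
        "snowflake_query": FakeService(polls_until_done=3),
        "s3_copy": FakeService(polls_until_done=1),
        "redshift_unload": FakeService(polls_until_done=2),
    }
    return asyncio.run(run_triggers(jobs))


if __name__ == "__main__":
    print(demo())
```

Because each poll yields control while it waits, one loop can interleave all three checks without dedicating a thread, process, or worker slot to any single job.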

This has several benefits:

- It frees up worker slots other DAGs can use to run tasks.

- It simplifies resource management in both the on-prem enterprise and the cloud.

- It does away with the need to over-provision Airflow infrastructure resources to accommodate long-running workloads, saving organizations money.

One Airflow user, a director of data engineering for a prominent multinational company, reports that long-running tasks have been a source of significant waste in his organization, which uses Airflow 1.10 to schedule and orchestrate several long-running tasks.

The tasks parallelize SQL processing across very large datasets in Spark, and the Airflow workers that run them are beefy, consuming 25-40 GB of memory on average. These workers spend most of their lives waiting for the synchronous SQL calls they kick off to finish processing in Spark. By using async providers to kick off these calls in the future — if and when the company upgrades to Airflow 2.2 or greater — this person thinks he could eliminate these idling workers and free up almost a terabyte of RAM (along with dozens of virtual CPUs) in K8s. As it stands, the company has had to over-provision K8s in order to support just these long-running tasks. Over-provisioning translates into extra physical servers, each of which costs money to acquire and operate.

The Takeaway

Astronomer Providers are written to be compatible with open-source Airflow, and come complete with long-term support and maintenance by Astronomer. Nearly 50 have been released so far, all focused on giving Airflow users different or better options for running tasks asynchronously.

This is especially helpful for long-running tasks that spend most of their time waiting on an external system. Support for async operation:

- lets users make more effective use of their Airflow resources, especially when it comes to scheduling and orchestrating tasks;

- simplifies capacity planning and provisioning in both the cloud and on-prem contexts; and

- can improve performance and lower OpEx in the cloud.

Look for additional Astronomer Providers to be released on a regular basis.