Meet the Astro Executor: Faster DAGs, Fewer Failures, Lower Costs


Modern data teams orchestrate thousands of workflows daily, from real-time ML feature pipelines to mission-critical financial reporting. As these workloads scale, the infrastructure underneath needs to be bulletproof. Yet teams running Apache Airflow at enterprise scale consistently hit the same wall: existing executors force an impossible choice between reliability and performance.

The problem isn’t Airflow; it’s how tasks get executed.

Celery Executor, while battle-tested, introduces operational complexity through external brokers that can lose tasks during restarts. Kubernetes Executor offers better isolation but adds significant latency through pod startup overhead. Both approaches force teams to choose between reliability and performance, but business-critical workflows demand both without compromise.

At Astronomer, we’ve been running Airflow at massive scale for years: billions of tasks across hundreds of thousands of deployments, alongside driving the evolution of open source Airflow. We’ve seen every failure mode, debugged every performance bottleneck, and learned exactly where traditional executors break down.

That’s why we built the Astro Executor from the ground up.

Purpose-built for Airflow 3 and available exclusively on Astro, the Astro Executor is the default executor for Astro users and supports both hosted and remote execution. It eliminates the trade-offs that have constrained enterprise Airflow deployments. In testing, it delivered 70% higher per-worker task concurrency than Celery Executor while maintaining the reliability that enterprise teams demand.

Why Existing Airflow Executors Fall Short

Enterprise Airflow deployments face a fundamental architecture problem that becomes more acute as scale increases:

Reliability vs. Performance Trade-offs: Production environments need both high throughput and bulletproof reliability. Celery Executor’s dependence on external message brokers (Redis/RabbitMQ) creates single points of failure. When these components restart during routine maintenance or autoscaling events, tasks get lost in queues. The result: stuck DAGs, manual intervention, and broken SLAs.

Meanwhile, Kubernetes Executor eliminates broker dependencies but introduces cold-start latency. Simple tasks that should complete in seconds can take minutes when factoring in pod scheduling and container startup time. Teams compensate by over-provisioning infrastructure or accepting slower execution, both of which drive up operational costs.

The Hidden Cost of Workarounds: Most enterprise teams end up implementing complex monitoring, alerting, and recovery processes to work around these executor limitations. Data engineers spend valuable time debugging “phantom” failures that stem from infrastructure, not business logic. Platform teams over-provision to maintain SLA compliance despite inefficient resource utilization.

These aren’t edge cases—they’re the predictable result of using executors designed for different requirements than modern enterprise workloads demand.

Astro Executor Delivers More Efficiency & Resiliency

We tested the Astro Executor against Celery Executor using real-world DAGs on comparable Astro Deployments. The results show clear efficiency gains and improved resilience.

70% Higher Task Concurrency

We ran both executors on identical infrastructure (A10 workers) using an ETL DAG that processes 5,000 rows of sales and API data through multiple transformation stages, including data extraction, pandas-based transformations, and ML model training tasks. The Astro Executor achieved 17 concurrent tasks per worker with zero task failures, compared to Celery’s 10. That 70% improvement translates directly into higher throughput without additional compute costs.

| Metric* | Celery Executor | Astro Executor | Improvement |
|---|---|---|---|
| Max Task Concurrency per Worker (A10) | 10 | 17 | 70% |

*Benchmarks were measured on hosted (Astro) execution using the Astro Executor. With Remote Execution, DAG run duration is expected to be slightly higher.
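The 70% figure follows directly from the two per-worker numbers in the table. As a quick check, the snippet below reproduces it and then works through a purely hypothetical sizing example; the target of 340 concurrent tasks is an assumed value chosen for illustration, not a benchmark result.

```python
import math

celery_slots = 10  # max concurrent tasks per A10 worker (from the table)
astro_slots = 17   # max concurrent tasks per A10 worker (from the table)

# Relative improvement in per-worker concurrency.
improvement = (astro_slots - celery_slots) / celery_slots
print(f"Improvement: {improvement:.0%}")

# Hypothetical sizing: workers needed to run 340 tasks at the same time.
target_concurrency = 340  # assumed value, for illustration only
celery_workers = math.ceil(target_concurrency / celery_slots)
astro_workers = math.ceil(target_concurrency / astro_slots)
print(f"Workers needed: Celery={celery_workers}, Astro={astro_workers}")
```

Under these assumptions the same concurrency target needs 34 workers at 10 slots each but only 20 at 17 slots each. Real sizing depends on task resource profiles, so treat this as arithmetic rather than a capacity plan.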

Enhanced Reliability Under Stress

While both executors perform similarly under normal conditions, the Astro Executor demonstrates superior resilience during infrastructure disruptions. In extended testing using sustained workloads over longer durations, the Astro Executor maintained zero task failures while Celery experienced occasional task and DAG failures under identical conditions. This difference becomes critical during routine maintenance windows, autoscaling events, or network instability where Celery’s broker dependencies can lead to lost tasks requiring manual intervention.

The reliability advantage stems from direct API communication that eliminates external broker dependencies. When Redis or RabbitMQ restarts during scaling operations, Celery workers can lose connection and orphan queued tasks. The Astro Executor’s agent-based architecture avoids this by maintaining persistent connections directly with the Airflow API server, preventing the single points of failure that plague traditional Celery deployments.

Beyond raw performance, the impact is substantial:

- Enhanced Observability: Direct API coordination provides complete visibility into task lifecycle and agent status, unlike traditional executors that rely on opaque broker interactions.

- Predictable Scaling: No more over-provisioning to compensate for broker dependencies.

- Reduced Incident Response: Fewer “tasks stuck in queue” escalations requiring manual intervention.

These improvements compound at enterprise scale, where small efficiency gains and reliability improvements deliver significant operational value.

Rethinking Task Execution Architecture

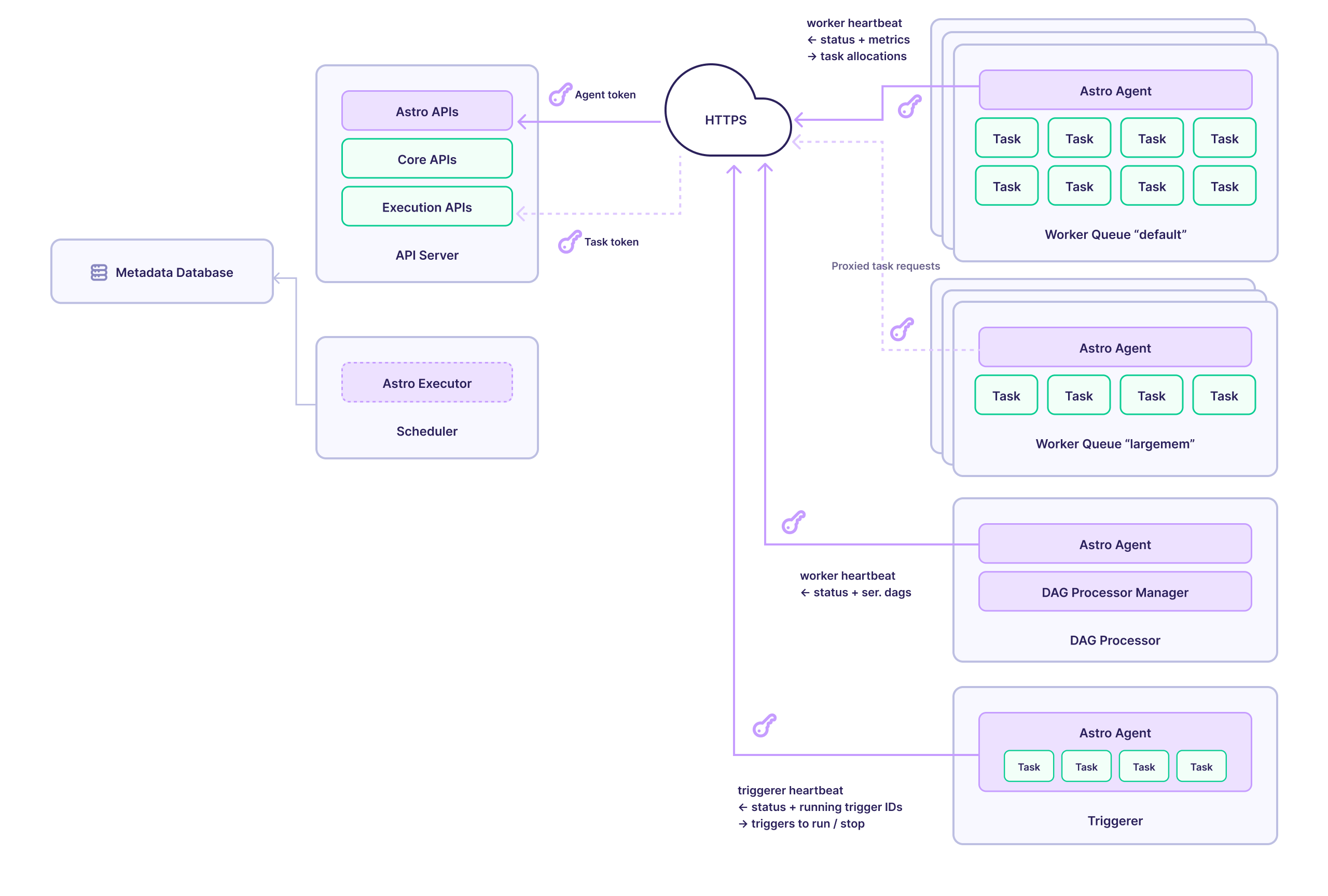

The Astro Executor fundamentally reimagines how Airflow tasks get scheduled and executed. Instead of relying on external brokers or per-task pod creation, it introduces an agent-based coordination model that gives the platform complete control over task lifecycle.

On an Astro Hosted Deployment:

On an Astro Remote Deployment:

Agent-Based Coordination

Traditional executors treat task execution as a “fire and forget” operation—tasks get queued externally and workers pick them up when available. The Astro Executor inverts this model:

Intelligent Agents: Lightweight Python processes that actively communicate with the Airflow API server, reporting their capabilities, capacity, and current workload in real-time.

Centralized Orchestration: The API server maintains complete visibility into the execution environment and makes informed decisions about task placement based on current system state.

Direct Communication: Eliminates intermediate message brokers and external schedulers that can introduce latency or lose tasks during restarts.

This architecture provides three key advantages:

- Predictable Performance: Tasks start executing immediately when agents are available—no waiting for broker polling or pod startup delays.

- Enhanced Reliability: Direct API communication eliminates external dependencies that can fail independently of Airflow itself.

- Complete Observability: Every task assignment and state transition is tracked through the API, making debugging and monitoring transparent.
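To make the coordination model more concrete, here is a minimal in-memory sketch of the idea: agents report their capacity, and a central coordinator places pending tasks only where free slots exist. This is not the Astro Executor's implementation or API. The `Agent` and `Coordinator` classes, their methods, and the "most free slots first" placement rule are all hypothetical, chosen only to illustrate the pattern.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Hypothetical record of what an agent reports about itself."""
    agent_id: str
    total_slots: int
    running: set = field(default_factory=set)

    @property
    def free_slots(self) -> int:
        return self.total_slots - len(self.running)


class Coordinator:
    """Toy stand-in for a central view of agents and pending tasks."""

    def __init__(self):
        self.agents = {}        # agent_id -> Agent
        self.pending = deque()  # task ids waiting for capacity

    def register(self, agent: Agent) -> None:
        self.agents[agent.agent_id] = agent

    def submit(self, task_id: str) -> None:
        self.pending.append(task_id)

    def dispatch(self) -> dict:
        """Assign pending tasks to whichever agent has the most free slots."""
        assignments = {}
        while self.pending:
            agent = max(self.agents.values(),
                        key=lambda a: a.free_slots, default=None)
            if agent is None or agent.free_slots == 0:
                break  # no capacity: remaining tasks stay pending
            task_id = self.pending.popleft()
            agent.running.add(task_id)
            assignments[task_id] = agent.agent_id
        return assignments


coord = Coordinator()
coord.register(Agent("agent-a", total_slots=2))
coord.register(Agent("agent-b", total_slots=1))
for task in ["extract", "transform", "train", "report"]:
    coord.submit(task)

assignments = coord.dispatch()
print(assignments)          # three tasks placed across the two agents
print(list(coord.pending))  # "report" waits until a slot frees up
```

The point of the sketch is that the coordinator knows both what is pending and where capacity exists, so a task without a free slot simply stays in the pending list instead of sitting in an external queue the coordinator cannot see.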

Specialized Agent Types

The executor supports two distinct agent types optimized for different workload patterns:

- Synchronous agents handle traditional Python tasks like data transformations, API calls, and database operations. These agents execute tasks in isolated processes, providing the compute power for most workflow operations.

- Asynchronous agents handle tasks that specifically require Airflow’s triggerer functionality, such as deferrable operators and sensors. These specialized agents prevent triggerer-dependent tasks from blocking synchronous worker resources during wait periods.
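The split between the two agent types can be sketched with plain `asyncio`. The example below is an illustration of the general pattern rather than Astro's code: `route`, `wait_for_condition`, and `async_agent` are hypothetical names, and the `deferrable` flag on a task dictionary is an assumed stand-in for however the platform identifies triggerer-dependent work.

```python
import asyncio


def route(task: dict) -> str:
    """Hypothetical routing rule: deferrable work goes to async agents."""
    return "async" if task.get("deferrable") else "sync"


async def wait_for_condition(name: str, delay: float) -> str:
    """Stand-in for a trigger: waits without occupying a worker process."""
    await asyncio.sleep(delay)
    return name


async def async_agent(waits: dict) -> list:
    """One event loop multiplexes many waiting tasks concurrently."""
    results = await asyncio.gather(
        *(wait_for_condition(name, delay) for name, delay in waits.items())
    )
    return list(results)


tasks = [
    {"task_id": "transform_sales", "deferrable": False},
    {"task_id": "wait_for_file", "deferrable": True},
]
routes = {t["task_id"]: route(t) for t in tasks}
print(routes)

# Both waits overlap on a single event loop instead of holding two slots.
finished = asyncio.run(
    async_agent({"wait_for_file": 0.02, "wait_for_partition": 0.01})
)
print(finished)
```

Because the waits are coroutines, many of them can share a single process, which is why keeping them off synchronous agents frees those slots for compute-heavy tasks.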

Adaptive Scaling

Because agents continuously report their status to the API server, the platform has the information it needs for sophisticated resource allocation decisions. The direct agent communication model enables coordinated scale-down that avoids terminating agents that are actively running tasks during autoscaling events, unlike traditional executors that rely on external schedulers with limited visibility into task state.

This architecture positions the Astro Executor to evolve toward intelligent scaling behaviors as the platform matures, including workload-aware scaling and optimized task placement across available capacity.
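As a rough illustration of coordinated scale-down, the sketch below selects only idle agents for removal when the autoscaler wants to shrink capacity. The function name, the input shape (a mapping of agent id to running task count), and the selection rule are assumptions made for this example, not a description of how Astro chooses agents.

```python
def pick_agents_to_remove(running_by_agent: dict, remove_count: int) -> list:
    """Return up to remove_count agent ids that have no running tasks.

    Busy agents are never selected, so a scale-down request may be only
    partially satisfied until more agents finish their work.
    """
    idle = sorted(
        agent_id
        for agent_id, running in running_by_agent.items()
        if running == 0
    )
    return idle[:remove_count]


# Hypothetical snapshot built from the agents' latest status reports.
status = {"agent-a": 3, "agent-b": 0, "agent-c": 0, "agent-d": 1}

# The autoscaler asks to remove three agents, but only two are idle.
to_remove = pick_agents_to_remove(status, remove_count=3)
print(to_remove)
```

Here the request for three removals yields only the two idle agents; the busy ones are left alone until they drain. A scheduler without per-agent task visibility cannot make that distinction.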

Built-in Observability

Every task routed through the API is tracked from dispatch to completion, giving platform teams complete traceability:

- Real-time status: Agents emit structured heartbeats with slot availability, queue assignments, and execution status

- End-to-end tracking: Task state transitions are logged with agent identifiers and execution context

- System telemetry: Built-in metrics for task latency, retry counts, and agent uptime

This observability-first design removes the guesswork from production Airflow debugging, making the system far more transparent at scale.
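The exact heartbeat schema is not described here, so the following is only a guess at what a structured heartbeat carrying slot availability, queue assignment, and execution status might look like. Every field name in this `Heartbeat` dataclass is hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Heartbeat:
    """Hypothetical heartbeat payload; field names are illustrative only."""
    agent_id: str
    queue: str
    total_slots: int
    running_task_ids: list = field(default_factory=list)

    @property
    def free_slots(self) -> int:
        return self.total_slots - len(self.running_task_ids)


def to_json(heartbeat: Heartbeat) -> str:
    """Serialize the heartbeat, including the derived free-slot count."""
    payload = asdict(heartbeat)
    payload["free_slots"] = heartbeat.free_slots
    return json.dumps(payload, sort_keys=True)


hb = Heartbeat(
    agent_id="agent-a",
    queue="default",
    total_slots=17,
    running_task_ids=["extract_sales", "train_model"],
)
encoded = to_json(hb)
print(encoded)
```

Emitting heartbeats as structured records rather than free-form log lines is what lets a central service aggregate them into metrics such as available slots per queue.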

Remote Execution: Built for Airflow 3

The Astro Executor is the only executor that enables remote execution—a key capability for Airflow 3 that allows tasks to run in completely separate environments from the scheduler. This opens new possibilities for:

- Workload isolation: Run sensitive or resource-intensive tasks in dedicated environments

- Multi-cloud orchestration: Coordinate workflows across different cloud providers or regions

- Compliance requirements: Keep certain data processing in specific geographic or security boundaries

Only Astro customers get access to this purpose-built executor, designed specifically for Airflow 3’s architecture. Our Professional Services team can help with Remote Execution implementation strategies tailored to your specific use case.

Available Now on Astro

The Astro Executor is compatible with existing DAGs. Simply enable it on your Airflow 3 deployment through the Astro UI. See if the Astro Executor is the right choice for you.

Key compatibility details:

- Works with existing Worker Queues

- Supports all standard Airflow operators

- Available on Airflow 3+ deployments

Ready to eliminate executor-related incidents and unlock better performance? Enable the Astro Executor on your Airflow 3 deployment, or contact our team to discuss how it fits your specific use case.