Introducing Apache Airflow® 3.1


It seems like just yesterday that we were here announcing the release of Apache Airflow 3.0, the biggest release in Airflow’s history. Airflow 3 reimagined data orchestration for the AI era with greater ease of use, stronger security, and the ability to run tasks anywhere, at any time. It brought game-changing, highly requested, community-driven features like dag versioning, event-driven scheduling, remote execution, and high-performance backfills.

But Airflow 3 was just the beginning. One of the main reasons for the timing of the Airflow 3 release was to make big (and breaking) architectural changes that laid the groundwork for features that allow Airflow to support new and evolving use cases like MLOps and GenAI workflows. And here we are, already talking about the next release, Airflow 3.1, and how it builds on the foundation of Airflow 3.

Airflow 3.1 brings improved support for AI workflows, a new plugins interface, and other continued UI enhancements, alongside the many smaller improvements and features that come in every minor release. So without further ado, let’s get into what’s new in 3.1.

Better support for AI workflows

The rise of generative AI and related use cases was one of the big reasons for the recent major release of Airflow. In the 2024 Airflow survey, 25% of respondents said they use Airflow for MLOps or GenAI use cases, a substantial increase over the previous year. Airflow continues to evolve to better support these use cases, including with the introduction of two big new features in 3.1.

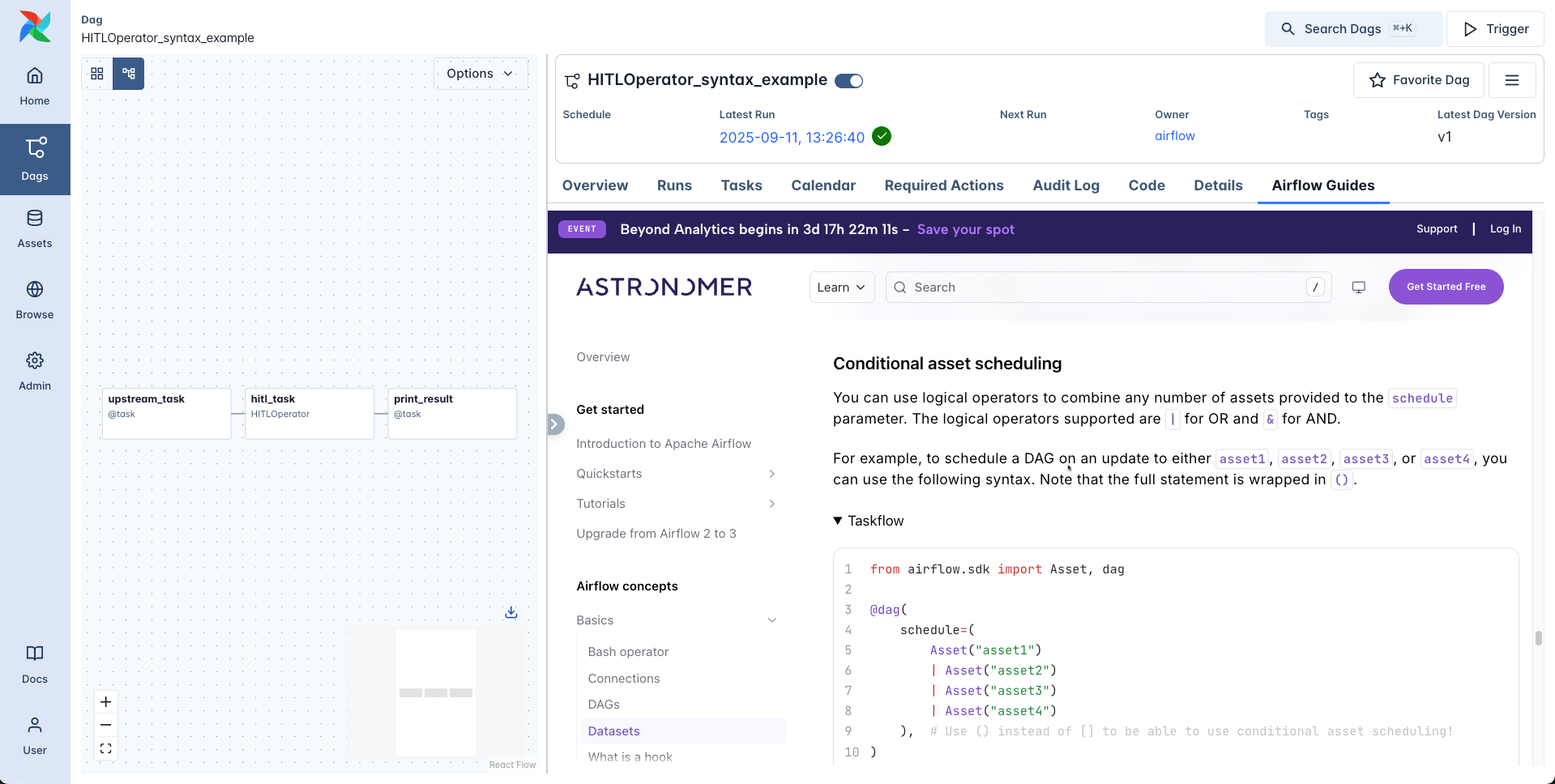

Human-in-the-loop (HITL) (AIP 90) is perhaps the most exciting feature in this release, providing the ability for users to interact with their dags while they are running. You can now do things like choose a branch in a workflow, approve or reject the output of a task, or provide textual input which can be used in subsequent tasks, all mid-dag run. This is particularly relevant for those using Airflow for GenAI applications, which often require human intervention in workflows like content moderation (to provide judgement on whether content is “appropriate”), model selection, or LLMOps where prompting might be required mid-workflow.

The Airflow standard provider has four HITL operators: HITLOperator (the base class), ApprovalOperator (for approve/reject workflows), HITLBranchOperator (for making branching decisions), and HITLEntryOperator (for entries into a form). The following code shows a simple example of using the HITLOperator to offer the user three options to choose between (options=["ACH Transfer", "Wire Transfer", "Corporate Check"]):

from datetime import timedelta

from airflow.providers.standard.operators.hitl import HITLOperator
from airflow.sdk import dag, task, chain, Param


@dag
def HITLOperator_syntax_example():
    @task
    def upstream_task():
        return "Review expense report and approve vendor payment method."

    _upstream_task = upstream_task()

    # Pauses the dag run until a user picks one of the options
    _hitl_task = HITLOperator(
        task_id="hitl_task",
        subject="Expense Approval Required",  # templatable
        body="{{ ti.xcom_pull(task_ids='upstream_task') }}",  # templatable
        options=["ACH Transfer", "Wire Transfer", "Corporate Check"],
        defaults=["ACH Transfer"],
        multiple=False,  # default: False
        params={
            "expense_amount": Param(
                10000,
                type="number",
            )
        },
        execution_timeout=timedelta(minutes=5),  # default: None
    )

    # Reads the user's choice and form input from the HITL task's output
    @task
    def print_result(hitl_output):
        print(f"Expense amount: ${hitl_output['params_input']['expense_amount']}")
        print(f"Payment method: {hitl_output['chosen_options']}")

    _print_result = print_result(_hitl_task.output)

    chain(_upstream_task, _hitl_task)


HITLOperator_syntax_example()
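If you want to check how a downstream task would handle the operator's output before wiring it into a dag, the logic can live in a plain function. The sketch below assumes the output is a dictionary with the `chosen_options` and `params_input` keys used in `print_result` above, and that `chosen_options` is a list of strings. `summarize_hitl_output` is a hypothetical helper, not part of Airflow.

```python
def summarize_hitl_output(hitl_output):
    """Turn a HITL result dict into a one-line summary.

    Assumes the dict shape used in the example above:
    {"chosen_options": [...], "params_input": {...}}.
    """
    chosen = hitl_output.get("chosen_options") or []
    params = hitl_output.get("params_input") or {}

    # Tolerate a single string in case only one option was returned as-is.
    if isinstance(chosen, str):
        chosen = [chosen]

    method = ", ".join(chosen) if chosen else "(no option chosen)"
    amount = params.get("expense_amount", "(no amount given)")
    return f"Payment method: {method}; expense amount: {amount}"


if __name__ == "__main__":
    sample = {
        "chosen_options": ["Wire Transfer"],
        "params_input": {"expense_amount": 10000},
    }
    print(summarize_hitl_output(sample))
```

Keeping this logic outside the `@task` function makes it easy to unit test without a running Airflow environment.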

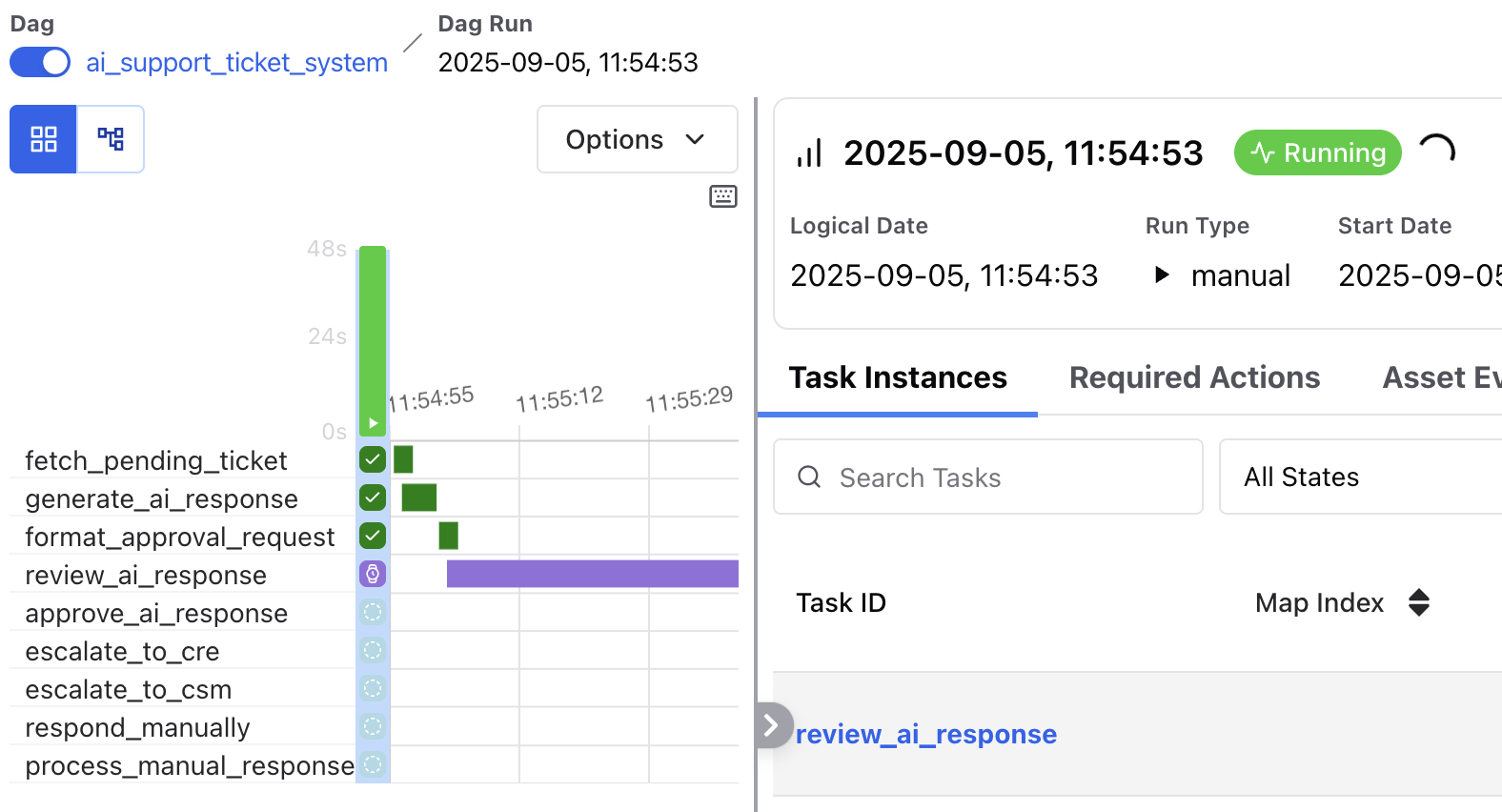

The gif below shows a more complex example where the user reviews an AI-generated response and either approves or rejects it before moving to the rest of the tasks. The code to create this dag is available here.

A gif showing a user approving the output of a task using a HITL operator

Another notable feature for AI workflows is support for synchronous dag execution. This is a way to use Airflow as the backend for services processing user requests that come from a frontend application like a website. Airflow 3 already removed the unique constraint on the logical date, allowing multiple users (or API requests) to trigger the same dag independently. Now, 3.1 expands on this feature by allowing an external service to wait for a dag run to complete and retrieve any results. This is useful for use cases like inference execution, ad-hoc requests for data analysis, or data submission by non-technical users.

The new endpoint is /dags/{dag_id}/dagRuns/{dag_run_id}/wait. Once the request is sent, the API will wait until the dag has completed before returning a response, including any XComs the dag produced. For more, see our guide.
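As a rough illustration, an external service could call the endpoint as sketched below. Only the path `/dags/{dag_id}/dagRuns/{dag_run_id}/wait` comes from this post. The base URL (including any API prefix), the bearer-token header, and the `interval` query parameter are assumptions to verify against the API reference for your Airflow version. `build_wait_url` and `wait_for_dag_run` are hypothetical helper names.

```python
import urllib.parse
import urllib.request


def build_wait_url(base_url, dag_id, dag_run_id, interval=5):
    """Build the URL for the dag run wait endpoint.

    `interval` (polling interval in seconds) is an assumed query parameter.
    """
    path = "/dags/{}/dagRuns/{}/wait".format(
        urllib.parse.quote(dag_id, safe=""),
        urllib.parse.quote(dag_run_id, safe=""),
    )
    query = urllib.parse.urlencode({"interval": interval})
    return f"{base_url.rstrip('/')}{path}?{query}"


def wait_for_dag_run(base_url, dag_id, dag_run_id, token, timeout=600.0):
    """Block until the API responds, then return the raw response body.

    The response format is not described in the post, so the body is
    returned as text for the caller to parse.
    """
    request = urllib.request.Request(
        build_wait_url(base_url, dag_id, dag_run_id),
        headers={"Authorization": f"Bearer {token}"},  # assumed auth scheme
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    # Placeholder values; printing the URL does not contact any server.
    print(build_wait_url("http://localhost:8080/api/v2", "my_dag", "my_run_id"))
```

Because the call blocks until the dag run finishes, the client-side `timeout` should be longer than the slowest run you expect.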

Note that synchronous dag execution is currently experimental. There is also potentially more to come with this feature, including different ways of defining a result task and more options for propagating failures early. As always, the Airflow project moves fast, so stay tuned for future releases.

New Plugin interface

Plugins are external features that can be added to customize your Airflow installation, offering a flexible way of building on top of existing Airflow components. For example, data teams have done things like build applications for non-technical users to submit requests to run SQL queries or get summary results of dag runs, or added buttons to dynamically link to files or logs in external data tools relevant to Airflow tasks.

Plugin functionality has always existed in Airflow, but in 3.1 the plugin interface was updated and extended for React views, bringing it up to speed with the huge UI rewrite that happened in 3.0. New capabilities include adding React apps, external views, dashboard integrations, and menu integrations.

For example, in this Airflow instance we embedded our Airflow guides into the Airflow UI:

An external site embedded in the Airflow UI via a plugin

Being able to add React apps gives you the ability to add more complex applications in the Airflow UI. Oftentimes this is used to provide additional functionality in Airflow for non-technical users, so they can interact with Airflow in different ways. Or, it can just be for fun…

The Astronomer DevRel team having way too much fun with plugins

For more on implementing plugins, see our guide.

Continued UI improvements

Nearly every Airflow release brings great UI updates that improve the experience of working with Airflow. The UI was completely rewritten in Airflow 3, making it more modern and React-based, which not only made it faster and more intuitive, but also made it easier for more people in the Airflow community to contribute. The pace of changes to the UI has increased, and there are some pretty exciting new features in 3.1. (Of course the plugins feature mentioned above is also a UI update, but here we’ll focus on changes to the out-of-the-box UI.)

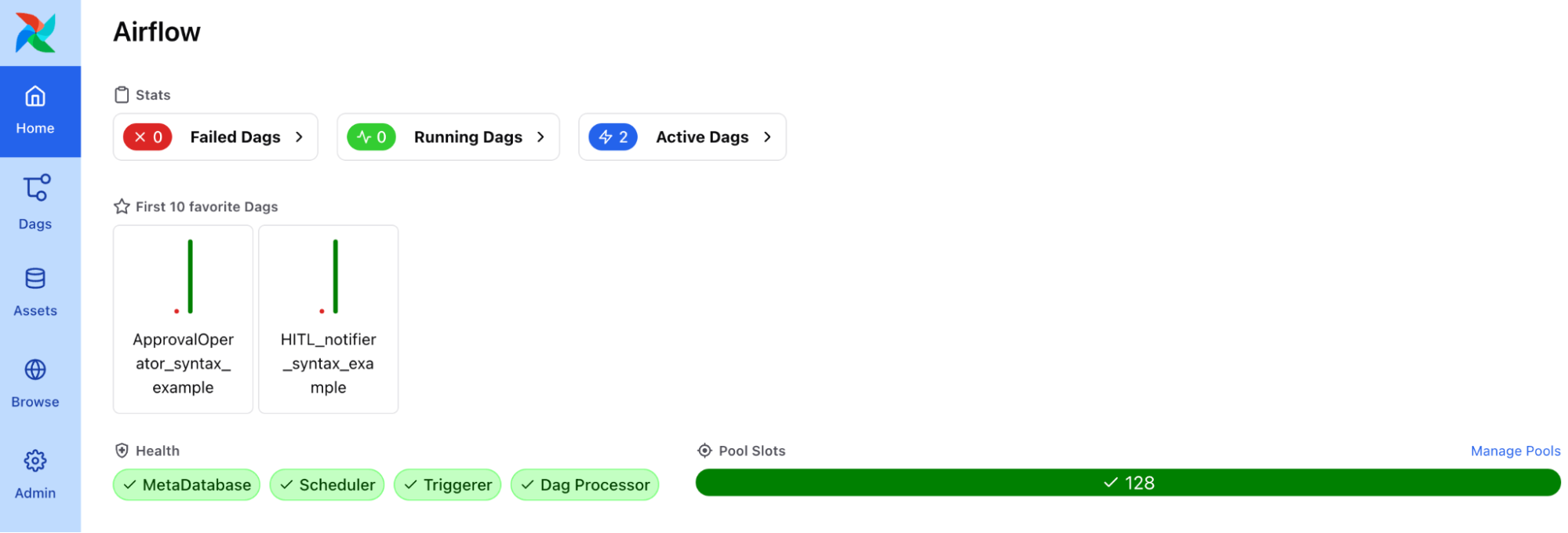

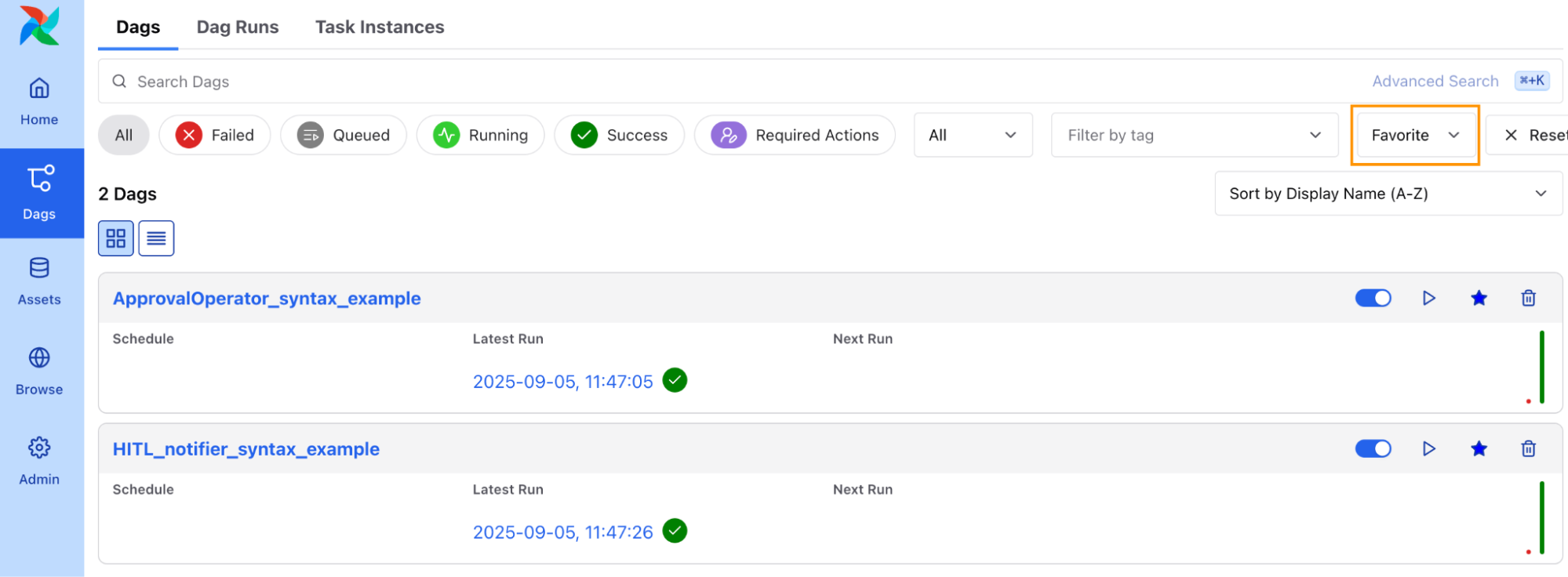

A “small” UI change that will have a big impact on most Airflow users is the ability to favorite dags so they are pinned on the Home and Dags pages. This has been a frequently requested feature from the community, especially from those working with hundreds or even thousands of dags in their Airflow environments. Being able to favorite only the dags you need to look at every day can save you a lot of time scrolling or searching.

Favorited Dags on the Airflow Home page

Once you have favorited dags, you can filter your Dags view by favorites, just as you would by any tag.

Dags view filtered to favorited Dags

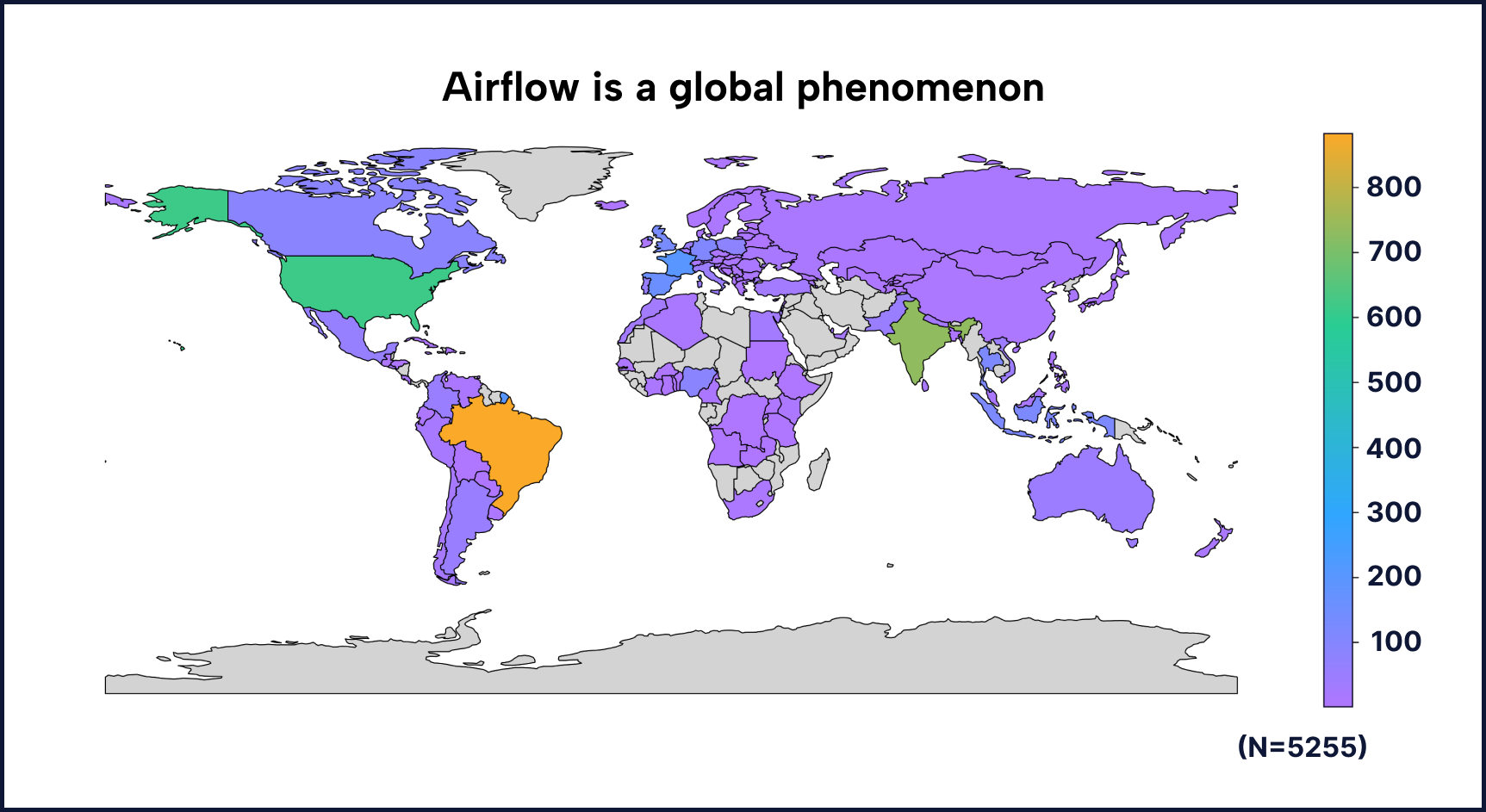

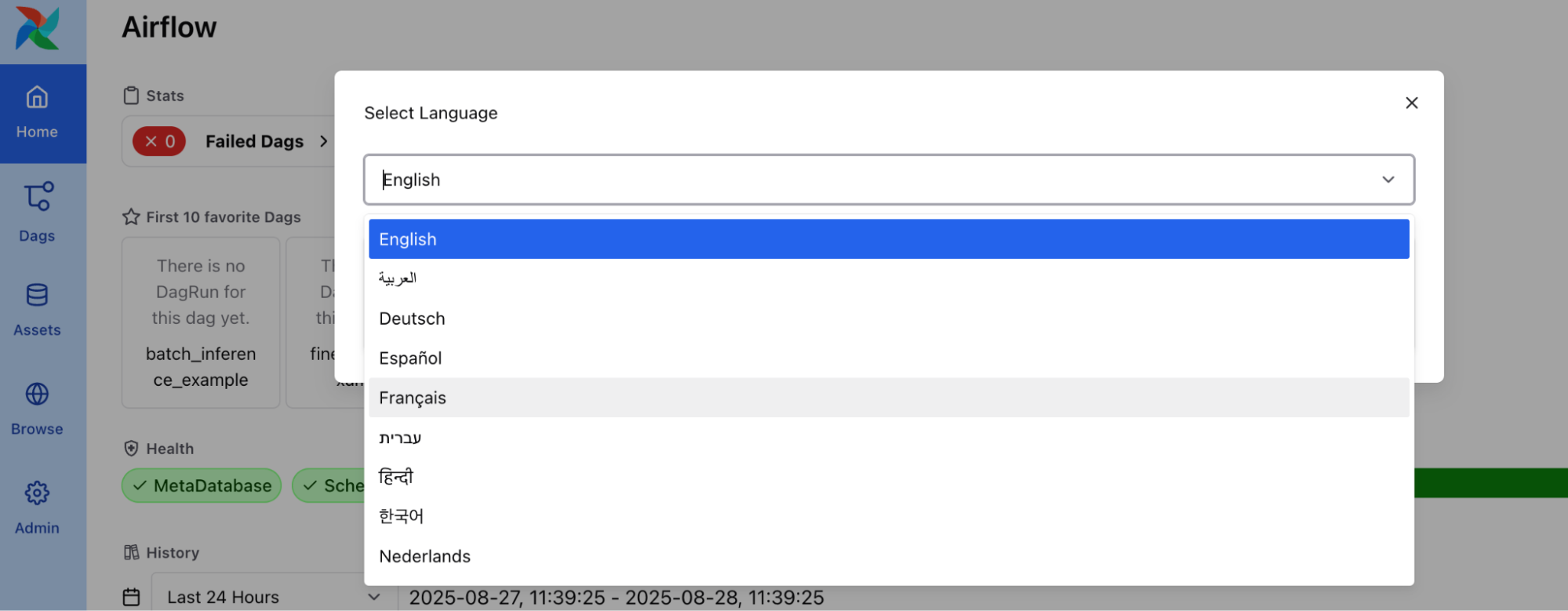

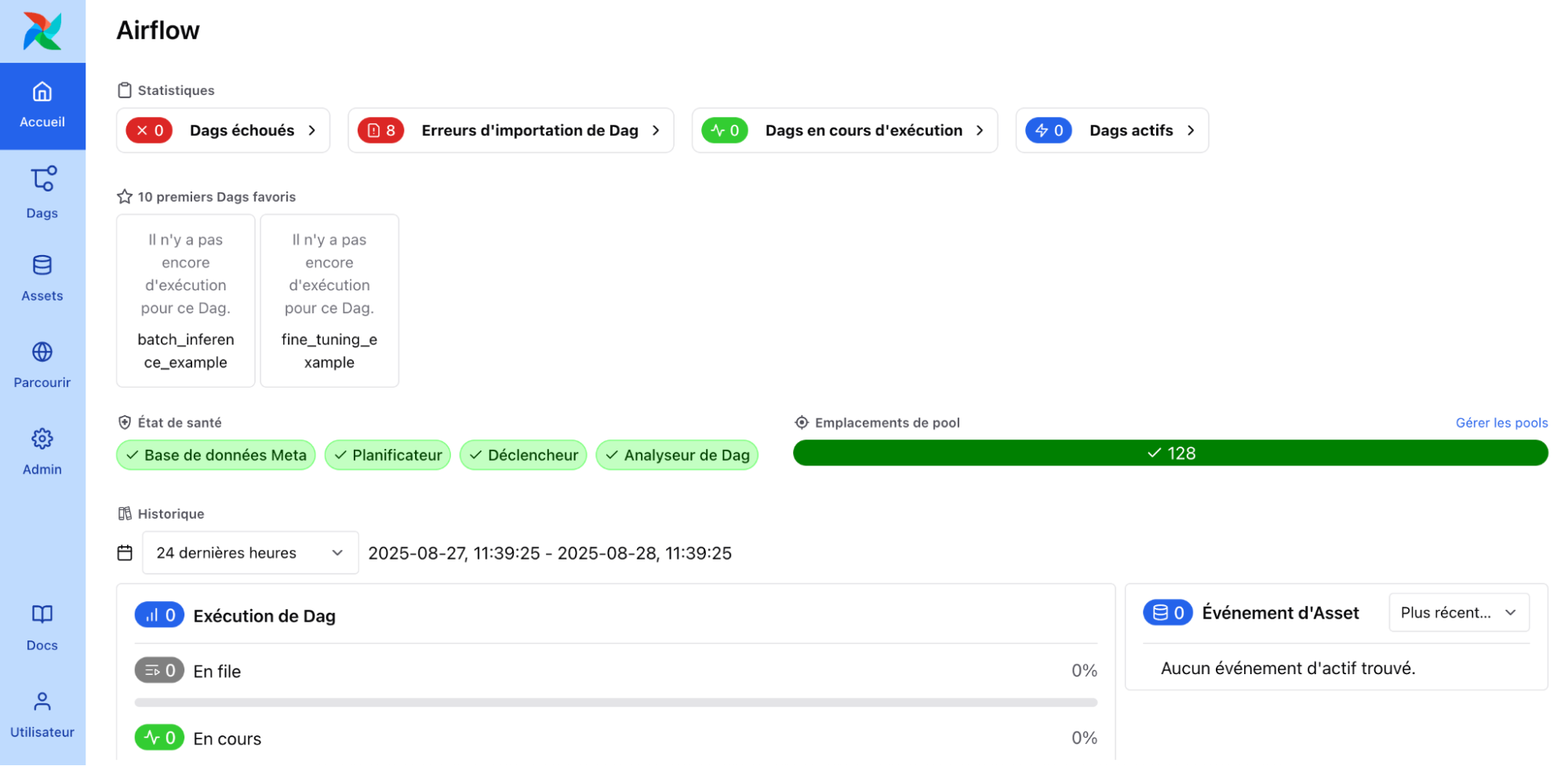

To further customize your UI, you can now also switch the default language. Airflow is used all over the world, by many users who do not work in English. Supporting other languages is a great community initiative to make Airflow more accessible.

The location of Airflow users from the 2024 Airflow survey

There are more than 10 new languages supported in 3.1, and more will certainly be added in the future.

The new UI language selection drop down

The Airflow UI, shown in French

Note that if you would like to see your native language supported, you can check out the internationalization (i18n) policy in the Airflow readme, and even volunteer to be an engaged translator!

In addition to these great updates, 3.1 brings back several views that existed in Airflow 2 but had not yet been added to the React-based UI in Airflow 3, like the Calendar view and Gantt chart.

The gantt for an in-progress Dag run

Other noteworthy features and updates

There are many other notable updates in 3.1 to be aware of, including:

- The Task SDK is now decoupled from Airflow core and can be upgraded independently.

- There is a new trigger rule: ALL_DONE_MIN_ONE_SUCCESS.

- Dag parsing duration is now shown in the UI, giving insight into potential performance bottlenecks.

- Python 3.13 is now supported, and 3.9 support has been removed.
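To make the new trigger rule from the list above more concrete, here is a minimal sketch of the condition its name suggests: every upstream task has finished, and at least one of them succeeded. This is an illustration in plain Python, not Airflow's scheduler code. The set of finished states and the function name are assumptions made for the example.

```python
# States treated as "done" for this illustration (an assumption,
# not a constant imported from Airflow).
FINISHED_STATES = {"success", "failed", "skipped", "upstream_failed"}


def all_done_min_one_success(upstream_states):
    """Return True when all upstream tasks are done and at least one succeeded."""
    states = list(upstream_states)
    all_done = all(state in FINISHED_STATES for state in states)
    one_success = any(state == "success" for state in states)
    return all_done and one_success


if __name__ == "__main__":
    print(all_done_min_one_success(["success", "failed", "skipped"]))
    print(all_done_min_one_success(["failed", "failed"]))
    print(all_done_min_one_success(["success", "running"]))
```

A rule like this fits cleanup or reporting tasks that should wait for every upstream task but are only worth running if something actually succeeded.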

Get started

There is far more in Airflow 3.1 than we can cover in a single blog post, and one of the best ways to learn more is to try it out for yourself! Whether you are already running Airflow 3 and are looking to move to 3.1 quickly, or you are still exploring moving from Airflow 2 to 3, we have resources available to help.

Astro is the best place to run Airflow

Whether you just want to play around with the new release or are ready to use it in production, the easiest way to get started is to spin up a new Deployment running 3.1 on Astro. If you aren’t an Astronomer customer, you can start a free trial with $300 in free credits.

Astronomer was a major contributor to the 3.1 release, driving implementation of some of the big features described here like human-in-the-loop, the new plugins interface, and more. We always offer day-zero support for new releases on Astro, because we want Airflow users to get the latest and greatest from the project right away. Astro gives you access to all of the new features and support from the team that is developing those features, without having to worry about managing your Airflow infrastructure.

Upgrading and other resources

If you are already on Airflow 3, upgrading to Airflow 3.1 is straightforward. There are no breaking changes, and if you are using Astro, you can easily do an in-place upgrade and roll back to your previous version if anything goes wrong.

However, we know that many teams are still using Airflow 2, and with major software updates, upgrading is a process. There are breaking changes you’ll need to be aware of, and in order to upgrade your existing Airflow Deployment to version 3, including on Astro, you will need to be on at least version 2.6.2. If you are considering upgrading now, you can go directly from version 2.6.2 or later to 3.1 (though we recommend upgrading to 2.11 first). You can check out our upgrading guides for more help.

Conclusion

We’re so excited by the continued momentum in the Airflow project, and are thankful to all of the Airflow contributors who helped with this release. As we’ve described in this post, 3.1 continues the massive progress brought in 3.0, and brings important, community-requested features that expand the use cases that Airflow can support and make the day-to-day lives of Airflow users easier.

If you’d like to learn more about the release, we hope you’ll join us for our Introduction to Airflow 3.1 webinar on October 22nd at 11am ET where we’ll give you a full overview of changes and a live demo of new features.