Manage Apache Airflow® Dag notifications

When you’re using a data orchestration tool, how do you know when something has gone wrong? Apache Airflow® users can check the Airflow UI to determine the status of their Dags, but this is an inefficient way of managing errors systematically, especially if certain failures need to be addressed promptly or by multiple team members. Fortunately, Airflow has several notification mechanisms that can be used to configure error notifications in a way that works for your organization.

In this guide, you’ll learn how to set up common Airflow notification mechanisms including email (SMTP) notifications, Airflow callbacks and notifiers.

Assumed knowledge

To get the most out of this guide, you should have an understanding of:

- Airflow Dags. See Introduction to Airflow Dags.

- Airflow operators. See Operators 101.

- Airflow decorators. See Introduction to the TaskFlow API and Airflow decorators.

- Airflow connections. See Manage connections in Apache Airflow.

Notification types

When setting up Airflow notifications, you must first decide between using Airflow’s built-in notification system, an external monitoring service, or a combination of both. The three types of notifications available when running Airflow on Astro are:

- Airflow notifications are available in open-source Airflow itself and are defined using callback parameters and/or configuration variables relating to email and SMTP.

- Astro alerts are a feature of Astro that allows you to configure alerts for many Dags and Deployments at once.

- Astro Observe is a product provided by Astronomer that includes the ability to define data products spanning multiple Dags and Deployments, and define Service Level Agreements (SLAs) on them.

The advantage of Airflow notifications is that you can define them directly in your Dag code. The downside is that you need Airflow to be running to send notifications, which means you might run into silent failures if there is an issue with your Airflow infrastructure. Airflow notifications also have some limitations, for example relating to defining SLAs and timeouts.

For the cases where Airflow notifications aren’t sufficient, Astro alerts and Astro Observe provide an additional level of observability. For guidance on when to choose Airflow notifications or Astro alerts, see When to use Airflow or Astro alerts for your pipelines on Astro.

Airflow notification concepts

When defining notifications in Airflow you should understand the following concepts:

- Email notifications: Airflow can send email alerts through an external SMTP server. There are several ways to configure email notifications.

- Callbacks: Dag- and task-level parameters that define code to execute when a Dag or task reaches a specific state. Callbacks can use plain Python functions or notifiers.

- Notifiers: A type of Airflow class, like operators or hooks, that you can use to standardize your notification code. Each notifier has a .notify() method for sending notifications.

- Listeners: An advanced Airflow feature that runs in the background and executes code when certain events occur anywhere in your Airflow environment.

Choose your Airflow notification method

It’s best practice to use pre-built solutions whenever possible. This approach makes your Dags more robust by reducing custom code and standardizing notifications across different Airflow environments.

If you want to deliver notifications to email, use the SmtpNotifier or EmailOperator. If you want to use another email service like SendGrid or Amazon SES, see the Airflow documentation for more information.

If you want to be notified via another system, check if a notifier class exists for your use case. See the Airflow documentation for an up-to-date list of available Notifiers and the Apprise wiki for a list of services the AppriseNotifier can connect to.

Only use custom callback functions when no notifier is available for your use case. Consider writing a custom notifier to standardize the code you use to send notifications.

If you want to execute code based on events happening anywhere in your Airflow environment, for example whenever any asset is updated, a Dag run fails, or a new import error is detected, you can use Airflow listeners.

Email (SMTP) notifications

Airflow email notifications can be set up in three different ways:

- (Recommended) You can provide the SmtpNotifier to any callback parameter to send emails when a Dag or task reaches a specific state.

- (Recommended) You can use the EmailOperator to create dedicated tasks in your Dags to send emails.

- (Legacy) You can configure email notifications using the email task parameter in combination with Airflow configuration variables in the SMTP section. This approach has limitations and will be removed in a future version. See (Legacy) Email notifications using configuration variables.

All email notifications require you to install the SMTP provider (apache-airflow-providers-smtp) by adding it to your requirements.txt file.

Using the SmtpNotifier

The SmtpNotifier is a pre-built notifier that can be provided to any callback parameter to send emails when a Dag or task reaches a specific state.

To connect the notifier to your SMTP server, you need to create an Airflow connection, for example by setting the following environment variable to create the smtp_default connection:
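A minimal sketch of building that environment variable, assuming Airflow's JSON connection format. The host, port, credentials, and sender address are placeholders, and smtp_connection_env is a hypothetical helper, not part of Airflow:

```python
import json


def smtp_connection_env(host, port, login, password, from_email):
    """Return an (env var name, value) pair for the smtp_default connection.

    Assumes Airflow's JSON connection format. Every value passed in by the
    caller below is a placeholder, not a real server or credential.
    """
    connection = {
        "conn_type": "smtp",
        "host": host,
        "port": port,
        "login": login,
        "password": password,
        # Assumption: the SMTP provider reads the sender address from extras.
        "extra": {"from_email": from_email},
    }
    # Airflow maps AIRFLOW_CONN_<CONN_ID in upper case> to a connection ID.
    return "AIRFLOW_CONN_SMTP_DEFAULT", json.dumps(connection)


name, value = smtp_connection_env(
    host="smtp.example.com",
    port=587,
    login="alerts@example.com",
    password="replace-me",
    from_email="alerts@example.com",
)
# Print a line that can be pasted into a .env file.
print(f"{name}='{value}'")
```

Keeping the password out of version control, for example in a secrets backend, is usually preferable to hard-coding it as done in this sketch.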

The main parameters to configure for the SmtpNotifier are:

- smtp_conn_id: The ID of the Airflow connection to your SMTP server. Default: smtp_default.

- to: The email address to send the email to. You can provide a single email address as a string or multiple in a list. Default: None. This parameter is required.

- cc: The email address to send the email to as a carbon copy. You can provide a single email address as a string or multiple in a list. Default: None.

- bcc: The email address to send the email to as a blind carbon copy. You can provide a single email address as a string or multiple in a list. Default: None.

- from_email: The email address to send the email from. Default: None.

- subject: The subject of the email. Default: None.

- html_content: The HTML content of the email. Default: None.

- files: The files to attach to the email as a list of file paths. Default: None.

- custom_headers: A dictionary of custom headers to add to the email. Default: None.

You provide the instantiated notifier class directly to any callback parameter to send emails when that callback is triggered. To add information about the Dag run to the email, use Jinja templating. All parameters listed above other than smtp_conn_id are templatable.

For example, to send an email notification when a task fails that includes information about the task, as well as the error message ({{ exception }}) and a link to the task’s log ({{ ti.log_url }}), you can use the SmtpNotifier as shown in the code example below.
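A minimal sketch of such a notifier, assuming the SMTP provider is installed and an smtp_default connection exists. The helper name failure_email_notifier, the email addresses, and the wording of the subject and body are illustrative assumptions:

```python
# Jinja templates: Airflow renders these against the task's context when the
# callback fires. The wording and layout are illustrative.
SUBJECT = "Task {{ ti.task_id }} failed in Dag {{ ti.dag_id }}"
HTML_CONTENT = """
<p>Task <b>{{ ti.task_id }}</b> in Dag <b>{{ ti.dag_id }}</b> failed.</p>
<p>Error: {{ exception }}</p>
<p><a href="{{ ti.log_url }}">Open the task log</a></p>
"""


def failure_email_notifier(to, from_email):
    """Build a SmtpNotifier intended for an on_failure_callback (hypothetical helper)."""
    # Imported here so this module still loads where the SMTP provider is not
    # installed; in a Dag file this import usually sits at the top.
    from airflow.providers.smtp.notifications.smtp import SmtpNotifier

    return SmtpNotifier(
        smtp_conn_id="smtp_default",
        to=to,
        from_email=from_email,
        subject=SUBJECT,
        html_content=HTML_CONTENT,
    )


# Usage inside a Dag file (addresses are placeholders):
#
# @task(
#     on_failure_callback=failure_email_notifier(
#         to="oncall@example.com", from_email="airflow@example.com"
#     )
# )
# def my_task():
#     ...
```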

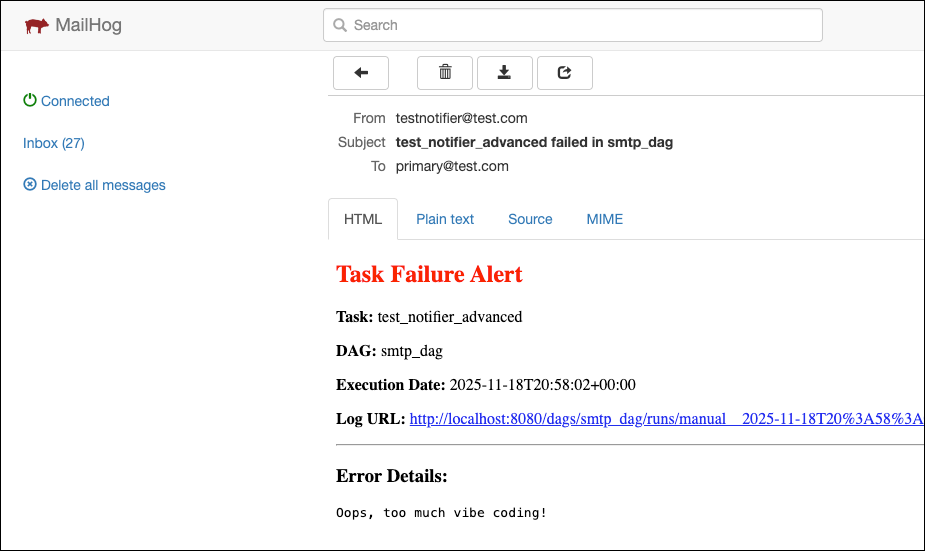

The resulting email looks like this:

If you’d like to test email formatting locally without connecting to a real SMTP server, you can use MailHog in a local Docker container to catch emails and view them in a web interface. Start the MailHog server using docker run -d -p 1025:1025 -p 8025:8025 mailhog/mailhog and use the following connection string:
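One way to express a MailHog connection is sketched below, assuming Airflow's JSON connection format and that the SMTP provider honors the disable_ssl and disable_tls extras. Treat the exact field values as assumptions to adapt to your setup:

```python
import json

# Assumption: MailHog accepts SMTP on port 1025 (the first port in the docker
# command) without authentication or encryption. When Airflow runs in its own
# container, "localhost" may need to be replaced with a hostname that reaches
# the MailHog container, such as host.docker.internal.
mailhog_connection = {
    "conn_type": "smtp",
    "host": "localhost",
    "port": 1025,
    "extra": {"disable_ssl": True, "disable_tls": True},
}

# Print a line that can be pasted into a .env file.
print(f"AIRFLOW_CONN_SMTP_DEFAULT='{json.dumps(mailhog_connection)}'")
```

Emails caught by MailHog can then be viewed in its web interface on port 8025.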

Using the EmailOperator

You can use the EmailOperator to create dedicated tasks in your Dags to send emails. As with the SmtpNotifier, you need to have the SMTP provider installed and create an Airflow connection to your SMTP server. The main parameters are analogous to the SmtpNotifier.

(Legacy) Email notifications using configuration variables

In older Airflow versions it was common to configure email notifications using a mix of configuration variables and task parameters. This approach is being deprecated in Airflow 3.0 and will be removed in a future version.

To configure email notifications using configuration variables, both the SMTP configuration variables and the email task parameter are needed. Note that you cannot use an AIRFLOW_CONN_ connection with the email configuration parameters in Airflow 3.

The SMTP configuration variables define the connection to your SMTP server.

In order for a task to be able to send an email if it fails or retries, you need to provide the email task parameter to the task to specify who to send the email to.

It is common to provide this in the default_args parameter of a Dag to apply it to all tasks in the Dag.

But you can also provide it at the task level to override the default.

If you want a task to send emails only when it fails, set the email_on_retry parameter to False. If you want it to send emails only when it retries, set the email_on_failure parameter to False.
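The legacy parameters described above might be combined as in the following sketch. The email addresses are placeholders, and the dictionary names are chosen for illustration only:

```python
# Dag-wide defaults, passed as default_args to the Dag: every task emails the
# data team when it fails, but not when it retries.
default_args = {
    "email": ["data-team@example.com"],  # placeholder address
    "email_on_failure": True,
    "email_on_retry": False,
}

# Arguments for a single task that overrides the default recipient and
# sends emails only when the task retries.
retry_only_task_args = {
    "email": "oncall@example.com",  # placeholder address
    "email_on_failure": False,
    "email_on_retry": True,
}

# Usage inside a Dag file:
#
# @dag(default_args=default_args)
# def my_dag():
#     @task(**retry_only_task_args)
#     def flaky_task():
#         ...
```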

Most of the AIRFLOW__EMAIL__ configuration variables are no longer supported in Airflow 3.0 for SMTP-based email notifications. Some of those parameters are still used by other email notification methods such as SendGrid or Amazon SES. See Email Configuration in the Airflow documentation for more information.

Airflow callbacks

In Airflow you can define actions to be taken based on different Dag or task states using *_callback parameters:

- on_success_callback: Invoked when a task or Dag succeeds.

- on_failure_callback: Invoked when a task or Dag fails.

- on_skipped_callback: Invoked when a task is skipped. This callback only exists at the task level, and is only invoked when an AirflowSkipException is raised, not when a task is skipped due to other reasons, like a trigger rule.

- on_execute_callback: Invoked right before a task begins executing. This callback only exists at the task level.

- on_retry_callback: Invoked when a task is retried. This callback only exists at the task level.

You can provide any Python callable or Airflow notifier to the *_callback parameters. To execute multiple functions, you can provide several callback items to the same callback parameter in a list.
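As a rough illustration of plain-Python callbacks, the sketch below defines two functions that accept the context dictionary and groups them in a list. The function names, the context keys read, and the hand-made context used to simulate a failure are all assumptions for demonstration:

```python
def describe_failure(context):
    """Build a short message from the context passed to a callback."""
    # .get() keeps the sketch tolerant of keys that may be absent for some
    # callback types; the key names used here are assumptions.
    run_id = context.get("run_id", "unknown run")
    error = context.get("exception", "no exception recorded")
    return f"Run {run_id} failed: {error}"


def print_failure(context):
    """Callback that writes the failure message to the log output."""
    print(describe_failure(context))


failures_seen = []


def record_failure(context):
    """Callback that keeps track of which runs failed."""
    failures_seen.append(context.get("run_id"))


# Several callbacks can share one parameter when passed as a list, for
# example on_failure_callback=on_failure_callbacks in a Dag or task.
on_failure_callbacks = [print_failure, record_failure]

# Simulate a failure with a hand-made context (for illustration only).
fake_context = {"run_id": "manual__2025-01-01", "exception": ValueError("bad input")}
for callback in on_failure_callbacks:
    callback(fake_context)
```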

Setting Dag-level callbacks

To define a notification at the Dag level, you can set the *_callback parameter in your Dag instantiation. Dag-level notifications will trigger callback functions based on the terminal state of the entire Dag run. The example below shows one function being executed when the Dag succeeds and two functions being executed when the Dag fails (one custom function and one SlackNotifier).

Airflow 3.1 added deadline alerts as an experimental feature. They are executed when a Dag run exceeds a user-defined time threshold and replace the removed SLA feature, which used the sla and sla_miss_callback parameters. See Deadline alerts in the Airflow documentation for more information.

Astronomer customers should use Astro alerts and Astro Observe to define timeliness and freshness SLAs.

Setting task-level callbacks

To apply a task-level callback to each task in your Dag, you can pass the callback function to the default_args parameter. Items listed in the dictionary provided to the default_args parameter will be set for each task in the Dag. While the example shows one callback function being assigned to each callback parameter, you can provide multiple callback functions and/or notifiers to the same callback parameter in a list as well.

For use cases where an individual task should use a specific callback, the task-level callback parameters can be defined in the task instantiation. Callbacks defined at the individual task level will override callbacks passed in via default_args.
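The precedence between default_args and task-level callbacks can be modelled with a plain dictionary merge, as in this sketch. The callback names, the task name, and the effective_callback helper are hypothetical, and the merge only illustrates the rule rather than reproducing Airflow's internals:

```python
from types import SimpleNamespace


def default_failure_alert(context):
    return f"[default] {context['ti'].task_id} failed"


def payments_failure_alert(context):
    return f"[payments on-call] {context['ti'].task_id} failed"


# Passed as default_args to the Dag: applies to every task in the Dag.
default_args = {"on_failure_callback": default_failure_alert}

# Passed to one task only, for example @task(**payments_task_args).
payments_task_args = {"on_failure_callback": payments_failure_alert}


def effective_callback(task_args):
    """Model of the precedence rule: a task-level value replaces the default."""
    return {**default_args, **task_args}["on_failure_callback"]


# Stand-in for the context passed to a callback; only ti.task_id is modelled.
context = {"ti": SimpleNamespace(task_id="charge_cards")}
print(effective_callback({})(context))                  # task without its own callback
print(effective_callback(payments_task_args)(context))  # task with an override
```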

Pre-built notifiers

Airflow notifiers are pre-built or custom classes and can be used to standardize and modularize the functions you use to send notifications. Notifiers can be passed to the relevant *_callback parameter of your Dag depending on what event you want to trigger the notification.

The Airflow documentation has a full list of the pre-built notifiers created for Airflow providers, and you can connect to many more services through the AppriseNotifier.

Notifiers are defined in provider packages or imported from the include folder and can be used across any of your Dags. This feature has the advantage that community members can define and share functionality previously used in callback functions as Airflow modules, creating pre-built callbacks to send notifications to other data tools.

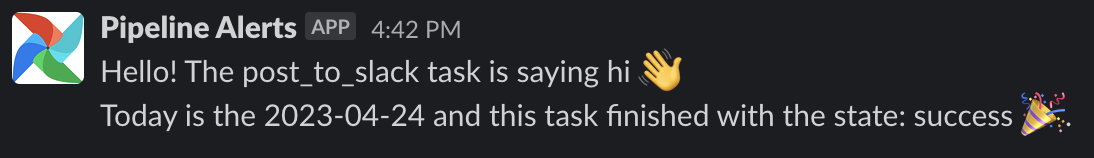

Example pre-built notifier: Slack

An example of a community-provided pre-built notifier is the SlackNotifier.

It can be imported from the Slack provider package and used with any *_callback function:

The Dag above has one task sending a notification to Slack. It uses a Slack Airflow connection with the connection ID slack_conn.

Custom notifiers

If no notifier exists for your use case, you can write your own. To create an Airflow notifier, inherit from the BaseNotifier class and define, in the .notify() method, the action to take when the notifier is called.

To use the custom notifier in a Dag, provide its instantiation to any callback parameter. For example:
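A minimal sketch of a custom notifier and its use. PrintNotifier is a hypothetical class that prints instead of calling a real service, and the import path for BaseNotifier is an assumption that can differ between Airflow versions. The fallback class exists only so the sketch runs without Airflow installed:

```python
from types import SimpleNamespace

try:
    # Assumption: this import path is valid for the installed Airflow version.
    from airflow.notifications.basenotifier import BaseNotifier
except ImportError:
    # Stand-in so the sketch runs without Airflow; it is not the real base
    # class and skips template rendering.
    class BaseNotifier:
        template_fields = ()

        def __call__(self, context):
            self.notify(context)


class PrintNotifier(BaseNotifier):
    """Hypothetical notifier that prints a message instead of calling a service."""

    # Fields listed here are treated as Jinja templates by the real base class.
    template_fields = ("message",)

    def __init__(self, message):
        super().__init__()
        self.message = message

    def build_text(self, context):
        return f"{self.message} (task: {context['ti'].task_id})"

    def notify(self, context):
        # Replace the print with a call to the service you want to alert.
        print(self.build_text(context))


# In a Dag file: @task(on_failure_callback=PrintNotifier(message="Task failed"))
# Calling notify() directly with a hand-made context, for illustration only:
notifier = PrintNotifier(message="Task failed")
notifier.notify({"ti": SimpleNamespace(task_id="demo_task")})
```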