Run tasks in an isolated environment in Apache Airflow

It is common for a task to need different dependencies than your Airflow environment. Your task might need a different Python version than core Airflow, or it might depend on packages that conflict with those of your other tasks. In these cases, running tasks in an isolated environment can help manage dependency conflicts and enable compatibility with your execution environments.

In Airflow, you have several options for running custom Python code in isolated environments. This guide teaches you how to choose the right isolated environment option for your use case, implement different virtual environment operators and decorators, and access Airflow context and variables in isolated environments.

Other ways to learn

There are multiple resources for learning about this topic. See also:

- Astronomer Academy: Airflow: The ExternalPythonOperator.

- Astronomer Academy: Airflow: The KubernetesPodOperator.

- Webinar: Running Airflow Tasks in Isolated Environments.

- Learn from code: Isolated environments example DAGs repository.

This guide covers options to isolate individual tasks in Airflow. If you want to run all of your Airflow tasks in dedicated Kubernetes pods, consider using the KubernetesExecutor. Astronomer customers can set their Deployments to use the KubernetesExecutor in the Astro UI. See Manage Airflow executors on Astro.

Assumed knowledge

To get the most out of this guide, you should have an understanding of:

- Airflow decorators. See Introduction to the TaskFlow API and Airflow decorators.

- Airflow operators. See Airflow operators.

- Python Virtual Environments. See Python Virtual Environments: A Primer.

- Kubernetes basics. See the Kubernetes Documentation.

When to use isolated environments

There are two situations when you might want to run a task in an isolated environment:

- Your task requires a different version of Python than your Airflow environment. Apache Airflow is compatible with and available in Python 3.8, 3.9, 3.10, 3.11, and 3.12. The Astro Runtime has images available for all supported Python versions, so you can run Airflow inside Docker in a reproducible environment. See Prerequisites for more information.

- Your task requires different versions of Python packages that conflict with the package versions installed in your Airflow environment. To know which Python packages are pinned to which versions within Airflow, you can retrieve the full list of constraints for each Airflow version by going to:

Airflow Best Practice

Make sure to pin all package versions, both in your core Airflow environment (requirements.txt) and in your isolated environments. This helps you avoid unexpected behavior due to package updates that might create version conflicts.
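As a rough illustration of this practice, the following sketch flags requirement lines that are not pinned with ==. The helper name unpinned_requirements and the sample package lines are hypothetical, and the pattern only covers simple name==version lines, not every form that pip accepts.

```python
import re

# Matches simple pinned lines such as "pandas==1.5.1" or "package[extra]==2.0".
PINNED = re.compile(r"^[A-Za-z0-9._-]+(\[[^\]]+\])?==[^=\s]+$")


def unpinned_requirements(lines):
    """Return the requirement lines that are not pinned to an exact version."""
    unpinned = []
    for raw in lines:
        # Drop comments and environment markers before checking the pin.
        line = raw.split("#", 1)[0].split(";", 1)[0].strip()
        if not line or line.startswith("-"):
            continue  # skip blank lines and pip options such as "-r other.txt"
        if not PINNED.match(line):
            unpinned.append(line)
    return unpinned


# Example: only the first line is pinned.
print(unpinned_requirements(["pandas==1.5.1", "numpy>=1.20", "requests"]))
```

A check like this could run against both requirements.txt and the requirements files of your isolated environments before you build your image.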

Limitations

When creating isolated environments in Airflow, you might not be able to use common Airflow features or connect to your Airflow environment in the same way you would in a regular Airflow task.

Common limitations include:

- You cannot pass all Airflow context variables to a virtual decorator, since Airflow does not support serializing var, ti, and task_instance objects. See Use Airflow context variables in isolated environments.

- You do not have access to your secrets backend from within the isolated environment. To access your secrets, consider passing them in through Jinja templating. See Use Airflow variables in isolated environments.

- Installing Airflow itself, or Airflow provider packages, in the environment provided to the @task.external_python decorator or the ExternalPythonOperator can lead to unexpected behavior. If you need to use Airflow or an Airflow provider module inside your virtual environment, Astronomer recommends using the @task.virtualenv decorator or the PythonVirtualenvOperator instead. See Use Airflow packages in isolated environments.

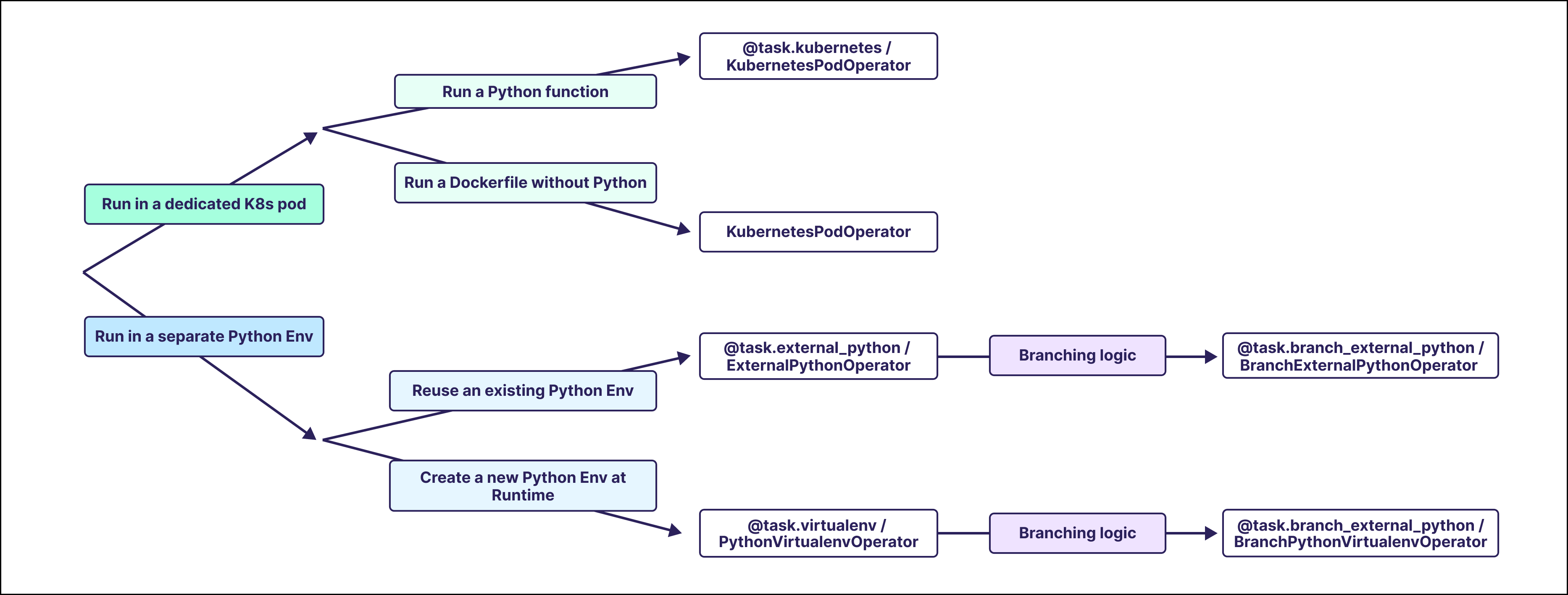

Choosing an isolated environment option

Airflow provides several options for running tasks in isolated environments.

To run tasks in a dedicated Kubernetes Pod you can use:

- @task.kubernetes decorator

- KubernetesPodOperator (KPO)

To run tasks in a Python virtual environment you can use:

- @task.external_python decorator / ExternalPythonOperator (EPO)

- @task.virtualenv decorator / PythonVirtualenvOperator (PVO)

- @task.branch_external_python decorator / BranchExternalPythonOperator (BEPO)

- @task.branch_virtualenv decorator / BranchPythonVirtualenvOperator (BPVO)

The virtual environment decorators have operator equivalents with the same functionality. Astronomer recommends using decorators where possible because they simplify the handling of XCom.

Which option you choose depends on your use case and the requirements of your task. The table below shows which decorators and operators are best for particular use cases.

Another consideration when choosing an operator is the infrastructure you have available. Operators that run tasks in Kubernetes pods allow you to have full control over the environment and resources used, but they require a Kubernetes cluster. Operators that run tasks in Python virtual environments are easier to set up, but do not provide the same level of control over the environment and resources used.

*Only required if you need to use a different Python version than your Airflow environment.

External Python operator

The External Python operator (@task.external_python decorator or ExternalPythonOperator) runs a Python function in an existing virtual Python environment, isolated from your Airflow environment. To use the @task.external_python decorator or the ExternalPythonOperator, you need to create a separate Python environment to reference. You can use any Python binary, regardless of how it was created.

The easiest way to create a Python environment when using the Astro CLI is with the Astronomer PYENV BuildKit. You enable the BuildKit by adding a comment on the first line of your Dockerfile. This comment lets you create virtual environments with the PYENV keyword.

To use the BuildKit, the Docker BuildKit Backend needs to be enabled. This is the default as of Docker Desktop version 23.0, but might need to be enabled manually in older versions of Docker.

You can add any Python packages to the virtual environment by putting them into a separate requirements file, named epo_requirements.txt in this example. Make sure to pin all package versions.

Installing Airflow itself and Airflow provider packages in isolated environments can lead to unexpected behavior and is not recommended. If you need to use Airflow or Airflow provider modules inside your virtual environment, Astronomer recommends using the @task.virtualenv decorator or the PythonVirtualenvOperator. See Use Airflow packages in isolated environments.

After restarting your Airflow environment, you can use this Python binary by referencing the environment variable ASTRO_PYENV_<my-pyenv-name>. If you choose an alternative method to create your Python binary, you need to set the python parameter of the decorator or operator to the location of your Python binary.

To run any Python function in your virtual environment, use the @task.external_python decorator on it and set the python parameter to the location of your Python binary.
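A minimal sketch of the decorator version, assuming Airflow 2.x import paths and a PYENV environment named epo_pyenv (a hypothetical name). The helper pyenv_python and the DAG and task names are illustrative, not part of Airflow.

```python
import os
from datetime import datetime


def pyenv_python(env_name: str) -> str:
    """Return the interpreter path exposed as ASTRO_PYENV_<env_name>."""
    return os.environ[f"ASTRO_PYENV_{env_name}"]


def build_dag():
    """Build the DAG; call this at module level in a DAG file."""
    # Airflow is imported here so the helper above can be used without it.
    from airflow.decorators import dag, task

    @dag(start_date=datetime(2024, 1, 1), schedule=None, catchup=False)
    def external_python_taskflow():
        @task.external_python(python=pyenv_python("epo_pyenv"))
        def report_python_version():
            # Imports live inside the function because it runs in the
            # external interpreter, not in the Airflow environment.
            import platform

            return platform.python_version()

        report_python_version()

    return external_python_taskflow()
```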

Traditional

To run any Python function in your virtual environment, define the python_callable parameter of the ExternalPythonOperator with your Python function, and set the python parameter to the location of your Python binary.
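The traditional syntax might look like the following sketch, again assuming Airflow 2.x import paths and a hypothetical PYENV environment named epo_pyenv. The callable is a plain function, so it can be exercised on its own.

```python
import os
from datetime import datetime


def report_python_version():
    """Runs in the external interpreter, so imports stay inside the function."""
    import platform

    return platform.python_version()


def build_dag():
    """Build the DAG; call this at module level in a DAG file."""
    # Airflow is imported here so the callable above can be used without it.
    from airflow import DAG
    from airflow.operators.python import ExternalPythonOperator

    with DAG(
        dag_id="external_python_traditional",
        start_date=datetime(2024, 1, 1),
        schedule=None,
        catchup=False,
    ) as dag:
        ExternalPythonOperator(
            task_id="report_python_version",
            python=os.environ["ASTRO_PYENV_epo_pyenv"],  # hypothetical name
            python_callable=report_python_version,
        )
    return dag
```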

TaskFlow XCom

You can pass information into and out of the @task.external_python decorated task the same way as you would when interacting with a @task decorated task. See Introduction to the TaskFlow API and Airflow decorators.
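As a sketch of this pattern, the isolated task below receives the return value of an upstream @task and hands its own return value to a downstream @task. It assumes Airflow 2.x import paths and a hypothetical PYENV environment named epo_pyenv. All function names are illustrative.

```python
import os
from datetime import datetime


def mean_of(numbers):
    """Runs in the external interpreter; the result is returned as XCom."""
    import statistics

    return statistics.mean(numbers)


def build_dag():
    """Build the DAG; call this at module level in a DAG file."""
    # Airflow is imported here so the callable above can be used without it.
    from airflow.decorators import dag, task

    @dag(start_date=datetime(2024, 1, 1), schedule=None, catchup=False)
    def external_python_xcom():
        @task
        def extract():
            return [1, 2, 3, 4]

        @task
        def report(mean):
            print(f"mean: {mean}")

        # Applying the decorator as a function is equivalent to writing
        # @task.external_python(...) above the function definition.
        isolated_mean = task.external_python(
            python=os.environ["ASTRO_PYENV_epo_pyenv"]
        )(mean_of)

        report(isolated_mean(extract()))

    return external_python_xcom()
```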

Traditional XCom

You can pass information into the ExternalPythonOperator by using a Jinja template retrieving XCom values from the Airflow context. To pass information out of the ExternalPythonOperator, return it from the python_callable.

Note that Jinja templates are rendered as strings unless you set render_template_as_native_obj=True in the DAG definition.
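The following sketch shows one way to wire this up, assuming Airflow 2.x import paths and a hypothetical PYENV environment named epo_pyenv. The upstream task, the task IDs, and the callable names are illustrative.

```python
import os
from datetime import datetime


def produce_number():
    """Regular task whose return value is pushed to XCom."""
    return 21


def double(value):
    """Runs in the external interpreter and returns its result as XCom."""
    # int() keeps this working if the template was rendered as a string.
    return int(value) * 2


def build_dag():
    """Build the DAG; call this at module level in a DAG file."""
    # Airflow is imported here so the callables above can be used without it.
    from airflow import DAG
    from airflow.operators.python import ExternalPythonOperator, PythonOperator

    with DAG(
        dag_id="external_python_traditional_xcom",
        start_date=datetime(2024, 1, 1),
        schedule=None,
        catchup=False,
        render_template_as_native_obj=True,  # render 21 as an int, not "21"
    ) as dag:
        upstream = PythonOperator(
            task_id="produce_number", python_callable=produce_number
        )
        isolated = ExternalPythonOperator(
            task_id="double",
            python=os.environ["ASTRO_PYENV_epo_pyenv"],  # hypothetical name
            python_callable=double,
            op_args=["{{ ti.xcom_pull(task_ids='produce_number') }}"],
        )
        upstream >> isolated
    return dag
```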

To get a list of all parameters of the @task.external_python decorator / ExternalPythonOperator, see the Astronomer Registry.

Virtualenv operator

The Virtualenv operator (@task.virtualenv or PythonVirtualenvOperator) creates a new virtual environment each time the task runs. If you only specify different package versions and use the same Python version as your Airflow environment, you do not need to create or specify a Python binary.

Installing Airflow itself and Airflow provider packages in isolated environments can lead to unexpected behavior and is generally not recommended. See Use Airflow packages in isolated environments.

Add the pinned versions of the packages to the requirements parameter of the @task.virtualenv decorator. The decorator creates a new virtual environment at runtime.
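A minimal sketch of the decorator version, assuming Airflow 2.x import paths. The pandas version is an arbitrary illustrative pin, and the DAG and task names are hypothetical.

```python
from datetime import datetime

# Pinned packages to install into the virtual environment at runtime.
REQUIREMENTS = ["pandas==1.5.1"]


def build_dag():
    """Build the DAG; call this at module level in a DAG file."""
    # Airflow is imported here so the constant above can be reused without it.
    from airflow.decorators import dag, task

    @dag(start_date=datetime(2024, 1, 1), schedule=None, catchup=False)
    def virtualenv_taskflow():
        @task.virtualenv(requirements=REQUIREMENTS)
        def pandas_version():
            # pandas is imported inside the function because it only exists
            # in the virtual environment that is created for this task.
            import pandas as pd

            return pd.__version__

        pandas_version()

    return virtualenv_taskflow()
```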

Traditional

Add the pinned versions of the packages you need to the requirements parameter of the PythonVirtualenvOperator. The operator creates a new virtual environment at runtime.

TaskFlow XCom

You can pass information into and out of the @task.virtualenv decorated task using the same process as you would when interacting with a @task decorated task. See Introduction to the TaskFlow API and Airflow decorators for more detailed information.

Traditional XCom

You can pass information into the PythonVirtualenvOperator by using a Jinja template to retrieve XCom values from the Airflow context. To pass information out of the PythonVirtualenvOperator, return it from the python_callable.

Note that Jinja templates are rendered as strings unless you set render_template_as_native_obj=True in the DAG definition.

Since the requirements parameter of the PythonVirtualenvOperator is templatable, you can use Jinja templating to pass information at runtime. For example, you can use a Jinja template to install a different version of pandas for each run of the task.
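One way to sketch this is to template the version from a DAG-level param. This assumes Airflow 2.x import paths. The param name pandas_version and its default value are hypothetical choices for illustration.

```python
from datetime import datetime

# The version is filled in by Jinja when the task runs.
REQUIREMENTS = ["pandas=={{ params.pandas_version }}"]


def pandas_version():
    """Runs in the virtual environment created for this task run."""
    import pandas as pd

    return pd.__version__


def build_dag():
    """Build the DAG; call this at module level in a DAG file."""
    # Airflow is imported here so the code above can be used without it.
    from airflow import DAG
    from airflow.operators.python import PythonVirtualenvOperator

    with DAG(
        dag_id="virtualenv_templated_requirements",
        start_date=datetime(2024, 1, 1),
        schedule=None,
        catchup=False,
        params={"pandas_version": "1.5.1"},  # can be overridden per run
    ) as dag:
        PythonVirtualenvOperator(
            task_id="pandas_version",
            python_callable=pandas_version,
            requirements=REQUIREMENTS,
        )
    return dag
```

Triggering the DAG with a different value for the param would then install a different pandas version for that run.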

If your task requires a different Python version than your Airflow environment, you need to install the Python version your task requires in your Airflow environment so the Virtualenv task can use it. Use the Astronomer PYENV BuildKit to install a different Python version in your Dockerfile.

To use the BuildKit, the Docker BuildKit Backend needs to be enabled. This is the default starting in Docker Desktop version 23.0, but might need to be enabled manually in older versions of Docker.

The Python version can be referenced directly using the python_version parameter of the decorator or operator.
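A sketch using the traditional operator, assuming Airflow 2.x import paths and that Python 3.10 has already been installed in the Airflow image. The version, the pinned package, and the names are illustrative.

```python
from datetime import datetime


def python_major_minor():
    """Runs in the virtual environment and reports its Python version."""
    import sys

    return f"{sys.version_info.major}.{sys.version_info.minor}"


def build_dag():
    """Build the DAG; call this at module level in a DAG file."""
    # Airflow is imported here so the callable above can be used without it.
    from airflow import DAG
    from airflow.operators.python import PythonVirtualenvOperator

    with DAG(
        dag_id="virtualenv_other_python",
        start_date=datetime(2024, 1, 1),
        schedule=None,
        catchup=False,
    ) as dag:
        PythonVirtualenvOperator(
            task_id="python_major_minor",
            python_callable=python_major_minor,
            python_version="3.10",  # must be available in the Airflow image
            requirements=["pandas==1.5.1"],
        )
    return dag
```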


To get a list of all parameters of the @task.virtualenv decorator or PythonVirtualenvOperator, see the Astronomer Registry.

Kubernetes pod operator

The Kubernetes pod operator (@task.kubernetes decorator or KubernetesPodOperator) runs an Airflow task in a dedicated Kubernetes pod. You can use the @task.kubernetes decorator to run any custom Python code in a separate Kubernetes pod on a Docker image with Python installed, while the KubernetesPodOperator runs any existing Docker image.

To use the @task.kubernetes decorator or the KubernetesPodOperator, you need to provide a Docker image and have access to a Kubernetes cluster. The following example shows how to use the modules to run a task in a separate Kubernetes pod in the same namespace and Kubernetes cluster as your Airflow environment. For more information on how to use the KubernetesPodOperator, see Use the KubernetesPodOperator and Run the KubernetesPodOperator on Astro.
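The sketch below shows both options side by side. It assumes Airflow 2.x with a recent cncf.kubernetes provider (the import path differs in older provider versions), and the image, pod names, and the namespace argument are hypothetical values that you would replace with your own.

```python
from datetime import datetime

IMAGE = "python:3.11-slim"  # hypothetical image with Python installed


def pod_settings(namespace: str) -> dict:
    """Keyword arguments shared by the decorator and the operator."""
    return {
        "image": IMAGE,
        "namespace": namespace,
        "in_cluster": True,  # use the cluster that Airflow itself runs in
        "get_logs": True,  # stream pod logs into the Airflow task log
    }


def build_dag(namespace: str):
    """Build the DAG; call this at module level in a DAG file."""
    # Airflow is imported here so the helper above can be used without it.
    from airflow.decorators import dag, task
    from airflow.providers.cncf.kubernetes.operators.pod import (
        KubernetesPodOperator,
    )

    @dag(start_date=datetime(2024, 1, 1), schedule=None, catchup=False)
    def kubernetes_isolation():
        @task.kubernetes(name="taskflow-pod", **pod_settings(namespace))
        def report_python_version():
            # This function body runs with the Python in the pod's image.
            import platform

            print(platform.python_version())

        KubernetesPodOperator(
            task_id="traditional_pod",
            name="traditional-pod",
            cmds=["python", "-c"],
            arguments=["print('hello from the pod')"],
            **pod_settings(namespace),
        )
        report_python_version()

    return kubernetes_isolation()
```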


Virtual branching operators

Virtual branching operators allow you to run conditional task logic in an isolated Python environment.

- @task.branch_external_python decorator or BranchExternalPythonOperator: Run conditional task logic in an existing virtual Python environment.

- @task.branch_virtualenv decorator or BranchPythonVirtualenvOperator: Run conditional task logic in a newly created virtual Python environment.

To run conditional task logic in an isolated environment, use the branching versions of the virtual environment decorators and operators. You can learn more about branching in Airflow in the Branching in Airflow guide.
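A sketch of a branching task in an existing environment, assuming an Airflow 2.x version that ships the BranchExternalPythonOperator and a hypothetical PYENV environment named epo_pyenv. The task IDs and the threshold are illustrative.

```python
import os
from datetime import datetime


def choose_branch(value):
    """Runs in the external interpreter and returns the task_id to follow."""
    return "handle_large" if int(value) > 5 else "handle_small"


def build_dag():
    """Build the DAG; call this at module level in a DAG file."""
    # Airflow is imported here so the callable above can be used without it.
    from airflow import DAG
    from airflow.operators.empty import EmptyOperator
    from airflow.operators.python import BranchExternalPythonOperator

    with DAG(
        dag_id="branch_external_python",
        start_date=datetime(2024, 1, 1),
        schedule=None,
        catchup=False,
    ) as dag:
        branch = BranchExternalPythonOperator(
            task_id="choose_branch",
            python=os.environ["ASTRO_PYENV_epo_pyenv"],  # hypothetical name
            python_callable=choose_branch,
            op_args=[7],
        )
        # Only the task whose task_id is returned runs; the other is skipped.
        branch >> [
            EmptyOperator(task_id="handle_large"),
            EmptyOperator(task_id="handle_small"),
        ]
    return dag
```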


Use Airflow context variables in isolated environments

Some variables from the Airflow context can be passed to isolated environments, for example the logical_date of the DAG run. Due to compatibility issues, other objects from the context such as ti cannot be passed to isolated environments. For more information, see the Airflow documentation.
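As a sketch, a callable can request context values by naming them as parameters. This example assumes Airflow 2.x import paths, a hypothetical PYENV environment named epo_pyenv, and that ds and run_id are among the context keys your Airflow version can serialize. Values such as logical_date may additionally require pendulum to be installed in the isolated environment.

```python
import os
from datetime import datetime


def describe_run(ds=None, run_id=None):
    """Parameters named after context keys are filled in when the task runs."""
    return f"run {run_id} for {ds}"


def build_dag():
    """Build the DAG; call this at module level in a DAG file."""
    # Airflow is imported here so the callable above can be used without it.
    from airflow import DAG
    from airflow.operators.python import ExternalPythonOperator

    with DAG(
        dag_id="external_python_context",
        start_date=datetime(2024, 1, 1),
        schedule=None,
        catchup=False,
    ) as dag:
        ExternalPythonOperator(
            task_id="describe_run",
            python=os.environ["ASTRO_PYENV_epo_pyenv"],  # hypothetical name
            python_callable=describe_run,
        )
    return dag
```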


Use Airflow variables in isolated environments

You can inject Airflow variables into isolated environments by using Jinja templating in the op_kwargs argument of the PythonVirtualenvOperator or ExternalPythonOperator. This strategy lets you pass secrets into your isolated environment, which are masked in the logs according to rules described in Hide sensitive information in Airflow variables.
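A minimal sketch with the PythonVirtualenvOperator, assuming Airflow 2.x import paths and an Airflow variable named my_secret (a hypothetical name). The pinned package is only there to illustrate the requirements parameter.

```python
from datetime import datetime


def use_secret(my_secret):
    """Receives the rendered variable value as a plain string argument."""
    # Return only non-sensitive information derived from the secret.
    return len(my_secret)


def build_dag():
    """Build the DAG; call this at module level in a DAG file."""
    # Airflow is imported here so the callable above can be used without it.
    from airflow import DAG
    from airflow.operators.python import PythonVirtualenvOperator

    with DAG(
        dag_id="virtualenv_airflow_variable",
        start_date=datetime(2024, 1, 1),
        schedule=None,
        catchup=False,
    ) as dag:
        PythonVirtualenvOperator(
            task_id="use_secret",
            python_callable=use_secret,
            requirements=["requests==2.31.0"],
            # Rendered by Airflow before the isolated environment starts.
            op_kwargs={"my_secret": "{{ var.value.my_secret }}"},
        )
    return dag
```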


Use Airflow packages in isolated environments

Using Airflow packages inside of isolated environments can lead to unexpected behavior and is not recommended.

If you need to use Airflow or an Airflow provider module inside your virtual environment, use the @task.virtualenv decorator or the PythonVirtualenvOperator instead of the @task.external_python decorator or the ExternalPythonOperator.

As of Airflow 2.8, you can cache the virtual environment for reuse by providing a venv_cache_path to the @task.virtualenv decorator or PythonVirtualenvOperator, to speed up subsequent runs of your task.
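A sketch of the caching option, assuming Airflow 2.8 or later with Airflow 2.x import paths. The cache directory and the pinned package are hypothetical, and the directory needs to be writable by the worker that runs the task.

```python
from datetime import datetime

# Settings passed to the decorator; the cache path is an illustrative choice.
VENV_SETTINGS = {
    "requirements": ["numpy==1.26.4"],
    "venv_cache_path": "/tmp/venv_cache",
}


def build_dag():
    """Build the DAG; call this at module level in a DAG file."""
    # Airflow is imported here so the settings above can be reused without it.
    from airflow.decorators import dag, task

    @dag(start_date=datetime(2024, 1, 1), schedule=None, catchup=False)
    def cached_virtualenv():
        @task.virtualenv(**VENV_SETTINGS)
        def numpy_version():
            # The environment is reused from the cache path on later runs
            # instead of being rebuilt each time.
            import numpy as np

            return np.__version__

        numpy_version()

    return cached_virtualenv()
```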