The Rise of Abstraction in Data Orchestration

9 min read |

From punch cards to AI

It’s raining outside. Ada Lovelace sits in a room at Ockham Park in Surrey, England. The room is bathed in candlelight as she writes Note G, a computer algorithm designed to calculate Bernoulli numbers for Charles Babbage’s Analytical Engine, a machine that would never be built in her lifetime. This work would later earn her recognition as the world’s first programmer.

Portrait of Ada Lovelace (c. 1840), watercolor, Alfred Edward Chalon, Public domain, via Wikimedia Commons

Decades later, programmers would physically encode similar algorithms by punching holes into cards, feeding them one by one into room-sized machines.

Fast-forward to today. My colleague Tamara opens Astro IDE, describes her pipeline requirements in natural language, and watches as a dag materializes on screen, curious to see if the AI has absorbed the latest Airflow 3.1 features and best practices. She’s doing work that Ada Lovelace couldn’t even dream of, yet the fundamental challenge remains the same: translating human intent into machine instructions. What’s changed is how we’ve built bridges between human thought and machine execution.

This is not only a story of how programming, data engineering and orchestration has evolved, but also a story of abstraction. Pipeline authoring has evolved from hand-crafted Python to natural language prompts, but no single level of abstraction is the winner. Instead, multiple approaches now coexist, each serving different teams, skills, and use cases.

This blog series explores those levels in Airflow: Python as the foundational orchestration level, DAG Factory for generating dags from YAML configurations, Blueprint for reusable pipeline templates, and Astro IDE offering an in-browser, AI-assisted approach to dag authoring. In this first part, we look at how abstraction reshapes orchestration, why it matters for technical managers, and how to position teams to thrive in a multi-level reality. It’s the overview and basic decision framework before we dive deeper.

Why multiple levels of pipeline authoring

“Mathematical science shows what is. It is the language of unseen relations between things. But to use and apply that language, we must be able to fully appreciate, to feel, to seize the unseen, the unconscious.”

— Ada Lovelace

Ada Lovelace understood that seeing the unseen, the hidden relationships within mathematical systems, is required to apply that language. The same is true for data orchestration. Depending on your role, goals, and skillset, you need different levels of visibility into what’s happening beneath the surface of your pipelines: the connections between tasks, the dependencies, the code behind your Airflow DAGs.

In my almost 15 years as a data engineer, I’ve learned one universal truth: everyone needs orchestration. The marketing team needs daily attribution reports. The CRM team needs personalized newsletter triggers. The platform team needs cross-cloud data transfers. The analytics team needs third-party data imports. Data touches every corner of the business, and the orchestration layer is the one layer that connects it all.

But here’s the challenge: these stakeholders come from different backgrounds. Some are Python experts. Others have never opened an IDE. Yet all share the same need: reliable data orchestration.

In my time as a data engineer, I worked on a rather small team. Especially considering that all departments were stakeholders for data orchestration, we quickly became the bottleneck. Many pipeline requests funneled through us. The solution wasn’t just to work harder, though that helped, but to enable others and accelerate time-to-value. Anything that made us faster or empowered stakeholders to build their own pipelines translated directly into business value.

This is why multiple abstraction levels are essential:

- A data engineer with deep understanding of Python and Airflow optimizes a complex dag for performance

- A data scientist with basic Python knowledge orchestrates pipelines in YAML using DAG Factory

- A data analyst deploys a common pipeline using a governed Blueprint template

- A data engineer uses Astro IDE to skip local setup and prototype an MVP in minutes through prompts

What excites me most is the timing. Airflow 3.1 just launched, DAG Factory got a major refresh, Astro IDE is available, and Blueprint is emerging as a new paradigm for governed Airflow templates. After years of limited options, the toolbox is suddenly full of powerful, purpose-built tools.

Blueprint is an open-source project by Astronomer that's currently in alpha and may change significantly. This post is a teaser for the idea behind Blueprint, so stay tuned for updates. We'll take a closer look in part three of this series.

For the first time, I feel a bit like Ada Lovelace must have felt, catching a glimpse of a future that’s finally within reach.

Let’s have a closer look at the different abstraction levels.

Four levels at a glance: Python, DAG Factory, Blueprint, Astro IDE

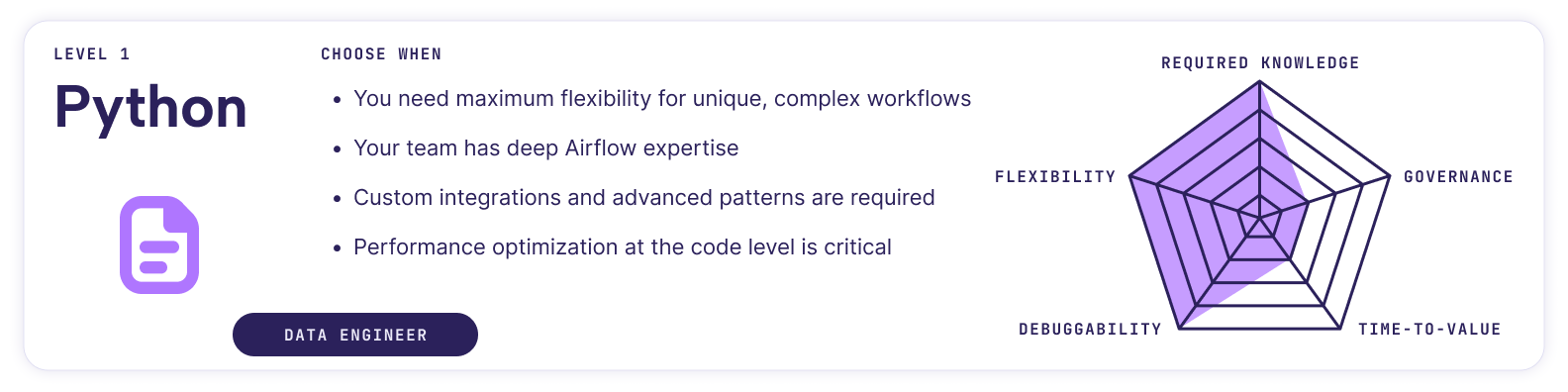

Level 1: Python

Python is the foundation of Airflow orchestration. Here, you compose tasks into pipelines using code, either through a task-oriented approach (defining dags with explicit tasks and dependencies) or an asset-oriented approach (putting data assets front and center).

The TaskFlow API lets you define tasks with decorators, while operator classes offer an alternative for composing workflows. This approach demands the highest Python and Airflow expertise but delivers maximum flexibility for complex business requirements.

Example:

@dag(schedule="@daily", start_date=datetime(2025, 9, 1))

def customer_daily_sync():

@task

def transform_data():

pass

transform_data()

customer_daily_sync()

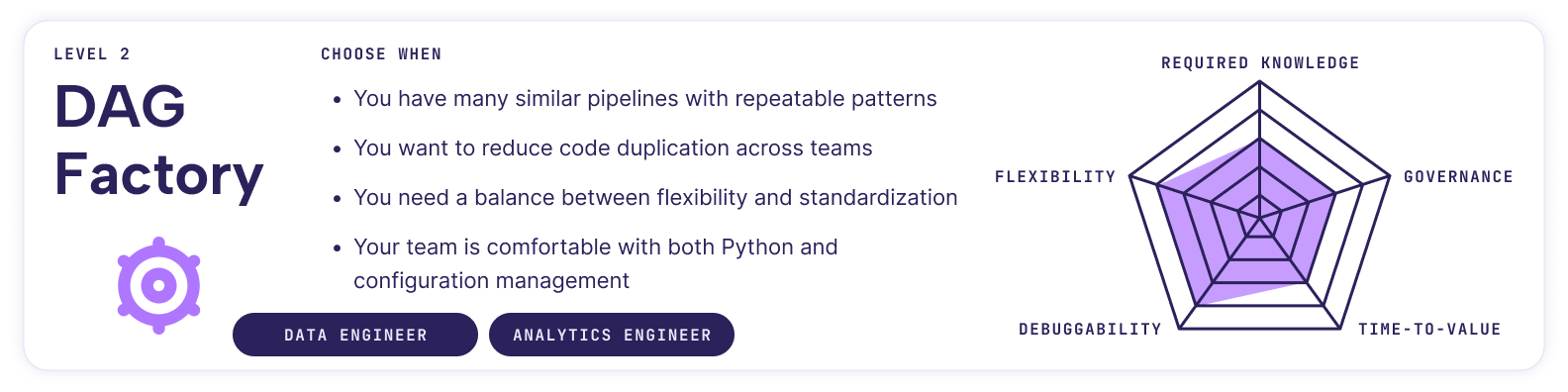

Level 2: DAG Factory

DAG Factory is an open-source tool from Astronomer that dynamically generates dags from YAML configuration files.

This declarative approach lets you describe what you want to achieve without specifying how. It still requires Airflow knowledge but separates pipeline logic from structure, standardizes repetitive patterns, and empowers a broader range of users.

Example:

customer-daily-sync:

default_args:

start_date: 2025-09-01

schedule: "@hourly"

tasks:

transform_data:

decorator: airflow.sdk.task

python_callable: include.tasks.basic_dag_tasks._transform_data

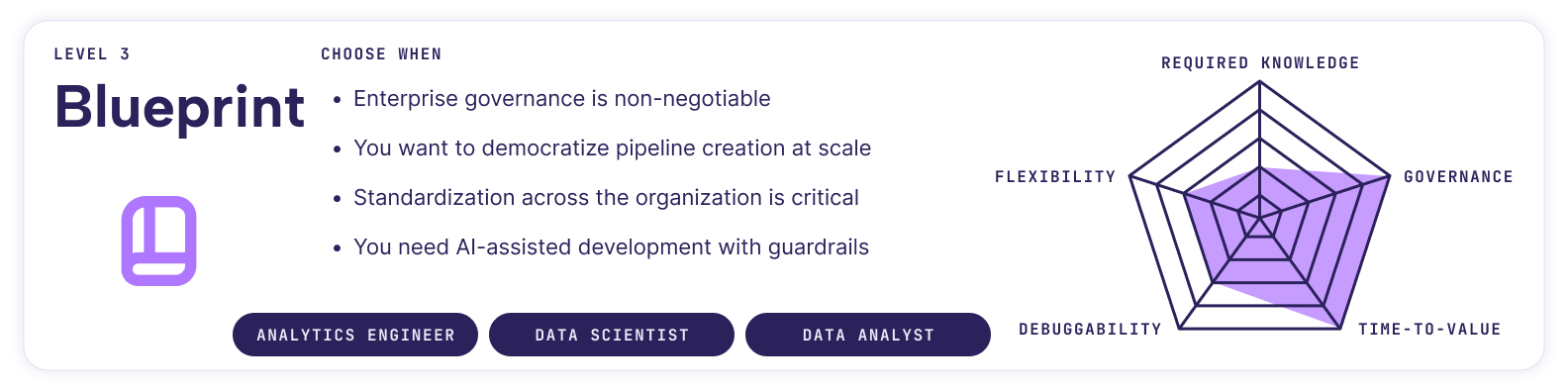

Level 3: Blueprint

Blueprint is an emerging open-source project from Astronomer that takes governed templates to the next level. In most data teams, the same dag patterns get rebuilt repeatedly with minor variations, leading to copy-paste chaos and maintenance nightmares.

Blueprint solves this by letting you:

- Create once, use everywhere: Write dag patterns as reusable templates

- Reduce errors: Validate configurations before deployment

- Build guardrails: Enforce organizational standards and best practices

- Empower non-engineers: Let others safely define dags without touching Python

Compared to DAG Factory, with Blueprint we can re-use pre-defined data pipeline templates using YAML or Python, and customize these by setting parameters, rather than using YAML to describe all the details of the pipeline.

Native Astro IDE integration is planned, enabling a much broader audience to orchestrate pipelines using templates without deep Python or Airflow knowledge required.

Example YAML API:

blueprint: daily_etl

job_id: customer-daily-sync

source_table: raw.customers

target_table: analytics.dim_customers

schedule: "@hourly"

retries: 4

Example Python API:

from etl_blueprints import DailyETL

dag = DailyETL.build(

job_id="customer-daily-sync",

source_table="raw.customers",

target_table="analytics.dim_customers",

schedule="@hourly",

retries=4

)

Let’s have a closer look at the different abstraction levels.

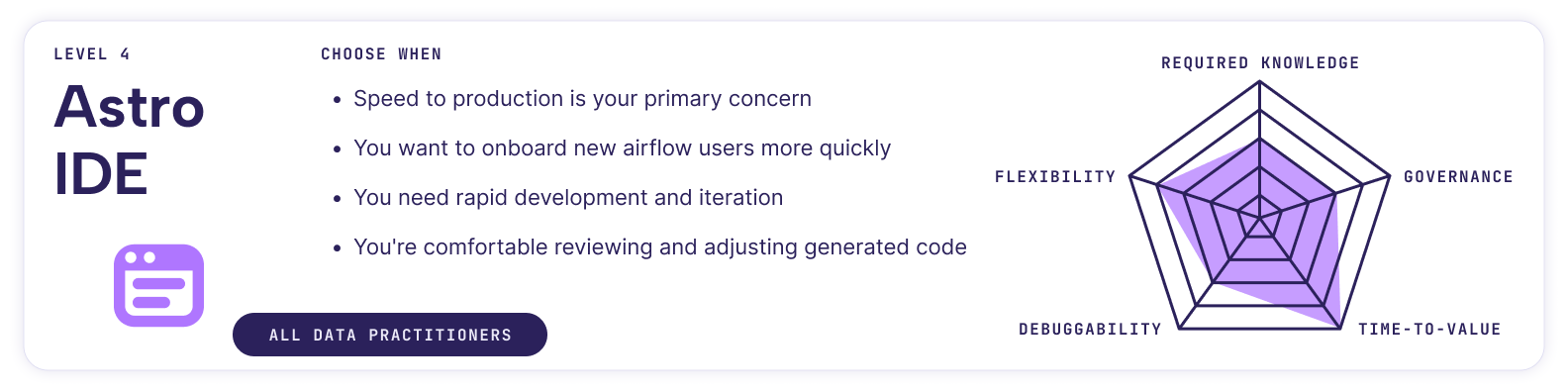

Level 4: Astro IDE

Astronomer’s in-browser, AI-assisted Astro IDE, an Astro-exclusive feature, helps engineers deliver pipelines faster, onboard new talent with ease, and ship with confidence. Describe your requirements in natural language, and watch as high-quality dags emerge autonomously within seconds.

The browser-based IDE and one-click deployment to Astro eliminate local setup overhead, dramatically accelerating time-to-value. Since the output is Python-based, you’ll still need Airflow knowledge to review and refine, but the heavy lifting is done for you.

Example prompt:

Write a DAG that moves CSV files from S3 to Snowflake

What this means for data teams

The rise of abstraction in data orchestration isn’t about replacing one approach with another, it’s about expanding your team’s toolkit. The most successful data organizations in 2025 and beyond will be those that:

- Embrace heterogeneous orchestration: Different projects warrant different abstraction levels. A critical, high-performance pipeline might demand Python-first development, while routine data transfers benefit from Blueprint.

- Invest in the right skills: As abstraction levels rise, the role of data engineers evolves from writing every line of code to architecting systems, reviewing generated code, and establishing governance frameworks.

- Prioritize governance early: Higher abstraction levels make it easier to enforce standards, as it enables teams to roll out standardized data pipeline templates, but only if those standards are defined upfront. Blueprint-style approaches excel here, but require organizational maturity.

- Measure what matters: Time-to-value, debuggability, and flexibility aren’t just theoretical concerns, but they directly impact team productivity and system reliability. Track these metrics as you adopt new abstraction levels.

Don’t limit yourself to a single level of abstraction. Get the most from your data by choosing the right tool for the job. With Astro and Airflow, you’ll have a toolbox that meets all your orchestration needs and delivers fast time-to-value.

The following table offers initial guidance on selecting a level based on project requirements and the people involved in implementing the orchestration. Keep these two aspects in mind, and the table will help you choose the right level.

| Abstraction levels overview | ||||

|---|---|---|---|---|

| Attribute | Python (Level 1) | DAG Factory (Level 2) | Blueprint (Level 3) | Astro IDE (Level 4) |

| Required Python knowledge | Very High | Medium | Low | Medium |

| Required Airflow knowledge | Very High | Medium | Low | Medium |

| Flexibility | Very High | High | Medium | High |

| Debuggability | Very High | High | Medium | Medium |

| Governance | Low | Medium | Very High | Medium |

| Time-to-value (low is good) | High | Medium | Very Low | Very Low |

This table is based on the perception of Astronomer employees with different technical backgrounds.

Summary and Outlook

Decision Cards

What comes next

The punch card era taught us that abstraction is inevitable. The AI era is teaching us that abstraction is additive, not substitutive. In data orchestration, we’re witnessing this evolution in real-time and the teams that recognize it will build the data platforms of tomorrow.

In the next part of this series, we will have a deeper look at how DAG Factory bridges code and config and enables teams for more declarative pipeline orchestration.

Get started free.

OR

By proceeding you agree to our Privacy Policy, our Website Terms and to receive emails from Astronomer.