Calculating Airflow’s Total Cost of Ownership


Almost every data team runs Apache Airflow®. Few know what it really costs.

The new Astro TCO Calculator turns that guesswork into clarity by modeling the engineering, infrastructure, and operations costs behind orchestration to help you decide when managed Airflow delivers better ROI.

Why TCO now?

Workflow orchestration has become critical in most organizations. Done well, it connects reliable, timely, and governed data to every agent, model, dashboard, report, and application.

The pressure on engineering teams to ship AI has amplified the need for orchestration: there is no way to deliver on an AI strategy without a data strategy, and no data strategy without unified orchestration. But the impact reaches far beyond AI. Without well-managed orchestration, reports arrive late, analytics drift, apps turn brittle, and costs keep climbing.

It’s these drivers that have made Apache Airflow the industry standard for orchestration and one of the most active open source projects on the planet. Airflow is shaped by over 3,000 contributors, is downloaded over 30 million times every month, and has over 1,900 pre-built integrations across the tools, frameworks, and platforms in the data stack. It’s trusted by the world’s leading enterprises and frontier AI labs as the indispensable control plane for data workflows.

Where enterprises can struggle is operating Airflow at scale, which demands deep engineering resources across development, ongoing maintenance, and custom management tooling. For many organizations, this work is essential but is either not strategic to their business or not a core competency, so it diverts critical resources from other objectives. That’s the platform gap filled by Astro, the fully managed Airflow service from Astronomer.

One challenge data teams face is how to justify investment in Astro to the business. That’s why we built the Astro TCO Calculator.

What you can do with the Astro TCO Calculator

With the calculator, you can instantly model the cost, efficiency, and productivity benefits of a fully managed orchestration platform.

Built on Astronomer’s proven Business Value Assessments (BVAs) adopted by leading enterprises, the TCO Calculator analyzes developer hours, incident response efforts, data-stack spend, and more to surface hard-dollar savings.

Whether you’re authoring new DAGs, optimizing Airflow and dbt pipelines, building for agentic AI, consolidating fragmented DataOps tooling, or modernizing legacy workflows, this calculator models realizable savings. To get you started fast, the calculator is pre-populated with smart defaults derived from our work with thousands of data teams. You can customize any of these inputs to match your specific environment and priorities.

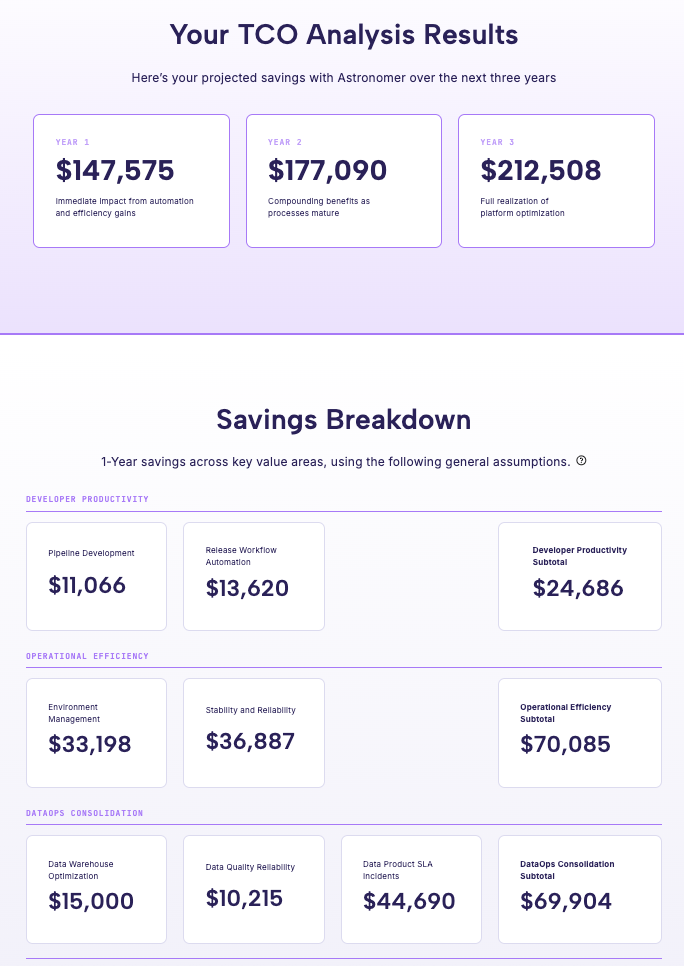

Figure 1: Calculated TCO savings can help you quickly model business cases across your data estate

All of the assumptions we make to create your TCO analysis are available in the calculator so you know exactly how we arrive at each number.
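The calculator's actual formulas and defaults are not reproduced in this post. As a rough illustration only, the general shape of a TCO comparison built from the inputs named above (developer hours, incident response effort, and data-stack spend) could be sketched as follows. The `OrchestrationCosts` class, its field names, and every number here are hypothetical placeholders, not Astronomer's model or results.

```python
from dataclasses import dataclass


@dataclass
class OrchestrationCosts:
    """Annual cost inputs for one way of running Airflow (hypothetical model)."""

    engineer_hours: float        # hours spent building and maintaining pipelines
    incident_hours: float        # hours spent on incident response
    hourly_rate: float           # fully loaded engineering cost per hour
    infrastructure_spend: float  # compute, storage, and networking
    tooling_spend: float         # licenses for observability, lineage, and so on
    platform_fee: float = 0.0    # managed-service subscription, if any

    def total(self) -> float:
        """Sum labor cost and direct spend into one annual figure."""
        labor = (self.engineer_hours + self.incident_hours) * self.hourly_rate
        return (labor + self.infrastructure_spend
                + self.tooling_spend + self.platform_fee)


def annual_savings(self_managed: OrchestrationCosts,
                   managed: OrchestrationCosts) -> float:
    """Positive result means the managed scenario is cheaper."""
    return self_managed.total() - managed.total()


# Placeholder inputs chosen only to exercise the arithmetic.
self_managed = OrchestrationCosts(
    engineer_hours=4000, incident_hours=500, hourly_rate=100,
    infrastructure_spend=200_000, tooling_spend=80_000,
)
managed = OrchestrationCosts(
    engineer_hours=2500, incident_hours=200, hourly_rate=100,
    infrastructure_spend=120_000, tooling_spend=20_000, platform_fee=150_000,
)

print(f"Self-managed: ${self_managed.total():,.0f}")
print(f"Managed:      ${managed.total():,.0f}")
print(f"Savings:      ${annual_savings(self_managed, managed):,.0f}")
```

Keeping every input as an explicit field mirrors the point made above: a TCO figure is only as credible as the assumptions behind it, so each one should be visible and adjustable.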

While the TCO Calculator gives you a fast, high-level estimate, a Business Value Assessment goes deeper. An Astronomer expert works with your team to capture a complete set of cost, productivity, and reliability metrics, producing a fully customized report that reflects your exact environment and business needs. For a full Business Value Assessment, please send our team a message using the Contact Astronomer form.

21 ways you save with Astro

The calculator assesses time, effort, and cost across the three lifecycle stages of orchestrated data workflows:

- Developer Productivity: Build data pipelines.
- Operational Efficiency: Run data pipelines.
- DataOps Consolidation: Observe data pipelines.

Digging into each stage, here’s how Astro cuts cost, complexity, and time:

Build Data Products

Astro streamlines development with powerful tooling that accelerates pipeline creation and testing:

| Feature | How it cuts cost and boosts efficiency |
|---|---|
| Astro IDE | Ship Airflow pipelines in minutes, not days. The only AI-native IDE for data engineers auto-generates, tests, and debugs DAGs using context from your code, configs, and observability metadata |
| Astro CLI | Streamline local pipeline development. Developers run and debug Airflow DAGs locally, previewing changes instantly without consuming shared infrastructure or waiting on deploys |
| Branch-Based Deploys | Accelerate testing and improve code quality with lower overhead. Spin up isolated dev environments to run, test, and deploy pipeline branches safely without affecting production or maintaining long-lived staging infrastructure |
| CI/CD | Cut manual release effort and risk. Automate reliable code deployment, enforce reviews, promote code across environments, and ensure testing, all integrated with your favorite tools |

Run Data Products

Astro’s infrastructure optimizations deliver maximum performance while minimizing operational overhead:

| Feature | How it cuts cost and boosts efficiency |
|---|---|
| Central Connection Management | Reduce setup time and eliminate licensing costs. A centralized secrets backend manages all credentials across deployments, avoiding duplication and cutting reliance on external vaults |
| Hypervisor Autoscaling Infrastructure | Maximize throughput while lowering compute costs. Purpose-built engine dynamically right-sizes resources and doubles Airflow task execution throughput, delivering more performance per core without manual tuning |
| Scale-to-Zero Deployments | Eliminate idle infrastructure costs. Non-production environments automatically hibernate when inactive, reducing spend to zero during off-hours |
| Dynamic Workers | Match capacity to demand with no overprovisioning. Worker nodes scale up and down automatically, ensuring you only pay for the compute you use |
| Task-Optimized Worker Queues | Run tasks efficiently and eliminate waste. Separate queues for heavy and light workloads prevent overprovisioning, optimizing resource usage and reducing cloud CPU and memory costs |
| Event-Driven Scheduling | Cut compute costs and enable real-time data workflows. Event-driven pipelines eliminate constant polling and rigid schedules, triggering instantly on data arrival to save resources |
| Remote Execution Agents | Reduce cloud spend with flexible workload placement. Run compute-heavy tasks or sensitive data pipelines on lower-cost infrastructure, whether on-prem, on GPUs, or in cheaper cloud regions. Minimize egress fees and avoid premium pricing |
| Multi-Zone High Availability | Prevent zone-level outages and missed SLAs. Replicated schedulers, DAG processors, and web servers run across multiple nodes and zones, keeping pipelines active even if one zone fails |
| In-Place Upgrades + Deployment Rollbacks | Reduce downtime and engineering overhead during upgrades. In-place Airflow upgrades and instant rollbacks let teams update environments without rebuilds and recover from failed deployments. Minimize outages, maintenance windows, and effort |
| dbt Orchestration with Cosmos | Eliminate redundant tooling and lower platform costs. Run dbt projects natively in Airflow with 10 lines of code. Gain full control over transformations in a single, scalable orchestration layer and save hundreds of thousands of dollars |
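To make the scale-to-zero row concrete, here is a minimal arithmetic sketch of how much of a week a non-production environment might sit idle. It assumes, as the table states, that a hibernated environment costs nothing. The function name, the hourly cost, and the 50 active hours per week are hypothetical values chosen for illustration, not Astro pricing.

```python
HOURS_PER_WEEK = 7 * 24  # 168


def weekly_hibernation_savings(hourly_cost: float,
                               active_hours_per_week: float) -> float:
    """Cost avoided per week if the environment is billed only while active.

    Assumes a hibernated environment incurs zero cost (hypothetical model).
    """
    if not 0 <= active_hours_per_week <= HOURS_PER_WEEK:
        raise ValueError("active hours must be between 0 and 168")
    idle_hours = HOURS_PER_WEEK - active_hours_per_week
    return idle_hours * hourly_cost


# Hypothetical dev environment: $2/hour, used 10 hours on each of 5 weekdays.
savings = weekly_hibernation_savings(hourly_cost=2.0, active_hours_per_week=50)
idle_share = (HOURS_PER_WEEK - 50) / HOURS_PER_WEEK

print(f"Weekly savings: ${savings:.2f}")
print(f"Share of the week spent idle: {idle_share:.0%}")
```

Under these placeholder inputs the environment is idle for roughly 70% of the week, which is why always-on non-production infrastructure tends to be an early target in a TCO review.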

Observe Data Products

Astro consolidates observability, monitoring, and cost management into a unified platform:

| Feature | How it cuts cost and boosts efficiency |
|---|---|
| DataOps Consolidation | Cut tooling costs and simplify your data stack. Replace fragmented observability, lineage, quality, and cost tools with a single platform. Eliminate redundant licenses, integration effort, and brittle custom glue code |
| Unified Asset Catalog + Popularity Scores | Optimize spend by focusing on high-value data. Table popularity scores highlight the most-used assets so teams can prioritize storage, caching, and quality efforts where they deliver the greatest return |
| SLA Monitoring & Proactive Alerting | Avoid SLA breaches and costly emergency reruns. Custom alerts flag freshness and latency risks early so teams can intervene before issues escalate and disrupt downstream data products |
| End-to-End Lineage & Pipeline Visibility | Accelerate root cause analysis and avoid rework. Task-level lineage shows exactly how data flows across pipelines, making it easy to trace issues, assess impact, and prevent unnecessary recomputations |
| AI Log Summaries | Resolve incidents faster. Instant, plain-language root-cause reports compress MTTR and the costly engineer hours that go with it |
| Data Quality Monitoring | Catch bad data early and prevent downstream failures. Inline checks for volume, schema, and completeness run with pipeline context, making it easy to trace issues and avoid expensive reprocessing |
| Data Warehouse Cost Management | Cut platform costs with pipeline-level attribution. Usage and spend are mapped directly to DAGs, tasks, and assets so teams can detect anomalies, uncover trends, and eliminate waste tied to specific workflows |

It's never been easier to put your Airflow estate to the test:

Get started free.
