Apache Airflow® GenAI Quickstart

Welcome to Astronomer’s Apache Airflow® GenAI Quickstart! 🚀

You will set up and run a fully functional Airflow project for a TaaS (Trees-as-a-Service) business that provides personalized, hyperlocal recommendations for which trees to plant in an area. This quickstart focuses on a GenAI DAG that uses the Airflow AI SDK to create a personalized description of your future garden 🌲🌳🌴!

Other ways to learn

You can watch the recording of our recent Orchestrating LLM workflows with the Airflow AI SDK webinar to learn more about all decorators in the Airflow AI SDK.

For a hands-on version of this project, check out the Airflow quickstart workshop containing exercises to practice how to use key Airflow features.

Time to complete

This quickstart takes approximately 15 minutes to complete.

Assumed knowledge

To get the most out of this quickstart, make sure you have an understanding of:

- Basic Airflow concepts. See Introduction to Apache Airflow.

- Basic Python. See the Python Documentation.

Prerequisites

- Homebrew installed on your local machine.

- An integrated development environment (IDE) for Python development. This quickstart uses Cursor, which is very similar to Visual Studio Code.

- An OpenAI API Key.

- (Optional) A local installation of Python 3 to improve your Python developer experience.

Step 1: Install the Astro CLI

The free Astro CLI is the easiest way to run Airflow locally in a containerized environment. Follow the instructions in this step to install the Astro CLI on a Mac using Homebrew. For other installation options and operating systems, see Install the Astro CLI.

- Run `brew install astro` in your terminal to install the Astro CLI.

- Verify the installation and check your Astro CLI version by running `astro version`. You need at least version 1.34.0 to run the quickstart.

- (Optional) Upgrade the Astro CLI to the latest version by running `brew upgrade astro`.
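If you prefer to script the version check, the following minimal Python sketch compares the installed Astro CLI version against the 1.34.0 minimum. It assumes that the output of `astro version` contains a version number in `X.Y.Z` form (for example `1.34.0`) and simply takes the first such number it finds; the helper names `parse_version` and `meets_minimum` are hypothetical and not part of the Astro CLI.

```python
import re
import shutil
import subprocess

MINIMUM_VERSION = (1, 34, 0)  # minimum Astro CLI version required by this quickstart


def parse_version(text: str) -> tuple:
    """Return the first X.Y.Z version number found in `text` as a tuple of ints."""
    # Assumption: the version appears as digits separated by dots, e.g. "1.34.0".
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.groups())


def meets_minimum(version: tuple, minimum: tuple = MINIMUM_VERSION) -> bool:
    # Tuples compare element by element, so (1, 35, 2) >= (1, 34, 0) is True.
    return version >= minimum


if __name__ == "__main__":
    if shutil.which("astro") is None:
        print("Astro CLI not found on PATH; install it first.")
    else:
        result = subprocess.run(["astro", "version"], capture_output=True, text=True)
        installed = parse_version(result.stdout)
        print("Installed:", ".".join(map(str, installed)))
        print("OK" if meets_minimum(installed) else "Please upgrade the Astro CLI.")
```

Comparing integer tuples rather than raw strings matters here: as strings, `"1.9.0" > "1.34.0"` is true, even though 1.9.0 is the older version.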

Note

If you can’t install the Astro CLI locally, skip to Run the quickstart without the Astro CLI to deploy and run the project with a free trial of Astro.

Step 2: Clone and open the project

- Clone the quickstart code from its branch on GitHub. Cloning creates a folder called `devrel-public-workshops` on your computer.

- Open the project folder in your IDE of choice.

Tip

If you need a new Airflow project in the future, you can create one in any empty directory by running `astro dev init`.

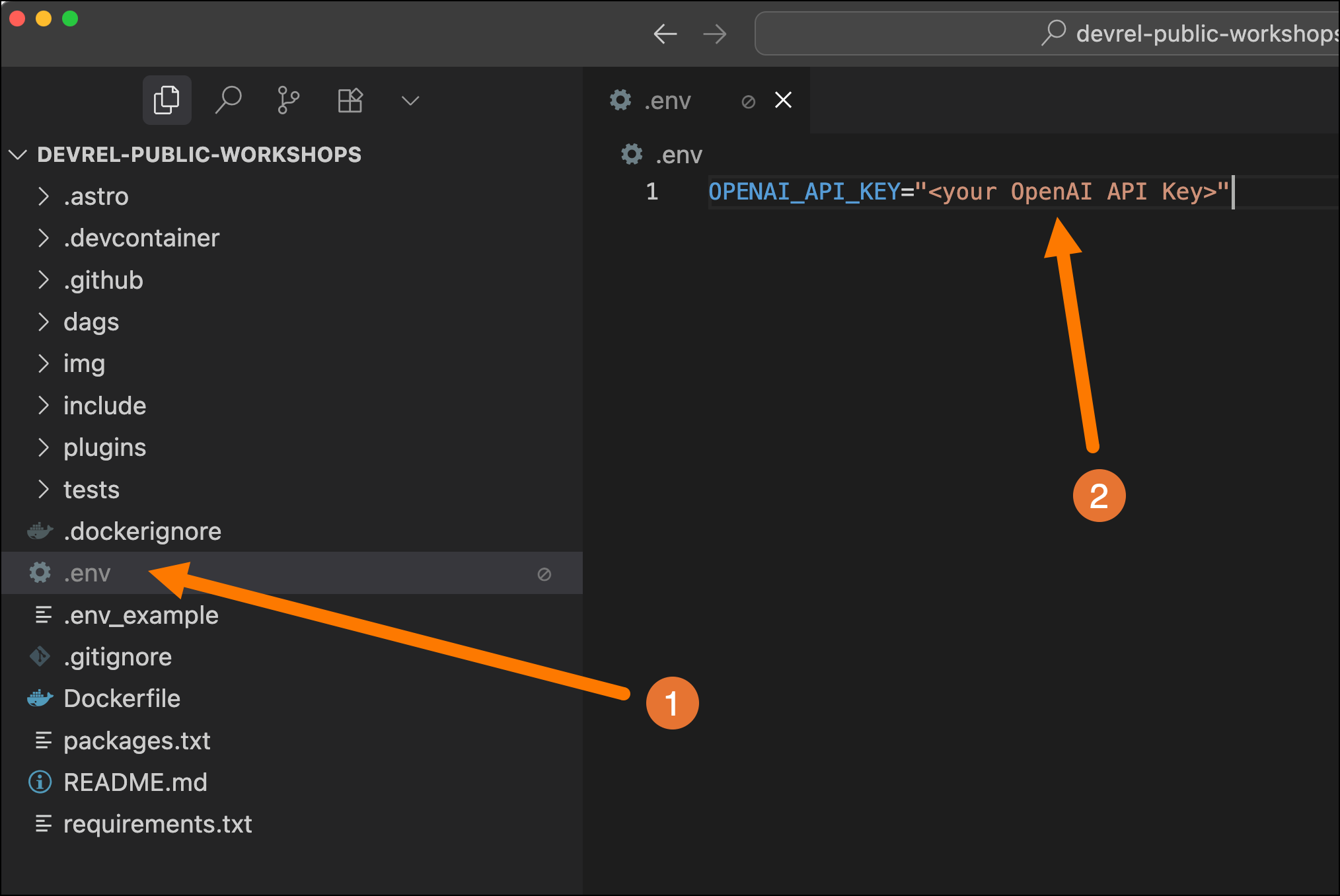

Step 3: Add your OpenAI API Key

To send requests to a large language model (LLM) through the Airflow AI SDK, you need an API key that is available to the Airflow AI SDK as an environment variable. This quickstart uses OpenAI; you can learn about other model providers that are compatible with the Airflow AI SDK here.

- In the root of your repository, create a new file called `.env` (1). This file is ignored by git and is a good place to define your (secret) environment variables.

- In the `.env` file, define a variable called `OPENAI_API_KEY` and set it to your OpenAI API key (2), for example `OPENAI_API_KEY=<your-openai-api-key>`.

- To use the AI SDK, you also need to install the Airflow AI SDK Python package, with the extra for your model provider, by adding it to the `requirements.txt` file. This has already been done for you in the quickstart repository.
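A `.env` file is a plain list of `KEY=value` lines, one variable per line. The minimal Python sketch below is a hypothetical, simplified parser that only illustrates this format: it skips blank lines and `#` comments and strips one pair of surrounding quotes. The Astro CLI reads `.env` for you when it starts your local environment, so this code is not part of the project, and the key value shown is a placeholder.

```python
def parse_env_file(text: str) -> dict:
    """Parse KEY=value lines (the .env format) into a dict; simplified sketch."""
    variables = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comment lines.
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a KEY=value line, ignore it
        value = value.strip()
        # Remove one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        variables[key.strip()] = value
    return variables


# Placeholder content; never commit a real API key.
example_env = """
# Local secrets; this file is ignored by git
OPENAI_API_KEY=sk-your-key-here
"""

settings = parse_env_file(example_env)
print(list(settings))
```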

Note

Whenever you make changes to your `.env` or `requirements.txt` file, you need to restart your Airflow environment using `astro dev restart` for the changes to take effect.

Step 4: Start the project

The code you cloned from GitHub already contains a fully functional Airflow project. Let’s start it!

- Run `astro dev start` in the root of the cloned folder to start the quickstart.

Info

If port 8080 or 5432 is in use on your machine, Airflow won’t be able to start. To run Airflow on alternative ports, use the `astro config set` command to assign different ports before you start the project.

-

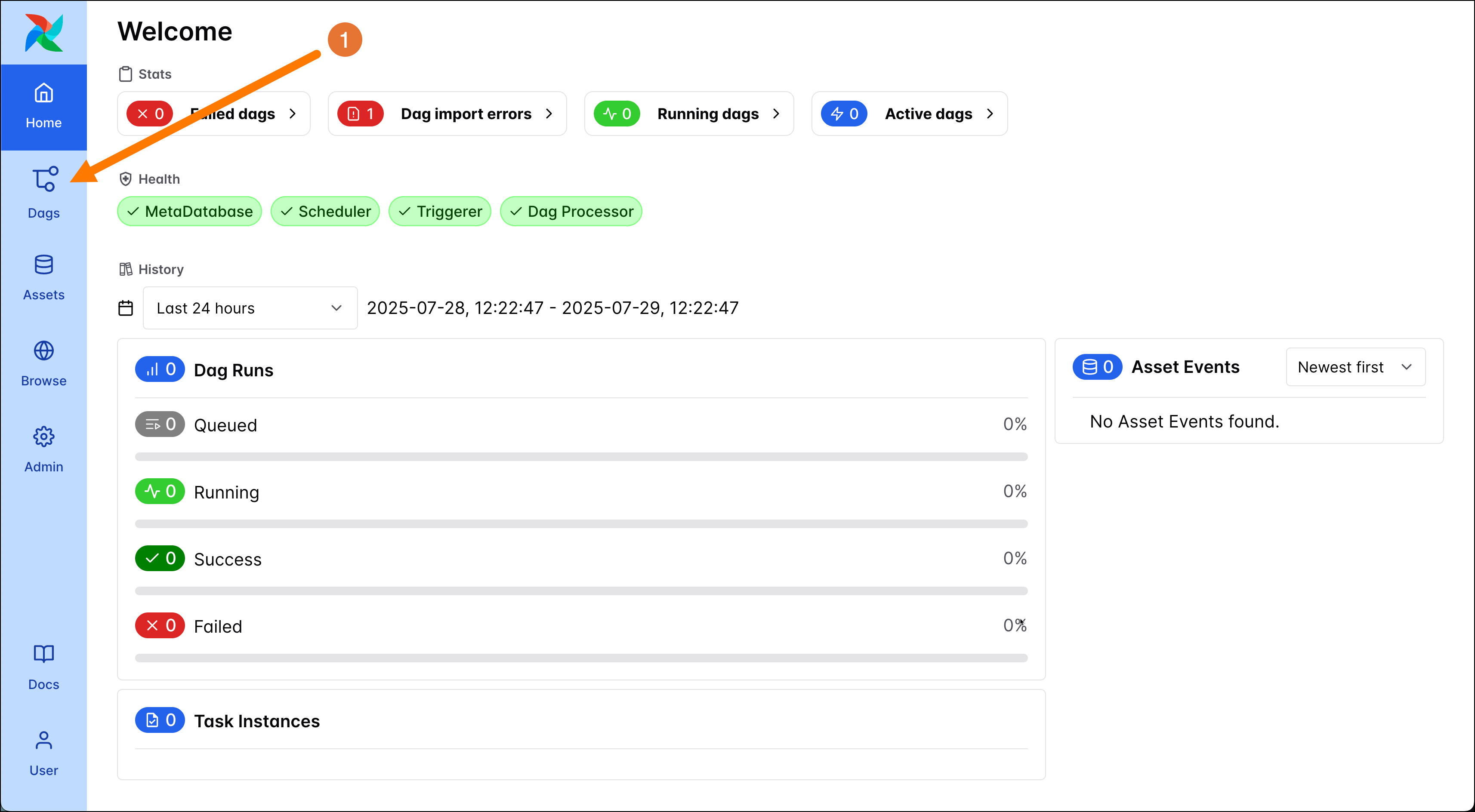

As soon as the project has started, the Airflow UI opens in your default browser. When running the start command for the first time this might take a couple of minutes. Note that as long as the Airflow project is running, you can always access the UI in another browser or additional tab by going to

localhost:8080. -

Click on the Dags button (1) in the Airflow UI to get to the DAG overview page to see the DAGs contained in this project.

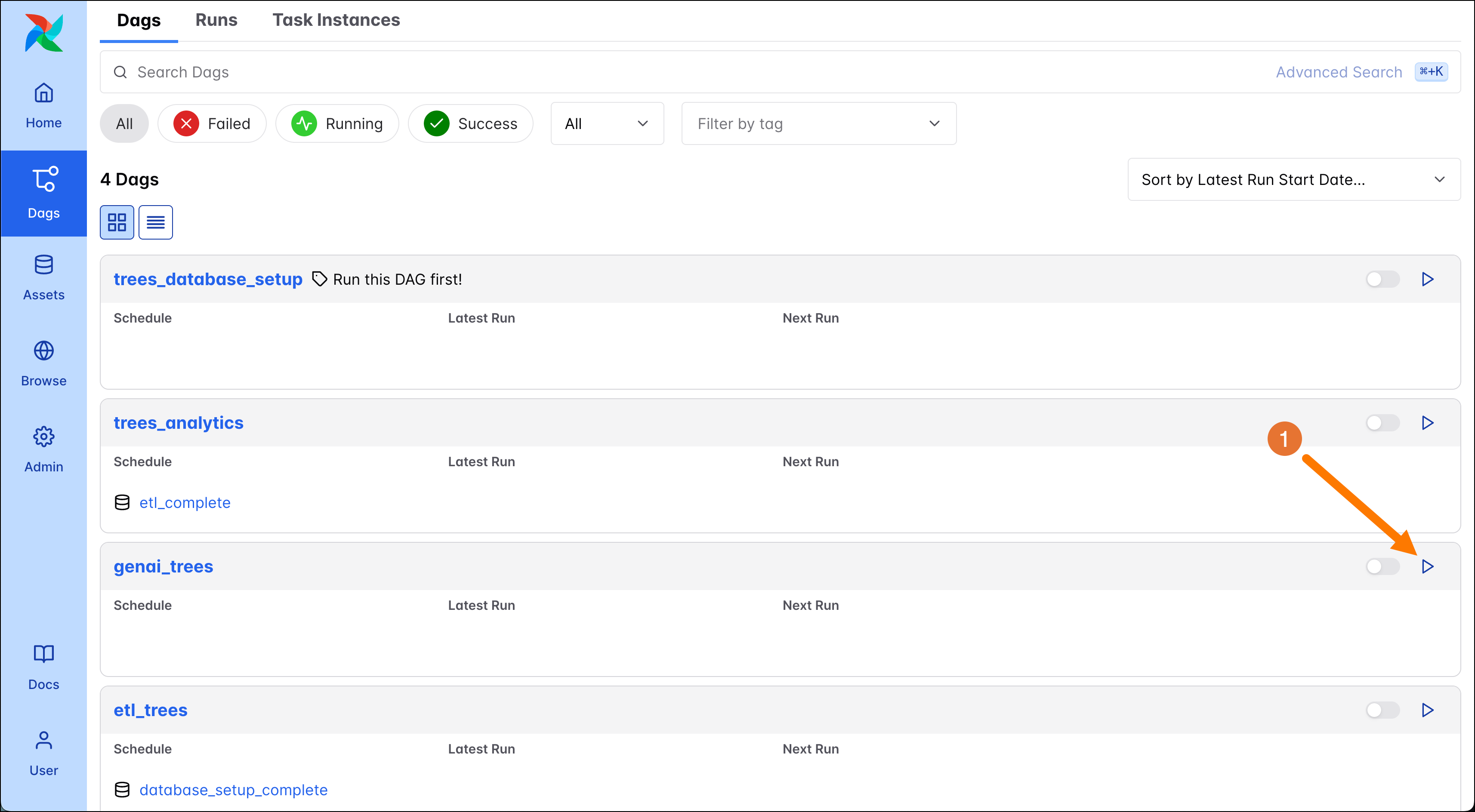
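If Airflow fails to start, a quick way to check whether ports 8080 and 5432 are already taken is to try binding them yourself. The minimal sketch below uses only Python’s standard `socket` module; `port_is_free` is a hypothetical helper, and a successful bind on `127.0.0.1` is a good but not perfect indicator that Airflow can use the port.

```python
import socket

# Default ports used by the local Airflow environment, as described above.
AIRFLOW_PORTS = {"Airflow UI": 8080, "Postgres": 5432}


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if a socket can bind to `port` on `host`, i.e. nothing holds it."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            # Typically "address already in use": another process owns the port.
            return False
    return True


if __name__ == "__main__":
    for name, port in AIRFLOW_PORTS.items():
        status = "free" if port_is_free(port) else "IN USE"
        print(f"{name} port {port}: {status}")
```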

Step 5: Run the GenAI DAG

You should now see four DAGs. They are paused by default and have no runs yet. For this quickstart, only the `genai_trees` DAG is relevant; the other DAGs belong to the Airflow ETL quickstart.

-

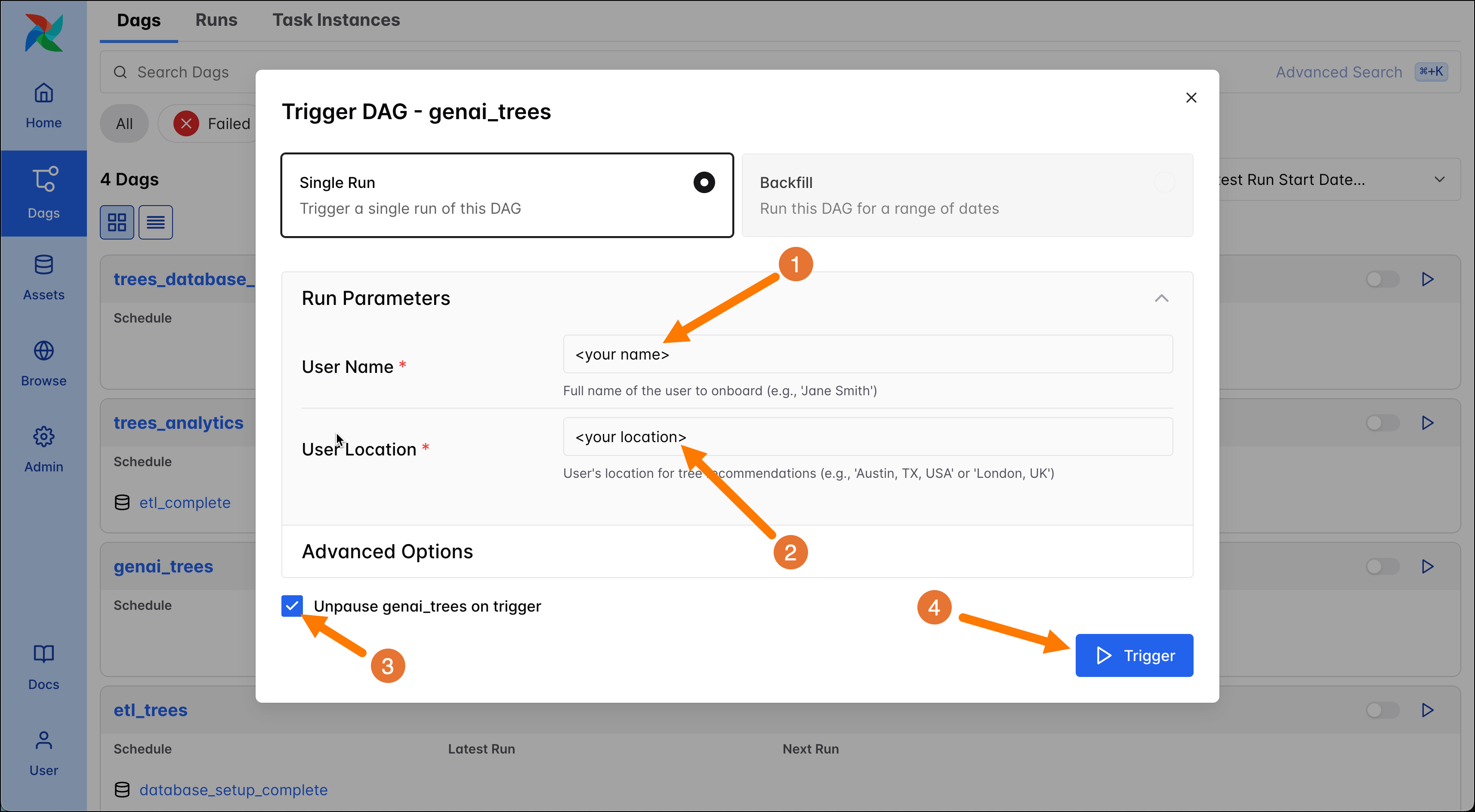

Open the Trigger DAG form of the

genai_treesDAG by clicking on its play button (1).

- The `genai_trees` DAG runs with params. If the DAG runs on a schedule, the default values are used, but on a manual run, like this one, you can provide your own values. In the Trigger DAG form, enter your name (1) and your location (2). Make sure the Unpause genai_trees on trigger checkbox (4) is selected, then trigger (3) a DAG run.

-

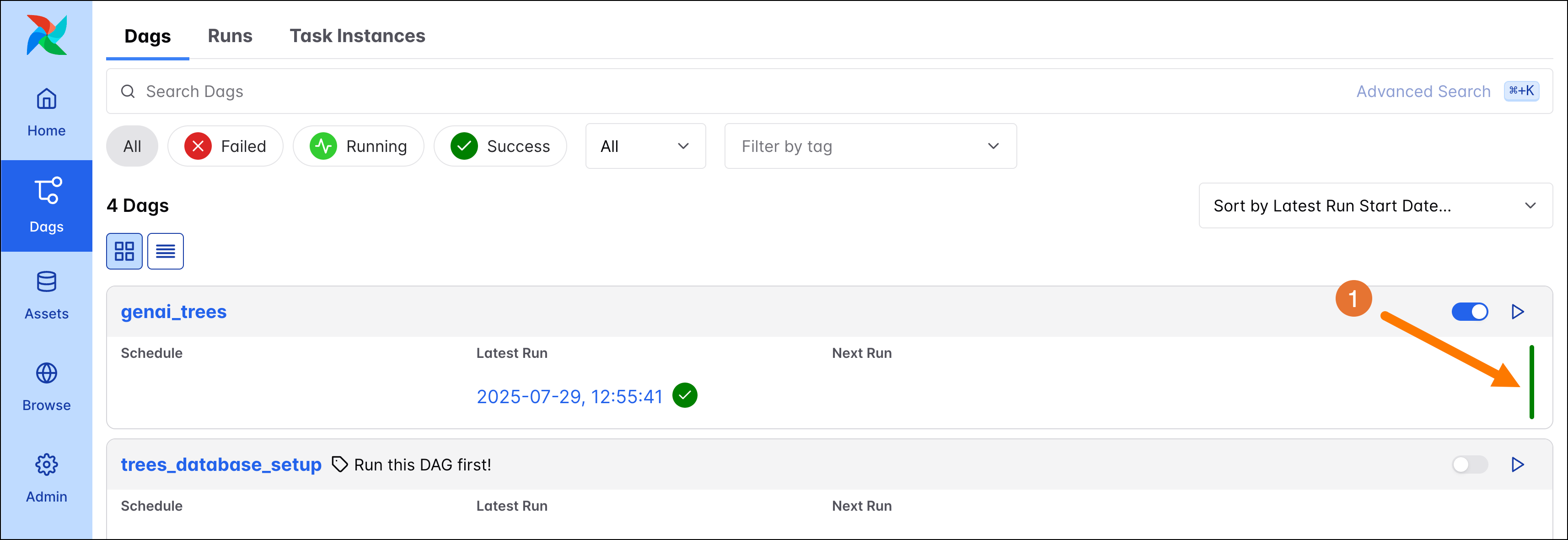

After 20-30 seconds the

genai_treesDAG has completed successfully (1).
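The difference between scheduled and manual runs can be sketched in plain Python: every param has a default, and values entered in the Trigger DAG form replace those defaults for that one run. The function `resolve_params`, the param names, and the default values below are hypothetical and are not taken from the `genai_trees` DAG; in Airflow, this merging happens for you, and tasks read the resulting values from the `params` entry of the task context.

```python
from typing import Any, Optional

# Hypothetical defaults standing in for the params defined on a DAG.
DEFAULT_PARAMS: dict = {
    "user_name": "Avery",
    "user_location": "Bern, Switzerland",
}


def resolve_params(defaults: dict, overrides: Optional[dict] = None) -> dict:
    """Return the params for one DAG run: the defaults, updated with user-supplied values."""
    resolved = dict(defaults)  # copy so the shared defaults are never modified
    resolved.update(overrides or {})
    return resolved


# Scheduled run: nobody fills in a form, so every param keeps its default.
scheduled_run = resolve_params(DEFAULT_PARAMS)

# Manual run: values entered in the Trigger DAG form replace the defaults.
manual_run = resolve_params(
    DEFAULT_PARAMS, {"user_name": "Kim", "user_location": "Lisbon, Portugal"}
)

print(scheduled_run)
print(manual_run)
```

Copying the defaults before updating them keeps one run’s input from leaking into the next run.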

Step 6: Explore the GenAI DAG

Let’s explore the GenAI DAG in more detail.

-

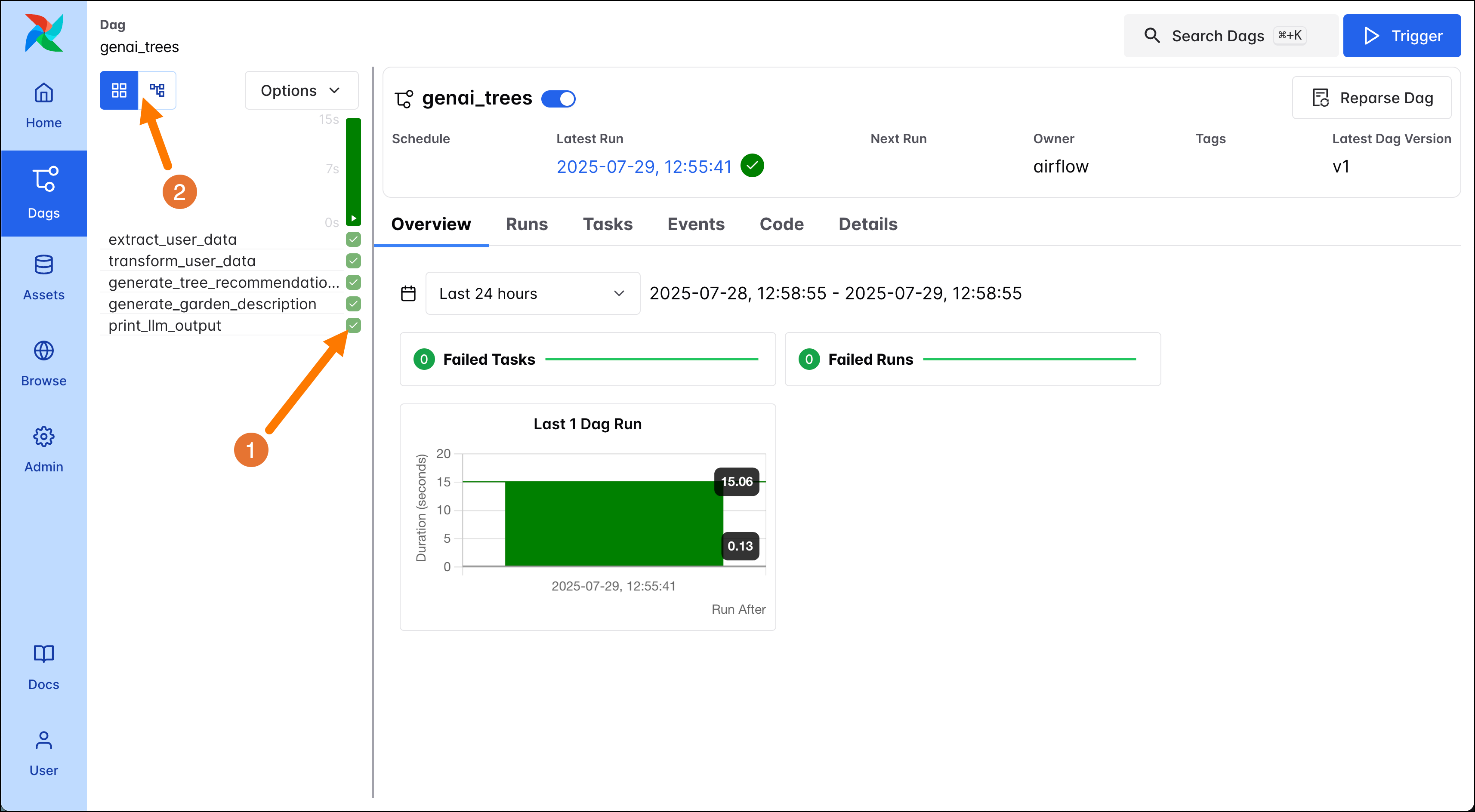

Click on the DAG name to get to the DAG overview. From here you can access a lot of detailed information about this specific DAG.

-

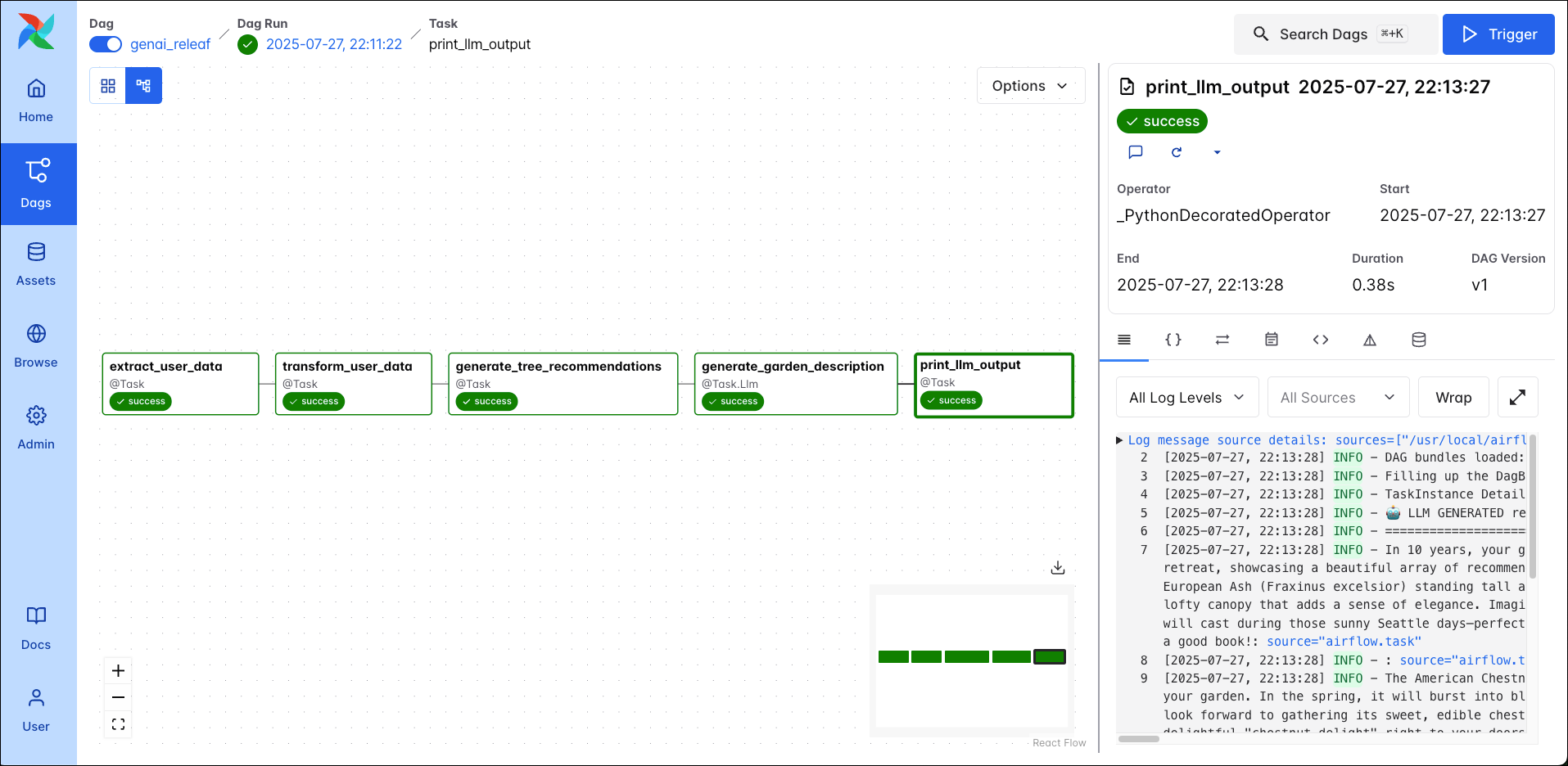

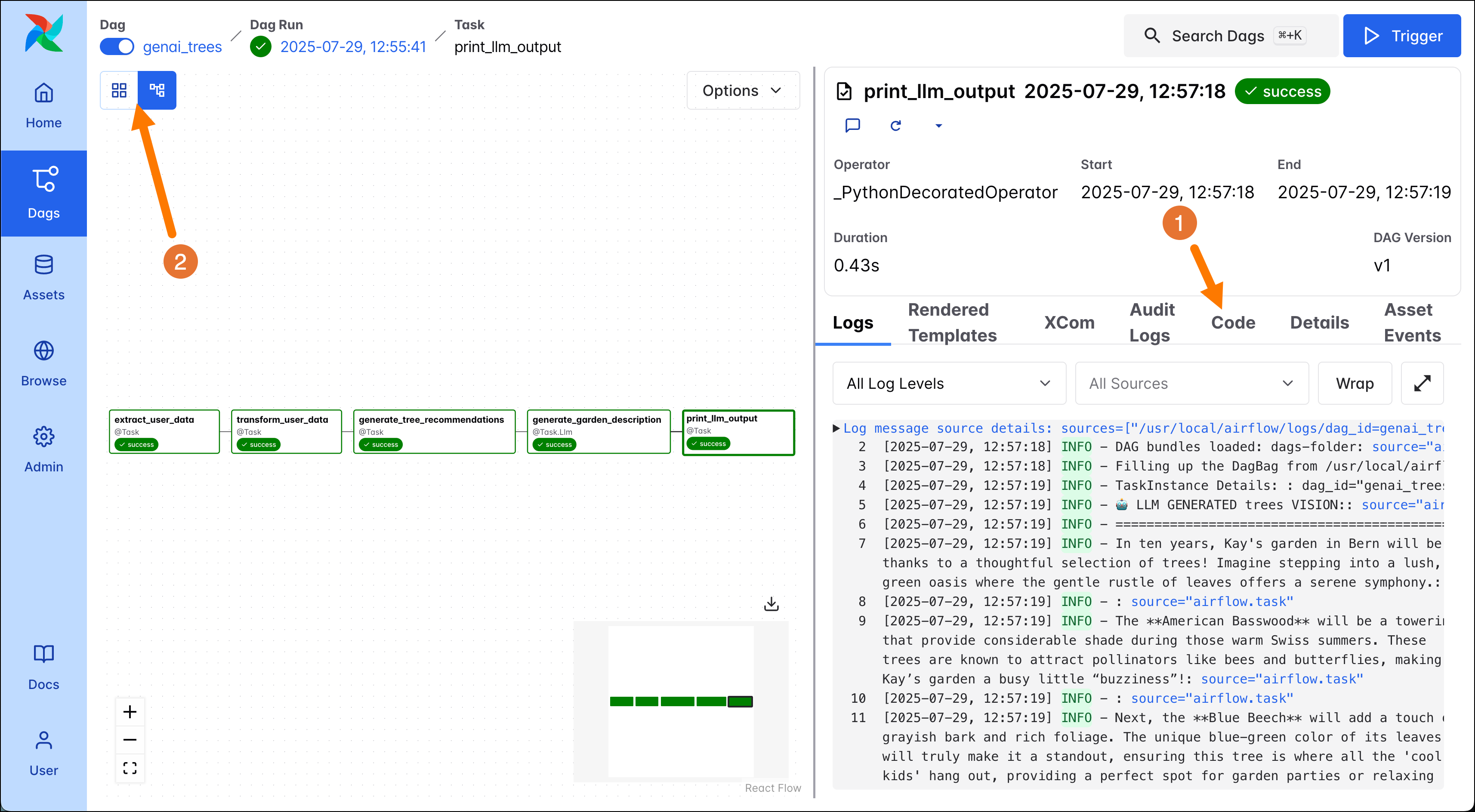

To navigate to the logs of individual task instances, click on the squares in the grid view. Open the logs for the

print_llm_outputtask (1) to see your garden description! -

Next, to view the dependencies between your DAGs you can toggle between the Grid and Graph view in the top left corner of the DAG overview (2). Each node in the graph corresponds to one task. The edges between the nodes denote how the tasks depend on each other, and by default the DAG graph is read from left to right. The code of the DAG can be found by viewed by clicking on the Code tab (1).

In the screenshot above, you can see the graph of the GenAI DAG. It consists of the following tasks:

- `extract_user_data`: This task extracts user data. In this quickstart example, the DAG uses the data entered in the Trigger DAG form: the name and location of one user. In a real-world use case, it would likely extract several users’ records at once by calling an API or reading from a database.
- `transform_user_data`: This task takes the data extracted by the first task and creates a comprehensive user record from it, using modularized functions stored in the `include` folder.
- `generate_tree_recommendations`: This task generates tree recommendations based on the transformed user data.
- `generate_garden_description`: This task uses the `@task.llm` decorator of the Airflow AI SDK to call the OpenAI API and generate a description of your future garden, assuming you follow the tree recommendations.
- `print_llm_output`: The final task prints the output of the LLM to the task logs.

- Lastly, check out the code that defines this DAG by clicking the Code tab. While you can view the DAG code in the Airflow UI, you can only change it in your IDE, not directly in the UI. Each task in your DAG corresponds to one Python function that has been turned into an Airflow task using the `@task` decorator (for `generate_garden_description`, the Airflow AI SDK’s `@task.llm` decorator).

Note

The `@task` decorator is one of several options for defining your DAGs. The two other options are traditional operators and the `@asset` decorator.

- (Optional) Make a small change to your DAG code, for example by adding a print statement in one of the `@task`-decorated functions. After the change has taken effect, run your DAG again and look for the output of the added print statement in the task logs.

Tip

When running Airflow with default settings, it can take up to 30 seconds for DAG changes to be visible in the UI and up to 5 minutes for a new DAG (with a new DAG ID) to show up. If you don’t want to wait, run `astro dev run dags reserialize` to parse all existing and new DAG files in your `dags` folder.
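To make the flow of data through the tasks listed above concrete, here is a plain-Python sketch of the same chain, run in dependency order. Every function body is a hypothetical stand-in: the real `genai_trees` DAG uses helper functions from the `include` folder and calls the OpenAI API through the `@task.llm` decorator, while `fake_llm` here only formats a string and the tree list is made up. In the real DAG, Airflow runs each function as a separate task and passes return values between tasks through XCom.

```python
def extract_user_data(name: str, location: str) -> dict:
    # In the real DAG, these values come from the Trigger DAG form (params).
    return {"name": name, "location": location}


def transform_user_data(raw: dict) -> dict:
    # Hypothetical cleanup step standing in for the helpers in the include folder.
    return {"name": raw["name"].strip().title(), "location": raw["location"].strip()}


def generate_tree_recommendations(user: dict) -> dict:
    # Hypothetical placeholder: a real implementation would use the user's location.
    return {**user, "trees": ["oak", "birch", "rowan"]}


def fake_llm(prompt: str) -> str:
    # Stand-in for the OpenAI call that @task.llm makes in the real DAG.
    return f"[LLM response to] {prompt}"


def generate_garden_description(recommendation: dict) -> str:
    prompt = (
        f"Describe the future garden of {recommendation['name']} in "
        f"{recommendation['location']} with these trees: "
        f"{', '.join(recommendation['trees'])}."
    )
    return fake_llm(prompt)


def print_llm_output(description: str) -> None:
    # The real task prints the LLM output to the task logs.
    print(description)


# Run the chain in dependency order, like reading the DAG graph from left to right.
raw = extract_user_data("  ada lovelace ", "London, UK")
user = transform_user_data(raw)
recommendation = generate_tree_recommendations(user)
description = generate_garden_description(recommendation)
print_llm_output(description)
```

Because each step only consumes the previous step’s return value, every function can be tested on its own, which is the same property that lets Airflow show logs for, and retry, each task separately.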

Step 7: Deploy your project

It is time to move the project to production!

- If you don’t already have access to Astro, sign up for a free Astro trial.

- Create a new Deployment in your Astro workspace.

- Run `astro login` in your local terminal to authenticate your computer to Astro. Follow the login instructions in the window that opens in your browser.

- Run `astro deploy` to deploy your project to Astro.

-

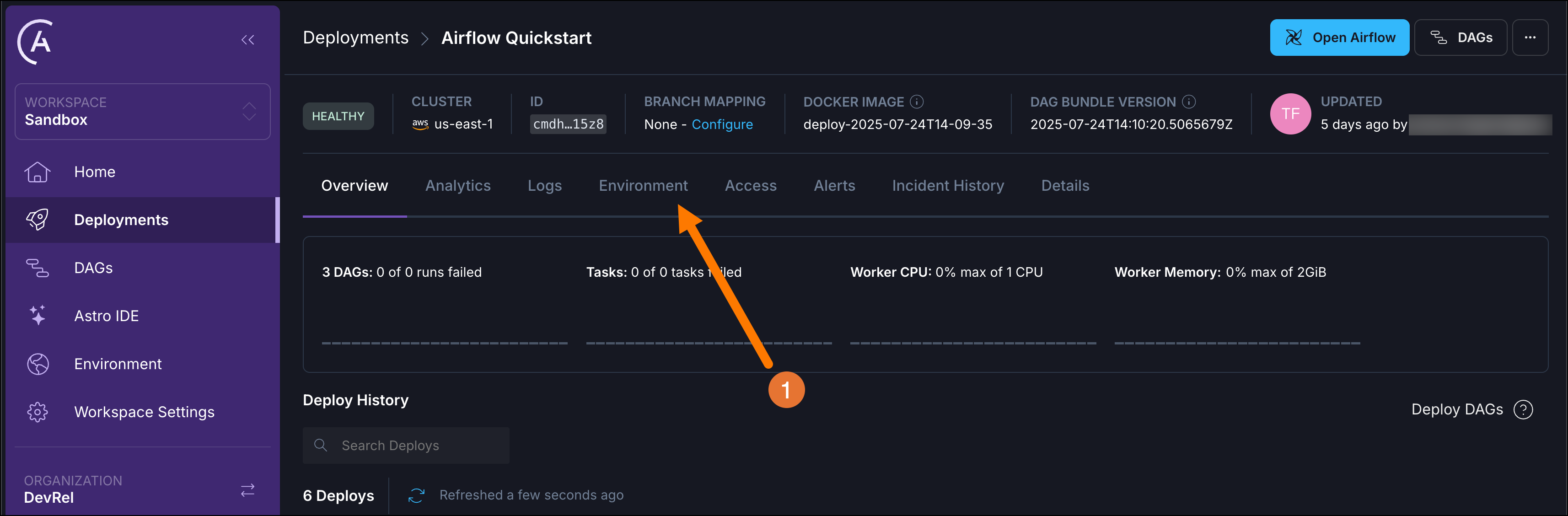

Since environment variables often contain secrets, they are not deployed from

.envwith the rest of your project code. To add environment variables like yourOPENAI_API_KEYto an Astro Deployment, use theEnvironmenttab (1) on your Deployment. Make sure to mark the environment variables as secret!

-

Once the deploy has completed, click the blue

Open Airflowbutton on your Deployment to see the DAGs running in the cloud. Here, you can run thegenai_treesDAG again to get another garden description!

Next steps

Awesome! You ran a GenAI DAG locally and in the cloud. To continue your learning we recommend the following resources:

- If you are curious about the other DAGs in this project, which form an ETL pipeline, check out the Airflow ETL quickstart.

- To get a structured video-based introduction to Apache Airflow and its concepts, sign up for the Airflow 101 (Airflow 3) Learning Path in the Astronomer Academy.

- For a short step-by-step walkthrough of the most important Airflow features, complete our Airflow tutorial.

Run the quickstart without the Astro CLI

If you cannot install the Astro CLI on your local computer, you can still run the pipeline in this example.

- Sign up for a free trial of Astro.

- Create a new Deployment in your Astro workspace.

-

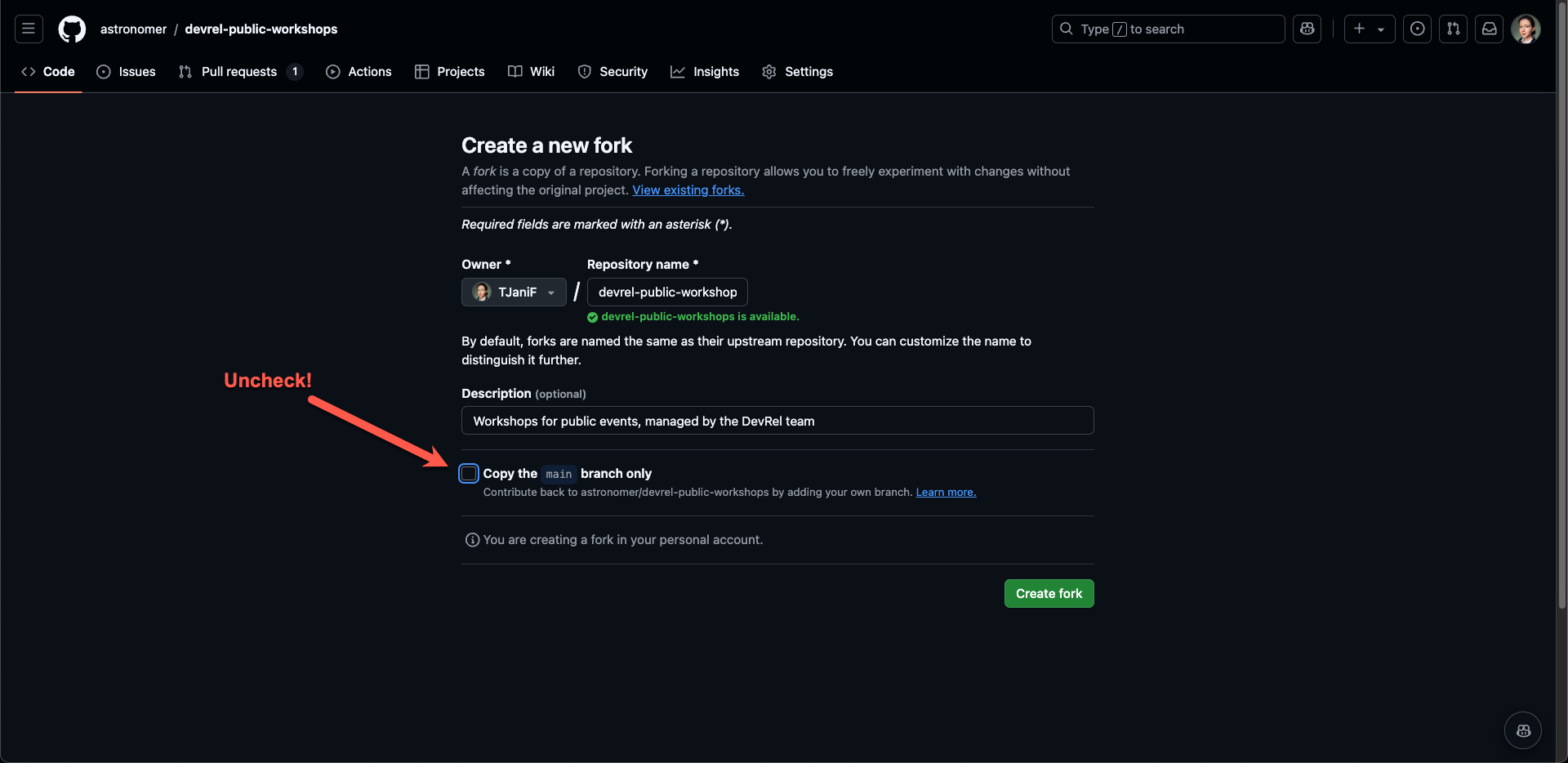

Fork the Airflow quickstart repository to your GitHub account. Make sure to uncheck the

Copy the main branch onlybox!

-

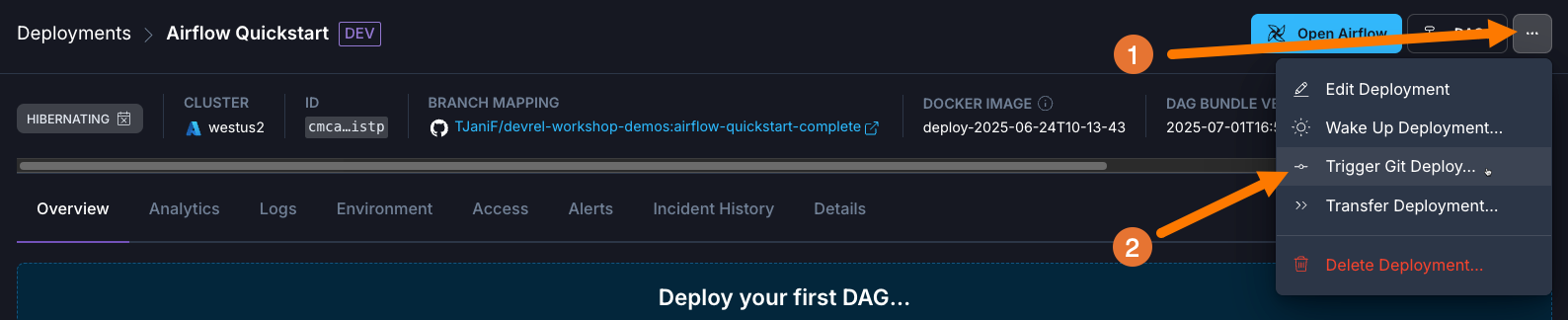

Set up the Astro GitHub integration to map your Deployment to the

airflow-quickstart-completebranch of your forked repository. -

Select Trigger Git Deploy from the More actions menu in your Deployment settings to trigger the first deploy for the mapped repository.

-

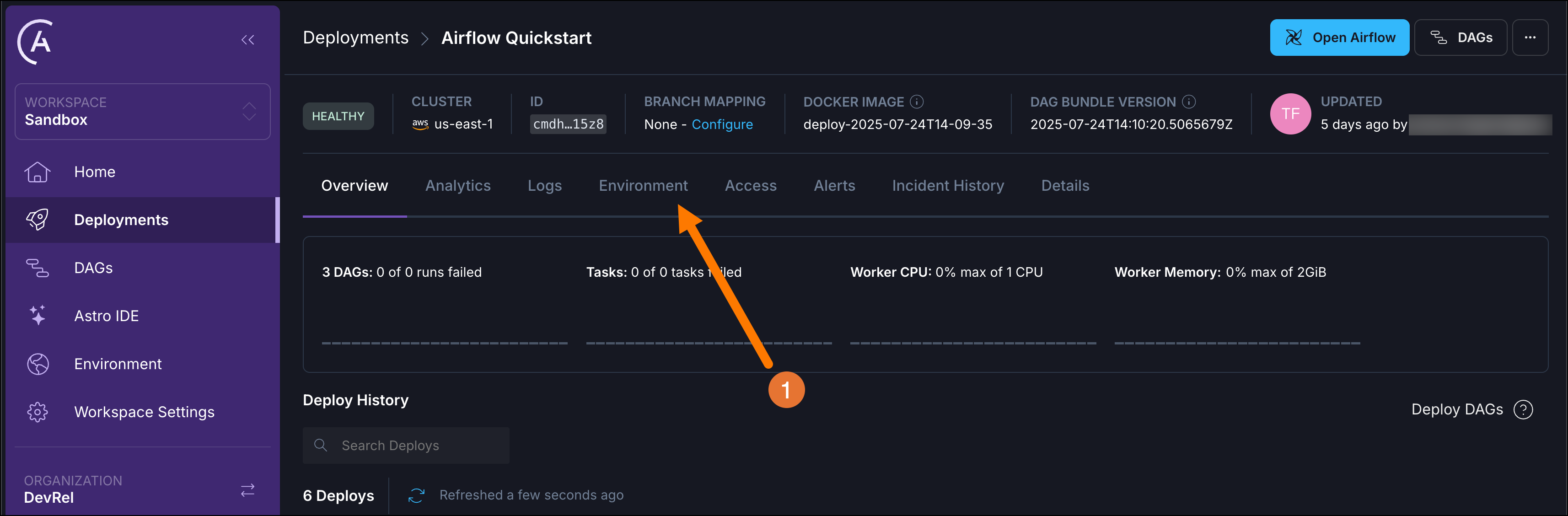

Add an environment variable called

OPENAI_API_KEYwith the value of your OpenAI API Key to your Astro Deployment by clicking on theEnvironmenttab (1) on your Deployment. Make sure to mark the environment variables as secret! This key will be used by the Airflow AI SDK to make a call to OpenAI generating your future garden description.

- Once the deploy has completed, click the blue Open Airflow button on your Deployment to see the DAGs running in the cloud. From here, continue with Step 5 to complete the quickstart, skipping the deploy instructions in Step 7, since you have already deployed your project!