Airflow plugins

Airflow plugins are external features that can be added to customize your Airflow installation, including the Airflow UI. Airflow 3 added comprehensive plugin support in version 3.1 with a plugin manager interface that allows you to add many different components to a plugin, from custom macros, to FastAPI endpoints, to React apps.

In this guide, you’ll learn about when you might want to use plugins and how to create them, including examples for popular types of plugins.

Airflow 2 supported Flask AppBuilder views, Flask AppBuilder menu items, and Flask Blueprints in plugins, which have been deprecated in Airflow 3. All new plugins in Airflow 3 should use External views, React apps, FastAPI apps, and FastAPI middlewares instead.

If you are looking to use a legacy FAB-based plugin in Airflow 3, see the Upgrading Guide in the FAB provider documentation.

Assumed knowledge

To get the most out of this guide, you should have an understanding of:

- Basic Airflow concepts. See Introduction to Apache Airflow.

- Airflow core components. See Airflow’s components.

- Basics of FastAPI.

- Basics of JavaScript and React.

When to use plugins

Plugins offer a flexible way to build on top of Airflow. While most plugins are written to extend the Airflow UI, you can also add other functionality to your Airflow instance like a FastAPI app. Some examples of when you might want to use plugins include:

- Adding a button to the Home view to trigger a custom action, for example to pause and unpause all dags.

- Adding middleware to the Airflow API to log or modify requests and responses.

- Adding a custom button to the task instance Details view that links to files or logs in external data tools relevant to the task.

- Creating additional API endpoints for your Airflow instance, for example to run a specific set of dags.

- Adding a custom dashboard displaying information related to your data pipelines on a new page in the Airflow UI, for example showing the status of your most critical tasks.

Plugin interface

The plugin interface is defined by the AirflowPlugin class and allows you to add components to your plugin. A plugin can consist of one or more components; for example you can add a React app, a FastAPI app, and several custom macros in the same plugin. You can add as many plugins as you want to your Airflow instance.

To register a plugin, place it in a Python file in the plugins folder of your Airflow instance. Astronomer recommends keeping each plugin in a separate file.

The code snippet below shows the my_plugin plugin. It is defined by creating a class that inherits from the AirflowPlugin class and adding components to that class. Currently the plugin does not have any components, so it does not do anything. You can learn more about the different components in the Plugin components section.
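A minimal sketch of such an empty plugin file could look like the following. The file name and the class name MyPlugin are illustrative, and the try/except fallback is an assumption added only so the snippet also runs outside an Airflow environment; in a real plugins folder the import and the class are all you need.

```python
# plugins/my_plugin.py (hypothetical file name)
try:
    from airflow.plugins_manager import AirflowPlugin
except ImportError:
    # Stand-in so this sketch can be executed without Airflow installed.
    class AirflowPlugin:
        name = None


class MyPlugin(AirflowPlugin):
    # The name under which the plugin is listed in Airflow.
    name = "my_plugin"
    # No components are registered yet, so the plugin does nothing.
```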

Note that when developing plugins you’ll need to restart the Airflow API server to see the changes you make to the plugin. To reload plugins automatically, set the environment variable AIRFLOW__CORE__LAZY_LOAD_PLUGINS=False, or set lazy_load_plugins = False in the [core] section of your airflow.cfg file. Even then, changes are not reflected in newly running tasks until the scheduler is restarted.

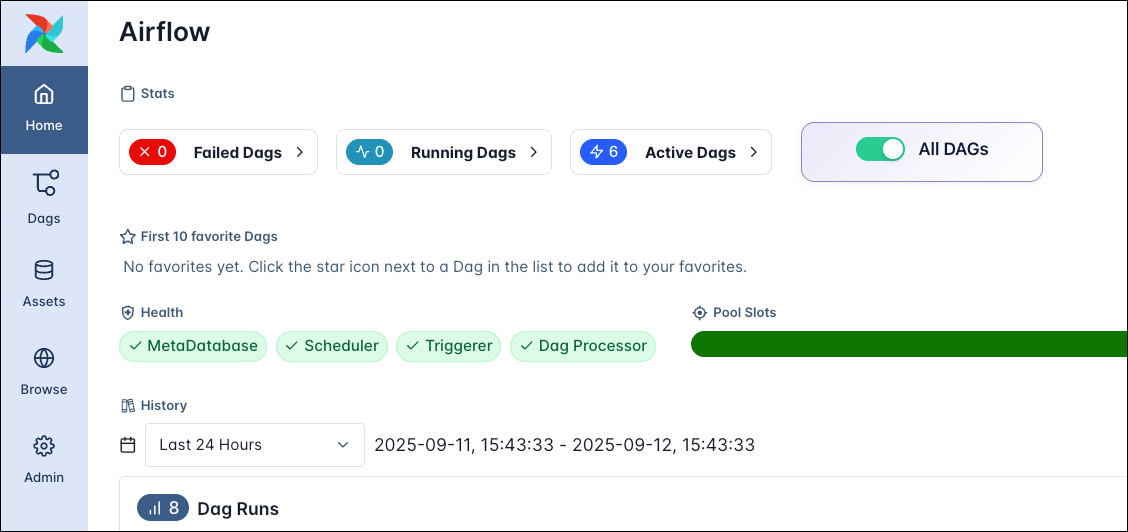

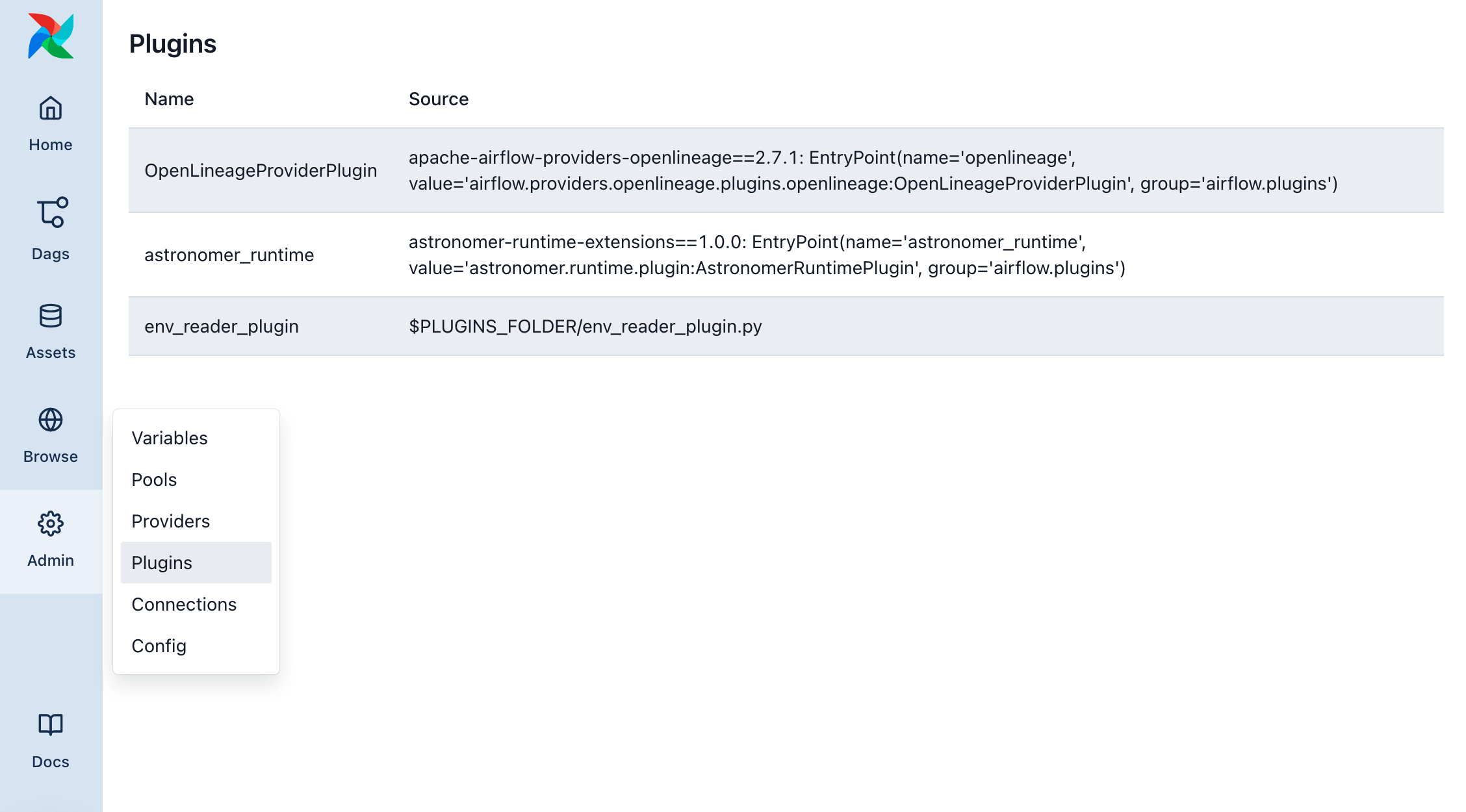

Verify loaded plugins

To see all currently loaded plugins, and to verify whether your plugin has been loaded, open the Plugins page from the Admin menu on the left navigation bar.

Plugin components

This section contains examples for each of the different components that can be added to a plugin. The available components are:

- External views: Additional Airflow UI views in different locations.

- React apps: Embedding a React app in the Airflow UI.

- Macros: Pre-defined functions that can be used in Jinja templates in templatable fields of your operators.

- FastAPI apps: Additional API endpoints for your Airflow instance.

- Middlewares: Middleware for the Airflow API.

- Operator extra links: Buttons for your operators that often link to external systems.

- Timetables: Additional timetables for your dags. See timetables in the Airflow documentation.

- Listeners: Listeners for your Airflow instance that execute when certain events occur. See listeners in the Airflow documentation.

External views

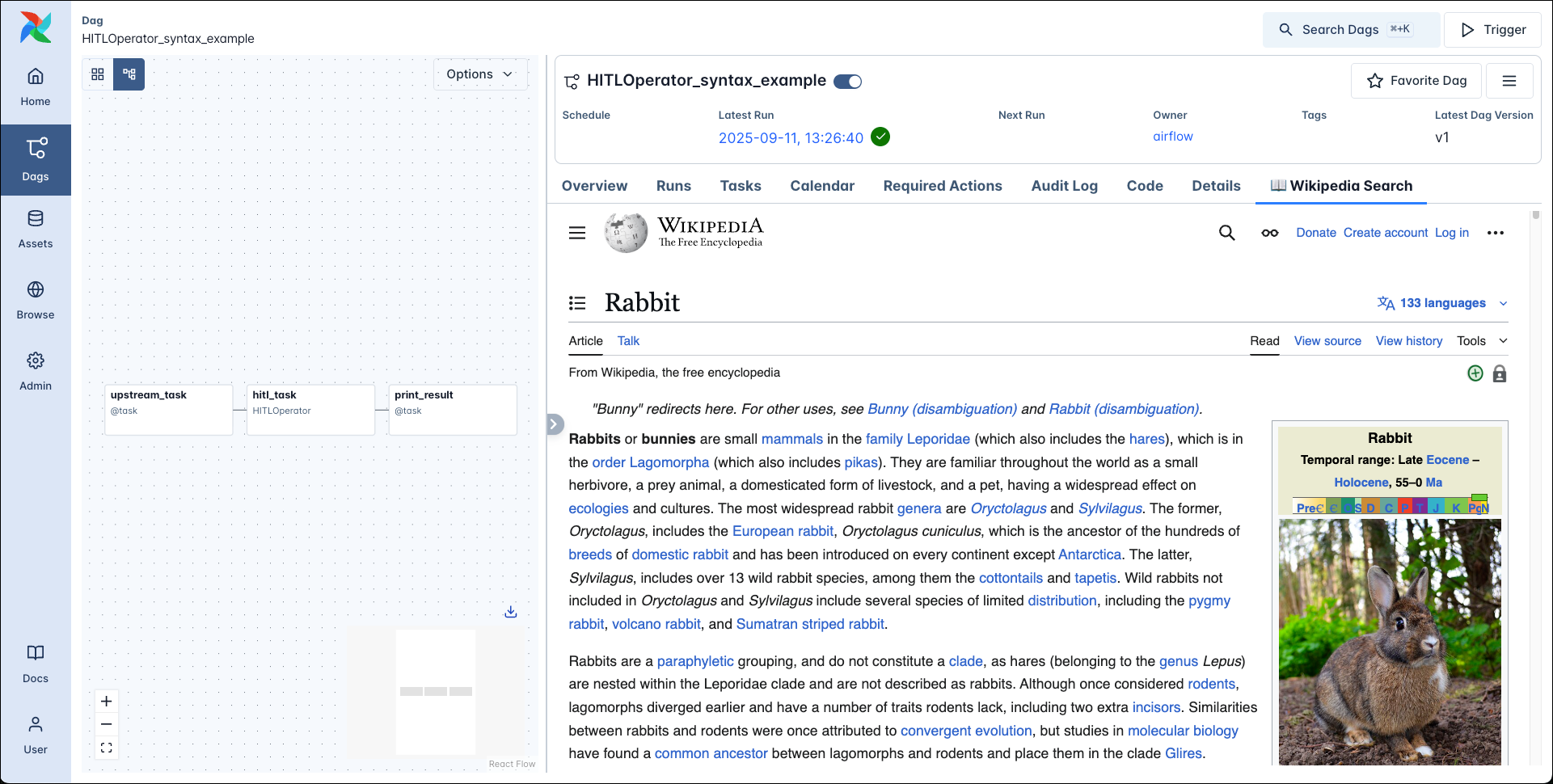

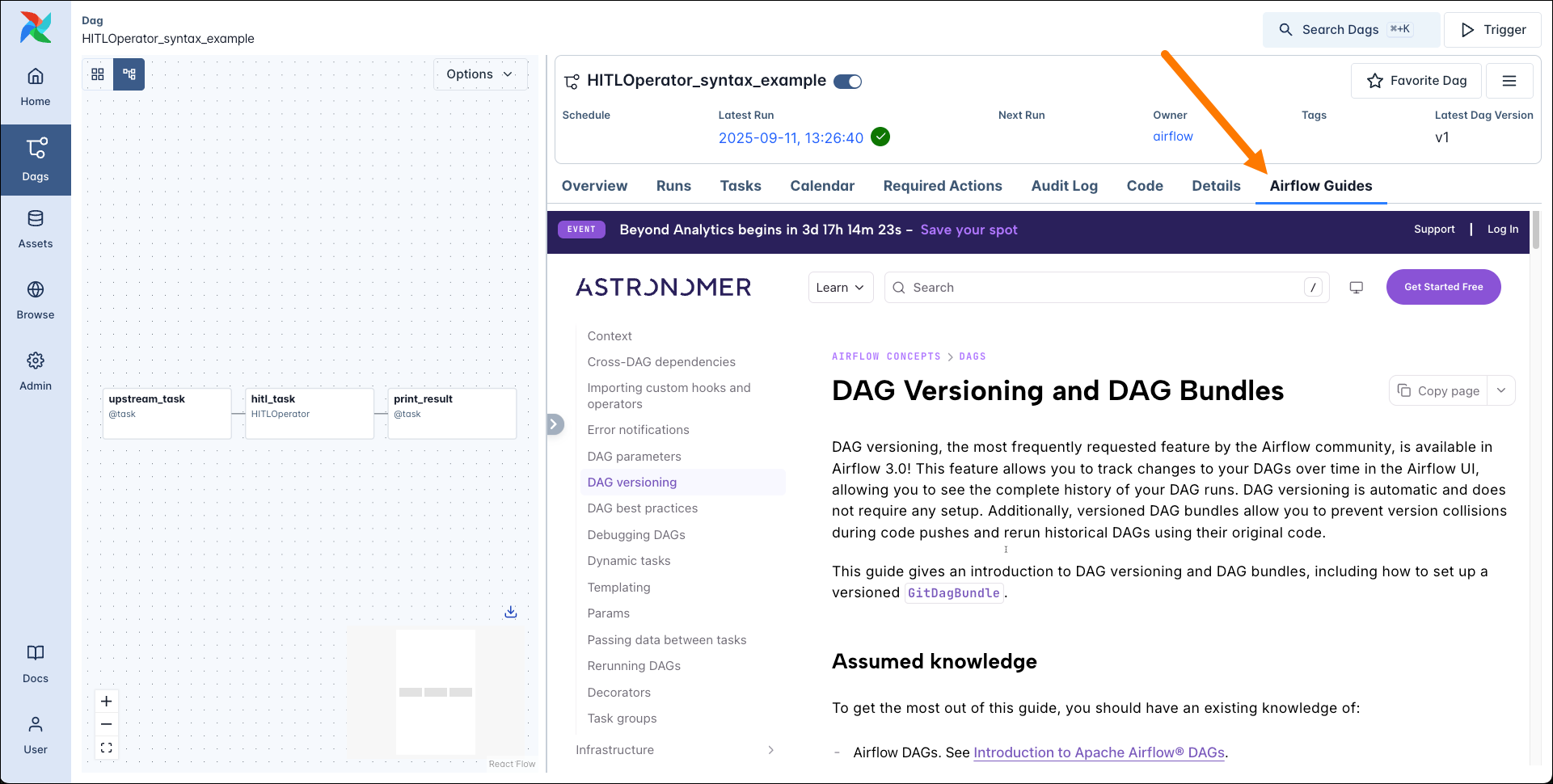

You can add additional views to your Airflow instance in different locations. You can point the view to an existing website to embed it as an iframe, or to a new one that you create in a FastAPI app registered alongside the external view in the same plugin. The example below shows how to add a view that embeds Wikipedia as an iframe.
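As a rough sketch, an external view can be described by a dictionary that is then listed in the external_views attribute of the plugin class. The keys below follow the Airflow 3.1 plugin interface as understood here; the chosen article URL, the route name, and the plugin class name in the comment are illustrative assumptions, so check the keys against the Airflow version you run.

```python
# Metadata for one external view. Treat the keys as assumptions to verify
# against the plugin documentation of your Airflow version.
wikipedia_view = {
    "name": "Wikipedia",
    # Page to embed (illustrative choice of article).
    "href": "https://en.wikipedia.org/wiki/Apache_Airflow",
    # Where the view appears; "dag" adds a tab on every dag page.
    "destination": "dag",
    # With a route set, the page is rendered inside an iframe instead of
    # opening as an external link. The route has no leading slash.
    "url_route": "wikipedia",
}

# Registration on the plugin class would then look like:
#   class WikipediaPlugin(AirflowPlugin):
#       name = "wikipedia_plugin"
#       external_views = [wikipedia_view]
```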

In the Airflow UI, you can see the external view button in the Details view of the dag.

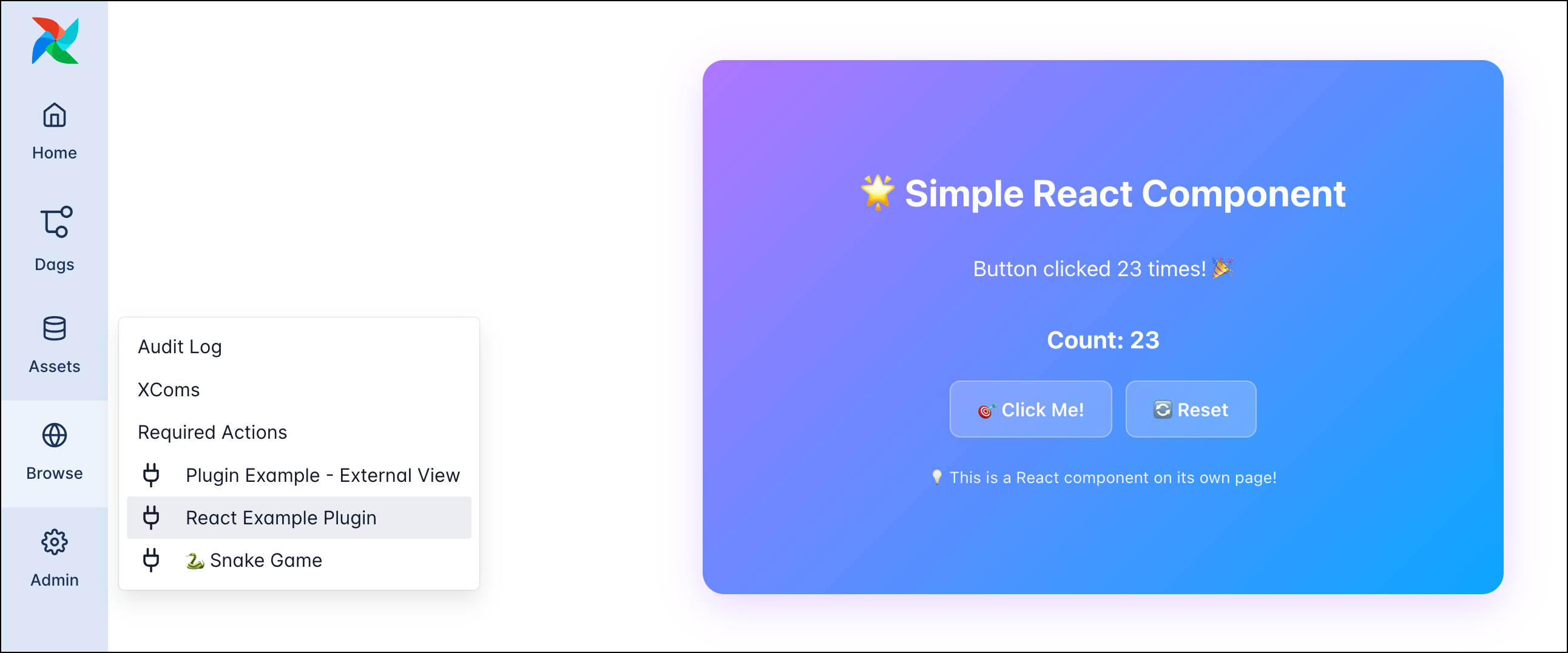

React apps

If you want to embed a more complex application in the Airflow UI, you can use a React app. React apps can be added in the same locations as External views.
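A React app is registered in a similar way, through a dictionary in the react_apps attribute of the plugin class. The sketch below is an assumption-laden illustration: the bundle URL is a placeholder, the category and route names are made up, and the keys should be verified against the plugin interface of your Airflow version.

```python
# Metadata for one React app component (illustrative values only).
simple_react_app = {
    "name": "Simple React App",
    # URL of the compiled JavaScript bundle. Placeholder, not a real file.
    "bundle_url": "https://example.com/static/js/my-app.js",
    # "nav" places the app in the navigation bar on the left.
    "destination": "nav",
    # Menu to place the entry in when the destination is "nav".
    "category": "browse",
    # Relative route for the app inside the Airflow UI, no leading slash.
    "url_route": "simple-react-app",
}

# Registration on the plugin class would then look like:
#   class SimpleReactPlugin(AirflowPlugin):
#       name = "simple_react_plugin"
#       react_apps = [simple_react_app]
```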

After this you can add your React app in the my-app.js file and access it in the Airflow UI at http://localhost:8080/simple-react-app/.

You can put your React app inside a few selected existing pages in the Airflow UI. The supported views are dashboard, dag_overview and task_overview. To position the element in the existing page, use the CSS order property, which determines the flex order.

Note that you need to make the plugin available as a global variable in the JavaScript file. The example below shows how to do this for the DAGToggleWidget plugin.

The React app integration is experimental and interfaces might change in future versions. Particularly, dependency and state interactions between the UI and plugins may need to be refactored for more complex plugin apps.

Macros

A macro is a pre-defined function that can be used in Jinja templates in templatable fields of your operators.

In your dag, you can use the macro in any templatable field.
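To make this concrete, here is a small sketch of a macro and of how it might be referenced in a template. The function quarter_of, the plugin name my_macro_plugin, and the class name in the comment are hypothetical, and the template path assumes that plugin macros are exposed as macros.<plugin name>.<function name>.

```python
from datetime import date


def quarter_of(ds: str) -> str:
    """Return the calendar quarter for an ISO date string such as `ds`."""
    d = date.fromisoformat(ds)
    return f"{d.year}-Q{(d.month - 1) // 3 + 1}"


# Registration on the plugin class would look like:
#   class MyMacroPlugin(AirflowPlugin):
#       name = "my_macro_plugin"
#       macros = [quarter_of]

# Value for a templatable field, for example the bash_command of a
# BashOperator. Jinja renders the expression at run time.
TEMPLATED_COMMAND = "echo {{ macros.my_macro_plugin.quarter_of(ds) }}"

print(quarter_of("2025-05-17"))  # prints 2025-Q2
```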

FastAPI apps

You can use a FastAPI app in your plugin to add endpoints to your Airflow instance through which you can interact with it, in addition to the public Airflow API.
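A minimal sketch of such an app, assuming the url_prefix /hello-app and a single /hello route, might look like this. The JSON shape of the response, the function names, and the plugin class name in the comment are assumptions made for illustration. FastAPI is imported inside the factory function so the handler can be tested on its own.

```python
def hello() -> dict:
    """Handler for GET <url_prefix>/hello."""
    return {"message": "Hello from Airflow!"}


def build_hello_app():
    """Create the FastAPI app that serves the handler above."""
    # Imported lazily so the handler stays importable without FastAPI.
    from fastapi import FastAPI

    app = FastAPI(title="Hello App")
    app.add_api_route("/hello", hello, methods=["GET"])
    return app


def fastapi_app_metadata() -> dict:
    """Build the entry for the plugin's fastapi_apps list."""
    return {
        "app": build_hello_app(),
        "url_prefix": "/hello-app",
        "name": "Hello App",
    }


# Registration on the plugin class would look like:
#   class HelloPlugin(AirflowPlugin):
#       name = "hello_plugin"
#       fastapi_apps = [fastapi_app_metadata()]
```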

After adding the plugin to your Airflow instance, you can access the endpoints of the FastAPI app at http://localhost:8080/<url_prefix>. In the example above, you can send a GET request to http://localhost:8080/hello-app/hello to get the response “Hello from Airflow!”.

FastAPI endpoints on Astro

To call a FastAPI plugin endpoint on Astro, for example when combining a FastAPI app with an external_views nav link, use a relative path (no leading /) in the href. On Astro, the API server is reachable at a different base URL than in other environments, and a relative path ensures that URIs are resolved correctly.

When serving static files via FastAPI’s StaticFiles, always use relative paths in your HTML templates and Python code. Absolute paths like /my-app/static/style.css break on Astro because the base URL differs from local development.
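The difference between relative and absolute paths can be illustrated with standard URL resolution. The sketch below assumes, purely for illustration, a hosted base URL that contains a path segment; the host and segment names are made up and do not describe the actual Astro URL layout.

```python
from urllib.parse import urljoin

LOCAL_BASE = "http://localhost:8080/"
# Hypothetical hosted base URL that includes a path segment.
HOSTED_BASE = "https://example.com/my-deployment/"

RELATIVE = "hello-app/hello"   # no leading slash
ABSOLUTE = "/hello-app/hello"  # leading slash


def resolve(base: str, href: str) -> str:
    """Resolve an href against a base URL the way a browser would."""
    return urljoin(base, href)


# Locally both forms point at the same endpoint.
print(resolve(LOCAL_BASE, RELATIVE))   # http://localhost:8080/hello-app/hello
print(resolve(LOCAL_BASE, ABSOLUTE))   # http://localhost:8080/hello-app/hello

# With a path segment in the base URL, only the relative form keeps it.
print(resolve(HOSTED_BASE, RELATIVE))  # https://example.com/my-deployment/hello-app/hello
print(resolve(HOSTED_BASE, ABSOLUTE))  # https://example.com/hello-app/hello
```

An absolute path replaces everything after the host, which is why it only works when the API server sits at the root of the host.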

API documentation is automatically generated:

- OpenAPI JSON schema under https://<api_server_url>/<url_prefix>/openapi.json

- Swagger UI under https://<api_server_url>/<url_prefix>/docs

- Redoc under https://<api_server_url>/<url_prefix>/redoc

Note that the endpoints of your FastAPI app are not protected by the authentication of the Airflow API, so you need to set up authentication for them on your own. See the FastAPI docs for more information.

Middlewares

You can add middleware to the Airflow API server to modify requests and responses to all its APIs. This includes the REST API, and also the API serving the Airflow UI or any FastAPI app you might have added.

This simple example just adds a print statement to the console when a request is made to the Airflow API, and adds a custom header to the response.

Remember that middleware is applied to all requests to any API served by the Airflow API server. If you’d like to be selective about which requests to modify, you need to implement the logic in the middleware to only execute the middleware for the specific requests you want to modify.
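One way to sketch this is a pure ASGI middleware that prints a line per request, adds a response header, and only acts on requests under a given path prefix. The class name, the header name and value, and the /api/ prefix are hypothetical, and the metadata keys at the end are an assumption about how an entry in the plugin's fastapi_root_middlewares list is shaped.

```python
class LogAndTagMiddleware:
    """Pure ASGI middleware: logs matching requests and tags the response."""

    def __init__(self, app, path_prefix: str = "/"):
        self.app = app
        self.path_prefix = path_prefix

    async def __call__(self, scope, receive, send):
        # Pass through anything that is not an HTTP request under the prefix.
        is_http = scope["type"] == "http"
        if not is_http or not scope.get("path", "").startswith(self.path_prefix):
            await self.app(scope, receive, send)
            return

        print(f"Request: {scope.get('method')} {scope.get('path')}")

        async def send_with_header(message):
            # Add the custom header when the response starts.
            if message["type"] == "http.response.start":
                headers = list(message.get("headers", []))
                headers.append((b"x-custom-header", b"airflow-plugin"))
                message = {**message, "headers": headers}
            await send(message)

        await self.app(scope, receive, send_with_header)


# Entry for the plugin's fastapi_root_middlewares list (assumed key names).
middleware_metadata = {
    "middleware": LogAndTagMiddleware,
    "args": [],
    "kwargs": {"path_prefix": "/api/"},
    "name": "Log and tag middleware",
}
```

Keeping the path check at the top of the call means requests outside the prefix are forwarded untouched, so the middleware adds very little overhead for them.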

Operator extra links

An operator extra link is a button that can be added to the Details view of a task instance of any operator. Operator extra links can be implemented in two ways:

- global_operator_extra_links: A button that will be added to the Details view of every task instance of every operator.

- operator_extra_links: A button that will be added to the Details view of all task instances of a specific operator.

Global and specific operator extra links are added separately in Airflow plugins. The example below shows how to add a global operator extra link that links to the Google search results of the value returned by a task instance.
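A sketch of such a link class is shown here. It assumes that BaseOperatorLink can be imported from airflow.sdk and that the task's return value can be read with XCom.get_value; both are assumptions to verify against your Airflow version. The helper build_google_search_url and the plugin class name in the comment are hypothetical, and the try/except fallback exists only so the snippet runs without Airflow installed.

```python
from urllib.parse import urlencode

try:
    from airflow.sdk import BaseOperatorLink
except ImportError:
    # Stand-in so this sketch can be executed without Airflow installed.
    class BaseOperatorLink:
        pass


def build_google_search_url(value) -> str:
    """Build a Google search URL for the given value."""
    return "https://www.google.com/search?" + urlencode({"q": str(value)})


class GoogleSearchXComLink(BaseOperatorLink):
    # Label of the button in the Airflow UI.
    name = "Search result in Google"

    def get_link(self, operator, *, ti_key):
        # Assumption: the value returned by the task is stored in XCom
        # under the key "return_value" and can be fetched by ti_key.
        from airflow.sdk.execution_time.xcom import XCom

        value = XCom.get_value(ti_key=ti_key, key="return_value")
        return build_google_search_url(value)


# Registration on the plugin class would look like:
#   class ExtraLinkPlugin(AirflowPlugin):
#       name = "extra_link_plugin"
#       global_operator_extra_links = [GoogleSearchXComLink()]
```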

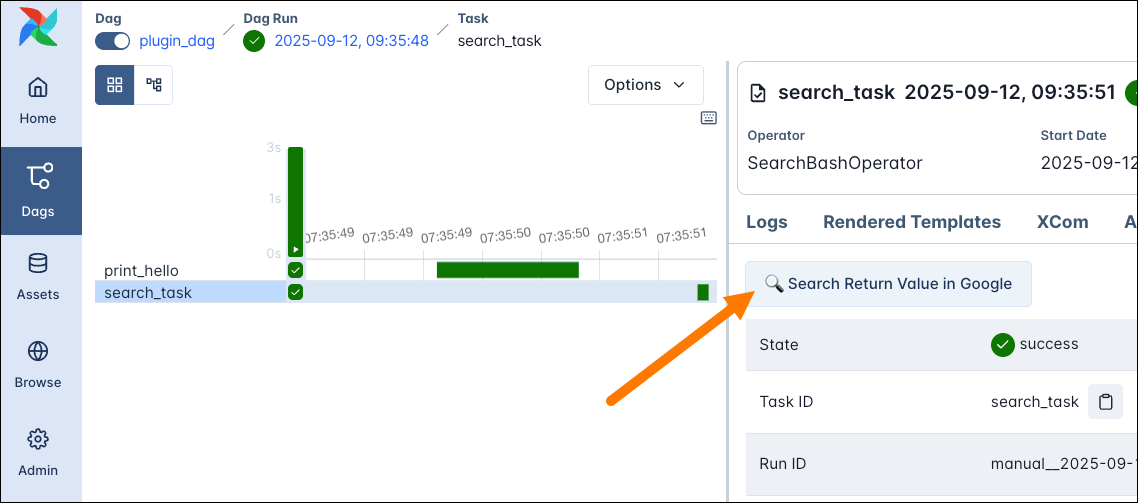

To use this operator extra link you need to add it to one of your operators, essentially creating a custom operator. The code snippet below shows the SearchBashOperator that subclasses the BashOperator and adds the GoogleSearchXComLink to it.

In the Airflow UI, you can see the operator extra link button in the Details view of the task instance.

Locations

UI plugins like React apps and External views can be added to the Airflow UI in different locations.

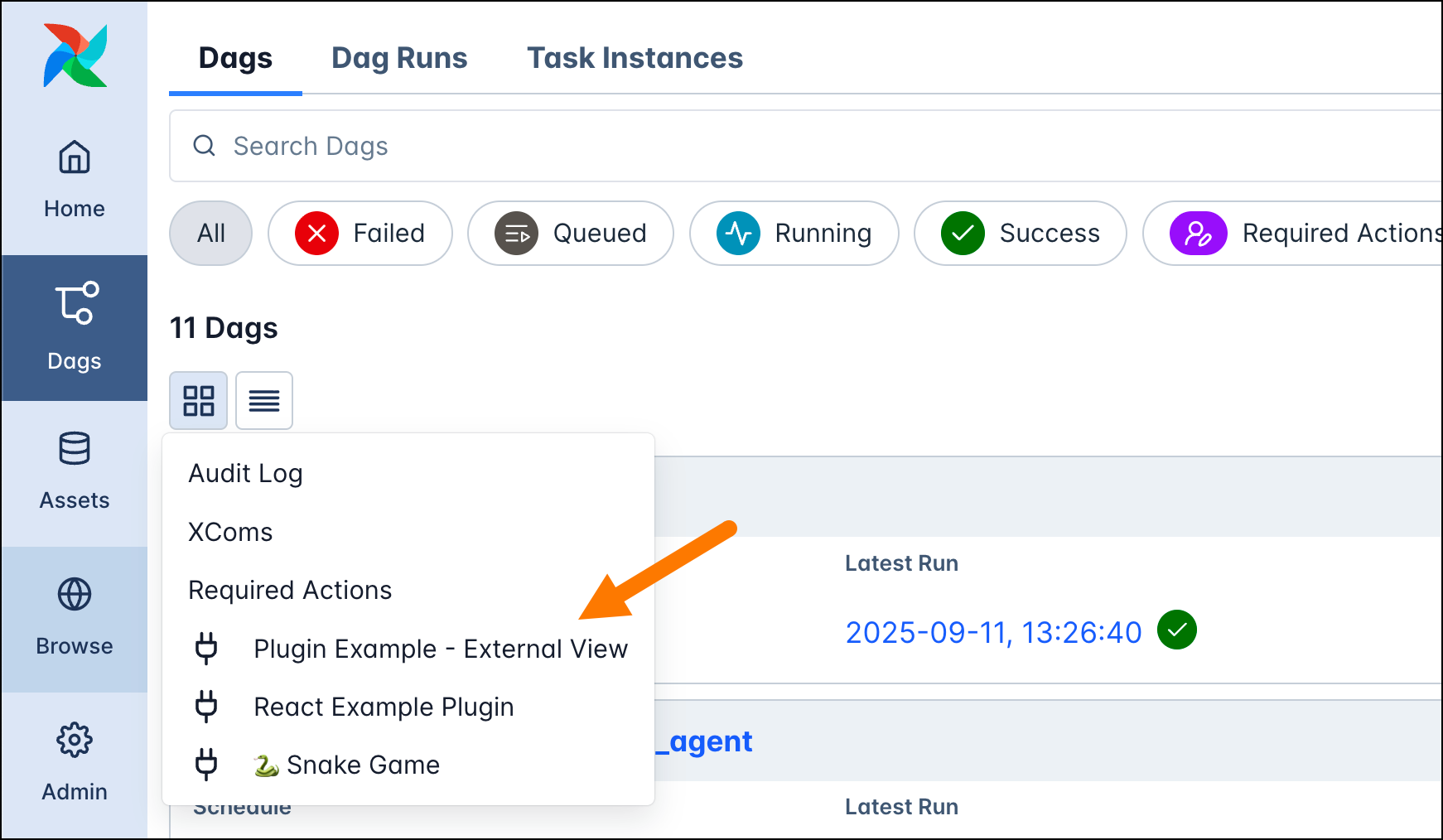

Plugins set to destination="nav" will be added in the navigation bar to the left. You also need to specify the category for the plugin, for example the browse category will add the plugin to the Browse menu.

The dag destination will add the plugin in an additional tab on every dag page.

Similarly, the dag_run, task and task_instance destinations will add the plugin in an additional tab on every dag run, task and task instance page respectively.

React apps can also be embedded in existing pages in the Airflow UI. The supported locations are dashboard, dag_overview and task_overview. The example below shows a button that pauses and unpauses all dags in the Airflow instance, embedded in the Home (dashboard) page.
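The location rules in this section can be summarized in a small sanity check for component metadata. This helper is hypothetical and not part of Airflow; it only encodes the destinations named in this guide and assumes that a missing destination falls back to nav.

```python
# Destinations named in this guide.
VIEW_DESTINATIONS = {"nav", "dag", "dag_run", "task", "task_instance"}
REACT_ONLY_DESTINATIONS = {"dashboard", "dag_overview", "task_overview"}


def check_destination(component: dict, is_react_app: bool = False) -> list:
    """Return a list of problems found in a component's location settings."""
    problems = []
    # Assumption: components without a destination end up in the nav bar.
    destination = component.get("destination", "nav")

    allowed = set(VIEW_DESTINATIONS)
    if is_react_app:
        allowed |= REACT_ONLY_DESTINATIONS

    if destination not in allowed:
        problems.append(f"unsupported destination: {destination!r}")
    if destination == "nav" and "category" not in component:
        problems.append("nav destination without a category")
    return problems


# A React app embedded in the Home (dashboard) page passes the check.
print(check_destination({"destination": "dashboard"}, is_react_app=True))  # []
# The same destination is flagged for an external view.
print(check_destination({"destination": "dashboard"}))
```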