Authenticate Astro to GCP

Prerequisites

- A user account on GCP with access to GCP cloud resources.

- The Google Cloud SDK.

- The Astro CLI.

- An Astro project.

- Optional. Access to a secrets backend hosted on GCP, such as GCP Secret Manager.

Retrieve GCP user credentials locally

Run `gcloud auth application-default login` to obtain your user credentials locally.

The SDK provides a link to a webpage where you can log in to your Google Cloud account. After you complete your login, the SDK stores your user credentials in a file named application_default_credentials.json.

The location of this file depends on your operating system:

- Linux: `$HOME/.config/gcloud/application_default_credentials.json`

- Mac: `/Users/<username>/.config/gcloud/application_default_credentials.json`

- Windows: `%APPDATA%/gcloud/application_default_credentials.json`
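To check where the file should be on your machine, you could resolve the path in a few lines of Python. This is a minimal sketch that mirrors the locations listed above. The function name `default_adc_path` and its parameters are hypothetical and not part of the Google Cloud SDK.

```python
import os
from pathlib import Path

ADC_FILENAME = "application_default_credentials.json"


def default_adc_path(os_name: str = os.name, env=os.environ) -> Path:
    """Return the expected location of the credentials file for an OS.

    os_name follows Python's os.name convention: "nt" for Windows,
    "posix" for Linux and Mac.
    """
    if os_name == "nt":
        # Windows: %APPDATA%/gcloud/application_default_credentials.json
        return Path(env["APPDATA"]) / "gcloud" / ADC_FILENAME
    # Linux and Mac: $HOME/.config/gcloud/application_default_credentials.json
    return Path(env["HOME"]) / ".config" / "gcloud" / ADC_FILENAME
```

Calling `default_adc_path().is_file()` after you log in tells you whether the SDK wrote the file to the expected location.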

Configure your Astro project

The Astro CLI runs Airflow in a Docker-based environment. To give Airflow access to your credential file, mount it as a Docker volume.

The `docker-compose.override.yml` configuration in this section is written for Airflow 3. For Airflow 2, replace `api-server` with `webserver` and remove the `dag-processor` block.

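- In your Astro project, create a file named `docker-compose.override.yml` that mounts your credentials file as a volume for each Airflow service. The host path of the credentials file differs on Mac, Linux, and Windows, as listed in the previous section.

- In your Astro project's `.env` file, add the `GOOGLE_APPLICATION_CREDENTIALS` environment variable and set it to the path of the mounted credentials file inside the container. Ensure that this path is the same as the volume path you configured in `docker-compose.override.yml`.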
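As a rough illustration of the two steps above, the following Python sketch renders the text of a `docker-compose.override.yml` file and the matching `.env` line. Several details are assumptions rather than facts from this document: the container path `/usr/local/airflow/gcloud/application_default_credentials.json`, the `scheduler` and `triggerer` service names, the `:rw` mount mode, and the helper names `render_override` and `render_env_line`. Adjust them to match your project.

```python
# Assumed mount target inside the Airflow containers (not stated in this document).
CONTAINER_ADC_PATH = "/usr/local/airflow/gcloud/application_default_credentials.json"

# Assumed service list for Airflow 3. For Airflow 2, pass
# ("scheduler", "webserver", "triggerer") instead.
AIRFLOW_3_SERVICES = ("scheduler", "api-server", "dag-processor", "triggerer")


def render_override(host_adc_path: str, services=AIRFLOW_3_SERVICES) -> str:
    """Build override YAML that mounts the host credentials file into each service."""
    lines = ["services:"]
    for service in services:
        lines += [
            f"  {service}:",
            "    volumes:",
            # Short volume syntax: <host path>:<container path>:<mode>
            f"      - {host_adc_path}:{CONTAINER_ADC_PATH}:rw",
        ]
    return "\n".join(lines) + "\n"


def render_env_line() -> str:
    """Build the .env entry that points Google client libraries at the mounted file."""
    return f"GOOGLE_APPLICATION_CREDENTIALS={CONTAINER_ADC_PATH}"
```

Printing `render_override("/Users/<username>/.config/gcloud/application_default_credentials.json")` gives text you can paste into the override file. Both the volume target and the `.env` value come from the same constant, so the two paths cannot drift apart.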

When you run Airflow locally, all GCP connections without defined credentials automatically fall back to your user credentials when connecting to GCP. Airflow applies and overrides user credentials for GCP connections in the following order:

- Mounted user credentials in the `/~/gcloud/` folder

- Configurations in `gcp_keyfile_dict`

- An explicit username & password provided in the connection

For example, if you completed the configuration in this document and then created a new GCP connection with its own username and password, Airflow would use those credentials instead of the credentials in ~/gcloud/application_default_credentials.json.

Test your credentials with a secrets backend

Now that Airflow has access to your user credentials, you can use them to connect to your cloud services. Use the following example setup to test your credentials by pulling values from different secrets backends.
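The steps below depend on two conventions: the prefixes on secret names, and the settings that point Airflow at GCP Secret Manager. The following Python sketch derives both. It assumes the Google provider's `CloudSecretManagerBackend` class with its `connections_prefix`, `variables_prefix`, and `project_id` options. The helper names `secret_name` and `secrets_backend_env` are hypothetical, and `my-project` in the usage note is a placeholder.

```python
import json

# Secrets backend class from the Airflow Google provider package.
BACKEND_CLASS = (
    "airflow.providers.google.cloud.secrets.secret_manager.CloudSecretManagerBackend"
)
VARIABLES_PREFIX = "airflow-variables"
CONNECTIONS_PREFIX = "airflow-connections"


def secret_name(prefix: str, key: str, sep: str = "-") -> str:
    """Return the Secret Manager name for an Airflow variable or connection key."""
    return f"{prefix}{sep}{key}"


def secrets_backend_env(project_id: str) -> list[str]:
    """Return .env lines that enable the Secret Manager backend for one project."""
    kwargs = {
        "connections_prefix": CONNECTIONS_PREFIX,
        "variables_prefix": VARIABLES_PREFIX,
        "project_id": project_id,
    }
    return [
        f"AIRFLOW__SECRETS__BACKEND={BACKEND_CLASS}",
        # The kwargs value must be a single-line JSON object.
        f"AIRFLOW__SECRETS__BACKEND_KWARGS={json.dumps(kwargs)}",
    ]
```

For example, `secret_name(VARIABLES_PREFIX, "my_secret_var")` yields the secret name to create in Secret Manager, and `print("\n".join(secrets_backend_env("my-project")))` prints lines you could adapt for your `.env` file.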

-

Create a secret for an Airflow variable or connection in GCP Secret Manager. You can do this using the Google Cloud Console or the gcloud CLI. All Airflow variables and connection keys must be prefixed with the following strings respectively:

airflow-variables-<my_variable_name>airflow-connections-<my_connection_name>

For example when adding the secret variable

my_secret_varyou will need to give the secret the nameairflow-variables-my_secret_var. -

Add the following environment variables to your Astro project

.envfile. For additional configuration options, see the Apache Airflow documentation. Make sure to specify yourproject_id. -

Run the following command to start Airflow locally:

-

Access the Airflow UI at

localhost:8080and create an Airflow GCP connection namedgcp_standardwith no credentials. See Connections.When you use this connection in your dag, it will fall back to using your configured user credentials.

-

Add a dag which uses the secrets backend to your Astro project

dagsdirectory. You can use the following example dag to retrieve<my_variable_name>and<my_connection_id>from the secrets backend and print it to the terminal: -

In the Airflow UI, unpause your dag and click Play to trigger a dag run.

-

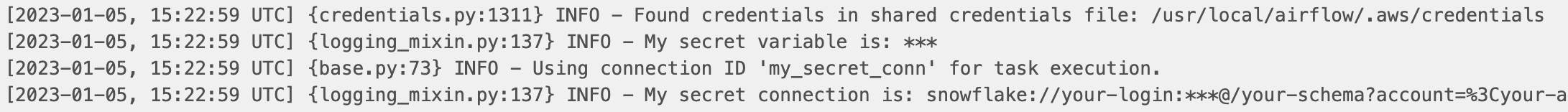

View logs for your dag run. If the connection was successful, your masked secrets appear in your logs. See Airflow logging.