Airflow Provider Framework

Providers are Python packages containing all relevant modules for a specific service provider (e.g., AWS, Snowflake, Google).

Unlike Apache Airflow 1.10, Airflow 2.0 is delivered as multiple separate but connected packages. The core of the Airflow scheduling system is delivered as the apache-airflow package, and there are around 60 Airflow Provider Packages that can be installed separately.

In Airflow 2.0, Providers are decoupled from core Airflow releases:

- Providers can now be independently versioned, maintained, and released

- Users just `pip install` the provider package and then import modules into their DAG files

- Providers can now be built from any public repository
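Because each provider is an ordinary Python distribution, you can inspect what is installed with the standard library alone. The sketch below is illustrative, not an Airflow or Astronomer API: the helper names `provider_module_path` and `installed_providers` are hypothetical, and it assumes the community naming convention in which a package such as `apache-airflow-providers-microsoft-azure` exposes its modules under `airflow.providers.microsoft.azure`.

```python
from importlib.metadata import distributions
from typing import Dict

# Prefix shared by the community provider distributions (assumed convention).
PROVIDER_PREFIX = "apache-airflow-providers-"


def provider_module_path(package_name: str) -> str:
    """Map a provider distribution name to its (assumed) import root.

    Hypothetical helper: relies on the naming convention
    "apache-airflow-providers-<a>-<b>" -> "airflow.providers.<a>.<b>".
    """
    normalized = package_name.lower().replace("_", "-")
    if not normalized.startswith(PROVIDER_PREFIX):
        raise ValueError(f"not an Airflow provider package: {package_name!r}")
    suffix = normalized[len(PROVIDER_PREFIX):]
    return "airflow.providers." + suffix.replace("-", ".")


def installed_providers() -> Dict[str, str]:
    """Return {distribution name: version} for every installed provider package."""
    found: Dict[str, str] = {}
    for dist in distributions():
        name = dist.metadata.get("Name")
        if name and name.lower().replace("_", "-").startswith(PROVIDER_PREFIX):
            found[name] = dist.version
    return dict(sorted(found.items()))


if __name__ == "__main__":
    # Prints nothing if no provider packages are installed in this environment.
    for name, version in installed_providers().items():
        print(f"{name}=={version} -> import from {provider_module_path(name)}")
```

After, say, `pip install apache-airflow-providers-snowflake`, the script would list that package with the import root `airflow.providers.snowflake`, which is where a DAG file would import its hooks and operators from. Each provider shows up with its own version, reflecting that providers are released independently of core Airflow.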

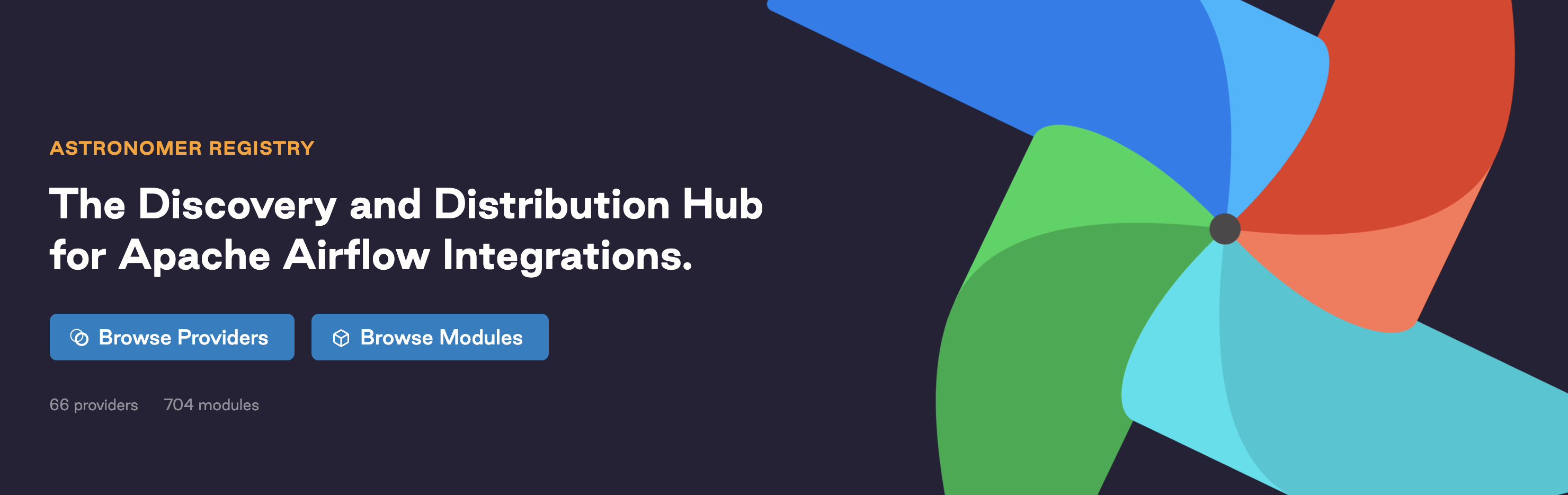

The Astronomer Registry

Recently we announced The Astronomer Registry: a discovery hub for Airflow providers designed to bridge the gap between the Airflow community and the broader data ecosystem.

The Airflow providers framework has long been a valuable asset for the community. DAG authors benefit from access to a deep library of standard patterns while keeping code-driven customizability, and the framework enables tight integration between Airflow and almost any tool in the modern data stack.

The easiest way to get started with providers and Apache Airflow 2.0 is the Astronomer CLI. To get up and running with Airflow quickly, follow our Quickstart Guide.