Note: There is a newer version of this webinar available: Airflow 101: How to get started writing data pipelines with Apache Airflow.

By Kenten Danas, Lead Developer Advocate at Astronomer

Webinar Links:

- Airflow Guides: https://docs.astronomer.io/learn/

- Registry: https://registry.astronomer.io/ (example DAGs!)

- GitHub repository for this webinar: https://github.com/astronomer/intro-to-airflow-webinar

1. What is Apache Airflow?

Apache Airflow is one of the world’s most popular data orchestration tools — an open-source platform that lets you programmatically author, schedule, and monitor your data pipelines.

Apache Airflow was created by Maxime Beauchemin in late 2014, and brought into the Apache Software Foundation’s Incubator Program two years later. In 2019, Airflow was announced as a Top-Level Apache Project, and it is now considered the industry’s leading workflow orchestration solution.

Key benefits of Airflow:

- Proven core functionality for data pipelining

- An extensible framework

- Scalability

- A large, vibrant community

Apache Airflow Core principles

Airflow is built on a set of core principles — and written in a highly flexible language, Python — that allow for enterprise-ready flexibility and reliability. It is highly secure and was designed with scalability and extensibility in mind.

2. Airflow core components

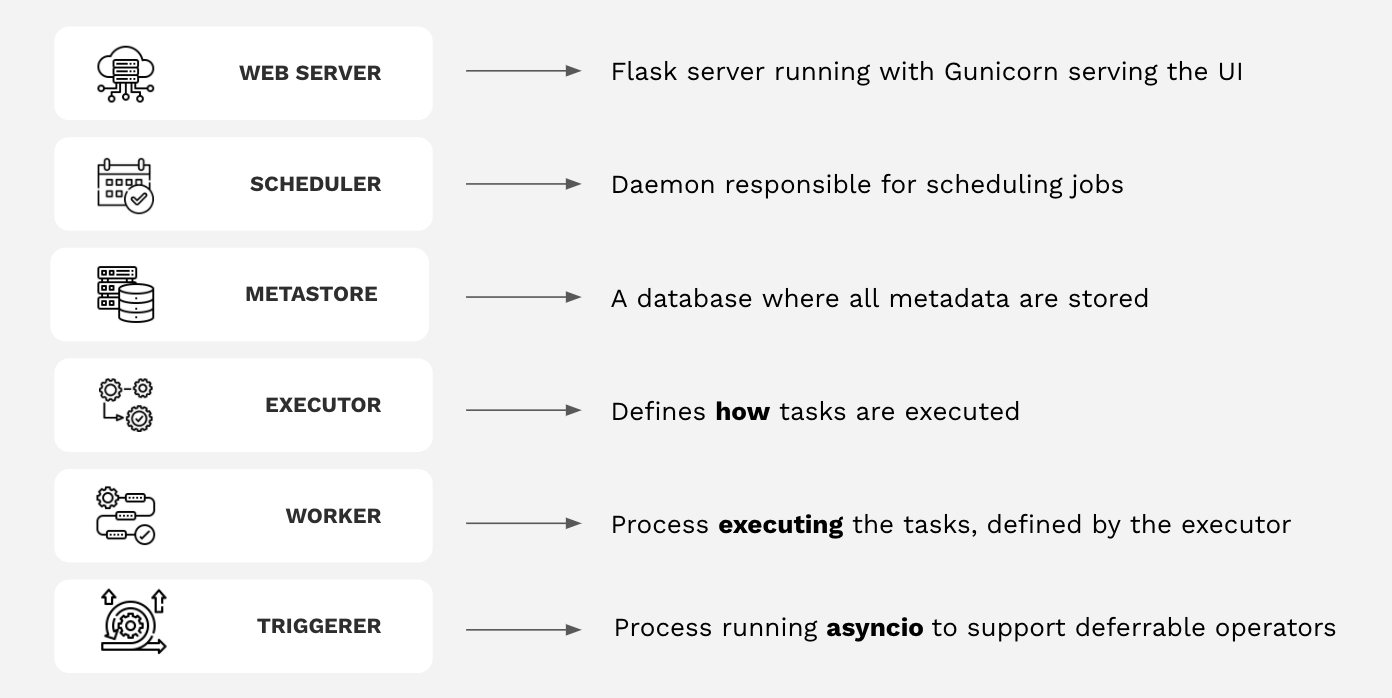

The infrastructure:

3. Airflow core concepts

DAGs

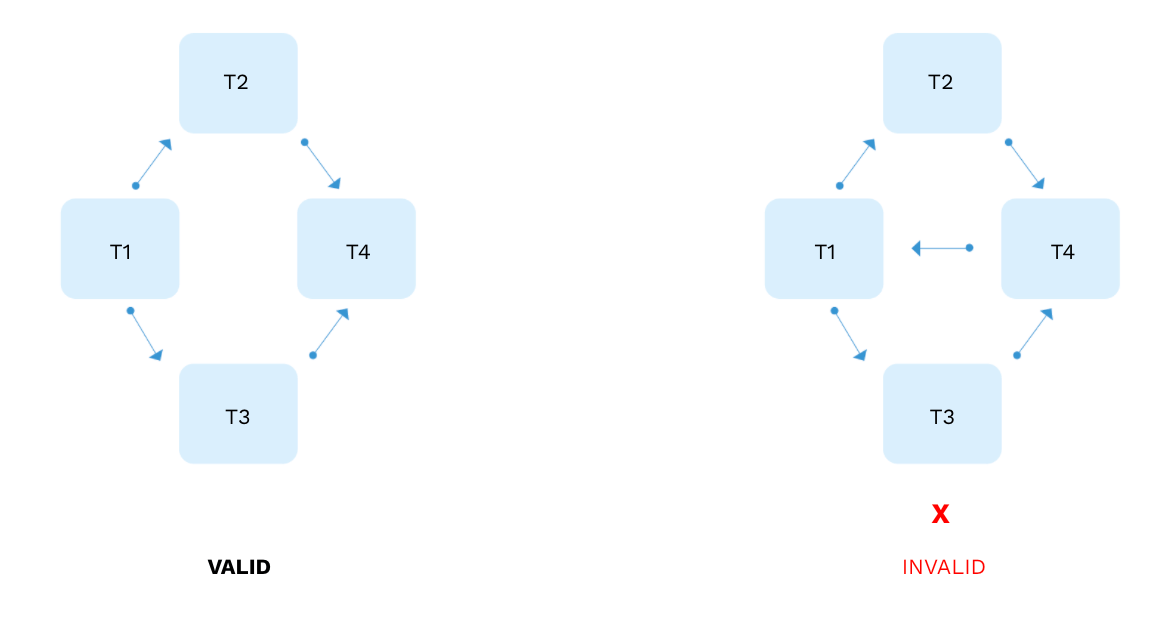

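A DAG (Directed Acyclic Graph) is the structure of a data pipeline: a collection of tasks and the dependencies between them. A DAG run is a single execution of that pipeline, in which the tasks might, for example, extract, transform, or load data.

DAGs must flow in one direction. A task can never depend, directly or indirectly, on itself, so the dependencies you define must not contain loops.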

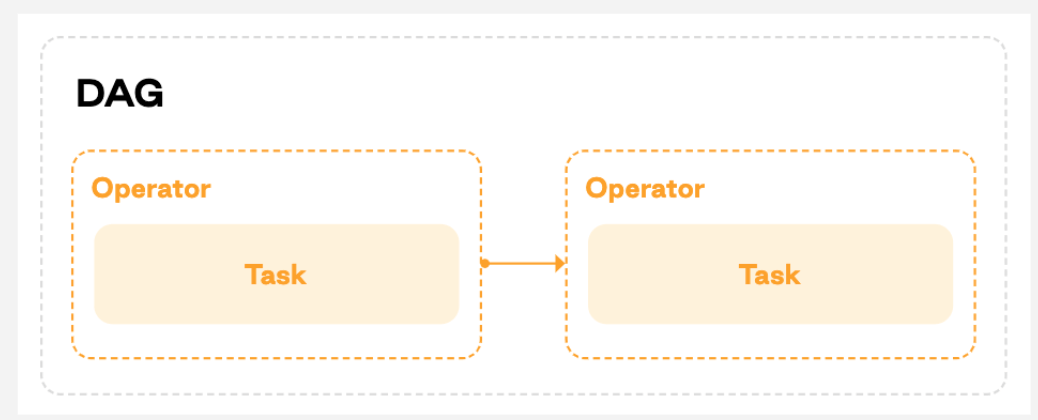

Each task in a DAG is defined by an operator, and there are specific downstream or upstream dependencies set between tasks.
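To make the "no loops" rule concrete, here is a minimal sketch that uses only Python's standard library (`graphlib`), not Airflow itself. The task names and the `execution_order` helper are hypothetical. It shows that a set of upstream dependencies yields a valid run order only when the graph is acyclic.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each key is a task, each value is the set of
# upstream tasks that must finish before it can start.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "notify": {"load"},
}


def execution_order(deps):
    """Return a valid run order, or raise CycleError if the graph has a loop."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)

# Making "extract" depend on "notify" closes a loop, so no valid order exists.
cyclic = {**dependencies, "extract": {"notify"}}
try:
    execution_order(cyclic)
    has_cycle = False
except CycleError:
    has_cycle = True
print(has_cycle)
```

Airflow performs its own check of this kind when it parses a DAG file; the sketch only illustrates why a loop makes it impossible to decide which task runs first.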

Tasks

A task is the basic unit of execution in Airflow. Tasks are arranged into DAGs, and then have upstream and downstream dependencies set between them to express the order in which they should run. Best practice: keep your tasks atomic by making sure they only do one thing.

A task instance is a specific run of that task for a given DAG (and thus for a given data interval). Task instances also represent what stage of the lifecycle a given task is currently in. You will hear a lot about task instances (TIs) when working with Airflow.

Operators

Operators are the building blocks of Airflow. They determine what actually executes when your DAG runs. When you create an instance of an operator in a DAG and provide it with its required parameters, it becomes a task.

- Action Operators execute pieces of code. For example, the PythonOperator runs a Python function and the BashOperator runs a bash script.

- Transfer Operators are more specialized, and designed to move data from one place to another.

- Sensor Operators, frequently called “sensors,” are designed to wait for something to happen — for example, for a file to land on S3, or for another DAG to finish running.
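The idea that an operator is a template, and that an instance with its parameters filled in is a task, can be sketched with a toy model. This is not Airflow's API: `ToyPythonOperator` and `ToySensor` are hypothetical classes written for illustration, and the parameter names (`task_id`, `python_callable`, `poke_interval`, `timeout`) only loosely echo the ones Airflow uses.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToyPythonOperator:
    """Action-style operator: runs a Python callable when executed."""
    task_id: str
    python_callable: Callable[[], object]

    def execute(self):
        return self.python_callable()


@dataclass
class ToySensor:
    """Sensor-style operator: polls a condition until it is true or times out."""
    task_id: str
    poke: Callable[[], bool]
    poke_interval: float = 0.01  # seconds to sleep between checks
    timeout: float = 1.0         # give up after this many seconds

    def execute(self):
        deadline = time.monotonic() + self.timeout
        while time.monotonic() < deadline:
            if self.poke():
                return True
            time.sleep(self.poke_interval)
        raise TimeoutError(f"{self.task_id} timed out")


# Stand-in for "a file has landed": becomes true on the third check.
attempts = {"count": 0}


def file_has_landed():
    attempts["count"] += 1
    return attempts["count"] >= 3


# Instantiating an operator with its parameters is what produces a task.
wait_for_file = ToySensor(task_id="wait_for_file", poke=file_has_landed)
say_hello = ToyPythonOperator(task_id="say_hello", python_callable=lambda: "hello")

sensor_result = wait_for_file.execute()
hello_result = say_hello.execute()
print(sensor_result, attempts["count"], hello_result)
```

In real Airflow code the scheduler and executor decide when each task runs; here the two `execute()` calls are made by hand just to show the difference between doing work and waiting for a condition.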

Providers

Airflow providers are Python packages that contain all of the relevant Airflow modules for interacting with external services. Airflow is designed to fit into any stack: you can use it to run your workloads in AWS, Snowflake, Databricks, or whatever else your team uses.

Most tools already have community-built Airflow modules, giving Airflow spectacular flexibility. Check out the Astronomer Registry to find all the providers.

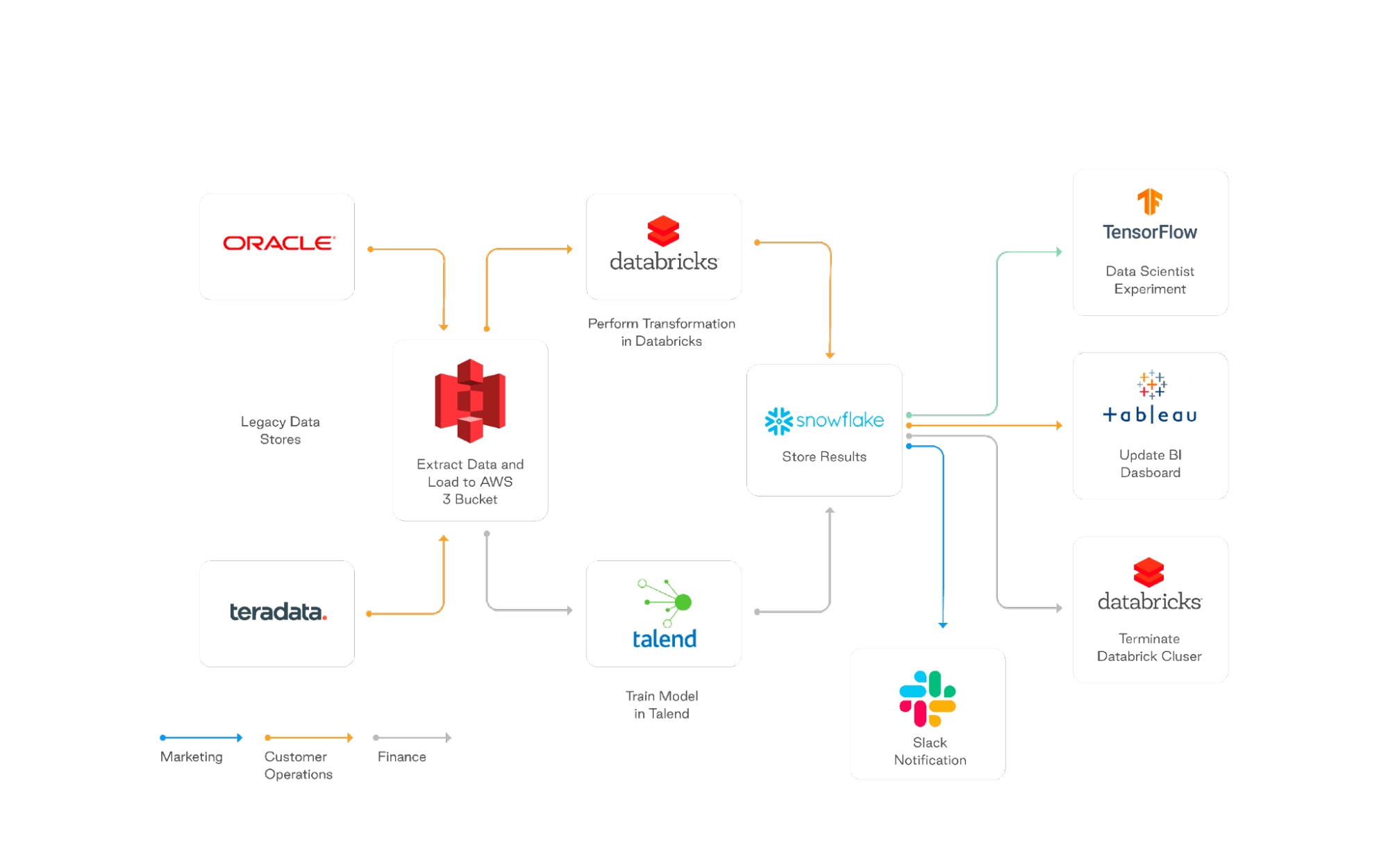

The following diagram shows how these concepts work in practice. As you can see, by writing a single DAG file in Python using an existing provider package, you can begin to define complex relationships between data and actions.

4. Best practices for beginners

- Design Idempotent DAGs

DAG runs should produce the same result regardless of how many times they are run.

- Use Providers

Don’t reinvent the wheel with Python operators unless needed. Use provider packages for specific tasks. Go to the Astronomer Registry for providers: everything there is to know about them is right there.

- Keep Tasks Atomic

When designing your DAG, each task should do a single unit of work. Use dependencies and trigger rules to schedule as needed.

- Keep Clean DAG Files

Define one DAG per .py file. Keep any code that isn’t part of the DAG definition (e.g., SQL, Python scripts) in an /include directory.

- Use Connections

Use Airflow’s Connections feature to keep sensitive information out of your DAG files.

- Use Template Fields

Airflow’s variables and macros can be used to update DAGs at runtime and keep them idempotent.
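As a rough illustration of idempotency, the sketch below uses only the standard library and a hypothetical `load_daily_totals` function. It writes its output to a file named after the run date and overwrites that file instead of appending to it, so rerunning the same date leaves exactly the same result. In an Airflow task, the date would typically come from a templated value such as `{{ ds }}` rather than a hard-coded string; that wiring is assumed here and not shown.

```python
import json
import tempfile
from pathlib import Path


def load_daily_totals(output_dir: Path, run_date: str, rows: list) -> Path:
    """Write the total for one date to a file keyed by that date.

    Overwriting (rather than appending) means a rerun for the same
    date produces the same file with the same contents.
    """
    target = output_dir / f"totals_{run_date}.json"
    total = sum(row["amount"] for row in rows)
    target.write_text(json.dumps({"date": run_date, "total": total}))
    return target


with tempfile.TemporaryDirectory() as tmp:
    out = Path(tmp)
    rows = [{"amount": 10}, {"amount": 5}]  # made-up sample data

    load_daily_totals(out, "2023-01-01", rows)
    first = (out / "totals_2023-01-01.json").read_text()

    load_daily_totals(out, "2023-01-01", rows)  # simulate rerunning the task
    second = (out / "totals_2023-01-01.json").read_text()

    files = sorted(p.name for p in out.iterdir())

print(first == second, files)
```

An append-based version of the same function would double the data on every rerun, which is the failure mode the first best practice is meant to avoid.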

For more, check out this DAG Best practices written guide and watch the webinar, including the Q&A session.

5. Demo

Watch the Demo to learn:

- How to get Airflow running: Astronomer’s CLI “Astro” is probably the easiest way to get started with Airflow — very straightforward.

- The basics of Airflow UI.

- Error notifications in the UI.

- An example of DAGs and task instances.

- How to initialize a project in the terminal.

- How to use providers.

- How to define a DAG from scratch.

- How to create task dependencies.

- Kenten’s secret best practices.

You can find the code from the webinar in this GitHub repo.