What is Apache Airflow?

Apache Airflow lets you programmatically author, schedule, and monitor your data pipelines using Python.

Created at Airbnb as an open-source project in 2014, Airflow entered the Apache Software Foundation's Incubator program in 2016 and was announced as a Top-Level Apache Project in 2019. It is now widely recognized as one of the industry's leading workflow management solutions.

Airflow has many data integrations with popular databases, applications, and tools, as well as dozens of cloud services — with more added each month. The power of a large and engaged open community ensures that Airflow offers comprehensive coverage of new data sources and other providers, and remains up to date with existing ones.

How is Airflow Used?

Apache Airflow is especially useful for creating and managing complex workflows — like the data pipelines that crisscross cloud and on-premises environments.

Airflow provides the workflow management capabilities that are integral to modern cloud-native data platforms. It automates the execution of jobs, coordinates dependencies between tasks, and gives organizations a central point of control for monitoring and managing workflows.

Airflow benefits include:

Flexibility, Extensibility, and Scalability

Airflow is Python-based, so pipelines can use any of the libraries, frameworks, and modules available for Python, and Airflow benefits from the huge existing base of Python users. Airflow's simple and flexible plugin architecture allows users to extend its functionality by writing their own custom operators, hooks, and sensors. Airflow can scale from very small deployments, with just a few users and data pipelines, to massive deployments with thousands of concurrent users and tens of thousands of pipelines. This flexibility enables it to be used for traditional batch data processing use cases, as well as for more demanding near-real-time applications.

Ease of Use

Airflow simplifies data pipeline development by allowing users to define their data pipelines as Python code. It offers hundreds of operators (pre-built Python classes that encapsulate common tasks), which users can combine like building blocks to design complex workflows. This reduces the need to write and maintain custom code and accelerates pipeline development. Treating data pipelines as code also lets you create CI/CD processes that test and validate your data pipelines before deploying them to production, which makes the production dataflows that power your critical business services more resilient. Airflow's web-based UI simplifies task management, scheduling, and monitoring, providing at-a-glance insights into the performance and progress of data pipelines.

Community and Open-Source Functionality

Airflow has a large community of engaged maintainers, committers, and contributors who help to steer, improve, and support the platform. The community provides a wealth of resources to support novice and experienced users alike: reliable, up-to-date Airflow documentation, use-case-specific Airflow tutorials, discussion forums, a dev mailing list, and an active Airflow Slack channel. The Airflow community is the go-to resource for information about implementing and customizing Airflow, as well as for help troubleshooting problems.

Cloud-Native, Digital-Transformation Solution

Airflow is a critical component of cloud-native data architecture, enabling organizations to automate the flow of data between systems and services, ensuring that dataflows are processed reliably and performantly, and allowing for monitoring and troubleshooting of data outages. For the same reasons, Airflow plays a key role in digital transformation, giving organizations a programmatic foundation they can depend on to efficiently manage and automate their data-driven processes.

Integrate Securely and Seamlessly

Airflow is a mature and established open-source project that is widely used by enterprises to run their mission-critical workloads. It is a proven choice for any organization that requires powerful, cloud-native workflow management capabilities. New versions of Airflow are released at a regular cadence, each introducing useful new features — like support for asynchronous tasks, data-aware scheduling, and tasks that adjust dynamically to input conditions — that give organizations even greater flexibility in designing, running, and managing their workflows.

Who Uses Airflow?

Airflow is popular with data professionals as a solution for automating the tedious work involved in creating, managing, and maintaining data pipelines, along with other complex workflows. Data platform architects depend on Airflow-powered workflow management to design modern, cloud-native data platforms, while data team leaders and other managers recognize that Airflow empowers their teams to work more productively and effectively.

Here’s a quick look at how a variety of data professionals use Airflow.

Airflow and Data Engineers

Data engineers can apply Airflow’s support for thousands of Python libraries and frameworks, as well as the hundreds of pre-built Airflow operators, to create the data pipelines they depend on to acquire, move, and transform data. Airflow’s ability to manage task dependencies and recover from failures allows data engineers to design rock-solid data pipelines.

Airflow and Data Scientists

Python is the lingua franca of data science, and Airflow is a Python-based tool for writing, scheduling, and monitoring data pipelines and other workflows. Today, thousands of data scientists depend on Airflow to acquire, condition, and prepare the datasets they use to train their machine learning models, as well as to deploy those models to production.

Airflow and Data Analysts

Data analysts and analytic engineers depend on Airflow to acquire, move, and transform data for their analysis and modeling tasks, tapping into Airflow’s broad connectivity to data sources and cloud services. With optimized, pre-built operators for all popular cloud and on-premises relational databases, Airflow makes it easy to design complex SQL-based data pipelines. Data analysts and analytic engineers can also take advantage of versatile open-source Airflow add-ons, like the Astro SDK, to abstract away much of the complexity of designing data pipelines for Airflow.

Airflow and Data Team Leads

Data team leaders rely on Airflow to support the work of the data practitioners they manage, recognizing that Airflow’s ability to reliably and efficiently manage data workflows enables their teams to be productive and effective. Data team leads value Airflow’s Python-based foundation for writing, maintaining, and managing data pipelines as code.

Airflow and Data Platform Architects

Airflow-powered workflow management underpins the data layers that data platform architects design to knit together modern, cloud-native data platforms. Data platform architects trust Airflow to automate the movement and processing of data through and across diverse systems, managing complex data flows and providing flexible scheduling, monitoring, and alerting.

How Does Airflow Work?

Airflow allows you to programmatically define the tasks that make up a data pipeline or other workflow, as well as specify when that workflow should run. At the scheduled time, Airflow runs the workflow, coordinating dependencies between tasks, detecting task failures, and automatically retrying failed tasks according to their configured retry settings.
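
In Airflow, you configure retry handling per task, for example through the `retries` and `retry_delay` arguments that operators accept. The plain-Python sketch below models only the underlying idea: a failed task is re-run a bounded number of times, with a pause between attempts. It is not Airflow's implementation, and `run_with_retries` and `flaky_extract` are hypothetical names.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(task: Callable[[], T], retries: int, retry_delay: float) -> T:
    """Toy model: run `task`, retrying up to `retries` extra times after a failure."""
    attempt = 0
    while True:
        try:
            return task()
        except Exception as exc:
            if attempt >= retries:
                raise  # retries exhausted: surface the failure, like a failed task
            attempt += 1
            print(f"attempt {attempt} failed ({exc}); retrying in {retry_delay}s")
            time.sleep(retry_delay)


# Hypothetical task that fails twice (e.g. a flaky source) before succeeding.
calls = {"n": 0}


def flaky_extract() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source unavailable")
    return "rows extracted"


result = run_with_retries(flaky_extract, retries=3, retry_delay=0.01)
print(result)
```

Bounding the retries is the important design choice here. Once they are exhausted, the exception propagates, so a task that keeps failing is reported as failed instead of being retried forever.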

Airflow Architecture

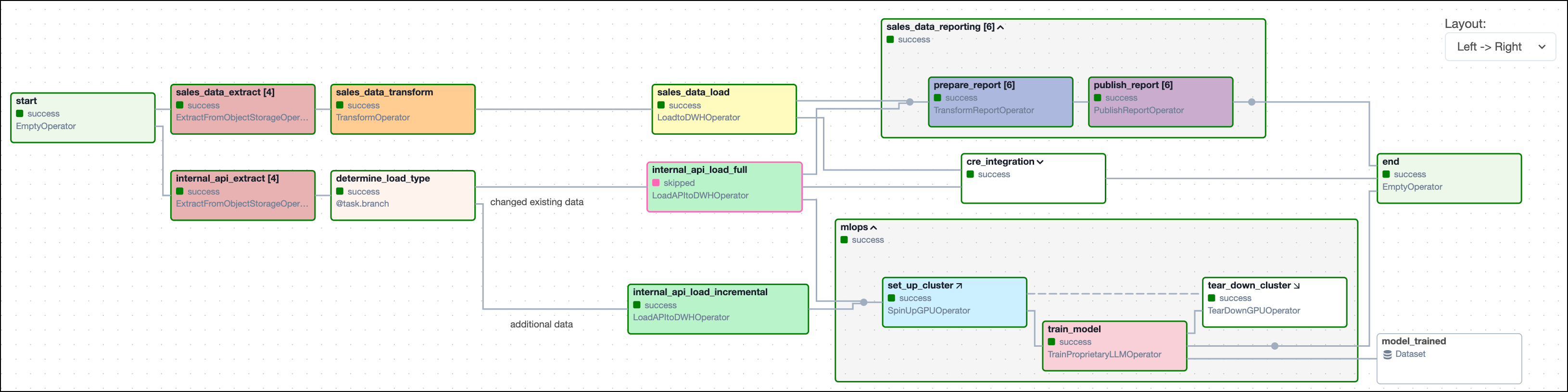

In Airflow, workflows are abstracted as directed acyclic graphs, or DAGs. Hundreds of Airflow operators are the building blocks of DAGs, giving you pre-built functions you can use to simplify common tasks, like running SQL queries and Python scripts, or interacting with cloud storage and database services. You can combine operators to create DAGs that automate complex workflows — like the data extraction, transformation, and loading tasks involved in ETL. Airflow’s built-in logic allows you to focus on making sure your data pipelines are reliable and performant — without worrying about writing custom code to manage complicated dependencies or recover from task failures.

Figure 1: An example of a DAG (current as of Airflow 2.9)
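
The core DAG rule is that each task runs only after all of its upstream tasks have finished, while tasks that do not depend on each other can run in parallel. The toy sketch below models a small ETL pipeline with Python's standard-library `graphlib` module (Python 3.9+). The task names are hypothetical, and this is not how Airflow's scheduler works internally. In a real DAG file you would express the same ordering between operators with the `>>` operator, e.g. `extract >> transform >> load`.

```python
from graphlib import TopologicalSorter

# Each key maps a task to the set of tasks it depends on (its upstream tasks).
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load": {"transform"},
}


def run_dag(graph: dict[str, set[str]]) -> list[str]:
    """Run tasks in dependency order; tasks in the same ready batch could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    order: list[str] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every upstream task of these is done
        for task in ready:
            print(f"running {task}")
            order.append(task)
            sorter.done(task)  # unblocks downstream tasks
    return order


order = run_dag(pipeline)
```

The "acyclic" requirement is what makes this ordering possible. `prepare()` rejects a cycle because a task that depends, directly or indirectly, on itself could never become ready.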


The rich Airflow UI helps you manage your data pipelines, providing granular logging capabilities, and generating detailed lineage metadata. Thanks to its integrations with Slack, email, and other services, Airflow can be configured to send real-time alerts in response to performance issues or data outages. The combination of these features makes it easier for data teams and ops personnel to monitor performance and troubleshoot errors, as well as rapidly diagnose data outages. Airflow can scale to support thousands of concurrent users, with configurable support for high availability and fault tolerance, making it suitable for the most demanding enterprise environments.

What are Airflow’s Key Features?

Apache Airflow’s large and growing feature set addresses the complete lifecycle of building, running, and managing complex workflows, like data pipelines. On top of its rock-solid scheduling and dependency management features, you can use Airflow to orchestrate automated, event-driven workflows, creating datasets that update automatically, as well as tasks that adapt dynamically to input conditions.

Datasets and Data-Aware Scheduling

Create and manage datasets that update automatically. Chain datasets together so they serve as sources for other datasets. Visualize dataset relationships from sources to targets.
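
Conceptually, data-aware scheduling follows a simple rule. A producing task marks a dataset as updated, and a consumer DAG is queued once every dataset it is scheduled on has been updated since its last run. The plain-Python sketch below models only that rule. The `DatasetScheduler` class and the `s3://` URIs are hypothetical. In Airflow itself, a task declares the datasets it updates via `outlets`, and a DAG subscribes by passing `Dataset` objects to its `schedule`.

```python
from collections import defaultdict


class DatasetScheduler:
    """Toy model: queue a consumer once all of its datasets have been updated."""

    def __init__(self) -> None:
        self.subscriptions: dict[str, set[str]] = {}  # consumer -> dataset URIs
        self.pending: dict[str, set[str]] = defaultdict(set)  # consumer -> fresh URIs
        self.triggered: list[str] = []  # consumer runs queued so far

    def subscribe(self, consumer: str, uris: set[str]) -> None:
        self.subscriptions[consumer] = set(uris)

    def mark_updated(self, uri: str) -> None:
        """Called when a producing task finishes writing `uri`."""
        for consumer, uris in self.subscriptions.items():
            if uri in uris:
                self.pending[consumer].add(uri)
                if self.pending[consumer] == uris:
                    self.triggered.append(consumer)  # all inputs fresh: queue a run
                    self.pending[consumer].clear()  # wait for new updates next time


sched = DatasetScheduler()
sched.subscribe("build_report", {"s3://bucket/orders", "s3://bucket/customers"})
sched.mark_updated("s3://bucket/orders")     # only one input is fresh: no run yet
sched.mark_updated("s3://bucket/customers")  # both inputs fresh: a run is queued
print(sched.triggered)
```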


Deferrable Operators

Accommodate long-running tasks with deferrable operators and triggers that run tasks asynchronously, making more efficient use of resources.
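
The efficiency gain depends on where the waiting happens. A deferrable operator hands its wait off to a trigger, and Airflow's triggerer process runs many triggers concurrently on an asyncio event loop, so no worker slot sits idle while a task waits. The sketch below models that idea with plain `asyncio`. A hundred simulated waits finish in roughly the time of one, because each `await` yields the loop instead of blocking it. The `wait_for_file` coroutine is hypothetical and stands in for a real trigger.

```python
import asyncio
import time


async def wait_for_file(name: str, delay: float) -> str:
    """Hypothetical trigger: wait for an external event without blocking the loop."""
    await asyncio.sleep(delay)  # yields control so other waits can make progress
    return f"{name} arrived"


async def run_triggers(count: int, delay: float) -> list[str]:
    # All waits share one event loop, much as triggers share the triggerer process.
    return await asyncio.gather(*(wait_for_file(f"file_{i}", delay) for i in range(count)))


start = time.perf_counter()
events = asyncio.run(run_triggers(count=100, delay=0.2))
elapsed = time.perf_counter() - start
# Roughly the duration of a single wait, not 100 waits back to back.
print(f"{len(events)} events in {elapsed:.2f}s")
```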

Dynamic Task Mapping

Spin up as many parallel tasks as you need at runtime in response to the outputs of upstream tasks. Chain dynamic tasks together to simplify and accelerate ETL and ELT processing.
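
Dynamic task mapping is a fan-out decided at runtime. An upstream task returns a list, and one mapped task instance is created per element, so the DAG author does not need to know the list length in advance. The plain-Python sketch below models that fan-out with `concurrent.futures`. In Airflow itself, you map a task over an upstream output by calling `.expand()` on it. The `list_files`, `process_file`, and `summarize` functions are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def list_files() -> list[str]:
    """Hypothetical upstream task: the number of files is only known at runtime."""
    return ["2024-01-01.csv", "2024-01-02.csv", "2024-01-03.csv"]


def process_file(path: str) -> int:
    """Hypothetical mapped task: one instance runs per input element."""
    return len(path)  # stand-in for real work, such as counting rows


def summarize(results: list[int]) -> int:
    """Downstream task that collects the outputs of every mapped instance."""
    return sum(results)


files = list_files()
with ThreadPoolExecutor(max_workers=4) as pool:
    # One "task instance" per file, run in parallel; results keep input order.
    counts = list(pool.map(process_file, files))
total = summarize(counts)
print(counts, total)
```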

Event-Driven Workflows

Leverage dynamic tasks, data-aware scheduling, and deferrable operators to create robust, event-driven workflows that run automatically.

TaskFlow API

Use a simple API abstraction to move data between tasks — without the need to write and maintain complicated custom code.
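
With the TaskFlow API, you decorate ordinary Python functions with `@task`. A value returned by one task is passed to the next behind the scenes (stored as an XCom), so you do not have to move it through files or tables by hand. The toy decorator below mimics only that hand-off, using a dictionary in place of the XCom store. The `toy_task` decorator, `xcom_store`, and the task functions are hypothetical and are not Airflow's implementation. One key difference: in real TaskFlow, these calls only declare dependencies and the tasks run later on workers, whereas this toy runs them immediately.

```python
import functools
from typing import Any, Callable

xcom_store: dict[str, Any] = {}  # stands in for Airflow's XCom backend


def toy_task(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Hypothetical decorator: run the function and record its return value."""

    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        result = fn(*args, **kwargs)
        xcom_store[fn.__name__] = result  # publish the output for downstream tasks
        return result

    return wrapper


@toy_task
def extract() -> list[dict[str, float]]:
    return [{"amount": 12.5}, {"amount": 7.5}]


@toy_task
def transform(rows: list[dict[str, float]]) -> float:
    return sum(row["amount"] for row in rows)


@toy_task
def load(total: float) -> str:
    return f"loaded total={total}"


# Passing one task's output into the next wires them together, TaskFlow-style.
message = load(transform(extract()))
print(message)
print(xcom_store)
```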

Highly Available Scheduler

Run more data pipelines concurrently, executing hundreds or thousands of tasks in parallel with near-zero latency. Create scheduler replicas to increase task throughput and achieve high availability.

Full REST API

Build programmatic services around your Airflow environment with Airflow’s REST API, which features a robust permissions framework.
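
As a small example of building on the REST API, the sketch below lists DAG IDs using only Python's standard library. It assumes an Airflow 2.x deployment, whose stable REST API exposes `GET /api/v1/dags`. It also assumes that basic authentication has been enabled through the `[api] auth_backends` setting (for example `airflow.api.auth.backend.basic_auth`). The base URL and the `admin`/`admin` credentials are hypothetical placeholders. Endpoint paths and authentication schemes differ between Airflow versions, so check the API reference for your version.

```python
import base64
import json
import urllib.request


def build_list_dags_request(base_url: str, username: str, password: str) -> urllib.request.Request:
    """Build a GET request for the list-DAGs endpoint (assumes Airflow 2.x and basic auth)."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v1/dags?limit=10",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
        method="GET",
    )


def list_dag_ids(request: urllib.request.Request) -> list[str]:
    """Send the request and return the DAG IDs from the JSON response."""
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    return [dag["dag_id"] for dag in payload["dags"]]


# Hypothetical local deployment and credentials; adjust for your environment.
req = build_list_dags_request("http://localhost:8080", "admin", "admin")
print(req.full_url)
# print(list_dag_ids(req))  # requires a running Airflow webserver
```

Building the request separately from sending it keeps the authentication and URL logic testable without a live server.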